IT environments today bear only a passing resemblance to those of 15 or 20 years ago, when enterprise workloads mostly ran on industry-standard servers connected through networks to storage systems, all contained within the four walls of a datacenter, where performance was the name of the game and everything was protected by a security perimeter designed to keep the bad guys out.

These days, the words many associate with these modern workloads and datacenters are “diversity” and “efficiency.” Workloads today still include traditional, general-purpose enterprise applications but now also stretch into high-performance computing (HPC), analytics, and the headlining act of modern computing, AI and, in particular, generative AI.

Partner Content: And much of this has exploded out of on-premises facilities, stretching out to the cloud and beyond to the edge, where everything from the Internet of Things to microservices and all the data that comes with them live.

This is where efficiency comes in. These demanding and highly diversified workloads require a lot of power to run, and the key driver is AI, which is fueling a staggering increase in electricity demand that will only grow as commercial and consumer adoption of the emerging technology expands. Adoption is already widespread, with more than 80 percent of enterprises using AI to some extent and 35 percent saying they are leveraging it across multiple departments.

The insatiable need for more power will grow with it, which isn’t surprising. The average ChatGPT query – with the accompanying data and billions of parameters that must be processed – uses almost 10 times as much electricity as a Google search, a good illustration of the resources that will be consumed as AI usage – and the technology behind it, such as AI agents and reasoning AI – grows.

Goldman Sachs Research analysts expect power demand from datacenters around the world will jump by 50 percent between 2023 and 2027 and up to 165 percent by 2030.

Intel Xeon 6 CPUs Leveraged In AI Hosts

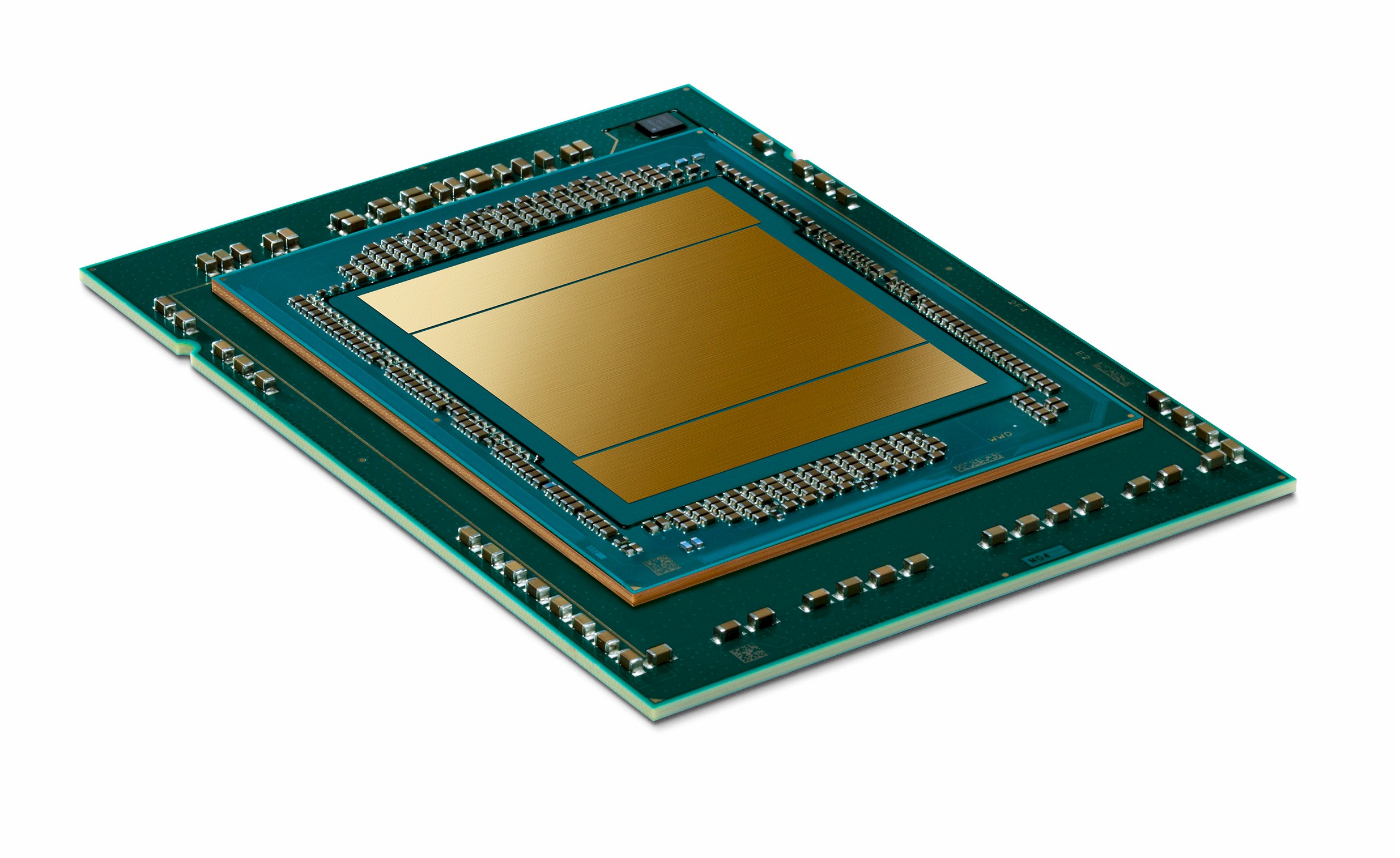

Intel over the past year has waded deep into these roiling waters, buoyed by its portfolio of Intel Xeon 6 processors that represent an evolution in the chip maker’s architecture that takes into account the broad diversity of modern workloads, from power-hungry AI and HPC operations to the high density and low power needed for space-constrained edge and IoT jobs.

With all of this in mind, the company at its Intel Vision event in April 2024 unveiled how it revamped its venerable Intel Xeon datacenter processors for the modern IT world, and did so in strikingly simple ways, with the foundation being the introduction of two microarchitectures aimed at different workloads rather than a single CPU core to handle all of them.

That allowed Intel to bring forth two Intel Xeon 6 core types: a Performance-core (P-core) with its industry-best memory bandwidth and throughput for compute-intensive workloads like AI and HPC, and an Efficient-core (E-core) for high-density and scale-out workloads, which can cover the edge and IoT devices along with increasingly popular cloud-native and hyperscale applications.

Intel released the first of the E-core processors in mid-2024 and the initial P-cores a couple of months later. In February 2025, the chip maker rounded out the Intel Xeon 6 family with a host of CPUs, including the Intel Xeon 6700P for the broadest mainstream use. The portfolio is set.

Diverse And Complementary

The modular design of the Intel Xeon 6 CPUs covers a broad array of workloads, offering both high versatility and a complementary nature for any workload or environment, including private, public, and hybrid clouds running everything from high-density and scale-out jobs to high-performance, multi-core AI operations. Intel Xeon 6 with E-cores can be used for datacenter consolidation initiatives that make room for modern AI systems running on chips with P-cores.

Datacenters also can mix Intel Xeon 6 P-core and E-core processors, moving workloads from one core type to another as power and performance needs change, making datacenter scaling easier and more efficient.

All of this means faster business results across a wide spectrum of workloads. Organizations have a choice of microarchitecture and cores, high memory bandwidth, and I/O for myriad workloads. Performance and efficiency are further improved with capabilities like support for Multiplexed Rank DIMM (MRDIMM) supporting memory-bound AI and HPC workloads, enhancements to Compute Express Link (CXL), and integrated accelerators.

Enterprises also have a choice of four Intel Xeon 6 processor series with a range of features – including more cores, a larger cache, higher-capacity memory, and improved I/O – for entry-level to high-end workloads. All the while, every processor shares a compatible x86 instruction set architecture (ISA) and common hardware platform.

Significant Changes By Intel

The versatility delivered through having two microarchitectures is significant. Intel Xeon 6 CPUs with P-cores are aimed at a broad range of workloads, from AI to HPC, with better performance than any other general-purpose chip for AI inference, machine learning, and other compute-intensive jobs.

The improved performance-per-vCPU for floating point operations, transactional databases, and HPC applications also makes the chips perfect for cloud workloads.

For Intel Xeon 6 processors with E-cores, the job is more about performance per watt and high core density for cloud workloads that call for high task-parallel throughput, and for environments with limited power, space, and cooling.

There isn’t a datacenter workload whose performance or efficiency isn’t improved by using Intel Xeon 6 chips.

Xeon 6 Use Cases

Here are some key areas that the new Intel Xeon 6 chips will excel in:

- AI workloads: The complexity and adoption of AI will only grow, and with it will come higher costs. Some of that comes from expensive GPUs being used now for AI workloads that less costly and more efficient CPUs can handle. The Intel Xeon 6 chips come with more cores (up to 128 cores per CPU) and better memory bandwidth through MRDIMM. There is AI acceleration integrated in every core, and the processors allow for greater server consolidation, saving space and power. Intel’s AMX (Advanced Matrix Extensions) is a key element for AI acceleration. It supports INT8, BF16, and FP16 data types, boosting model speed and efficiency and accelerating AI training and inferencing.

- Host CPU: One way to stem the rising costs and power consumption that come with predictive AI, generative AI, and HPC is by creating an AI-accelerated system that includes a host CPU and discrete AI accelerators. The host CPU is the conductor of the AI orchestra, optimizing processing performance and resource utilization and running other jobs, from managing tasks to preprocessing, processing, and job offloading to GPUs or Intel’s Gaudi AI accelerators to ensure the system’s performance and efficiency. Intel Xeon 6 CPUs include such features as high I/O bandwidth, higher core counts than competitive chips, memory speeds that are as much as 30 percent higher than those of Epyc chips, and flexibility for mixed workloads. These features combine to make Intel Xeon 6 a great host CPU option.

- Server consolidation: In this area, it comes down to math. The more efficient and better performing the CPU, the fewer servers are needed to do the job, saving space, power, and money. Intel Xeon 6 chips with P-cores provide twice the performance on a wide range of workloads, with more cores, twice the memory bandwidth, and AI acceleration in every core. Switching from 2nd Gen Xeons to Intel Xeon 6 means a 5:1 reduction in servers needed, freeing up rack space and reducing the datacenter footprint. It reduces the server count by 80 percent, the carbon emissions and power by 51 percent, and TCO by 60 percent. Intel Xeon 6 with E-cores is almost as good, with a 4:1 server consolidation and reductions in server count (70 percent), carbon emissions (53 percent), and TCO (53 percent).

- One-socket systems: Single-socket systems are trending again. The cores-per-socket count continues to expand, most applications can fit in a single socket, and markets and use cases are moving in that direction. Intel is meeting that demand with one-socket SKUs of Intel Xeon 6700/6500 products to bring greater I/O in a single socket. Fewer sockets and CPUs mean improved efficiencies, better TCO, and less unnecessary scaling. Not every workload needs to scale. Single-socket chips can benefit a range of datacenter use cases, from storage and scale-out databases to VDI, and edge operations like content delivery networks and IoT. All of these can benefit from more I/O per socket. For organizations, that means meeting I/O count mandates for specific workloads, consolidating servers, and reducing TCO. The single-socket Intel Xeon 6 chips can deliver 136 PCIe lanes, which can handle myriad workloads. If that’s enough for your requirements, you don’t need a two-socket system.
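
The "it comes down to math" point in the server consolidation item can be illustrated with a small sketch. This is only the idealized arithmetic behind an N:1 consolidation ratio, not Intel's sizing methodology; the function names and the 100-server fleet are hypothetical. Note that a strict 4:1 ratio works out to 75 percent, so the 70 percent figure quoted for E-cores presumably reflects a rounded ratio or workload-specific measurements.

```python
def servers_after(old_servers: int, ratio: int) -> int:
    """Servers needed after an idealized ratio:1 consolidation, rounded up."""
    return -(-old_servers // ratio)  # integer ceiling division


def reduction_percent(ratio: int) -> float:
    """Percentage drop in server count implied by a ratio:1 consolidation."""
    return (ratio - 1) * 100 / ratio


if __name__ == "__main__":
    fleet = 100  # hypothetical starting fleet, not a figure from the article
    for ratio in (5, 4):
        remaining = servers_after(fleet, ratio)
        print(f"{ratio}:1 -> {remaining} servers, "
              f"{reduction_percent(ratio):.0f} percent fewer")
```

For the 5:1 case this reproduces the 80 percent server-count reduction cited above; power, emissions, and TCO savings depend on measured workloads and cannot be derived from the ratio alone.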

The datacenter field is changing rapidly, with AI, HPC, and other factors weighing on it. Amid all this, efficiency, performance, and versatility become paramount, and that’s what Intel Xeon 6 CPUs deliver. With two microarchitectures, enterprises can choose between the high-powered P-cores or E-cores made for space-constrained environments like IoT and the edge. Or they can use both together.

In a datacenter environment that is becoming awash in AI, Intel’s latest-generation Xeons are letting the IT world know that AI isn’t only a GPU’s game and that significant performance gains and power savings are being delivered by these flexible, versatile CPUs.
