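Investigating the factors influencing users' adoption of artificial intelligence health assistants based on an extended UTAUT model

Author: Li, Zhirong

Introduction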

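An AI virtual assistant is an advanced software solution capable of interpreting users' natural-language instructions and performing tasks or providing services [1]. These systems rely on artificial intelligence algorithms and integrate automatic speech recognition (ASR) with natural language understanding (NLU) to carry out their functions [2]. AI virtual assistants have been widely incorporated into smart devices, including smartphones, smart speakers, smart wearables, smart TVs, and smart cars, all of which can respond to user commands expressed in natural language and perform the relevant tasks or requests [1]. Previous research indicates that approximately 85% of smartphones are currently equipped with virtual assistant functionality [3]. In addition, 44% of users interact with virtual assistants such as Google Assistant, Cortana, Alexa, and Siri across different devices [3]. As interest in AI virtual assistants and the frequency of their use continue to grow [4], their range of applications is also expanding, and they play a variety of roles in different settings [2]. The technology has made significant advances in mental health care [5], customer service [6], personalized education programs [7], vehicle transportation solutions [8], and healthcare delivery [9].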

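Chatbots and AI-powered virtual assistants are increasingly regarded as key to the development of healthcare [10]. Their emergence is changing the landscape of healthcare delivery, and they are rapidly being integrated into real-world settings [11]. In recent years, research on the development and evaluation of virtual health assistants has expanded to cover a wide variety of application scenarios. Examples include a bot designed to support the self-management of chronic pain [12], medical chatbots that assist with self-diagnosis [13], a chatbot that predicts heart disease through intelligent voice recognition [14], and a bot intended to promote fertility awareness and preconception health among women of reproductive age [15]. There are also virtual assistants dedicated to physical exercise and diet management [16], as well as a mental health chatbot that supports adolescents with depression and anxiety [17].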

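As an important branch of artificial intelligence, AI health assistants are an integral part of personalized healthy-lifestyle recommendation systems and fall within the domain of decision support [18]. Their main function is to help users and patients adopt healthier lifestyles through tailored interactions, while supporting the management of chronic diseases associated with unhealthy habits [19]. Applications of AI health assistants in healthcare focus primarily on health management and are expected to help reduce hospitalization rates, outpatient visits, and treatment requirements [20]. A patient's overall health is influenced by a variety of factors [21, 22]. Drawing on advanced algorithms, AI health assistants can carefully assess and classify users' health problems while providing comprehensive responses to specific queries [23, 24]. Users can ask questions about their health or seek information about diseases through voice or text. Natural language understanding and machine learning enable AI health assistants to interpret and analyze user commands accurately [25]. In response to specific user requests, these systems can perform a range of tasks, such as offering personalized health advice, reminding users to take medication on time, or scheduling medical appointments [26]. Today, many patients and prospective patients can use AI health online platforms to proactively investigate their own health conditions or obtain information about diseases [27]. This development has the potential to ease some of the limitations of telemedicine, such as long waits for a definitive response from a specialist and the additional costs incurred when consulting physicians online [28]. AI health assistants not only help disseminate basic health knowledge but can also enhance early disease diagnosis, facilitate the design of personalized treatment plans, and improve follow-up efficiency [20]. These advances therefore support individuals in maintaining optimal health.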

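Although AI health assistants offer many advantages to contemporary healthcare systems, their widespread application and implementation are accompanied by several challenges [29]. These include privacy protection, cybersecurity, data ownership and sharing, medical ethics, and the risk of system failure [6, 22, 30, 31, 32]. Given the nature of healthcare delivery, ethical issues are particularly prominent. The use of AI technology may threaten patient safety and privacy [22, 32], potentially leading to a crisis of trust and a variety of risks. Such problems may be significant barriers to user acceptance of AI health assistants [33, 34].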

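User acceptance refers to an individual's behavioral intention or willingness to use, purchase, or try a system or service [35], and it is critical to the successful promotion of any system [36]. Given the great potential of AI health assistants, ensuring a high level of acceptance is essential. Low acceptance may hinder the spread of AI health assistants, waste medical resources, create an oversupply of AI devices, and even stifle technological innovation, ultimately affecting patients' interests [35, 37]. Technology acceptance is a key indicator of users' willingness to adopt AI health assistants [38]. There is already a considerable body of research on the acceptance of AI systems in the medical and health field [35]. However, most investigations have concentrated on medical professionals or on technical aspects such as algorithm interpretability and physician interaction scenarios [39, 40, 41, 42]. Although these studies have contributed substantially to the field, research on the general public's acceptance of AI health assistants in health consultation settings remains limited. This gap may hinder researchers' understanding of the psychological mechanisms that non-professional users experience when interacting with AI health assistants. This study therefore sets its theoretical boundary in the context of health consultation and decision-making scenarios for ordinary users, and aims to explore their acceptance and use of AI health assistants.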

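Users' perceived trust is fundamentally shaped by their assessment of a system's effectiveness, integrity, and competence [43]. In the adoption of AI health assistants, perceived trust plays a key role: it affects not only whether users are willing to interact with the system for the first time, but also whether they continue to rely on and use it for health management [29]. Conversely, when users perceive a higher level of risk, their trust in the system decreases accordingly [33]. This apprehension and uncertainty about potential risks directly affects users' trust assessment of AI assistants [44] and, in turn, their behavioral intention to use them [33]. This study therefore aims to clarify the behavioral intention associated with users' adoption of AI health assistants by integrating perceptions of trust and risk.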

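Overall, the objectives of this study are as follows: (1) to examine users' acceptance of AI health assistants and their intention to use these technologies; (2) to analyze how perceived trust and perceived risk influence users' decisions to use AI health assistants; and (3) to explore the interrelationships among these factors. To achieve these objectives, we extended the UTAUT with two core variables, perceived trust and perceived risk, to develop a new research model for predicting individuals' behavioral intention when engaging with AI health assistants. The paper is structured as follows. The first section is the introduction, which outlines the background and objectives of the research. The second section presents a literature review that establishes the theoretical framework. The third section elaborates the extended UTAUT model and the associated hypotheses. The fourth section describes the research method. The fifth section reports the results of the data analysis. The sixth section discusses the implications of the findings and interprets the results. Finally, the seventh section provides a conclusion that summarizes our findings, highlights the limitations of the study, and proposes directions for future research. Through this structured approach, the study aims to provide a comprehensive understanding of the key factors and underlying mechanisms that influence user acceptance of AI health assistants.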

Related research

UTAUT: unified theory of acceptance and use of technology

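The acceptance and use of information systems (IS) and information technology (IT) innovations has become a key issue in both academic research and practical application [45]. Understanding the factors that lead users to accept or reject new technologies has become an important task throughout the IS/IT life cycle [46, 47]. To investigate this topic, previous research has introduced a series of theoretical models, including the theory of reasoned action (TRA) [48], the theory of planned behavior (TPB) [49], the technology acceptance model (TAM) [36], and innovation diffusion theory (IDT) [50], among others. The UTAUT, proposed by Venkatesh et al. [51], aims to establish a comprehensive framework for explaining and predicting technology acceptance behavior [52]. The model was developed by integrating eight well-known theories and models: TRA, TPB, TAM, a combined TPB/TAM, the motivational model, the model of PC utilization, social cognitive theory (SCT), and IDT [53]. By synthesizing key concepts from these influential frameworks, UTAUT offers a more comprehensive approach to analyzing the dynamics of technology acceptance [54].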

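The UTAUT model is powerful because it identifies four key constructs, performance expectancy (PE), effort expectancy (EE), social influence (SI), and facilitating conditions (FC), which jointly influence behavioral intention and actual behavior. Compared with earlier models of behavioral intention, the UTAUT framework shows greater explanatory power (Venkatesh et al., 2003). Specifically, UTAUT treats these four core constructs as direct determinants of behavioral intention and, ultimately, behavior. PE refers to the degree to which an individual believes that using a system will enhance his or her job performance. EE denotes the degree of ease associated with using the system. SI reflects the importance an individual attaches to others' views on whether he or she should use the new system. Finally, FC denotes the degree to which an individual believes that an organizational and technical infrastructure exists to support effective use of the system (Venkatesh et al., 2003). In addition, UTAUT includes moderating variables such as gender, age, voluntariness of use, and experience.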

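As a well-established theoretical model, UTAUT has been applied widely across fields, which demonstrates its broad applicability. It has been used to investigate numerous technology acceptance scenarios, including digital libraries [55], the Internet of Things [56], artificial intelligence [40, 57], e-health technologies [58], e-government initiatives [59], bike-sharing systems [60], and educational chatbots [61]. Together, these studies confirm that UTAUT can effectively explain and predict users' acceptance of and willingness to adopt new technologies, especially in contexts that involve interaction with technology [53]. Moreover, by integrating domain-specific variables or extending the model itself, UTAUT's explanatory power can be further enhanced to meet the needs of different technologies and contexts [35]. This study therefore uses UTAUT as its theoretical framework to explore the key factors that influence user acceptance of AI health assistants, while also validating its applicability and effectiveness in AI-based healthcare.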

Research on the acceptance of AI systems

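Acceptance research on AI systems has focused on customer service [62], education [7], consumer goods [63], and other domains. Previous studies have used several models to evaluate the use of AI systems, including TAM, UTAUT, and the Artificially Intelligent Device Use Acceptance (AIDUA) model [64]. The AIDUA framework comprises three stages of acceptance generation: primary appraisal, secondary appraisal, and the outcome stage. It also identifies six antecedents: social influence, hedonic motivation, anthropomorphism, performance expectancy, effort expectancy, and emotion. Gursoy et al. [64] found that social influence and hedonic motivation are positively related to performance expectancy in the context of AI devices, and that anthropomorphism is positively related to effort expectancy. In addition, Li [65] explored college students' actual use of AI systems by adding attitude and learning motivation to the original TAM constructs of perceived usefulness, perceived ease of use, behavioral intention, and actual use. The perceived usefulness and ease of use of AI systems were found to positively affect students' attitudes, behavioral intentions, and actual use. However, students' attitudes toward AI systems had little effect on learning motivation to achieve goals or on subjective norms. Xiong et al. [33] subsequently extended the UTAUT model by incorporating users' perceptions of trust and risk to better understand the acceptance of AI virtual assistants. Their findings showed that performance expectancy, effort expectancy, social influence, and facilitating conditions were positively related to the behavioral intention to use AI virtual assistants. Furthermore, trust had a positive effect, whereas perceived risk had a negative effect, on both attitudes toward and behavioral intention to use AI virtual assistants.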

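In healthcare, research on the acceptance of AI systems has focused mainly on medical professionals. Alhashmi et al. [39] surveyed employees in the health and IT sectors and found that management, organizational structure, operational processes, and IT infrastructure significantly enhance the perceived ease of use and perceived usefulness of AI initiatives. Lin et al. [40] examined attitudes, intentions, and related influencing factors concerning the learning of AI applications among medical staff, and identified subjective norms and perceived ease of use as key determinants of behavioral intention. Similarly, So et al. [41] concluded that there is a significant relationship between perceived usefulness and subjective norms in AI acceptance; both factors directly influence medical staff's attitudes toward embracing AI technology. Fan et al. [66] used the UTAUT framework to investigate healthcare professionals' adoption of an AI-based medical diagnosis support system (AIMDSS). The results showed that initial trust and performance expectancy have a substantial effect on the behavioral intention to use AIMDSS, whereas effort expectancy and social influence did not have a significant effect on behavioral intention. A more comprehensive overview of these studies is provided in Table 1, which gives a partial summary of the findings, including the researchers, subjects, theoretical framework, methodology, country, and key results.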

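Perceived trust and perceived risk have consistently been important factors in acceptance research on AI systems, and many previous studies have treated them as extension constructs [4, 68, 70]. Research shows that trust, privacy concerns, and risk expectations jointly predict the intention, willingness, and behavior of using AI across industries [35]. Users are concerned about how AI uses their financial, health-related, and other personal information [71], because sharing such data raises substantial privacy issues [72]. Liu and Tao [73] and Guo et al. [74] found that loss of privacy adversely affects trust; consumers with heightened privacy concerns are therefore less inclined to trust these services. Prakash and Das [68] confirmed that users' perceptions of threat and risk strongly affect their resistance to adopting AI systems. Choung et al. [75] extended TAM by showing that trust positively predicts perceived usefulness. Liu and Tao [73] also found that trust directly affects the behavioral intention to use smart healthcare services. In the study by Miltgen et al. [76], trust was the strongest predictor of behavioral intention when using AI for iris-scanning applications. Confidence in both AI technology and its providers is therefore a key driver of the acceptance of AI solutions [35]. It is worth noting, however, that previous acceptance studies have often analyzed perceived trust and perceived risk as separate independent variables. For example, researchers have examined the inhibiting effect of privacy risk on adoption intention in isolation, or discussed the facilitating role of technology trust. Studies that explore the interaction between the two factors in the context of AI health assistants remain limited. This study therefore examines the effect of perceived trust on perceived risk. It also seeks to confirm how perceived trust affects the performance expectancy and effort expectancy of AI health assistants.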

Proposed model and hypotheses

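Given UTAUT's comprehensiveness, strong predictive power, and high extensibility [45], as well as its applicability to complex technologies such as artificial intelligence [33, 57], this study extends the model and builds on it for in-depth exploration. The literature review shows that UTAUT integrates several classic theories of technology acceptance (including TAM, IDT, and TPB) and covers most of the key factors that influence users' technology acceptance. For an emerging technology such as AI health assistants in particular, UTAUT can explain user behavior along multiple dimensions, such as performance expectancy, effort expectancy, and social influence. Most previous studies of the acceptance of AI systems and related products have adopted UTAUT [40, 57, 77], which further supports its applicability and validity in this field. In addition, UTAUT's flexibility and extensibility allow researchers to incorporate new variables tailored to specific research needs so that the model fits different research contexts.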

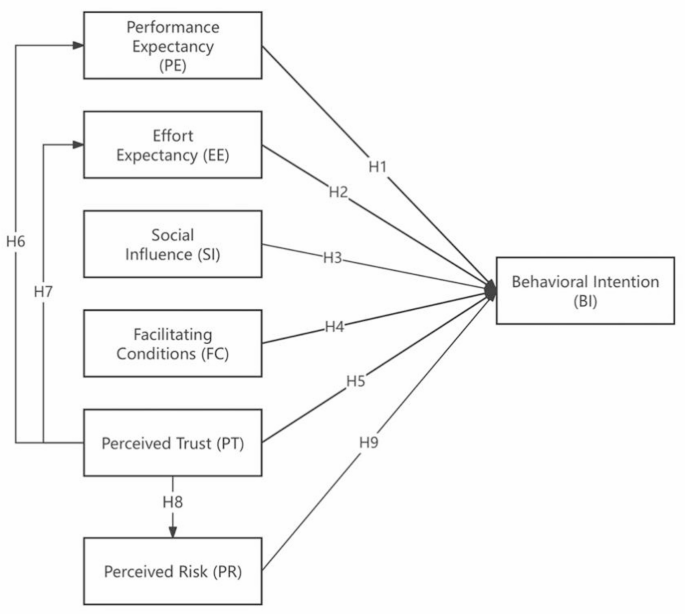

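The research model is based on the UTAUT framework, which comprises performance expectancy, effort expectancy, social influence, and facilitating conditions. To extend the UTAUT model, two further constructs, perceived trust and perceived risk, were incorporated. User acceptance of AI health assistants is assessed through behavioral intention. Behavioral intention is the dependent variable, while performance expectancy, effort expectancy, social influence, facilitating conditions, perceived trust, and perceived risk are treated as independent variables. The proposed research model and its hypotheses are shown in Fig. 1.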

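Performance expectancy

Performance expectancy is defined as the degree to which an individual believes that using the system will help him or her improve job performance [51]. The concept also reflects the extent to which using a technology benefits users in performing specific activities [52]. Many studies have shown that, within the UTAUT framework, performance expectancy positively affects individuals' willingness to adopt a technology [45, 51, 78]. Previous research on AI systems has likewise confirmed the significant effect of performance expectancy on usage intention [79, 80]. In the context of AI health assistants, performance expectancy relates to the ability of AI health systems to provide services that make users' task execution more efficient. This study therefore argues that AI health assistants can help users access health information more effectively and improve the efficiency of their consultations, thereby increasing their willingness to use the application. On this basis, we propose the following hypothesis:

H1: Performance expectancy positively affects the behavioral intention to use the AI health assistant.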

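Effort expectancy

Effort expectancy refers to the ease of use associated with a particular system [51] and is also linked to how users interact with the technology [52]. Effort expectancy has shown positive effects in studies of AI acceptance [33, 40, 81]. Some studies further indicate that, although its effect may be modest, it remains positive [82]. In the context of AI health assistants, we therefore hypothesize that effort expectancy will have a favorable effect on behavioral intention.

H2: Effort expectancy positively affects the behavioral intention to use the AI health assistant.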

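Social influence

Social influence is defined as an individual's perception of others' expectations that he or she should adopt a particular technology, and the extent to which these external views affect the individual's acceptance and use of that technology [51]. Previous research has shown that users are more inclined to engage with a system when it is endorsed by important people in their social circle [51, 83]. In other words, stronger social influence is associated with greater willingness to adopt a new technology. Previous studies indicate that the same holds for the acceptance of AI systems [84, 85]. This study therefore hypothesizes that users' perceptions of social influence will positively affect their willingness to use AI health assistants.

H3: Social influence positively affects the behavioral intention to use the AI health assistant.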

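Facilitating conditions

Facilitating conditions refer to the degree to which an individual believes that organizational and technical infrastructure exists to support the use of a technology [51]. The concept covers external factors and objective circumstances in the user's environment that affect how easy a behavior is to perform [86]. Facilitating conditions comprise two dimensions: technology-related conditions and resource-related conditions [87]. In this study, we define facilitating conditions as the computing facilities and technical support available while using an AI health system. It is worth noting, however, that in the original UTAUT proposed by Venkatesh et al. [51], FC is defined as a variable that directly affects actual use (AU) rather than behavioral intention (BI). The original theory holds that, even when usage intention is strong, actual use can still be constrained when the necessary resources or technical support (for example, device compatibility and prior knowledge) are lacking; FC therefore acts directly on AU [51]. In the context of this study, however, the adoption of AI technology in Chinese healthcare is still at an early stage of promotion. We therefore propose that users' perceptions of the availability of technical support may influence their adoption decisions in advance. For example, when users learn that they can obtain official training resources or a guarantee of device compatibility, this may directly prompt them to form an intention to use, rather than having a positive effect only at the stage of actual use. In addition, previous research on AI systems has shown a significant positive relationship between FC and BI [80, 88, 89]. On this basis, we propose the following hypothesis:

H4: Facilitating conditions positively affect the behavioral intention to use the AI health assistant.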

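Perceived trust

Perceived trust is defined as a user's belief that an agent can help him or her achieve goals in uncertain situations [90]. Trust in technology plays a crucial role in predicting users' behavioral intention across a range of online systems, including mobile payment [91], information systems [92], online shopping [93], and e-commerce [94]. Most studies show that trust has a significant positive effect on performance expectancy [95, 96], on the ease of use of a system [97], and on the intention to use a technology [97]. However, some studies of AI systems suggest that the relationship between trust and performance expectancy is not always significant [33]. This indicates that, in the context of AI health assistants, the interplay between trust and these variables needs further exploration. In addition, users may experience psychological risk when facing a new technology, which manifests as fear, hesitation, and other negative emotions [43]. In the domain of AI assistants, trust is therefore closely related to perceived risk. Trust can strengthen users' confidence in a technology while easing their concerns about adopting new innovations [97]. Previous research has confirmed a negative relationship between trust and perceived risk in Internet technology contexts [98, 99]. On this basis, we propose the following hypotheses:

H5: Perceived trust positively affects the behavioral intention to use the AI health assistant.

H6: Perceived trust positively affects the performance expectancy of using the AI health assistant.

H7: Perceived trust positively affects the effort expectancy of using the AI health assistant.

H8: Perceived trust negatively affects the perceived risk of using the AI health assistant.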

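Perceived risk

Perceived risk is defined as the uncertainty about, and expectation of, negative outcomes that may arise when an intended goal is not achieved [100]. In services powered by AI technology, perceived risk spans several dimensions, including personal risk, psychological risk, economic risk, privacy risk, and technical risk, each of which is associated with potential losses [97, 101]. This study focuses specifically on risks related to AI health systems, with an emphasis on privacy and security. Perceived risk can evoke negative emotions and subsequently affect users' behavioral intention [102], a phenomenon that is particularly evident in the context of healthcare systems [103]. When individuals experience discomfort due to uncertainty and ambiguity, they are more inclined to refrain from using such systems in order to avoid confronting these risks [104]. Based on this analysis, we propose the following hypothesis: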

H9: Perceived risk negatively affects behavioral intent to use the AI health assistant.
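Taken together, hypotheses H1 to H9 can be written as a small system of structural equations. The sketch below is only one way to summarise them: the coefficient symbols $\gamma_1,\dots,\gamma_9$ (numbered to match the hypotheses) and the disturbance terms $\zeta$ are notation assumed here for illustration, not taken from the study.

```latex
\begin{aligned}
PE &= \gamma_{6}\,PT + \zeta_{PE} \\
EE &= \gamma_{7}\,PT + \zeta_{EE} \\
PR &= \gamma_{8}\,PT + \zeta_{PR} \\
BI &= \gamma_{1}\,PE + \gamma_{2}\,EE + \gamma_{3}\,SI + \gamma_{4}\,FC
      + \gamma_{5}\,PT + \gamma_{9}\,PR + \zeta_{BI}
\end{aligned}
```

Under this notation, H1 to H7 each predict a positive coefficient ($\gamma_1,\dots,\gamma_7 > 0$), while H8 and H9 predict negative ones ($\gamma_8 < 0$, $\gamma_9 < 0$).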

Method design

Materials

The IFLY Healthcare application (app) served as the experimental material for this study. Developed by IFlytek, the app is an AI-powered health management tool designed to function as a personal AI health assistant for users. It integrates various features, including disease self-examination, report interpretation, drug inquiries, medical information searches, and health file management. It primarily targets ordinary Chinese users and offers comprehensive health consultation services. Since its official launch in October 2023, the app has been downloaded over 12 million times and has a positive rating of 98.8% along with an active recommendation rate of 42%.

Questionnaire development

The questionnaire is structured into three sections. The first gathers demographic information, including gender, age, education level, and experience with the IFLY Healthcare app. The second comprises items that assess the four UTAUT constructs (performance expectancy, effort expectancy, social influence, and facilitating conditions), together with items that evaluate BI regarding the use of AI health assistants. The measurement items for each construct were adapted from prior research demonstrating their reliability and validity [51, 52]. All items gauge respondents' perceptions of specific statements, such as "I think the app can provide useful health consultation services." The third section contains items that measure participants' perceived trust and perceived risk associated with using this technology; all measures in this section were adapted from previous studies [33]. Perceived trust is assessed across four dimensions: reliability, accuracy, worthiness, and overall trust. Perceived risk is evaluated in terms of privacy, information security, and overall risk. All items were measured on a seven-point Likert scale (where "1" indicates strong disagreement and "7" indicates strong agreement). The supplementary material presents the structure, items, and sources of the measurements used in this study. In structural equation modeling (SEM), it is recommended that each construct comprise no fewer than three items [105]. This study used a total of 23 items, with each construct containing three or four items. This approach was adopted to limit participant fatigue and boredom resulting from an excessive number of items, factors that could adversely affect response quality and completion rates [106]. Consequently, we aimed to simplify the questionnaire as much as possible while preserving its clarity.

Pilot study

After determining the structure and candidate items, we invited five experts with backgrounds in AI and healthcare research to discuss the questionnaire and clarify the objectives and scope of the study. To ensure the face validity of the survey instrument, we incorporated their suggestions and comments into a structured questionnaire, which was then confirmed as the pilot version. The experts found no apparent issues with the construction or presentation of the items. However, they recommended that participants watch a tutorial video on using the IFLY Healthcare app before completing the questionnaire. Following this recommendation, participants were also asked to use a specific feature of the app before filling out the evaluation questionnaire, and we refined our experimental procedures and protocols accordingly. Pilot studies are essential for verifying item comprehension and experimental procedures before data collection. Through social platforms such as Weibo and WeChat, we randomly selected 35 participants who had experience using AI systems. All participants completed the survey; most reported that the items were easy to understand, and completion took between one and five minutes. Four participants were unsure what was meant by "I have the resources necessary to use the app." In response, we revised the wording to improve clarity by adding explanations relating to hardware and software.

Participants

We recruited 400 Chinese users online. Before the survey, all participants provided informed consent by signing informed consent forms. A total of 400 responses were collected. Screening removed 27 samples deemed invalid. The screening criteria were completion times of less than one minute (n = 9), response repetition rates exceeding 85% (n = 15), missing data (n = 0), and outliers identified through SPSS analysis (n = 3). The number of valid samples was therefore 373. This sample size exceeds ten times the number of items, which supports reliable results from CB-SEM analysis [107, 108]. The demographic results show that the participants comprised 114 men and 259 women (see Table 2). The majority were aged between 19 and 39 years (80.7%) and held a bachelor's degree (82.04%). In addition, a substantial portion had experience using the IFLY Healthcare app (62.73%).

Table 2. Descriptive statistics of the participants.

1. Researchers disseminated recruitment advertisements via online social platforms, including Weibo, WeChat, Xiaohongshu, and relevant forums. Participants who expressed interest reviewed the experiment's introduction and signed an informed consent form.

2. Upon signing the consent form, participants accessed the introduction page by clicking on a link or scanning a QR code. They then read the experimental descriptions and viewed a 2-minute tutorial video demonstrating how to use the IFLY Healthcare app.

3. Participants were instructed to install and access the IFLY Healthcare app. After completing their health management profile with basic information, they engaged in at least two back-and-forth conversations with the AI health assistant for a maximum duration of 10 min.

4. Following either the completion of these interactions or after 10 min had elapsed, participants submitted screenshots to the lab assistant for verification. Once confirmed, participants clicked on a link to complete an online questionnaire.

5. After receiving the submitted questionnaires, the lab assistant verified whether any data were missing and issued a payment of 5 yuan upon confirmation that all data were complete.
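The screening rules reported for the participant data (completion time under one minute, repetition rate above 85%, missing data) and the ten-responses-per-item rule of thumb can be sketched in a few lines of Python. This is a minimal illustration, not the authors' procedure: the record layout (`duration_s`, `answers`), the function names, and the reading of "repetition rate" as the share of items that carry the respondent's most frequent answer are all assumptions, and the SPSS-based outlier step is not reproduced.

```python
from collections import Counter

def screen_responses(responses, min_seconds=60, max_repeat_rate=0.85):
    """Split responses into valid ones and a tally of exclusion reasons.

    Each response is a dict with hypothetical keys:
      "duration_s": completion time in seconds
      "answers":    non-empty list of Likert answers (None marks a missing answer)
    """
    valid, reasons = [], Counter()
    for r in responses:
        answers = r["answers"]
        if r["duration_s"] < min_seconds:
            reasons["too_fast"] += 1          # finished in under one minute
        elif any(a is None for a in answers):
            reasons["missing"] += 1           # at least one unanswered item
        elif Counter(answers).most_common(1)[0][1] / len(answers) > max_repeat_rate:
            reasons["repetitive"] += 1        # same answer on more than 85% of items
        else:
            valid.append(r)
    return valid, reasons

def meets_ten_per_item_rule(n_valid, n_items):
    """Rule of thumb cited in the text: sample size of at least ten times the item count."""
    return n_valid >= 10 * n_items

# Toy data, invented for illustration only.
sample = [
    {"duration_s": 45,  "answers": [4, 5, 6, 5]},   # too fast
    {"duration_s": 200, "answers": [7, 7, 7, 7]},   # identical answers throughout
    {"duration_s": 180, "answers": [5, 6, 4, 5]},   # kept
]
valid, reasons = screen_responses(sample)
print(len(valid), dict(reasons))
print(meets_ten_per_item_rule(373, 23))  # 373 valid responses, 23 items
```

Each response is counted under the first rule it fails, so the tallies do not double count a response that violates several rules.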

Results

Measurement evaluation

SEM offers a versatile approach for constructing models based on latent variables, which are connected to observable variables through measurement models [109, 110]. It supports the analysis of correlations among underlying variables while accounting for measurement error, which makes it possible to examine relationships between mental constructs [111]. Two widely used SEM methods are covariance-based SEM (CB-SEM) and partial least squares SEM (PLS-SEM). Both are effective for developing and analyzing structural relationships, but CB-SEM imposes stricter requirements on data quality than the more accommodating PLS-SEM [112]. CB-SEM rigorously tests the theoretical validity of complex path relationships among multiple variables by assessing the fit between the model's covariance matrix and the sample data. Its precise estimation of factor loadings and measurement errors also supports an accurate evaluation of construct validity [113]. Research further suggests that CB-SEM is particularly well suited to factor-based models, whereas composite-based models yield better results with PLS-SEM [112]. This study therefore adopted CB-SEM. Two software packages were used for data analysis: the Statistical Package for the Social Sciences (SPSS) and Analysis of Moment Structures (AMOS). Before analysis, the sample was cleaned and screened to ensure that the data were complete, with no missing values, and that invalid data points had been removed. Normality should be assessed before further analysis. In this research, the Jarque-Bera test (which evaluates skewness and kurtosis) was used to determine whether the dataset followed a normal distribution. According to Tabachnick et al. [114], skewness and kurtosis values below 2.58 are considered to fall within the normal range. By this guideline, all items in the dataset exhibited a normal distribution (i.e., < 2.58).
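The normality screen described above can be illustrated with a short, dependency-free sketch that computes moment-based skewness and excess kurtosis for one item and compares their absolute values with the 2.58 cutoff. The text does not say whether the cutoff was applied to raw or standardised statistics, so applying it to the raw values here is an assumption, as are the function names and the toy data.

```python
def skewness_and_excess_kurtosis(values):
    """Moment-based sample skewness and excess kurtosis (normal distribution -> 0, 0).

    Assumes the values are not all identical (non-zero variance).
    """
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n   # second central moment
    m3 = sum((v - mean) ** 3 for v in values) / n   # third central moment
    m4 = sum((v - mean) ** 4 for v in values) / n   # fourth central moment
    skew = m3 / m2 ** 1.5
    excess_kurt = m4 / m2 ** 2 - 3.0
    return skew, excess_kurt

def within_normal_range(values, cutoff=2.58):
    """True when both |skewness| and |excess kurtosis| are below the cutoff."""
    skew, kurt = skewness_and_excess_kurtosis(values)
    return abs(skew) < cutoff and abs(kurt) < cutoff

# Toy seven-point Likert items, invented for illustration only.
symmetric_item = [1, 2, 3, 4, 5, 6, 7]   # evenly spread answers
skewed_item = [1] * 20 + [7]             # nearly everyone answers 1

print(within_normal_range(symmetric_item))
print(within_normal_range(skewed_item))
```

In practice a statistics package would usually be used instead; the point of the sketch is only to make the per-item check explicit.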

We then evaluated the convergent validity, discriminant validity, and model fit of the proposed structure. According to established guidelines, a factor loading of 0.70 or higher indicates good indicator reliability; composite reliability (CR) and Cronbach's alpha values exceeding 0.70 signify adequate internal consistency reliability; an average variance extracted (AVE) of 0.50 or greater indicates sufficient convergent validity; and the square root of each AVE should exceed the corresponding inter-construct correlations to demonstrate adequate discriminant validity [107, 115, 116]. As presented in Table 3, Cronbach's alpha ranges from 0.838 to 0.897, so all values exceed 0.8. CR and AVE meet the recommended thresholds of 0.7 and 0.5, respectively, for all constructs, and the standardized factor loadings of all measurement items are above 0.7, indicating strong internal consistency. Furthermore, as shown in Table 4, the square root of the AVE for each construct is greater than its estimated correlation with the other constructs. Each construct is thus more closely related to its own measures than to those of other constructs in this study, which supports discriminant validity. In addition, the heterotrait-monotrait (HTMT) ratio of correlations supplements and reconfirms discriminant validity: the results in Table 5 show that all values are below 0.90, indicating good discriminant validity [117].
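To make the reliability and validity criteria concrete, the sketch below computes composite reliability and AVE from standardized loadings using the usual formulas, $CR = (\sum\lambda)^2 / \big((\sum\lambda)^2 + \sum(1-\lambda^2)\big)$ and $AVE = \sum\lambda^2 / k$, and then applies the square-root-of-AVE comparison. The loadings and the correlation are invented for illustration and are not the values in Tables 3 and 4; the function names are likewise hypothetical.

```python
import math

def composite_reliability(loadings):
    """CR from standardized loadings, with error variance 1 - loading**2 per item."""
    total = sum(loadings)
    error = sum(1 - l ** 2 for l in loadings)
    return total ** 2 / (total ** 2 + error)

def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)

def fornell_larcker_ok(ave_by_construct, correlations):
    """True when sqrt(AVE) of both constructs exceeds every correlation between them.

    correlations maps a (construct_a, construct_b) pair to their correlation.
    """
    return all(
        math.sqrt(ave_by_construct[a]) > abs(r)
        and math.sqrt(ave_by_construct[b]) > abs(r)
        for (a, b), r in correlations.items()
    )

# Hypothetical loadings for two constructs (not taken from the study).
loadings = {"PE": [0.8, 0.8, 0.8], "EE": [0.75, 0.82, 0.79]}
ave = {name: average_variance_extracted(ls) for name, ls in loadings.items()}
cr = {name: composite_reliability(ls) for name, ls in loadings.items()}

for name in loadings:
    print(name, round(cr[name], 3), round(ave[name], 3),
          cr[name] > 0.70 and ave[name] >= 0.50)

print(fornell_larcker_ok(ave, {("PE", "EE"): 0.70}))
```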

The fit indices considered include χ²/df, the Root Mean Square Error of Approximation (RMSEA), the Standardized Root Mean Square Residual (SRMR), the Tucker-Lewis Index (TLI), the Normed Fit Index (NFI), the Non-Normed Fit Index (NNFI), the Comparative Fit Index (CFI), the Goodness of Fit Index (GFI), and the Adjusted Goodness of Fit Index (AGFI). According to established criteria, a model is deemed acceptable when χ²/df falls between 1 and 3, RMSEA and SRMR are below 0.08, and TLI, NFI, NNFI, AGFI, CFI, and GFI all exceed 0.90 [107]. As shown in Table 6, the model presented in this study demonstrated a good fit on these indices.
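The acceptance criteria for the fit indices amount to a simple threshold check, sketched below. The thresholds are the ones stated in the text; the index values in `example_fit` are hypothetical placeholders (Table 6 is not reproduced here), and treating the χ²/df bounds as inclusive is an assumption.

```python
def check_model_fit(fit):
    """Return a pass/fail flag per fit index, using the thresholds given in the text."""
    checks = {"chi2/df": 1 <= fit["chi2/df"] <= 3}      # between 1 and 3 (bounds assumed inclusive)
    for name in ("RMSEA", "SRMR"):
        checks[name] = fit[name] < 0.08                 # badness-of-fit indices: lower is better
    for name in ("TLI", "NFI", "NNFI", "CFI", "GFI", "AGFI"):
        checks[name] = fit[name] > 0.90                 # goodness-of-fit indices: higher is better
    return checks

# Hypothetical values for illustration only, not the study's results.
example_fit = {
    "chi2/df": 1.8, "RMSEA": 0.046, "SRMR": 0.035,
    "TLI": 0.96, "NFI": 0.94, "NNFI": 0.96,
    "CFI": 0.97, "GFI": 0.92, "AGFI": 0.91,
}
result = check_model_fit(example_fit)
print(result)
print(all(result.values()))
```

Returning one flag per index, rather than a single boolean, makes it easy to see which criterion a poorly fitting model fails.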

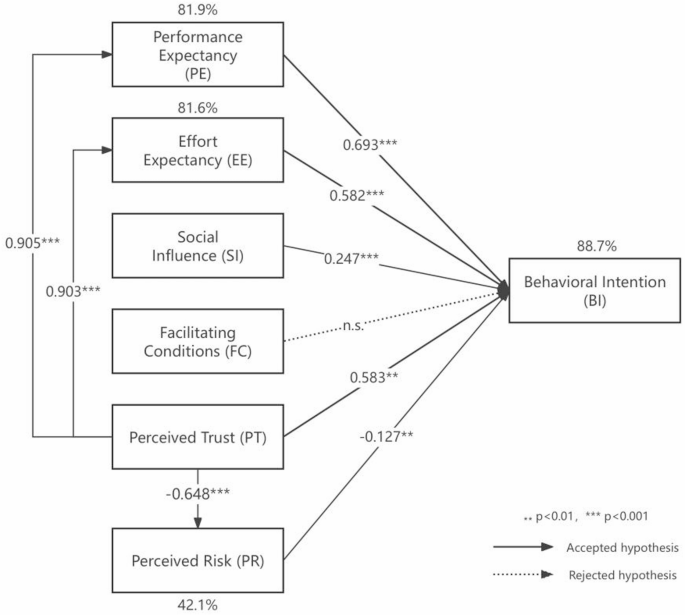

Path analysis and hypothesis testing

The path analysis supported eight of the nine hypotheses; only H4 was rejected. As shown in Table 7 and Fig. 2, the relationships among PE, EE, SI, and BI in the original UTAUT remain significant; H1, H2, and H3 are all supported. PE, EE, and SI had significant positive effects on BI, with coefficients of β = 0.693 (p < 0.001), β = 0.582 (p < 0.001), and β = 0.247 (p < 0.001), respectively. However, FC did not have a significant effect on BI (β = 0.01, p > 0.05), so H4 was rejected. PT had significant positive effects on BI, PE, and EE, with coefficients of β = 0.583 (p < 0.01), β = 0.905 (p < 0.001), and β = 0.903 (p < 0.001), respectively. Conversely, PT negatively affected PR (β = −0.648, p < 0.001); hypotheses H5, H6, H7, and H8 are therefore accepted. PR had a significant negative effect on BI (β = −0.127, p < 0.01), so H9 is supported. Together, PE, EE, SI, PT, and PR accounted for 88.7% of the variance in BI. PT explained 81.9% of the variance in PE, 81.6% in EE, and 42.1% in PR.
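The decision rule behind these results can be made explicit with a small sketch: a hypothesis counts as supported when its path is statistically significant and the sign of the coefficient matches the hypothesized direction. The coefficients and significance flags below are transcribed from the reported results; the data layout and the function name are assumptions made for illustration.

```python
# (hypothesis, path, expected sign, reported beta, significant at the 0.05 level?)
PATHS = [
    ("H1", "PE -> BI", +1,  0.693, True),
    ("H2", "EE -> BI", +1,  0.582, True),
    ("H3", "SI -> BI", +1,  0.247, True),
    ("H4", "FC -> BI", +1,  0.010, False),   # reported as p > 0.05
    ("H5", "PT -> BI", +1,  0.583, True),
    ("H6", "PT -> PE", +1,  0.905, True),
    ("H7", "PT -> EE", +1,  0.903, True),
    ("H8", "PT -> PR", -1, -0.648, True),
    ("H9", "PR -> BI", -1, -0.127, True),
]

def is_supported(expected_sign, beta, significant):
    """Supported only if the path is significant and has the hypothesized sign."""
    return significant and beta * expected_sign > 0

decisions = {h: is_supported(sign, beta, sig) for h, _, sign, beta, sig in PATHS}
supported = [h for h, ok in decisions.items() if ok]
rejected = [h for h, ok in decisions.items() if not ok]

print("supported:", supported)
print("rejected:", rejected)
```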

Discussion

This study extends the UTAUT with two constructs, perceived trust and perceived risk, to investigate ordinary users' behavioral intentions towards AI health assistants. The findings clarify the relationships among the original UTAUT constructs (performance expectancy, effort expectancy, social influence, facilitating conditions, and behavioral intention) and the two extended factors. Overall, the foundational structure of UTAUT proves robust: performance expectancy, effort expectancy, and social influence have positive and significant effects on users' behavioral intention to use this technology. However, the effect of facilitating conditions is not statistically significant. Perceived trust is closely associated with performance expectancy, effort expectancy, and usage intention, and has a significant negative effect on perceived risk. Perceived risk, in turn, negatively influences behavioral intention. The path analysis supported eight hypotheses and rejected one.

Performance expectancy and effort expectancy

The results indicated that PE and EE significantly and positively influenced BI in the context of AI health assistants, with coefficients of β = 0.693 (p < 0.001) for PE and β = 0.582 (p < 0.001) for EE; notably, PE had a larger effect on BI than EE. This suggests that the higher users' expectations of the performance achievable through AI health assistants, the more likely they are to intend to use the system. Similarly, the less effort users expect to need to engage with the system, the stronger their intention to use it. These findings align with most prior research, including studies on AI-based clinical diagnostic decision support systems [68], AI virtual assistants [33], and AI systems for service delivery [64]. However, they contrast with findings on AI systems for organizational decision-making [118]. Specifically, when users perceive that their health management objectives (such as enhancing health knowledge, managing diseases better, or improving lifestyle) can be effectively attained by using AI health assistants, they are likely to be more willing to try and keep using these systems. This positive performance expectancy may bolster users' confidence that engaging with AI health assistants is a worthwhile investment [119]. Furthermore, if users perceive that using an AI health assistant will not require excessive time and energy, or if the system has a user-friendly interface that makes it easy to operate, this may further motivate them to engage with the system. A lower anticipated effort implies that users believe they can derive benefits without expending significant resources (such as time and energy), which in turn enhances their willingness to adopt it [120].

It is also worth noting that, with the random sampling method adopted in this study, the proportion of females is relatively high and the majority of participants have received higher education. The results can therefore also be interpreted from the perspectives of gender and educational background. Regarding gender, the strong predictive power of PE on BI may partly stem from women's specific demands for technical efficacy in health management scenarios. Previous studies have shown that women attach great importance to the accuracy of information and the reliability of results in medical decision-making [121]. This tendency may lead them to evaluate more strictly whether AI health assistants can effectively improve the efficiency of health management, thereby strengthening the link between performance expectancy and the intention to adopt the technology. In terms of educational background, users with higher education tend to make greater use of AI-assisted tools in professional settings. They are therefore better able to understand operational goals and evaluate the functions of AI systems [122]. This cognitive difference may amplify the validity of the performance expectancy indicators among the highly educated group, which would help explain the higher standardized path coefficient. At the same time, a high level of education may moderate the effect of effort expectancy by reducing the perceived complexity of the technology. When users have sufficient technical literacy, they can quickly overcome usage barriers with their existing knowledge, even if the system has a learning curve. As a result, the influence of effort expectancy remains significant but is weaker than that of performance expectancy.

Social influence and facilitating conditions

The findings also indicated that SI had a significant positive effect on BI (β = 0.247, p < 0.001), consistent with previous research [40, 64]. Specifically, when users perceive favorable attitudes and recommendations regarding the use of AI health assistants from people in their social circles (such as family members, friends, colleagues, or other contacts), they are more likely to express an intention to use the system. This may be attributed to the nature of humans as social beings: we often shape our behaviors based on the actions and opinions of others [123]. In particular, if an individual is surrounded by groups that view AI health assistants positively, and these people demonstrate the advantages of using such systems in their daily lives (such as making health consultations more efficient or streamlining medical procedures), the individual is more inclined to regard the use of AI health assistants as beneficial. In addition, social support networks can provide valuable information and practical assistance, further mitigating the perceived risks of adopting a new system [124]. For instance, by discussing how to use AI health assistants effectively, users can acquire tips and advice that enhance their confidence and foster greater acceptance of the system and willingness to use it. Alternatively, social influence may operate by fostering a sense of belonging and social identity [125]. When individuals observe that those around them are using a specific application or technology, they may perceive this behavior as a social norm and feel compelled to participate in order to avoid marginalization or to gain group approval.

Regarding gender, the relatively high proportion of female participants may have strengthened the path effect of social influence. This could be attributed to a tendency among women to rely more on social network support when making health-related decisions [126]. Specifically, women's adoption of healthcare technologies is often embedded in denser networks of social relationships (for example, the role of family health manager or interactions in mother-and-baby communities), which makes them more susceptible to the recommendations of medical professionals or the experiences of friends and relatives when evaluating AI health assistants. The high concentration of educational attainment may also influence how social influence operates. Individuals with higher education levels typically have stronger information screening capabilities, so their responses to social influence may prioritize authoritative professional sources (e.g., conclusions drawn from medical journals or expert consensus). Consequently, the measurement of social influence in this study may primarily capture the mechanism through which professional opinions are adopted.

Unexpectedly, and contrary to previous research [33, 51], FC had no significant effect on BI (β = 0.01, p > 0.05). Nevertheless, our findings are consistent with the observation that, when PE and EE are included in the model, FC appears to be an insignificant predictor of usage intention [127]. Facilitating conditions also seem to exert a more direct influence on actual system use than on behavioral intention [51]. In this study, despite the presence of various supportive environments or resources designed to facilitate user engagement with AI health assistants (such as technical support, usage tutorials, and device compatibility), these factors did not significantly enhance users' intentions to use the systems. This may indicate that users are influenced by a range of factors when deciding whether to use AI health assistants, including personal familiarity with the technology, social influence, performance expectancy, and effort expectancy. Even under favorable facilitating conditions, users may remain hesitant to try or continue using the system if they lack confidence in their own skills or do not perceive adequate social support and positive social influence [128]. This also suggests that users' perceptions of the value of an AI health assistant may not be directly shaped by external supportive conditions. For instance, if users believe that the system provides significant health benefits and is user-friendly, they may be strongly inclined to use it even in the absence of additional supportive measures.

The result may also be attributed to the distinctive characteristics of the technological ecosystem and the developmental stage of AI health assistants. As a relatively new field of application, AI health assistants require further improvement in hardware compatibility. For instance, users may still experience problems such as failed device logins or data synchronization issues during actual operation. These deficiencies can undermine the effectiveness of theoretical facilitating conditions, making it difficult for infrastructure support to translate into user-perceived convenience. In terms of the service support system, there is a gap between the maturity of the supporting services provided by AI health assistants and user expectations. Although companies offer online tutorials and customer service channels, the response speed and problem-solving efficiency of these services may not yet align with those of mature medical IT systems. This is particularly critical for sensitive matters such as privacy data breaches or uncertainties about diagnostic results, where the professionalism and authority of support services may fall short. Such limitations could lead users to perceive facilitating conditions as "formal guarantees" rather than "substantial support," thereby diminishing their overall influence.

Perceived trust and perceived risk

This study confirmed that PT significantly and positively affected BI, PE, and EE in the context of AI health assistants (β = 0.583, p < 0.01; β = 0.905, p < 0.001; β = 0.903, p < 0.001), and negatively affected PR (β = −0.648, p < 0.001). This is similar to most studies based on UTAUT [33, 66, 68, 129]. The results suggest that a higher degree of trust in AI health assistant systems is associated with higher expectations regarding their performance and ease of use, which strengthens users' inclination to use these systems while reducing the risks they perceive in doing so. Specifically, when users trust AI health assistants, they are more likely to believe that such systems can help them achieve their health management objectives (such as providing valuable health information or optimizing medication planning), which further raises their expectations of system performance. Trust may also lower psychological barriers related to the effort needed to use these systems, because it encourages users to invest time and energy in learning to operate the system effectively and integrating it into their daily routines [130]. A high level of trust therefore not only enhances users' confidence in the system's effectiveness but may also reduce the hesitancy and apprehension typically associated with trying new technologies. Trust also directly influences users' intentions to engage with the system: a trusted system is more likely to win user favor because individuals perceive it as a reliable option for long-term use. At the same time, as trust rises, users' perceived risks (such as concerns about privacy breaches and data security) tend to diminish correspondingly. Trust can thus both foster positive attitudes toward AI health assistants and alleviate concerns about potential negative outcomes, further encouraging user engagement.

More importantly, the transparency of AI health systems is a fundamental element in fostering user trust [131]. When users can comprehend how the system generates diagnostic suggestions, understand the data on which health plans are based, and are aware of the limits on the use of their personal information, the "black box" perception associated with technical operations is significantly diminished. This transparency also mitigates perceived risk through a dual mechanism. On the one hand, visualizing decision-making logic (such as illustrating the weight of key symptoms or outlining health trend analysis pathways) enables users to verify the rationality of the system's outputs, which alleviates concerns about potential algorithmic misjudgments. On the other hand, clear disclosure of data policies (including the scope of information collection, storage duration, and third-party sharing rules) allows users to establish a sense of control based on informed consent and reduces fears of privacy breaches. By establishing a "traceable and explainable" service framework, transparent operation directly enhances users' perceptions of technological reliability and encourages them to regard the system as a collaborative partner rather than an enigmatic external technology. When transparency is combined with technical efficacy and robust privacy protection, users' risk assessments tend toward objective facts rather than subjective speculation. Driven by trust in these systems, they are then more likely to embrace and consistently use AI health assistants.

In addition, our findings indicate that PR has a significant negative impact on BI (β = −0.127, p < 0.01). Specifically, when users perceive that using AI health assistants may entail risks or uncertainties, their willingness to engage with the system is markedly diminished. These risks span several dimensions, including privacy protection, information security, and technical reliability. If users are concerned about improper access to or leakage of their personal health information, or are skeptical about the technical performance and accuracy of AI health assistants, such apprehensions may create psychological barriers that deter them from adopting the system. Fears of operational errors, misdiagnoses, or overreliance on AI recommendations at the expense of professional medical advice may also contribute to user hesitancy132. Perceived risk not only influences users’ initial willingness to try these technologies but may also impede their transition from short-term trials to sustained use120. A heightened perception of risk imposes a greater psychological burden on users, which conflicts with their inherent need for security and stability133. Consequently, addressing and mitigating users’ concerns about potential risks is crucial to strengthening their intention to use these systems.
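The role of perceived risk as a channel between trust and intention can be illustrated with a small calculation. The sketch below applies the common product-of-coefficients rule to the standardized path coefficients quoted in this discussion. It is only an illustration: the variable and function names are hypothetical, the result is not a figure reported by the study, the paths running through PE and EE are left out because their coefficients toward BI are not quoted here, and a formal mediation test would normally rely on bootstrapped confidence intervals.

```python
# Standardized path coefficients quoted in the discussion above.
PT_TO_BI = 0.583   # perceived trust -> behavioral intention (direct path)
PT_TO_PR = -0.648  # perceived trust -> perceived risk
PR_TO_BI = -0.127  # perceived risk -> behavioral intention


def indirect_effect(a: float, b: float) -> float:
    """Product-of-coefficients estimate for one mediated path (a then b)."""
    return a * b


# Two negative paths multiply into a positive indirect effect:
# trust lowers risk, and lower risk raises intention.
pt_via_pr = indirect_effect(PT_TO_PR, PR_TO_BI)

# Direct path plus this single mediated path (PE and EE paths are omitted).
direct_plus_pr_path = PT_TO_BI + pt_via_pr

print(f"PT -> PR -> BI: {pt_via_pr:.3f}")
print(f"Direct + PR path: {direct_plus_pr_path:.3f}")
```

Under these assumptions the mediated path is small relative to the direct path, which is in line with the comparatively small coefficient of perceived risk noted in the discussion.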

In terms of gender, the preponderance of female participants may have strengthened the effect of perceived trust on the assessment of technical efficacy. That is, women may exhibit a stronger need for systemic trust when adopting health technologies (such as a demand for data transparency), which may explain the predictive power of perceived trust for performance expectancy and effort expectancy. Specifically, women’s trust in AI health systems may depend more on the empathetic design of the interaction interface (such as humanized expression of health advice) together with verification of technical reliability (such as clinical effectiveness certification). This dual anchoring mechanism means that once trust is established, it may simultaneously raise users’ expectations of technical efficacy and ease of use. However, although women may be more sensitive to privacy leaks, the negative effect of perceived risk on usage intention may have been partially suppressed in this sample, because the high education level of the participants may strengthen a risk compensation mechanism through technical competence, such as installing privacy protection software and regularly checking permission settings. For example, even with privacy concerns, highly educated women may still maintain their willingness to use the system because of the security guarantees provided by the trust mechanism (such as explanations of encryption technology). This may explain the relatively small coefficient of perceived risk. Additionally, in terms of educational background, trust in AI technology among highly educated groups (especially those with STEM backgrounds) may lean toward cognitive trust rather than emotional trust134,135, which means that the perceived trust variable in the model essentially captures users’ rational recognition of the underlying logic of the technology. This mechanism may explain the significant influence of perceived trust on performance expectancy: when users can use professional knowledge to deconstruct the decision tree of an AI diagnosis, technical trust translates directly into a definite judgment of expected efficacy.

Conclusions, limitations and future work

By developing and testing an extended UTAUT model, our study enhances the understanding of general users’ acceptance of AI health assistants and their behavioral intention to use them. This research underscores the importance of understanding individual behavioral intentions by taking into account both the potential benefits and the adverse effects of AI-based health advisory systems. Integrating UTAUT with perceived trust and perceived risk allows a more nuanced discussion of the advantages and disadvantages of AI health assistants, which helps to interpret and predict individuals’ intentions and behaviors when using AI technologies and informs the development of AI health assistants.

This study addresses two key limitations of existing research at the theoretical level. First, most existing studies on the adoption of medical AI systems take the perspective of professionals such as doctors and explore how technical features like algorithm explainability affect doctor-patient collaboration, whereas the distinct cognitive mechanisms of ordinary people as direct users remain to be explored. Second, previous studies often analyzed perceived trust and perceived risk as independent variables, while the effect of perceived trust on perceived risk and on expectations of system functionality still needed to be verified for AI health assistants. Therefore, based on the UTAUT, this study modeled the path from perceived trust to perceived risk and found empirically that perceived trust not only directly strengthens the intention to use but also promotes it indirectly by weakening perceived risk. The study also revealed that perceived trust reinforces performance expectancy and effort expectancy, indicating that users’ confidence in AI health systems positively reshapes their assessment of technical utility. This mechanism helps to expand the role of cognitive variables in the traditional UTAUT model. Overall, these findings offer a new perspective for understanding how ordinary people adopt healthcare AI systems.

The findings of this research can inform more effective strategies and offer insights for developers and related industries seeking to enhance existing systems or launch new ones. System developers and industry investors need to examine the factors influencing the acceptance of AI health assistants, as well as the mechanisms through which they operate. Doing so will promote effective use of the technology and help ensure that the capacity of AI health assistants to improve public health is maximized while user privacy and security are safeguarded. Addressing these issues can bolster public trust in AI health assistants and thereby increase their acceptance and use. For instance, this study found that social influence has a significant positive effect on users’ behavioral intention to use the system. In the context of AI health assistants, social influence is reflected not only in the spread of information about the technology’s practicality but also in the shaping of users’ social identity and sense of group belonging. Developers can consider strengthening this mechanism by building a “health decision-making community”. This could involve embedding a database of real user cases in the system, showcasing successful chronic disease management cases certified by medical experts, and designing a group matching function based on health profiles so that users can connect with groups that share similar health backgrounds. A “family health data sharing” module could also be developed, allowing users to synchronize AI recommendations to family members’ devices, subject to privacy authorization, so that the credibility of the technology grows through natural dissemination within close relationship networks. In marketing, regional health improvement reports could be released jointly with medical institutions, using visualized data to present the actual intervention effects of AI health assistants on specific groups (such as patients with hypertension). By combining endorsement from authoritative institutions with social proof, individual usage behavior can be transformed into collective health action. Building social influence along these three dimensions can extend technology adoption from individual cognition to the social network level, ultimately forming a chain effect running from personal health management through family health collaboration to community health demonstration, and systematically strengthening users’ willingness to adopt and continue using the system.

Additionally, the findings on perceived trust and perceived risk indicate that promoting the application and development of AI health assistants requires a concerted effort to build and strengthen users’ trust. Developers should prioritize improving system transparency, ensuring robust data security and privacy protection, and providing clear user guides and support services. These actions are essential for earning users’ trust, which increases their willingness to use these technologies and alleviates concerns about potential risks. Developers can enhance system transparency and privacy protection through a layered strategy. Technically, explainable artificial intelligence (XAI) can serve as the core of the system. For instance, real-time visual interfaces can transform decision-making processes such as symptom analysis and health assessment into flowcharts or heat maps, and a “decision traceability” feature can let users review the sources of medical knowledge and the confidence level of the algorithms. The design should also cater to different users, giving non-professionals brief explanations (such as “Blood pressure warnings are based on the analysis of data fluctuations over the past 30 days”) while retaining an entry point for professionals to adjust algorithm parameters. For privacy protection, blockchain technology could be used to establish a data sovereignty mechanism: distributed storage separates biometric features from identity information, smart contracts enforce user-customized data access rules (such as allowing only diabetes algorithms to read blood sugar data), and diagnostic data are stored on a consortium chain to ensure auditability and immutability. Transparency also requires institutional support. When users add new devices, the system should automatically generate data usage instructions and request re-authorization. Regular algorithm update reports should also be released to form a trust loop of “technically traceable, data controllable, process traceable”.
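The idea of user-customized data access rules can be made concrete with a minimal sketch. The example below is plain in-memory Python rather than a blockchain or smart contract, and every name in it (`AccessPolicy`, `grant`, `can_read`, the algorithm and data category labels) is a hypothetical choice made for illustration. It shows only the rule logic: access is denied unless the user has granted it, and every decision is logged so that it can be audited later.

```python
from dataclasses import dataclass, field


@dataclass
class AccessPolicy:
    """User-defined rules stating which algorithm may read which data category."""

    allowed: dict[str, set[str]] = field(default_factory=dict)
    audit_log: list[tuple[str, str, bool]] = field(default_factory=list)

    def grant(self, algorithm: str, category: str) -> None:
        """Record that the user allows `algorithm` to read `category`."""
        self.allowed.setdefault(algorithm, set()).add(category)

    def can_read(self, algorithm: str, category: str) -> bool:
        """Check a read request; anything not explicitly granted is denied."""
        permitted = category in self.allowed.get(algorithm, set())
        # Log every decision, allowed or not, to keep access auditable.
        self.audit_log.append((algorithm, category, permitted))
        return permitted


policy = AccessPolicy()
policy.grant("diabetes_model", "blood_glucose")

print(policy.can_read("diabetes_model", "blood_glucose"))  # True
print(policy.can_read("diabetes_model", "heart_rate"))     # False
print(len(policy.audit_log))                               # 2
```

A deny-by-default rule keeps the user in control: adding a new algorithm or device grants nothing until the user explicitly authorizes it, which matches the re-authorization step described above.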

Finally, the findings on facilitating conditions suggest that, in terms of system compatibility, developers can consider collaborating with mainstream smart device manufacturers to promote the standardization of data interfaces, for example by accessing the open API of the national medical data center to synchronize data across devices. An automatic detection module could initiate adaptation optimization when it identifies a user’s device and use visual indicators (such as a “certified adaptation” prompt) to make the improvement in compatibility visible to users. The service support system could adopt a three-level response: routine problems are handled by AI, privacy issues are transferred to professional teams, and diagnostic doubts are referred directly to experts at medical institutions. A “risk-sharing” function could also be added: when data synchronization fails, the system not only fixes the problem but also offers compensation such as free remote consultations, converting service commitments into support for trust. In terms of user capability development, a dynamic training system could be built. For example, customized content could be pushed based on user operation habits (such as how often functions are used). Users with lower digital skills could be offered virtual tutorials with simulated consultations, while users with more technical knowledge could be given access to algorithm flow diagrams to deepen their technical understanding. Personalized benefit reports could also be developed, using specific data to present the benefits of health management.
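The three-level response described above amounts to a simple routing rule, sketched below. The issue labels (`"routine"`, `"privacy"`, `"diagnostic"`) and the `Handler` names are assumptions made for illustration, since the text does not define a taxonomy of support requests. Unknown issue types raise an error rather than being silently assigned to a tier.

```python
from enum import Enum


class Handler(Enum):
    """The three support tiers named in the text."""

    AI_ASSISTANT = "ai_assistant"
    PRIVACY_TEAM = "privacy_team"
    MEDICAL_EXPERT = "medical_expert"


# Hypothetical issue categories mapped to the tier that should handle them.
ROUTES = {
    "routine": Handler.AI_ASSISTANT,
    "privacy": Handler.PRIVACY_TEAM,
    "diagnostic": Handler.MEDICAL_EXPERT,
}


def route(issue_type: str) -> Handler:
    """Return the support tier for an issue type, rejecting unknown types."""
    try:
        return ROUTES[issue_type]
    except KeyError:
        raise ValueError(f"Unknown issue type: {issue_type!r}") from None


print(route("routine").value)     # ai_assistant
print(route("privacy").value)     # privacy_team
print(route("diagnostic").value)  # medical_expert
```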

Although this study has drawn several meaningful conclusions, certain limitations should be acknowledged. First, regarding demographic characteristics, the random sampling method resulted in an unbalanced proportion of men and women in the sample. This imbalance may introduce bias related to gender differences into the evaluation of results33. Furthermore, approximately 80% of participants held a bachelor’s degree or higher, a share significantly greater than that in the overall population60. The age distribution was also limited, as only four participants were aged 18 years or under. Collectively, these factors constrain our ability to analyze comprehensively how demographic variables differ across levels and what moderating effects they have. In addition, this study classifies user experience into two broad categories, “used” and “not used.” This simple classification does not adequately capture the nuanced differences in user experience across stages. Future research should consider more detailed classification criteria, such as segmenting usage duration into intervals of less than one month, 1 to 3 months, 3 to 6 months, and so forth. Such an approach would enable a more precise assessment of how usage experience influences acceptance. Furthermore, key variables that were not thoroughly examined in this study, such as financial status and occupational background, may also significantly affect the acceptance of AI health assistants. Finally, in terms of the theoretical framework, although the UTAUT effectively supports the exploration of basic adoption mechanisms, this study does not incorporate the elements specific to personal consumption scenarios that are included in UTAUT2 (such as Price Value and Habit). As AI health assistants gradually enter the commercial stage, future research could integrate UTAUT2, focusing in particular on the moderating effects of Habit and Price Value in paid service scenarios. Future research could also refine personal habits into the interaction between technology use inertia and health management inertia. Moreover, when the industry reaches maturity, a dynamic adoption model could be established to track longitudinal changes in the weights of the variables as users move from free trials to paid subscriptions. These extensions would not only enhance theoretical explanatory power but also provide differentiated evidence to support product strategies at different stages of development.
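The finer classification of usage experience suggested above could be coded as follows. This is a sketch under stated assumptions: the function name `usage_segment` is hypothetical, the intervals are treated as half-open (so exactly one month falls in “1 to 3 months”), and the final “6 months or more” category is one possible reading of “and so forth” rather than something specified in the text.

```python
def usage_segment(months_used: float) -> str:
    """Map usage duration in months to an ordered experience category."""
    if months_used < 0:
        raise ValueError("months_used must be non-negative")
    if months_used < 1:
        return "less than 1 month"
    if months_used < 3:
        return "1 to 3 months"
    if months_used < 6:
        return "3 to 6 months"
    # Assumed catch-all category; the text leaves later intervals open.
    return "6 months or more"


for months in (0.5, 2, 4, 12):
    print(months, "->", usage_segment(months))
```

Coding experience as ordered categories of this kind, rather than a binary used/not used flag, would let usage duration enter the model as a moderator with more than two levels.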

Data availability

The datasets generated and/or analysed during the current study are not publicly available due to the protection of participants’ privacy but are available from the corresponding author on reasonable request.

References

Dousay, T. A. & Hall, C. Alexa, tell me about using a virtual assistant in the classroom. In Proceedings of EdMedia: World Conference on Educational Media and Technology 1413–1419. https://www.learntechlib.org/primary/p/184359/ (Association for the Advancement of Computing in Education (AACE), 2018).

Song, Y. W. User Acceptance of an Artificial Intelligence (AI) Virtual Assistant: An Extension of the Technology Acceptance Model. Doctoral Dissertation (2019).

Arora, S., Athavale, V. A. & Himanshu Maggu, A. Artificial intelligence and virtual assistant—working model. In Mobile Radio Communications and 5G Networks. Lecture Notes in Networks and Systems, vol 140 (eds Marriwala, N. et al.) https://doi.org/10.1007/978-981-15-7130-5_12 (Springer, 2021).

Zhang, S., Meng, Z., Chen, B., Yang, X. & Zhao, X. Motivation, social emotion, and the acceptance of artificial intelligence virtual assistants—Trust-based mediating effects. Front. Psychol. 12, 95. https://doi.org/10.3389/fpsyg.2021.728495 (2021).

Doraiswamy, P. M. et al. Empowering 8 billion minds: enabling better mental health for all via the ethical adoption of technologies. NAM Perspect. https://doi.org/10.31478/201910b (2019).

Murphy, K. et al. Artificial intelligence for good health: a scoping review of the ethics literature. BMC Med. Ethics 22, 14. https://doi.org/10.1186/s12910-021-00577-8 (2021).

Kashive, N., Powale, L. & Kashive, K. Understanding user perception toward artificial intelligence (AI) enabled e-learning. Int. J. Inform. Learn. Technol. 38, 1–19. https://doi.org/10.1108/IJILT-05-2020-0090 (2021).

Kaye, S. A., Lewis, I., Forward, S. & Delhomme, P. A priori acceptance of highly automated cars in Australia, France, and Sweden: A theoretically-informed investigation guided by the TPB and UTAUT. Accid. Anal. Prev. 137, 105441. https://doi.org/10.1016/j.aap.2020.105441 (2020).

Becker, D. Possibilities to improve online mental health treatment: recommendations for future research and developments. In Advances in Information and Communication Networks. FICC 2018. Advances in Intelligent Systems and Computing, vol 886 (eds Arai, K. et al.) https://doi.org/10.1007/978-3-030-03402-3_8 (Springer, 2019).

Hirani, R. et al. Artificial intelligence and healthcare: a journey through history, present innovations, and future possibilities. Life 14, 557 (2024).

Anchitaalagammai, J. V. et al. Predictive health assistant: AI-driven disease projection tool. In 2024 11th International Conference on Reliability, Infocom Technologies and Optimization (Trends and Future Directions) (ICRITO), Noida, India 1–6. https://doi.org/10.1109/ICRITO61523.2024.10522266 (2024).

Hauser-Ulrich, S., Künzli, H., Meier-Peterhans, D. & Kowatsch, T. A smartphone-based health care chatbot to promote self-management of chronic pain (SELMA): pilot randomized controlled trial. JMIR Mhealth Uhealth 8, e15806. https://doi.org/10.2196/15806 (2020).

Divya, S., Indumathi, V., Ishwarya, S., Priyasankari, M. & Devi, S. K. A self-diagnosis medical chatbot using artificial intelligence. J. Web Dev. Web Des. 3, 1–7 (2018).

Muneer, S. et al. Explainable AI-driven chatbot system for heart disease prediction using machine learning. Int. J. Adv. Comput. Sci. Appl. 15, 1 (2024).

Maeda, E. et al. Promoting fertility awareness and preconception health using a chatbot: a randomized controlled trial. Reprod. Biomed. Online 41, 1133–1143. https://doi.org/10.1016/j.rbmo.2020.09.006 (2020).

Davis, C. R., Murphy, K. J., Curtis, R. G. & Maher, C. A. A process evaluation examining the performance, adherence, and acceptability of a physical activity and diet artificial intelligence virtual health assistant. Int. J. Environ. Res. Public Health 17(23), 9137. https://doi.org/10.3390/ijerph17239137 (2020).

Fitzpatrick, K. K., Darcy, A. & Vierhile, M. Delivering cognitive behavior therapy to young adults with symptoms of depression and anxiety using a fully automated conversational agent (Woebot): A randomized controlled trial. JMIR Ment. Health 4, e19. https://doi.org/10.2196/mental.7785 (2017).

Donadello, I. & Dragoni, M. AI-enabled persuasive personal health assistant. Social Netw. Anal. Min. 12, 106. https://doi.org/10.1007/s13278-022-00935-3 (2022).

Chatterjee, A., Prinz, A., Gerdes, M. & Martinez, S. Digital interventions on healthy lifestyle management: systematic review. J. Med. Internet Res. 23, e26931. https://doi.org/10.2196/26931 (2021).

Davis, C. R., Murphy, K. J., Curtis, R. G. & Maher, C. A. A process evaluation examining the performance, adherence, and acceptability of a physical activity and diet artificial intelligence virtual health assistant. Int. J. Environ. Res. Public Health 17(23), 9137. https://doi.org/10.1016/B978-0-12-818438-7.00002-2 (2020).

Melnyk, B. M. et al. Interventions to improve mental health, well-being, physical health, and lifestyle behaviors in physicians and nurses: a systematic review. Am. J. Health Promotion 34, 929–941 (2020).

Lee, D. & Yoon, S. N. Application of artificial intelligence-based technologies in the healthcare industry: opportunities and challenges. Int. J. Environ. Res. Public Health 18, 1 (2021).

Gao, X., He, P., Zhou, Y. & Qin, X. Artificial intelligence applications in smart healthcare: a survey. Future Internet 16, 1 (2024).

Laker, B. & Currell, E. ChatGPT: a novel AI assistant for healthcare messaging—a commentary on its potential in addressing patient queries and reducing clinician burnout. BMJ Lead. 8, 147. https://doi.org/10.1136/leader-2023-000844 (2024).

Dragoni, M., Rospocher, M., Bailoni, T., Maimone, R. & Eccher, C. Semantic technologies for healthy lifestyle monitoring. In The Semantic Web–ISWC 2018. ISWC 2018. Lecture Notes in Computer Science, vol 11137 (eds Vrandečić, D. et al.) https://doi.org/10.1007/978-3-030-00668-6_19 (Springer, 2018).

Babel, A., Taneja, R., Mondello Malvestiti, F., Monaco, A. & Donde, S. Artificial intelligence solutions to increase medication adherence in patients with non-communicable diseases. Front. Digit. Health 3, 69. https://doi.org/10.3389/fdgth.2021.669869 (2021).

Bickmore, T. W. et al. Patient and consumer safety risks when using conversational assistants for medical information: an observational study of Siri, Alexa, and Google assistant. J. Med. Internet Res. 20, e11510. https://doi.org/10.2196/11510 (2018).

Bulla, C., Parushetti, C., Teli, A., Aski, S. & Koppad, S. A review of AI based medical assistant chatbot. Res. Appl. Web Dev. Des. 3(2), 1–14. https://doi.org/10.5281/zenodo.3902215 (2020).

Shevtsova, D. et al. Trust in and acceptance of artificial intelligence applications in medicine: mixed methods study. JMIR Hum. Factors 11, e47031. https://doi.org/10.2196/47031 (2024).

Gerke, S., Minssen, T. & Cohen, G. Ethical and legal challenges of artificial intelligence-driven healthcare. In Artificial Intelligence in Healthcare 295–336 (Academic Press, 2020).

Hlávka, J. P. Security, privacy, and information-sharing aspects of healthcare artificial intelligence. In Artificial Intelligence in Healthcare 235–270 (Academic Press, 2020).

Rigby, M. J. Ethical dimensions of using artificial intelligence in health care. AMA J. Ethics 21, 121–124. https://doi.org/10.1001/amajethics.2019.121 (2019).

Xiong, Y., Shi, Y., Pu, Q. & Liu, N. More trust or more risk? User acceptance of artificial intelligence virtual assistant. Hum. Factors Ergon. Manuf. Serv. Ind. 34, 190–205. https://doi.org/10.1002/hfm.21020 (2024).

Ostrom, A. L., Fotheringham, D. & Bitner, M. J. In Handbook of Service Science, Vol. 2, 77–103 (eds Maglio, P. P.) (Springer, 2019).

Kelly, S., Kaye, S. A. & Oviedo-Trespalacios, O. What factors contribute to the acceptance of artificial intelligence? A systematic review. Telematics Inform. 77, 101925. https://doi.org/10.1016/j.tele.2022.101925 (2023).

Davis, F. D. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 13, 319–340. https://doi.org/10.2307/249008 (1989).

Kirlidog, M. & Kaynak, A. Technology acceptance model and determinants of technology rejection. Int. J. Inform. Syst. Social Change 2, 1–12. https://doi.org/10.4018/jissc.2011100101 (2011).

Zhan, X., Abdi, N., Seymour, W. & Such, J. Healthcare voice AI assistants: factors influencing trust and intention to use. Proc. ACM Hum.-Comput. Interact. 8(62). https://doi.org/10.1145/3637339 (2024).

Alhashmi, S. F. S. et al. Proceedings of the International Conference on Advanced Intelligent Systems and Informatics (eds Ella Hassanien, A. et al.) 393–405 (Springer, 2019).

Lin, H. C., Ho, C. F. & Yang, H. Understanding adoption of artificial intelligence-enabled Language e-learning system: an empirical study of UTAUT model. Int. J. Mob. Learn. Organ. 16, 74–94. https://doi.org/10.1504/IJMLO.2022.119966 (2021).

So, S., Ismail, M. R. & Jaafar, S. Exploring acceptance of artificial intelligence amongst healthcare personnel: a case in a private medical centre. Int. J. Adv. Eng. Manag. 3, 56–65 (2021).

van Bussel, M. J. P., Odekerken–Schröder, G. J., Ou, C., Swart, R. R. & Jacobs, M. J. G. Analyzing the determinants to accept a virtual assistant and use cases among cancer patients: a mixed methods study. BMC Health Serv. Res. 22, 890. https://doi.org/10.1186/s12913-022-08189-7 (2022).

Mcknight, D. H., Carter, M., Thatcher, J. B. & Clay, P. F. Trust in a specific technology: an investigation of its components and measures. ACM Trans. Manag. Inf. Syst. 2, 2. https://doi.org/10.1145/1985347.1985353 (2011).

Glikson, E. & Woolley, A. W. Human trust in artificial intelligence: review of empirical research. Acad. Manag. Ann. 14, 627–660. https://doi.org/10.5465/annals.2018.0057 (2020).

Dwivedi, Y. K., Rana, N. P., Jeyaraj, A., Clement, M. & Williams, M. D. Re-examining the unified theory of acceptance and use of technology (UTAUT): towards a revised theoretical model. Inf. Syst. Front. 21, 719–734. https://doi.org/10.1007/s10796-017-9774-y (2019).

Liao, C., Palvia, P. & Chen, J. L. Information technology adoption behavior life cycle: toward a technology continuance theory (TCT). Int. J. Inf. Manag. 29, 309–320. https://doi.org/10.1016/j.ijinfomgt.2009.03.004 (2009).

Pillai, R. & Sivathanu, B. Adoption of AI-based chatbots for hospitality and tourism. Int. J. Contemp. Hospitality Manage. 32, 3199–3226. https://doi.org/10.1108/IJCHM-04-2020-0259 (2020).

Ajzen, I. & Fishbein, M. Attitude-behavior relations: A theoretical analysis and review of empirical research. Psychol. Bull. 84, 888–918. https://doi.org/10.1037/0033-2909.84.5.888 (1977).

Ajzen, I. The Theory of planned behavior. In Organizational Behavior and Human Decision Processes (1991).

Rogers, E. M., Singhal, A. & Quinlan, M. M. An Integrated Approach to Communication Theory and Research 432–448 (Routledge, 2014).

Venkatesh, V., Morris, M. G., Davis, G. B. & Davis, F. D. User acceptance of information technology: toward a unified view. MIS Q. 27, 425–478. https://doi.org/10.2307/30036540 (2003).

Venkatesh, V., Thong, J. Y. & Xu, X. Consumer acceptance and use of information technology: extending the unified theory of acceptance and use of technology. MIS Q. 1, 157–178 (2012).

Williams, M. D., Rana, N. P. & Dwivedi, Y. K. The unified theory of acceptance and use of technology (UTAUT): a literature review. J. Enterp. Inf. Manag. 28, 443–488. https://doi.org/10.1108/JEIM-09-2014-0088 (2015).

Xue, L., Rashid, A. M. & Ouyang, S. The unified theory of acceptance and use of technology (UTAUT) in higher education: A systematic review. SAGE Open 2024. https://doi.org/10.1177/21582440241229570 (2024).

Abeysekera, K., Jayasundara, C. & Withanaarachchi, A. Exploring digital library adoption intention through UTAUT: A systematic review. J. Desk Res. Rev. Anal. 2, 1 (2024).

Ronaghi, M. H. & Forouharfar, A. A contextualized study of the usage of the internet of things (IoTs) in smart farming in a typical middle Eastern country within the context of unified theory of acceptance and use of technology model (UTAUT). Technol. Soc. 63, 101415. https://doi.org/10.1016/j.techsoc.2020.101415 (2020).

Venkatesh, V. Adoption and use of AI tools: a research agenda grounded in UTAUT. Ann. Oper. Res. 308, 641–652. https://doi.org/10.1007/s10479-020-03918-9 (2022).

Dash, A. & Sahoo, A. K. Exploring patient’s intention towards e-health consultation using an extended UTAUT model. J. Enabling Technol. 16, 266–279. https://doi.org/10.1108/JET-08-2021-0042 (2022).

Zeebaree, M., Agoyi, M. & Aqel, M. Sustainable adoption of e-government from the UTAUT perspective. Sustainability 14, 1 (2022).

Jahanshahi, D., Tabibi, Z. & van Wee, B. Factors influencing the acceptance and use of a bicycle sharing system: applying an extended unified theory of acceptance and use of technology (UTAUT).Case Stud.transp。政策。8, 1212–1223.https://doi.org/10.1016/j.cstp.2020.08.002(2020)。文章

一个 Google Scholar一个 Tian, W., Ge, J., Zhao, Y. & Zheng, X. AI chatbots in Chinese higher education: adoption, perception, and influence among graduate students—an integrated analysis utilizing UTAUT and ECM models.正面。

Psychol。15 , 549.https://doi.org/10.3389/fpsyg.2024.1268549(2024)。文章

一个 Google Scholar一个 Ali, K. & Freimann, K.Applying the Technology Acceptance Model to AI Decisions in the Swedish Telecom Industry

(2021)。Gao, B. & Huang, L. Understanding interactive user behavior in smart media content service: an integration of TAM and smart service belief factors.Heliyon

5, 983. https://doi.org/10.1016/j.heliyon.2019.e02983(2019)。文章一个

Google Scholar一个 Gursoy, D., Chi, O. H., Lu, L. & Nunkoo, R. Consumers acceptance of artificially intelligent (AI) device use in service delivery.int。

J. Inf.管理。 49, 157–169.https://doi.org/10.1016/j.ijinfomgt.2019.03.008(2019)。

文章一个 Google Scholar一个

Li, K. Determinants of college students’ actual use of AI-based systems: an extension of the technology acceptance model.可持续性 15, 1 (2023).

Fan, W., Liu, J., Zhu, S. & Pardalos, P. M. Investigating the impacting factors for the healthcare professionals to adopt artificial intelligence-based medical diagnosis support system (AIMDSS).安。Oper.res。 294, 567–592.https://doi.org/10.1007/s10479-018-2818-y(2020)。

文章一个 Google Scholar一个

Lin, H. C., Tu, Y. F., Hwang, G. J. & Huang, H. From precision education to precision medicine factors affecting medical staffs intention to learn to use AI applications in hospitals. Educational Technol. Soc. 24, 123–137 (2021).

Prakash, A. V. & Das, S. Medical practitioner’s adoption of intelligent clinical diagnostic decision support systems: A mixed-methods study. Inf. Manage. 58, 103524. https://doi.org/10.1016/j.im.2021.103524 (2021).

Zarifis, A., Kawalek, P. & Azadegan, A. Evaluating if trust and personal information privacy concerns are barriers to using health insurance that explicitly utilizes AI. J. Internet Commer. 20, 66–83. https://doi.org/10.1080/15332861.2020.1832817 (2021).

Choi, Y. A study of employee acceptance of artificial intelligence technology. Eur. J. Manage. Bus. Econ. 30, 318–330. https://doi.org/10.1108/EJMBE-06-2020-0158 (2021).

Zarifis, A., Kawalek, P. & Azadegan, A. Evaluating if trust and personal information privacy concerns are barriers to using health insurance that explicitly utilizes AI. J. Internet Commer. 20, 66–83. https://doi.org/10.1080/15332861.2020.1832817 (2021).

Bansal, G., Zahedi, F. M. & Gefen, D. The impact of personal dispositions on information sensitivity, privacy concern and trust in disclosing health information online. Decis. Support Syst. 49, 138–150. https://doi.org/10.1016/j.dss.2010.01.010 (2010).

Liu, K. & Tao, D. The roles of trust, personalization, loss of privacy, and anthropomorphism in public acceptance of smart healthcare services. Comput. Hum. Behav. 127, 107026. https://doi.org/10.1016/j.chb.2021.107026 (2022).

Guo, X., Zhang, X. & Sun, Y. The privacy–personalization paradox in mHealth services acceptance of different age groups. Electron. Commer. Res. Appl. 16, 55–65. https://doi.org/10.1016/j.elerap.2015.11.001 (2016).

Choung, H., David, P. & Ross, A. Trust in AI and its role in the acceptance of AI technologies. Int. J. Human–Computer Interact. 39, 1727–1739. https://doi.org/10.1080/10447318.2022.2050543 (2023).

Lancelot Miltgen, C., Popovič, A. & Oliveira, T. Determinants of end-user acceptance of biometrics: integrating the big 3 of technology acceptance with privacy context. Decis. Support Syst. 56, 103–114. https://doi.org/10.1016/j.dss.2013.05.010 (2013).

Al-Sharafi, M. A. et al. Generation Z use of artificial intelligence products and its impact on environmental sustainability: A cross-cultural comparison. Comput. Hum. Behav. 143, 107708. https://doi.org/10.1016/j.chb.2023.107708 (2023).

Khechine, H., Lakhal, S. & Ndjambou, P. A meta-analysis of the UTAUT model: eleven years later. Can. J. Administrative Sci. 33, 138–152. https://doi.org/10.1002/cjas.1381 (2016).

Andrews, J. E., Ward, H. & Yoon, J. UTAUT as a model for Understanding intention to adopt AI and related technologies among librarians. J. Acad. Librariansh. 47, 102437. https://doi.org/10.1016/j.acalib.2021.102437 (2021).

Chatterjee, S., Rana, N. P., Khorana, S., Mikalef, P. & Sharma, A. Assessing organizational users’ intentions and behavior to AI integrated CRM systems: a meta-UTAUT approach. Inform. Syst. Front. 25, 1299–1313. https://doi.org/10.1007/s10796-021-10181-1 (2023).

Sharma, P., Taneja, S., Kumar, P., Özen, E. & Singh, A. Application of the UTAUT model toward individual acceptance: emerging trends in artificial intelligence-based banking services. Int. J. Electron. Finance. 13, 352–366. https://doi.org/10.1504/IJEF.2024.139584 (2024).

Nordhoff, S. et al. Using the UTAUT2 model to explain public acceptance of conditionally automated (L3) cars: A questionnaire study among 9,118 car drivers from eight European countries. Transp. Res. Part F: Traffic Psychol. Behav. 74, 280–297. https://doi.org/10.1016/j.trf.2020.07.015 (2020).

Agarwal, R. & Prasad, J. The antecedents and consequents of user perceptions in information technology adoption. Decis. Support Syst. 22, 15–29. https://doi.org/10.1016/S0167-9236(97)00006-7 (1998).

Yakubu, M. N., David, N. & Abubakar, N. H. Students’ behavioural intention to use content generative AI for learning and research: A UTAUT theoretical perspective. Educ. Inform. Technol. https://doi.org/10.1007/s10639-025-13441-8 (2025).

Cheng, M., Li, X. & Xu, J. Promoting healthcare workers’ adoption intention of artificial-intelligence-assisted diagnosis and treatment: the chain mediation of social influence and human–computer trust. Int. J. Environ. Res. Public Health. 19, 1 (2022).

Kidwell, B. & Jewell, R. D. An examination of perceived behavioral control: internal and external influences on intention. Psychol. Mark. 20, 625–642. https://doi.org/10.1002/mar.10089 (2003).

Sichone, J., Milano, R. J. & Kimea, A. J. The influence of facilitating conditions, perceived benefits, and perceived risk on intention to adopt e-filing in Tanzania. Bus. Manage. Rev. 20, 50–59 (2017).

Vimalkumar, M., Sharma, S. K., Singh, J. B. & Dwivedi, Y. K. ‘Okay Google, what about my privacy?’: user’s privacy perceptions and acceptance of voice based digital assistants. Comput. Hum. Behav. 120, 106763. https://doi.org/10.1016/j.chb.2021.106763 (2021).

Muhammad Sohaib, Z., Zunaina, A., Arshma, M. & Muhammad, A. Determining behavioural intention to use artificial intelligence in the hospitality sector of Pakistan: an application of UTAUT model. J. Tourism Hospitality Serv. Industries Res. 4, 1–21. https://doi.org/10.52461/jths.v4i01.3041 (2024).

Lee, J. D. & See, K. A. Trust in automation: designing for appropriate reliance. Hum. Factors. 46, 50–80 (2004).

Alalwan, A. A., Dwivedi, Y. K. & Rana, N. P. Factors influencing adoption of mobile banking by Jordanian bank customers: extending UTAUT2 with trust. Int. J. Inf. Manage. 37, 99–110. https://doi.org/10.1016/j.ijinfomgt.2017.01.002 (2017).

Söllner, M., Hoffmann, A. & Leimeister, J. M. Why different trust relationships matter for information systems users. Eur. J. Inform. Syst. 25, 274–287. https://doi.org/10.1057/ejis.2015.17 (2016).

Faqih, K. M. S. Internet shopping in the Covid-19 era: investigating the role of perceived risk, anxiety, gender, culture, and trust in the consumers’ purchasing behavior from a developing country context. Technol. Soc. 70, 101992. https://doi.org/10.1016/j.techsoc.2022.101992 (2022).

Al-Gahtani, S. S. Modeling the electronic transactions acceptance using an extended technology acceptance model. Appl. Comput. Inf. 9, 47–77. https://doi.org/10.1016/j.aci.2009.04.001 (2011).

Zhou, T. An empirical examination of initial trust in mobile payment. Wireless Pers. Commun. 77, 1519–1531. https://doi.org/10.1007/s11277-013-1596-8 (2014).

Lee, J. H. & Song, C. H. Effects of trust and perceived risk on user acceptance of a new technology service. Social Behav. Personality. 41, 587–597. https://doi.org/10.2224/sbp.2013.41.4.587 (2013).

Seo, K. H. & Lee, J. H. The emergence of service robots at restaurants: integrating trust, perceived risk, and satisfaction. Sustainability 13, 4431 (2021).

Namahoot, K. S. & Laohavichien, T. Assessing the intentions to use internet banking. Int. J. Bank. Mark. 36, 256–276. https://doi.org/10.1108/IJBM-11-2016-0159 (2018).

Kesharwani, A. & Singh Bisht, S. The impact of trust and perceived risk on internet banking adoption in India. Int. J. Bank. Mark. 30, 303–322. https://doi.org/10.1108/02652321211236923 (2012).

Chao, C. Y., Chang, T. C., Wu, H. C., Lin, Y. S. & Chen, P. C. The interrelationship between intelligent agents’ characteristics and users’ intention in a search engine by making beliefs and perceived risks mediators. Comput. Hum. Behav. 64, 117–125. https://doi.org/10.1016/j.chb.2016.06.031 (2016).

Schwesig, R., Brich, I., Buder, J., Huff, M. & Said, N. Using artificial intelligence (AI)? Risk and opportunity perception of AI predict People’s willingness to use AI. J. Risk Res. 26, 1053–1084. https://doi.org/10.1080/13669877.2023.2249927 (2023).

Udo, G. J., Bagchi, K. K. & Kirs, P. J. An assessment of customers’ e-service quality perception, satisfaction and intention. Int. J. Inf. Manage. 30, 481–492. https://doi.org/10.1016/j.ijinfomgt.2010.03.005 (2010).

Kamal, S. A., Shafiq, M. & Kakria, P. Investigating acceptance of telemedicine services through an extended technology acceptance model (TAM). Technol. Soc. 60, 101212. https://doi.org/10.1016/j.techsoc.2019.101212 (2020).

Hofstede, G. Culture’s Consequences: International Differences in Work-Related Values, Vol. 5 (SAGE, 1984).

Byrne, B. M. Structural equation modeling with AMOS, EQS, and LISREL: Comparative approaches to testing for the factorial validity of a measuring instrument. Int. J. Test. 1, 55–86 (2001).

Lenzner, T., Kaczmirek, L. & Lenzner, A. Cognitive burden of survey questions and response times: A psycholinguistic experiment. Appl. Cogn. Psychol. 24, 1003–1020. https://doi.org/10.1002/acp.1602 (2010).

Hair, J. F., Black, W. C., Babin, B. J. & Anderson, R. E. Advanced diagnostics for multiple regression: A supplement to multivariate data analysis. In Advanced Diagnostics for Multiple Regression: A Supplement to Multivariate Data Analysis (2010).

Hair, J. F. Multivariate Data Analysis (2009).

Bagozzi, R. P. Structural equation models are modelling tools with many ambiguities: comments acknowledging the need for caution and humility in their use. J. Consumer Psychol. 20, 208–214. https://doi.org/10.1016/j.jcps.2010.03.001 (2010).

Chin, W. W. Commentary: issues and opinion on structural equation modeling. MIS Q. 22, 7–16 (1998).

Nachtigall, C., Kroehne, U., Funke, F. & Steyer, R. Pros and cons of structural equation modeling. Methods Psychol. Res. Online. 8, 1–22 (2003).

Dash, G. & Paul, J. CB-SEM vs PLS-SEM methods for research in social sciences and technology forecasting. Technol. Forecast. Soc. Chang. 173, 121092. https://doi.org/10.1016/j.techfore.2021.121092 (2021).

Hair, J. F., Matthews, L. M., Matthews, R. L. & Sarstedt, M. PLS-SEM or CB-SEM: updated guidelines on which method to use. Int. J. Multivar. Data Anal. 1, 107–123. https://doi.org/10.1504/IJMDA.2017.087624 (2017).

Tabachnick, B. G., Fidell, L. S. & Ullman, J. B. Using Multivariate Statistics, Vol. 5 (Pearson, 2007).

Fornell, C. & Larcker, D. F. Evaluating structural equation models with unobservable variables and measurement error. J. Mark. Res. 18, 39–50. https://doi.org/10.1177/002224378101800104 (1981).

Kline, P. Handbook of Psychological Testing (Routledge, 2013).

Li, W. A study on factors influencing designers’ behavioral intention in using AI-generated content for assisted design: perceived anxiety, perceived risk, and UTAUT. Int. J. Hum. Comput. Interact. 41, 1064–1077. https://doi.org/10.1080/10447318.2024.2310354 (2025).

Cao, G., Duan, Y., Edwards, J. S. & Dwivedi, Y. K. Understanding managers’ attitudes and behavioral intentions towards using artificial intelligence for organizational decision-making. Technovation 106, 102312. https://doi.org/10.1016/j.technovation.2021.102312 (2021).

Arshad, S. Z., Zhou, J., Bridon, C., Chen, F. & Wang, Y. Proceedings of the Annual Meeting of the Australian Special Interest Group for Computer Human Interaction 352–360 (Association for Computing Machinery, 2015).

Frederiks, E. R., Stenner, K. & Hobman, E. V. Household energy use: applying behavioural economics to understand consumer decision-making and behaviour. Renew. Sustain. Energy Rev. 41, 1385–1394. https://doi.org/10.1016/j.rser.2014.09.026 (2015).

Brown, J. B., Carroll, J., Boon, H. & Marmoreo, J. Women’s decision-making about their health care: views over the life cycle. Patient Educ. Couns. 48, 225–231. https://doi.org/10.1016/S0738-3991(02)00175-1 (2002).

McGrath, C., Cerratto Pargman, T., Juth, N. & Palmgren, P. J. University teachers’ perceptions of responsibility and artificial intelligence in higher education—An experimental philosophical study. Computers Education: Artif. Intell. 4, 100139. https://doi.org/10.1016/j.caeai.2023.100139 (2023).

Fiske, S. T. Social Beings: Core Motives in Social Psychology (Wiley, 2018).

Whittaker, J. K. & Garbarino, J. Social Support Networks: Informal Helping in the Human Services (Transaction Publishers, 1983).

Hogg, M. A. Individual Self, Relational Self, Collective Self 123–143 (Psychology Press, 2015).

Hether, H. J., Murphy, S. T. & Valente, T. W. It’s better to give than to receive: the role of social support, trust, and participation on health-related social networking sites. J. Health Communication. 19, 1424–1439 (2014).

Chong, A. Y. L. Predicting m-commerce adoption determinants: A neural network approach. Expert Syst.