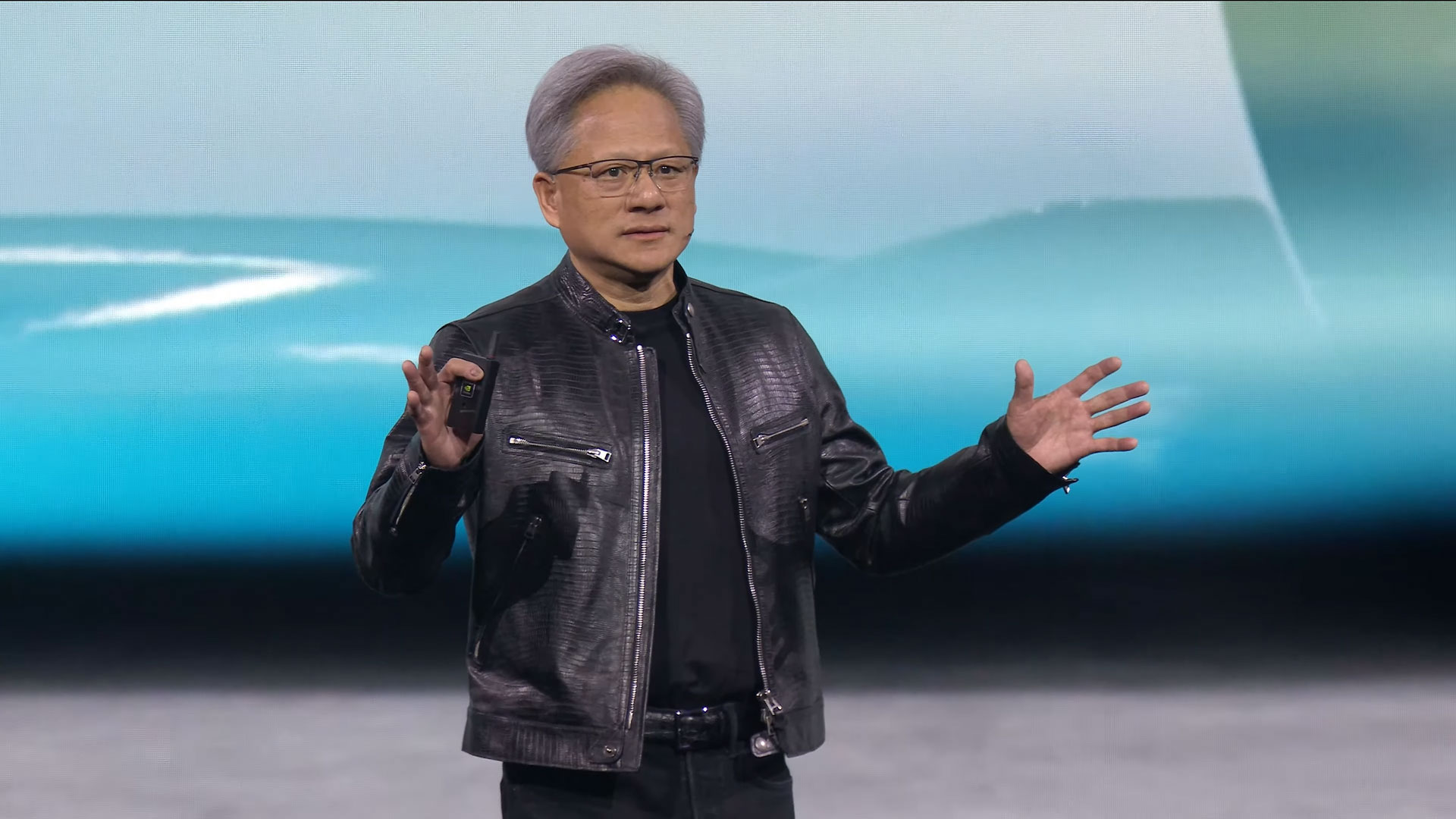

Following Nvidia's keynote at Computex 2025, CEO Jensen Huang sat down with journalists to talk about all of Team Green's latest announcements, including GB200, and international market opportunities.

In response to one question, Huang talked about the export controls first imposed by the Biden administration, saying that the limitations had cut Nvidia's China market share from 95 to 50 percent over the course of that presidency and hadn't accomplished what they set out to do. He also posited that the restrictions did not prevent China from developing its own competing technologies.

Huang also talked about the massive write-downs his company had to take because of bans on the H20, saying "export controls resulted in us writing off multiple billions of dollars. Then, write off the inventory. The write-off of H20 is as big as many semiconductor companies."

He later praised the Trump administration for ending Biden's AI diffusion rule, saying "I think it's really a great reversal of a wrong policy."

This was a roundtable with journalists from several other publications, and what follows is not a complete transcription of the entire Q&A. However, we've transcribed all of the questions we did manage to hear, with some elements lightly edited for flow and clarity. Some speakers did not have clear audio, and we have noted this in the transcript.

Ahead of reading this Q&A, you should familiarize yourself with what Huang announced during Nvidia's Computex 2025 keynote. We've embedded it below so you can get a refresher.

NVIDIA CEO Jensen Huang Keynote at COMPUTEX 2025 - YouTube

Jensen Huang: Good Morning, very nice to see all of you. Did you guys see all of this? Isn't this just incredible?

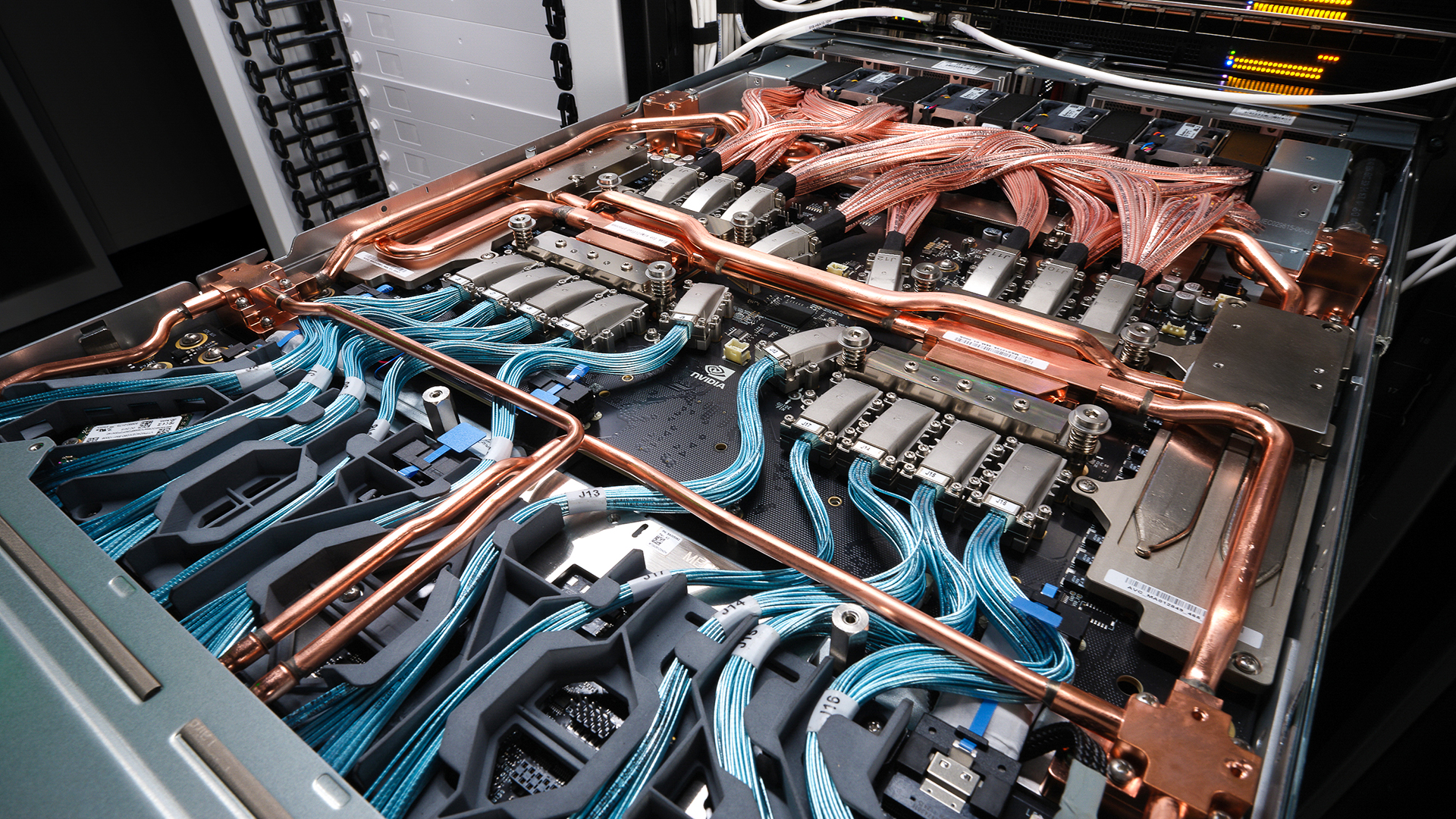

So, this is the motherboard of a new server [Presumably the GB200 NVL72, or RTX Pro machine], and this server has many GPUs that are connected. The GPUs are connected on the bottom, or on top. And on the bottom are switches that connect all the GPUs together, and these switches also connect this computer to all the other computers, using CX8 networking (Nvidia ConnectX-8), 800 gigabits per second, and then the transceivers plug in right there... Plug this into that, now you have an enterprise AI supercomputer. Because this system is air cooled, it's very easy for enterprise [users] to buy. It's available from all over the world's enterprise IT OEMs. Every single company will be offering this, and you can use this for everything.

It runs x86, so all of your software that you run with your enterprise IT works today. You can run Red Hat, VMware, Nutanix, so all of the orchestration and operating system works just fine.

And, you can run computer graphics, you can run AI, you can run agents. This is incredible. This one idea that the GPU... they didn't give us a GPU [To showcase], but you know what one looks like, it's the gold one, and that makes it a new server.

So, this is the RTX Pro Enterprise AI server, and this is a huge, huge announcement, and it opens up the enterprise market. As you know today, all of the AI isn't involved, but OEMs would like to serve the enterprises, companies would like to build it for themselves.

And so anyway, that's a very big announcement. So Cloud AI over here and GB200, that's our enterprise AI system.

Right, who's got the first question? Good morning.

Elaine Huang, Commonwealth Magazine: Just like what you mentioned, that enterprise is a very important market, and everybody talks about not just AI servers, but AI PCs. So what are the potential opportunities that Taiwan has to co-work with Nvidia for upcoming features?

Jensen Huang: Last night, Microsoft announced Windows ML, Windows Machine Learning. Machine Learning is AI, so Windows AI. And, they announced it on Nvidia. So now Windows ML, which is a new API [that] runs AI inside Windows, runs on Nvidia.

And, the reason for that is Nvidia's RTX has CUDA and Tensor cores, every one of them, exactly the same. We have several hundred million RTX PCs in the world. RTX equals AI, and now Windows ML runs on RTX. Everyone with RTX? They know. Hundreds of millions of gamers and PC users, workstation users, everybody. Laptop, desktop... Bingo! Home run, job done [laughs], Windows ML.

If you're a developer, and you would like your own AI supercomputer so that you don't have to keep going to the cloud, open the cloud, and when you're done, you know, shut down your session, because if you don't shut it down, the bill keeps running. And so maybe you're doing development, there's a lot of idle time. And so, you would not like to do that on the cloud, you would like to do it on your desk.

So, if you have a Mac? No problem. If you have a Chromebook? No problem. If you have Linux? No problem. If you have Windows? No problem. We have a perfect little device for you.

[We want] to give you this little AI supercomputer [Presumably DGX Spark], that sits next to you. You can connect it with networking, or you can connect them with Wi-Fi. You talk to this like you talk to the cloud, and the software is exactly the same.

So, if you are a developer, software programmer, AI creator, this product is perfect for you. The perfect developer companion.

And if you'd like a bigger one, this is essentially a computer for the AI natives [DGX Station], anybody who's getting completely AI applications, and you would like a bigger one than this one [DGX Spark], this is an AI workstation [Referring to DGX Station].

And this goes into a desktop, a normal desktop, and you can access it, you can remote, you can use it like the cloud, but it's yours, and you can walk away and go enjoy a coffee and don't feel bad.

Question 2 (Unclear speaker and outlet): I'm curious to know, over the last five to ten years, you've had a lot of great new products. The array of products and services you have now is quite extensive. I'm really curious to know what you had in the pipeline. Did you kill anything before it entered production? Like, you had a project, it had momentum and at some point you had to get down to business... [unintelligible speech], I'm really curious about the products that never saw the light of day. Can you share anything with us about that?

Jensen Huang: I would say it's very rare that we would completely kill a project. It's very likely that we shape it, shape it and reshape it. And, the reason for that is because the direction needs to be right. Like, for example, the initial early days of Omniverse, we had to rebuild it a couple of times, and the reason for that is because in the beginning, of Omniverse, our vision was right. That we needed to create a virtual world of digital systems, and robotic systems, and AI systems.

The vision was right. But, the way we architected the software was odd. It was kind of based on the old days of enterprise and workstation applications. And so, it wasn't scalable.

[Jensen pauses to ask for a bottle of water and proceeds to choke] I do that too, you know, I say something surprising, I'm talking, and then I'm drinking and swallowing all at the same time. I say something surprising to myself, and then I choke on water.

So, in the beginning, we built Omniverse as single instance software with multiple GPUs, and that was the wrong answer. Omniverse should have been created as a disaggregated system. It should run across multiple systems, multiple operating systems and multiple computers with multiple GPUs each. Which is the reason why we built this machine. In fact, this computer RTX Pro, it's called RTX Pro for a good reason.

It's essentially an Omniverse generative AI system, and notice this is one computer, eight GPUs, and you can connect them with more computers. Omniverse will run across this whole thing. This is the perfect Omniverse computer, the perfect robotics computer, the perfect digital twin computer.

We started working on Omniverse, how many years ago now? Let's see... Six years, seven years ago, finally it's come to connecting everything together. So notice that all the pivots that were made along the way, all of the mistakes that were made, and so on and so forth, we just keep investing.

Eric, Publication Unclear: Just a quick question about DGX Spark, and what you said with simple production, delivery is going to happen in a couple of weeks. I wonder if you can give any additional color about what you feel about the opportunity and compliance. You know, no pun intended, but is the window closing for an additional player to get into ARM-based computing?

Jensen Huang: So first of all, it's just delightful to look at. You know, it's nice to have a computer that's beautiful. The reason why we need this computer is because we need a coherent, productive AI development environment, and AI has models that are fairly large. Its environment really wants to be fully accelerated with excellent Python software and AI stacks.

If I look around this room right now, I don't actually see a computer that would be perfect for AI development. Most of the computers don't have that memory, or they don't have Tensor cores, because maybe it's a Mac, or maybe it's a Chromebook, or maybe it's an older version of a PC, or an older version of a desktop.

And so we took a state-of-the-art AI system, and we put it in a remote Wi-Fi environment that connects to everybody's computer. Now there's some 30 million software developers in the world, there will probably be just as many who are now going to be AI developers.

And so everybody has the benefit of having, essentially an AI supercomputer, an AI cloud, but not being burdened with the anxiety of your cloud computing that's ticking away. And so this is something you can buy, the ROI is probably, call it six months.

And for most of all, of course, this, we have really great volume, and it's available from everybody. So they'll be available from Asus, they can sell it alongside their laptops. It's available from MSI, and not to mention all the enterprise OEMs.

Every single developer can go out and just get one, and just put it next to their desk. You can develop on here, and you want to now scale it out, or test it out on large data sets, it's just like one pull down menu, point it at a cloud. Exactly the same thing runs there. And so, this is really an ideal AI developer environment.

Victoria Jen, CNA: Given the trade tensions and also the talk of de-globalization. How is Nvidia thinking about its global supply chain strategy, and where does Taiwan fit in that picture?

Jensen Huang: First of all, Taiwan is going to continue to grow, and the reason for that is we're at the beginning of a breed of a new industry. This new industry builds AI factories. The world is going to have AI infrastructure all over. AI infrastructure will cover the planet, just as internet infrastructure has covered the planet. Eventually, AI infrastructure will be everywhere.

We are several hundred billion dollars into a tens of trillions of dollars AI infrastructure buildout that will take five decades.

I've been looking around Taiwan. There are cranes everywhere. There's buildings everywhere. Factories are being built everywhere. And the reason for that is because we're all racing to build infrastructure for AI, manufacturing for AI.

Well, simultaneously, the world needs to have more manufacturing resilience and diversification, and some of that will be distributed around the world.

In the United States, we're going to do some manufacturing. It is impossible to do all manufacturing, all on-shore, and it's also unnecessary. But, we should do as much as we can that is important for national security, while having resilience, with redundancy, all around the world.

And so this rebalancing is happening at actually, a very good time. It's happening at a very good time because the world is building AI infrastructure. We're adding new infrastructure for the very first time. So we need a lot of new plants anyway.

The most important thing is we have to provide energy for these new plants. Communities realize that we want to grow. We want to have economic prosperity. We want to have economic security. In order for that to happen, industrialization; AI factories, need energy. And so the support of governments to provide for energy of all kinds while we pursue new technologies, whether it's hydrogen or nuclear, solar or wind, whatever new technology that is most available at the time, we're going to need it all.

And so, government officials around the world really need to support all of the companies, so that we can re-industrialize and reset our industry, so that we can grow into AI infrastructure.

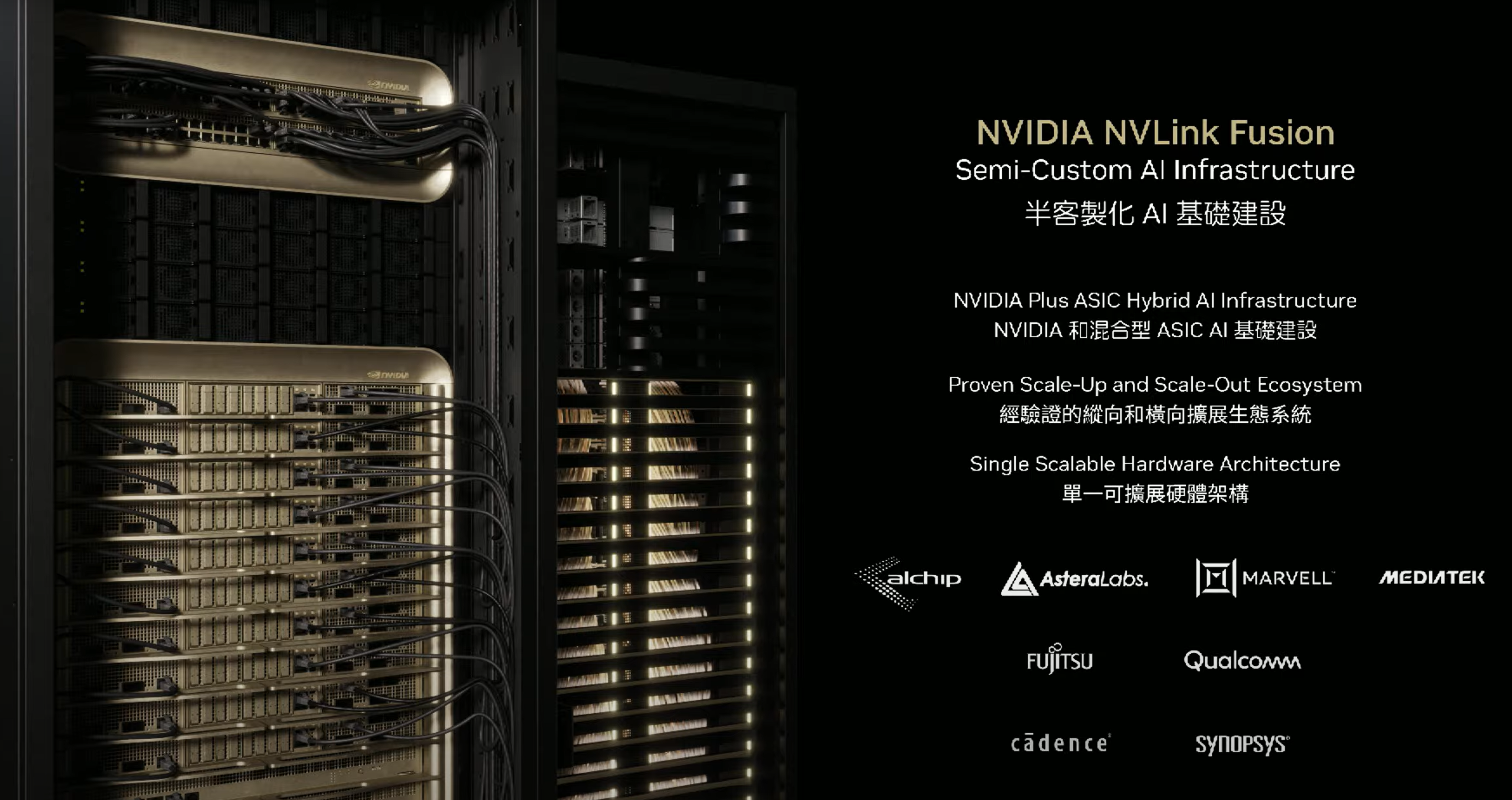

Question 5 (Unclear speaker and outlet): My question is about NVLink Fusion, what's your strategy?

Jensen Huang: NVLink Fusion allows every data center to take advantage of this incredible invention we call NVLink, now in its fifth generation. We've been working on NVLink now for... How many years? 12 years?

Also, NVLink, Spectrum-X, and Quantum, our networking, is highly integrated and highly optimized together. And, that's one of the reasons why the performance of the AI data center, the AI factory, is so good.

When the factory costs $10 billion and our efficiency is 90%, and someone else's efficiency is 60%... 30% of $10 billion is $3 billion. So, network efficiency is very important. Performance is very important. Efficiency is very important. Energy is very important... All because the network efficiency is so good.
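[Editor's illustration: the arithmetic in this example can be checked with a short sketch. The function name, and the reading of "efficiency" as the share of the factory's cost that ends up doing useful work, are assumptions made for illustration and are not Nvidia's definitions.]

```python
def efficiency_gap_usd(factory_cost_usd: float,
                       higher_efficiency: float,
                       lower_efficiency: float) -> float:
    """Dollar value of the efficiency difference between two equally priced factories.

    Assumes (hypothetically) that efficiency is the fraction of the
    factory's cost that translates into useful work.
    """
    return factory_cost_usd * (higher_efficiency - lower_efficiency)


# Figures quoted in the answer: a $10 billion factory, 90% versus 60% efficiency.
gap = efficiency_gap_usd(10e9, 0.90, 0.60)
print(f"Efficiency gap: ${gap / 1e9:.1f} billion")
```

Under that reading, the 30-point gap on a $10 billion build corresponds to roughly $3 billion of capital that the less efficient factory does not put to work.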

And so we have many customers, many people who are developing their semi-custom AI infrastructure. They came to us and asked: "Can we use NVLink?"

Because, of course, there's an industry discussion about UALink. UALink is not doing that well, I don't think. And so, the customers have come to us and asked whether NVLink could be authenticated. And I said, of course, we're happy to.

The benefit to us, is that the Nvidia network, the Nvidia fabric, is really the operating system of the data center. It's the nervous system of the data center. And so, we can extend Nvidia's nervous system into every data center, whether it's Nvidia's technology, or if you're selling custom technology.

So we can expand our market opportunity. It is also so good for us, it's good for the ASIC companies, MediaTek, Alchip, Marvell, right?

It's good for them, because now they have a complete solution. They can have HBM [High Bandwidth Memory], they have [speech unclear], and now they also have NVLink and networking. So now they have a complete solution partner. For the customer, of course, the most important part is that the architecture of NVLink is integrated with the [server and data center] rack system.

The rack system is so complicated. The spine is so complicated. And since they are already using GB200, they're already using Nvidia's racks. Now, they can scale, continue to use Nvidia racks, or even semi-custom. So one architecture, one hardware architecture, one NVLink architecture, one networking architecture, sometimes it's three CPUs, sometimes it's Fujitsu CPUs, sometimes Qualcomm CPUs, it's very nice for the customer.

Are we open to working with Broadcom? Of course, we are, of course we are. Currently, they have their own plan. But if they need, if they would like, to use NVLink [we're] very open.

We love working with Broadcom. We work with Broadcom in many places. [On] export control: so, as you know, export control has caused us to write off our H20s. Our H20 is now banned in China. Banned to ship in China, and export controls resulted in us writing off multiple billions of dollars. Then, write off the inventory. The write-off of H20 is as big as many semiconductor companies.

If you look at most chip companies, their quarterly revenue is only a few billion dollars. We wrote off, you know, multiple billion dollars of inventory. And so the cost to us is very high, and also the sales to us was quite high.

The fact of the matter is, the China market is very important. It's very important for several reasons. The first reason is that China is where 50% of the world's AI researchers are. And we want the AI researchers to build on Nvidia. DeepSeek was built on Nvidia. That's a gift to us. It's a gift to the world.

Now, DeepSeek runs incredibly well everywhere. [Audio unclear, Huang mentions R1 or similar.] is an excellent, excellent AI model. It's a gift to the world. It's open source. And so, the China market is important, because the AI researchers there are so good, and they're going to build amazing AI no matter what. We would like them to build on Nvidia's technology.

Second, the China market is quite large. As you know, it's the second largest computer market. There's no others like it. And so the China market, my guess is that next year, the whole dang market is probably [worth] $50 billion. $50 billion! You know how large many chip companies are? Much less than $50 billion.

So, the $50 billion market opportunity to Nvidia is quite significant, and it would be a shame not to be able to enjoy that opportunity, to bring home tax revenues to the United States, create jobs...sustain the industry.

Okay, so, all of that... [Jensen gets distracted and looks at the person who asked the question] You asked me a question, you're not even paying attention.

[The duo converses in Mandarin, and Huang responds] Am I upset at the policy? [Regarding export controls to China] I'm not upset at the policy. I think the policy is wrong, and the reason for that is because, let's look at the evidence.

Four years ago, at the beginning of the Biden administration, Nvidia's market share in China was nearly 95%. Today, it is only 50%. The rest of it is China's technology, and not to mention we have to sell lower chip specifications. So, our ASP [Average selling price] is also lower. So we left a lot of revenue, and nothing changed.

AI researchers are still doing AI research in China. They have a lot of mobile technology they would use if they don't have Nvidia, if they don't have enough Nvidia, they will use their own! They'll use the second best. Then lastly, of course, the local companies are very, very talented and very determined, and the export controls gave them the spirit, the energy, and the government support, to accelerate their development. And so I think, all in all, the export control was a failure, the facts would suggest it.

Question 6 (Unclear speaker and outlet): So I have a quick question about AI factories. I mean, AI factories and depreciation. So the equipment inside is a data center or AI server, right? So if we talk about the factories, we have to talk about depreciation, and equipment upgrades. So, what do you expect to see? And then you have this one-year-rhythm theory, which means the systems will be upgraded every year.

So, what's your expectation on the lifetime of equipment in data center AI factories. How frequently will their systems need to be upgraded?

Jensen Huang: There are two pieces of information that are very important. The first is the reason why we upgrade every year. It's because in a factory, performance equals cost, and performance equals revenues.

If your factory is limited by power, and our performance per watt is four times better, then the revenues of this data center increase by four times. So, if we introduce new generation, the customer's revenues can grow, and their costs can come down.
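[Editor's illustration: a minimal sketch of the power-limited reasoning above, assuming revenue scales linearly with the work a factory produces and that its power budget is fixed. Every number here (the 100 MW budget, tokens per joule, price per million tokens) is a hypothetical placeholder, not a figure from the Q&A.]

```python
SECONDS_PER_YEAR = 365 * 24 * 3600


def annual_revenue_usd(power_budget_watts: float,
                       tokens_per_joule: float,
                       usd_per_million_tokens: float) -> float:
    """Revenue of a power-limited AI factory running flat out for one year.

    Assumes all available energy is converted to tokens at a constant
    rate and every token is sold at the same price (both simplifications).
    """
    joules = power_budget_watts * SECONDS_PER_YEAR
    tokens = joules * tokens_per_joule
    return tokens / 1e6 * usd_per_million_tokens


# Hypothetical factory: the same 100 MW budget before and after an upgrade
# that delivers four times the performance per watt.
old_generation = annual_revenue_usd(100e6, tokens_per_joule=0.5, usd_per_million_tokens=2.0)
new_generation = annual_revenue_usd(100e6, tokens_per_joule=0.5 * 4, usd_per_million_tokens=2.0)

print(f"Revenue multiple at the same power budget: {new_generation / old_generation:.1f}x")
```

Because the power budget is the binding constraint in this sketch, any gain in performance per watt passes straight through to output, and therefore to revenue, at the same energy cost.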

We upgrade every year. So we tell our customers, don't buy everything every year. Buy something every year. This way, they don't over-build and over-invest with old technology. But the benefit that we have, is that Nvidia architecture is compatible in all of the factories. And so, we can upgrade the software for a very long time.

The second fact, Hopper in the [beginning] of its life, Llama-70B, in the beginning, was 1/4 the performance on the same Hopper [system] four years later. So, we keep improving the performance using CUDA software, which is the benefit of CUDA. And if we optimize the software, and improve the performance of the model, it helps the whole factory. Every single factory. Every single computer.

Nvidia's CUDA is very valuable here, Nvidia's once-a-year rhythm is very valuable, and so you have to use both of them together. With that, your overall data center fleet revenues will go up, your overall data center costs will come down.

And then one last idea. As you know, Nvidia CUDA runs everything. Every model, every new innovation, because the Nvidia CUDA install base is so high. If you are a software developer, of course you would do Nvidia CUDA first.

You have the best performance, the best technology, you also have the largest install base, and software developers want the largest install base, so that they can touch and reach everyone. Isn't that right? And so these three ideas, once a year, performance up, costs down. Once a year, all the time, a software upgrade with CUDA. And then lastly, our install base is so high everything runs, so the life of your data center will be quite long.

Max Cherney, Reuters: Since you were just talking about China, it brings me to something that I think has been an interesting question. Over the past 10 days, you've gone on a world tour. Made pit stops in the Middle East and elsewhere. And what I'm wondering is you've also made a flurry of announcements. Very technical stuff here at Computex, you know.

What I'm wondering is if you could put some of the technical announcements you've made, such as NVLink Fusion, the laptop platform, and some of the other more detailed, nerdy things in the context of how you're planning to continue to sustain Nvidia's growth over the next few years. I think that's especially relevant, with some of the fears investors have at the moment about a pullback in AI spending, especially after DeepSeek.

Jensen Huang: That last little part is really important. Remember what DeepSeek did. DeepSeek was incredible for AI infrastructure.

The old AI is called one shot [A stateless model like GPT-2 and GPT-3]. You hit enter, and the AI gives you an answer. One shot. The only way to give you a one shot answer is not to think. No thinking. You [the AI model] already know it. You've kind of memorized it from pre-training. But DeepSeek is a reasoning model. It has to think.

It has to think, and you want to think fast, because if you don't think fast, the answer will take too long to come. And so DeepSeek opened the reasoning model, the world's first open source, excellent reasoning model.

Developers all over the world are using it, because it's so good. Now, the reasoning model is not one shot [stateless model], but it's hundreds of shots. It even goes to the internet to read websites, and read PDFs. So, it has to read, think, reason, plan, read some more.

So, that's the reason why deep research... You see that the latest versions of queries are taking much longer. The reason why it takes much longer is using a lot more compute [power]. And so, in fact, DeepSeek increased the amount of computing needed by maybe 100 to 1000 times.

That's the reason why all over the world, the AI companies are saying the GPUs are melting down, right? Sam [Altman], says our GPUs are melting because they're working too hard. They need more GPUs, more GPUs.

And last night, Microsoft announced that they were the first to online GB200, that OpenAI is already using GB200, and that they're planning to build out this year, hundreds of thousands of GB200 [systems]. More build out this year than all of Microsoft's data centers combined, only three years ago.

That's how much [OpenAI plans to build out], in just one year. And so the build out, the ramp of AI infrastructure, to me, is actually just beginning. This is now the beginning of the reasoning AI era, and reasoning AI is so useful, and it's so useful in so many different applications.

Second, AI infrastructure is being built out. That's one of the reasons why I'm traveling around the world. Every region realizes they need to build their own AI infrastructure. AI infrastructure is going to be part of society, part of the industry. Just like electricity, just like the internet, AI is going to be an essential part of infrastructure, social infrastructure, as well as industrial infrastructure.

When I was in the Middle East, President Trump announced that this is the reversal of what was the previous AI diffusion rule, the new diffusion rule, for this administration, they realized the goal. The goal of the AI diffusion rule, as specified in the past, was to limit AI diffusion.

President Trump realizes it's exactly the wrong goal. The United States, and America, is not the only provider of AI technology. If the United States wants to stay in the lead, and if the United States would like the rest of the world to build on American technology, we need to maximize AI diffusion, maximize the speed. And that's where we are today.

I think it's really a great reversal of a wrong policy, frankly. And this [the new AI diffusion rules] is a great reversal of that, and it's just in time.

Question 8 (Unclear speaker and outlet): We started with CPUs, and then to right now with GPUs. So still, both are important for our industry. So, what is the future?

[This question is quite unclear, but the general gist of it is that the speaker asks Jensen about the future of the hardware and software industries in the wake of AI factories and data centers.]

Jensen Huang: Good question. Fluid dynamics is not going to go away. Particle physics is not going to go away. Finite elements [are] not going to go away. Computer graphics is not going to go away. These algorithms are so good, and they've been refined over so many years.

Not to mention trillions of dollars of software already written, no reason to rewrite it. And so flexible software, flexible hardware is always valuable. That's the reason why CPU has been so successful for 60 years.

Now, Nvidia has created something and you have been following CUDA for two decades now, and you understand very deeply, that CUDA is so successful because there's so many domains of applications.

Everything from deep learning, to machine learning, classical machine learning, to unstructured data quantities, structured data processing, to particles, and fluids, and quantum, and chemistry, and so on and so forth. The list goes on.

And so the benefit is flexibility. If it's slow, then it's too expensive. But Nvidia's technology is very fast, it's also flexible. Then the data center can be used for many things. If the data center can be used for many things, the utilization will be high. If the utilization is high, the cost is down.
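[Editor's illustration: the link between utilization and cost can be sketched as follows, assuming the data center's hourly cost is fixed whether or not the hardware is busy. The $2.00 hourly cost and the 40% and 80% utilization figures are hypothetical placeholders.]

```python
def cost_per_useful_hour(hourly_cost_usd: float, utilization: float) -> float:
    """Effective cost of one hour of useful work on hardware that is
    busy only a fraction of the time.

    Assumes the hourly cost is paid regardless of whether the hardware
    is idle or working.
    """
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in the range (0, 1]")
    return hourly_cost_usd / utilization


# Hypothetical comparison: hardware that can serve only one workload and
# sits idle more often, versus hardware that can be kept busy with many.
single_purpose = cost_per_useful_hour(2.00, utilization=0.40)
general_purpose = cost_per_useful_hour(2.00, utilization=0.80)

print(f"Single-purpose:  ${single_purpose:.2f} per useful hour")
print(f"General-purpose: ${general_purpose:.2f} per useful hour")
```

In this sketch, doubling utilization halves the effective cost of each useful hour, which is the mechanism behind the "general purpose equals low cost" argument.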

So, general purpose equals low cost. In fact, you might remember, on the day that Steve Jobs announced the iPhone, he showed iPhone, and then he showed the music player and camera, and also a PC. So all of these different devices can now be in a general purpose device, camera, music, player, all in one general purpose device. This general purpose device is, of course, more expensive, but the cost is actually lower than having all of those things.

So, general purpose equals low cost, but it hasn't got very high performance. And that's the benefit of CUDA. You have just exactly pointed to the reason why CUDA is so successful.

Question 9 (Unclear speaker and outlet): Two or three years ago, you said that Nvidia is a software company, and beyond hardware. So, what elements will take Nvidia to the future? Is it still CUDA [unclear audio], or a bunch of AI [unclear audio].

Jensen Huang: Thank you. Appreciate that. Actually, what I said is that Nvidia starts with software. We always start with the algorithm. For example, it could be a quantum classical algorithm, quantum classical computing.

Maybe it's an algorithm for computational lithography, making chips. Maybe it's an algorithm for 5G and 6G radio. We always start with the algorithm, and then, we try to design up, down, bottom. It's called "co-design", across the entire stack.

But, we have to start with the algorithm. Otherwise, if you don't understand the algorithm, you cannot accelerate it. CPUs don't have to understand algorithms. CPUs, because the algorithm sits on top of a compiler, and you only see a compiler. But, accelerated computing is not like that. [It's a] Very, very different type of computing format.

So, Nvidia starts with that [with software and algorithms]. In the future, though, you will see that Nvidia started with software, acceleration of algorithms, to full-stack, then we became a systems company, then we became a data company. Now, we're becoming an AI infrastructure company.

And the infrastructure is important, because the software that runs across the infrastructure is very different than the software that runs on a PC. And, the system organization, architecture and optimization is very different than inside a PC.

So, as we think about the future of computing and these factories, you have to think about the infrastructure completely. Everything from power, to cooling, to networking, to scale up networking, into the fabric, security, storage...everything. Everything has to be considered in one time.

Otherwise, the software is not optimized, the throughput is not optimized. And if the throughput is not optimized, the revenue is affected. This is the first time, the very first time, that a computer directly affects the revenues of a company.

Today, when you see a chip fab, that ASML equipment directly affects TSMC's revenues. Makes sense, right? It directly affects the revenues. But a computer in a big IT data center... How do you know?

If I bought you a faster laptop, does it directly translate to your revenues? Does it directly translate to your income? It does not. Same thing. [For example] IT, if I bought them more computers, does it directly translate to Nvidia's revenues? It does not.

But in the AI factory, it does.

So, this is a very new way of thinking about computers. It's a factory, and we have to optimize it to the extreme, because these factories are very, very expensive.

Dr. Ian Cutress, More than Moore: Love the NVLink Fusion announcement you did yesterday. I'm trying to understand the width, depth and breadth, to the availability to the outside world. I kind of want to envision a system where you have the NVLink spine, you have a partner with that custom CPU, with NVLink, their custom GPU, TPU, whatever you want to call it, with their NVLink, being a custom partner with a switch on top. All that's involved with Nvidia, is the ingredient with the switch. Is that a vision you can expect for that technology in the future, you said you wanted to at least buy something right?

Jensen Huang: That is one vision. But the more likely vision, is that they will buy an NVLink chiplet, and they'll buy the NVLink switch, and the NVLink spine, and the Spectrum-X switch, and all of the necessary software to go along with it. That's more likely.

Let's use one particular example. Remember, Fujitsu has been a computer company for literally, exactly as long as I can remember. They have a large install base of Fujitsu systems all over the world, and it's based on Fujitsu's CPU. They would like to add AI to that.

How do you do that? Because today, Fujitsu has a CPU, and all of their software stack runs on the Fujitsu CPU, and the Nvidia AI runs on Nvidia AI.

And so how do you combine the two? How do you use these two together? Well, the way you fuse these two ecosystems together is with NVLink beauty. It's a fusion of ecosystems. Does it make sense? That's why I call it NVLink Fusion, the fusion of two ecosystems.

All of a sudden, by building a Fujitsu CPU with NVLink, and you connect it to... the port is actually going to look exactly like this, except this will be a producer CPU, or [unclear audio], or [unclear audio], or Rubin. We would then sell this to Fujitsu. Make sense? They plug it into the NVLink system, and look what happened. Fujitsu's entire ecosystem just became AI supercharged.

Dr. Ian Cutress, More than Moore: But could they use their own accelerator?

Jensen Huang: They could, but they really want our ecosystem. That's the reason why they did this. If they don't want our ecosystem, there's nothing to fuse. People want our ecosystem, and all the software that we bring along.

So we would do the same with Qualcomm, and if other CPU vendors would want, we're more than happy to. Because we put the chip to chip, and the NVLink into Synopsys and Cadence, so every CPU company could do it. And all of a sudden, Nvidia's entire ecosystem becomes integrated with theirs, fused with theirs. Pretty clever, huh?

Lisa, Wall Street Journal: I just want to follow up with something you said earlier. You talked about the AI diffusion rule, and basically it's been a reversal for the past week. I'm interested in your views going forward. Do you think this reversal will continue? The Middle East is just one example of a country's negotiation over GPUs. I'm just wondering, did you expect Trump and his administration to continue that line and his attitude?

Jensen Huang: I don't know the details of the diffusion rules. The policy hasn't come out. No one knows what future policies are going to happen. Nobody knows in any country and in any government. Policies are always evolving.

But here's what I do know: the fundamental assumptions that led to the AI diffusion rule in the beginning have been proven to be fundamentally flawed.

That's the big thing. I believe that smart people are doing smart things in governments, and they want to do what's good for the country. I believe people genuinely do that.

If the facts are flawed, if the assumptions are flawed, then the outcome would have to be flawed, the policy would have to change, and so the fundamentals have been completely proven wrong.

And so that's the reason why President Trump made it possible for us to expand our reach outside the United States. And he said very publicly, that he would like Nvidia to sell as many GPUs as possible, all around the world.

The reason for that is because he sees it very clearly, that the race is on, and the United States wants to stay ahead. We need to maximize, accelerate our diffusion, not limit it, because somebody else is more than happy to provide it.

And this AI diffusion is important, because AI is not just AI for all of the things that we said, remember, AI is also going to be the foundation of 6G. So, future communications infrastructure will also be affected. So, we need to get the American AI technology out to as many places as possible. Work with developers and AI researchers all around the world, and help them build an ecosystem, [to] participate in this incredible AI revolution, and do that as fast as possible.

The fundamental assumption was that the United States is the only provider of AI. And, obviously, that's not true.

Dianne, New York Times: So you talked about how important the China market is for Nvidia, and I was wondering, what does it look like for Nvidia to compete in China on an ongoing basis? Is it accurate that the company is investing in a research center in Shanghai, and does the future of Nvidia in China look like potentially working more closely with the US government, to avoid a future situation like H20?

Jensen Huang: We are trying to lease a new building for our employees in Shanghai. We've been in China for 30 years. Our employees are in a really cramped environment.

Because now more and more people- We still have a flexible work from home policy. So, you know, I decided that the way that people work should reflect the capabilities of the technology, the nature of our work and the sensibility of culture.

And the one additional idea is, because of video conferencing, because we can remote work, I wanted to use the opportunity to enable young people, young parents, to be able to build a life, build a family, and build a career at the same time. Because many young women can't build a career, because they have to be at home taking care of their children.

I would want to make it possible for young women to do both: Have a great career and be a great homemaker. And so the ability to have remote work enables that to happen. It's been a fantastic response from all of our employees and all of the others.

They think it's fantastic. Of course, it is incredible maintaining both jobs or doing both things at one time. It's not easy, but at least it's possible. And so that's the reason why we have remote work.

But more and more people are starting to move to the offices, and so the offices are just too cramped. We finally found a place where we could lease the building, and that's basically it. I'm surprised that that's such an enormous story. I feel like I just bought a new chair and that became front page news. [Laughs]

Our competition in China is really intense. Let's face it, China has a vibrant technology ecosystem, and it's very important, the fact that China has 50% of the world's AI researchers, and China is incredibly good at software. I would put China's software capabilities up against any country, any region in the world. That's how good they are.

Not to mention, they're fast. So our competition is intense. If we're not there, quite frankly, the local companies are more than joyful. They would love for us to never go back to China.

And so it is precisely those policies benefit, whatever the reasons are. I hope that what is actually happening is going to help shape the policy-makers, so that it's possible for us to go back and compete, and that's my goal.

H20, as it currently stands, Hopper. We don't know how to degrade Hopper to make it useful to the marketplace, but we're committed to the market.

You know, the number one in export controls. Export control puts limits on products. If the government would like to completely have sanctions, and whatever they want to ban completely, they're allowed to do that, of course, and we'll comply with the law.

But in the meantime, our job is to comply with the export controls, and the government is very clear about that, provide the export controls, but do your best. Provide the export controls, but continue to do your best, serve the market.

What we're trying to do right now is to think through, how can we best serve the market? And we have very limited choices. We degraded the product so severely, it's going to be quite complicated. But anyhow, we're going to do our best. I don't have any good ideas at the moment, but I'm going to keep thinking.

Penny, Publication Unknown: I'm just going to ask you a question about China. So there are lots of startups in China, GPU companies, and they're developing their own chips. I'm just wondering how you see this, and how is Nvidia going to respond to it?

Jensen Huang: There are startup companies, and [Audio unclear] one of the largest and most affordable technologies, period, in the world. And, they are innovating fast. The advantage that AI provides is that the data center is very large. It's not like a cell phone. It doesn't have to fit in one line. If it doesn't work, you know, use two chips. And if that doesn't work, use four chips. It uses more energy. But power is quite cost effective in China, and there's plenty of land. So the ban on H20 is not effective for that reason. They'll just buy more chips from the startups, from Huawei, and others. And so, I really do hope that the US government recognizes that the ban is not effective and gives us a chance to go back to market as soon as possible.

Question 14 (Speaker and publication unclear): Nvidia is building AI systems for large scale, solutions like GB300 NVL72, do you envision any [audio unclear] specific platforms. Will Nvidia extend any particular specialized AI hardware, and how will you prioritize areas like robotics and industrial AI?

Jensen Huang: These are the two computers. These are called DGX. DGX-1 was the first computer in the world created for one service, for AI. It only does one thing. AI. Oh, and CUDA.

So DGX-1, was the world's first AI native computer, and when I first announced it, there were no customers except for one, and they didn't have any money, so I gave it to them. A company called OpenAI, this was 2016.

So, I decided that now that there are developers all over the world, and they would all love to have their own DGX-1, but DGX-1 is very big, and so I decided to make small ones. These are personal DGX-1s. This one is called DGX Spark, and this one's called DGX Station, the world's first AI personal computer.

[Audio unclear] With respect to robotics, robotics is going to be the next industrial revolution. Let me prove it to you. In order for a technology to succeed, it needs to have excellent capability. It needs to have usefulness, so customers buy it, and there needs to be enough customers buying it [at] high volume, such that the R&D fly-wheel can be high.

It has to be useful, it has to be good technology. The technology needs to converge at just the right time, [it needs] lots of customers and use cases. [Audio unclear]

If this technology fly-wheel is high, then the refinement rate will be exponential. The performance will go like this. Cost will go like this, just like smartphones. The moment that the smartphone, all the pieces of technology, from touch, to 3G, which became 4G, mobile processors, internet, the whole web. The moment all of those things came together, boom, it took off. A huge industry.

The same thing is going to happen with robotics, and the reason for that, is the humanoid robot is the only robot that we can imagine using in many places, because we are in many places. We fit the world to ourselves.

There are only two of them [robotics products] with that property, those characteristics. Self-driving cars, because we create the world's [Audio unclear] for cars, and humanoid robots, because we created the world for ourselves.

If we can make these two technologies useful, functional and useful, it's going to take off. And that's what Nvidia's Isaac GR00T is.

Our entire platform, just like we have RTX for games, just like we have Nvidia AI that you're seeing here, Isaac GR00T is our humanoid robotics platform, and we are very successful with them. That's going to be the next multi-trillion dollar industry. I expect it to be very, very large.

This was not the end of the Q&A session that journalists had with Nvidia's Jensen Huang at Computex 2025. However, we hope you enjoyed reading.
