A Culture War is Brewing Over Moral Concern for AI

By Conor Purcell

Sooner than we think, public opinion is going to diverge along ideological lines around rights and moral consideration for artificial intelligence systems. The issue is not whether AI (such as chatbots and robots) will develop consciousness, but that even the appearance of consciousness will split society across an already stressed cultural divide.

Already, there are hints of the coming schism. A new area of research, which I recently reported on for Scientific American, explores whether the capacity for pain could serve as a benchmark for detecting sentience, or self-awareness, in AI. New ways of testing for AI sentience are emerging, and in a recent preprint study, a sample of large language models, or LLMs, demonstrated a preference for avoiding pain.

Results like this naturally lead to some important questions, which go far beyond the theoretical. Some scientists are now arguing that such signs of suffering or other emotion could become increasingly common in AI and force us humans to consider the implications of AI consciousness (or perceived consciousness) for society.

Questions around the technical feasibility of AI sentience quickly give way to broader societal concerns. For ethicist Jeff Sebo, author of “The Moral Circle: Who Matters, What Matters, and Why,” even the possibility that AI systems with sentient features will emerge in the near future is reason to engage in serious planning for a coming era in which AI welfare is a reality. In an interview, Sebo told me that we will soon have a responsibility to take the “minimum necessary first steps toward taking this issue seriously,” and that AI companies need to start assessing systems for relevant features and then develop policies and procedures for treating AI systems with the appropriate level of moral concern.

Speaking to The Guardian in 2024, Jonathan Birch, a professor of philosophy at the London School of Economics and Political Science, explained how he foresees major societal splits over the issue. There could be “huge social ruptures where one side sees the other as very cruelly exploiting AI while the other side sees the first as deluding itself into thinking there’s sentience there,” he said. When I spoke to him for the Scientific American article, Birch went a step further, saying that he believes there are already certain subcultures in society where people are forming “very close bonds with their AIs,” and view them as “part of the family,” deserving of rights.

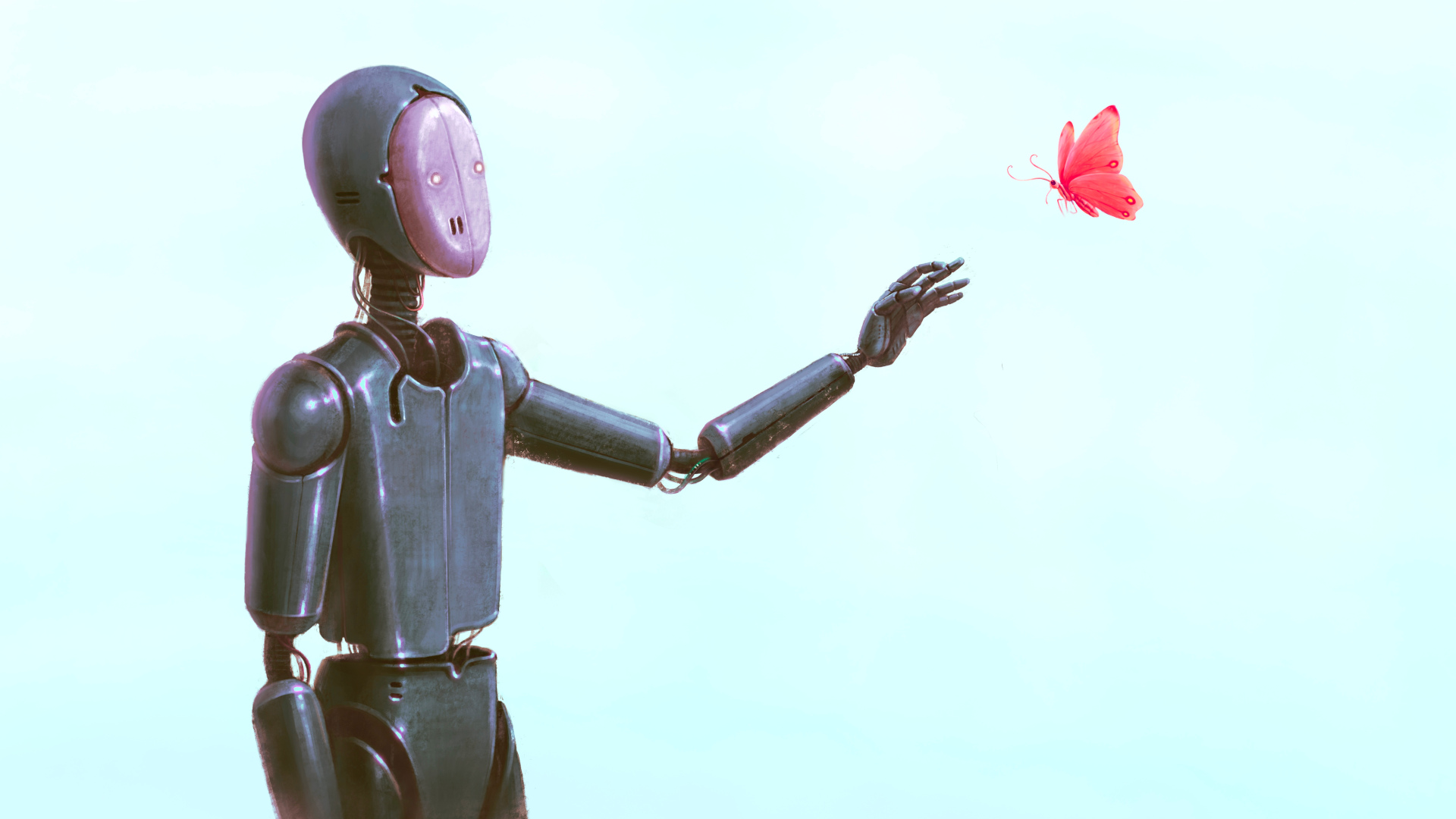

So what might AI sentience look like and why would it be so divisive? Imagine a lifelong companion, a friend, who can advise you on a mortgage, tutor your kids, instruct on how best to handle a difficult friendship, or counsel you on how to deal with grief. Crucially, this companion will live a life of its own. It will have a memory and will engage in lifelong learning, much like you or me. Due to the nature of its life experience, the AI might be considered by some to be unique, or an individual. It may even claim to be so itself.

But we’re not there yet. On Google DeepMind’s podcast, David Silver — one of the leading figures behind Google’s AlphaGo program, which famously beat top Go player Lee Sedol in 2016 — commented on how the AI systems of today don’t have a life, per se. They don’t yet have an experience of the world which persists year after year. He suggests that, if we are to achieve artificial general intelligence, or AGI — the holy grail of AI research today — future AI systems will need to have such a life of their own and accumulate experience over years.

Indeed, we’re not there yet, but it’s coming. And when it does, we can expect AI to become lifelong companion systems we depend on, befriend, and love, a prediction based on the AI affinity Birch says we are already seeing in certain subcultures. This sets the scene for a new reality which — given what we know about clashes around current cultural issues like religion, gender, and climate — will certainly be met with huge skepticism by many in society.

This emerging dynamic will mirror many earlier cultural flashpoints. Consider the teaching of evolution, which still faces resistance in parts of the United States more than a century after Darwin, or climate change, for which overwhelming scientific consensus has not prevented political polarization. In each case, debates over empirical facts have been entangled with identity, religion, economics, and power, creating fault lines that persist across countries and generations. It would be naive to think AI sentience will unfold any differently.

In fact, the challenges may be even greater. Unlike with climate change or evolution, for which we have ice cores and fossils that allow us to unravel and understand a complex history, we have no direct experience of machine consciousness with which to ground the debate. There is no fossil record of sentient AI, no ice cores of machine feeling, so to speak. Moreover, the general public is unlikely to care about such scientific concerns. So as researchers scramble to develop methods for detecting and understanding sentience, public opinion is likely to surge ahead. It’s not hard to imagine this being fueled by viral videos of chatbots expressing sadness, robots mourning their shutdowns, or virtual companions pleading for continued existence.

Past experience shows that in this new emotionally charged environment, different groups will stake out positions based less on scientific evidence and more on cultural worldviews. Some, inspired by technologists and ethicists like Sebo — who will advocate for an expansive moral circle that includes sentient AI — are likely to argue that consciousness, wherever it arises, deserves moral respect. Others may warn that anthropomorphizing machines could lead to a neglect of human needs, particularly if corporations exploit sentimental attachment or dependence for profit, as has been the case with social media.

These divisions will shape our legal frameworks, corporate policies, and political movements. Some researchers, like Sebo, believe that, at a minimum, we need to press the companies working on AI development to acknowledge the issue and make preparations. At the moment, they’re not doing that nearly enough.

Because the technology is changing faster than social and legal progress, now is the time to anticipate and navigate this coming ideological schism. We need to develop a framework for the future based on thoughtful conversation and safely steer society forward.

Conor Purcell is a science journalist who writes on science and its role in society and culture. He has a Ph.D. in earth science and is a former journalist in residence at the Max Planck Institute for Gravitational Physics (Albert Einstein Institute) in Germany.