Artificial intelligence (AI), machine learning (ML), and deep learning (DL) are often used interchangeably, but they differ in capability, complexity, and how much human input they require. For lab managers, understanding these distinctions can help you evaluate which tools fit your lab’s needs. This article demystifies the hierarchy of these technologies, explains how they work, and highlights real-world applications of AI, machine learning, and deep learning in the lab.

AI is an umbrella term. ML, DL, neural networks, machine vision, rule-based algorithms, and other techniques all fall under AI. ML and DL are nested concepts within AI—ML is a subset of AI, and DL is a subset of ML.

Defining artificial intelligence

AI is the ability of computers to mimic human intelligence. There’s a broad spectrum of what that means. Anything as simple as an algorithm that takes an input and compares it against predefined values can be considered AI. Likewise, a neural network that generates a unique output—think ChatGPT—is also AI, even though there is a chasm of sophistication between these technologies.

For lab managers, understanding AI at its broadest level helps with evaluating vendor claims. If a product is labeled “AI-powered,” that could mean anything from basic rule-based automation to a complex learning system. Simple AI tools—such as those using IF/THEN logic or predefined heuristics—can still provide value by automating repetitive decisions or flagging known risks.

The key is to ask vendors what kind of AI underpins their product and whether it adapts over time or behaves deterministically. Knowing that may help you gauge the improvements that the tool will bring as well as the level of oversight required.
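
To make the rule-based end of the spectrum concrete, here is a minimal, hypothetical sketch of the kind of IF/THEN logic a simple "AI-powered" equipment scheduling feature might use. The `Booking` class, the `check_request` function, and the maintenance rule are illustrative assumptions, not any vendor's implementation. The behavior is deterministic: the same request always gets the same answer, and nothing is learned from past bookings.

```python
from dataclasses import dataclass


@dataclass
class Booking:
    """A hypothetical instrument booking; hours are integers on a 24-hour clock."""
    instrument: str
    start: int
    end: int


def check_request(request: Booking, existing: list[Booking], maintenance_hours: set[int]) -> str:
    """Apply fixed IF/THEN rules to a booking request and return a decision string."""
    # Rule 1: IF the time window is invalid THEN reject.
    if request.start >= request.end:
        return "reject: start must be before end"
    # Rule 2: IF any requested hour falls in a maintenance window THEN reject.
    if any(hour in maintenance_hours for hour in range(request.start, request.end)):
        return "reject: overlaps scheduled maintenance"
    # Rule 3: IF the request overlaps an existing booking on the same instrument THEN flag it.
    for booking in existing:
        same_instrument = booking.instrument == request.instrument
        if same_instrument and request.start < booking.end and booking.start < request.end:
            return f"flag: conflicts with booking {booking.start}:00-{booking.end}:00"
    # Otherwise, approve.
    return "approve"


existing = [Booking("HPLC-1", 9, 11)]
maintenance = {16}
print(check_request(Booking("HPLC-1", 10, 12), existing, maintenance))  # flag: conflicts with booking 9:00-11:00
print(check_request(Booking("HPLC-1", 13, 15), existing, maintenance))  # approve
print(check_request(Booking("HPLC-1", 15, 17), existing, maintenance))  # reject: overlaps scheduled maintenance
```

Every decision traces back to a rule a person wrote, which is why this kind of tool needs little oversight of its reasoning but will never improve on its own.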

Lab use cases for AI

Lab-specific examples of AI include:

- CellProfiler: An open-source image analysis program that uses rule-driven image processing to distinguish cells from non-cell objects, enabling automated cell counting.

- Chemical storage safety: Some chemical storage software vendors offer features that use AI to flag chemical safety hazards automatically, serving as an extra safeguard alongside human inspections.
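
Rule-driven image processing of the kind described for CellProfiler often comes down to fixed steps: threshold the image to separate foreground from background, group touching foreground pixels into objects, and discard objects too small to be cells. The sketch below is a simplified, hypothetical illustration on a tiny hand-written grayscale grid; it is not CellProfiler's code or API, and the `count_objects` function, threshold, and minimum-size values are arbitrary assumptions.

```python
from collections import deque


def count_objects(image: list[list[int]], threshold: int, min_size: int) -> int:
    """Count connected bright regions (4-connectivity) with at least min_size pixels."""
    rows, cols = len(image), len(image[0])
    # Step 1: thresholding rule. A pixel is foreground IF its intensity exceeds the threshold.
    mask = [[pixel > threshold for pixel in row] for row in image]
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Step 2: flood-fill the connected region starting at (r, c) and measure its size.
            size = 0
            queue = deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                size += 1
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            # Step 3: size rule. IF the region is too small THEN treat it as debris, not a cell.
            if size >= min_size:
                count += 1
    return count


# Hypothetical 6x7 image: two 4-pixel "cells" and one single bright pixel of debris.
image = [
    [0, 0, 0, 0, 0, 0, 0],
    [0, 200, 210, 0, 0, 0, 0],
    [0, 190, 220, 0, 0, 180, 0],
    [0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 250, 240, 0, 0],
    [0, 0, 0, 230, 235, 0, 0],
]
print(count_objects(image, threshold=100, min_size=3))  # 2
```

Lowering `min_size` to 1 would count the debris pixel as a third object, which shows how much a rule-based tool's output depends on the values a person chose.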

Defining machine learning

MIT Sloan School of Management defines ML as “a subfield of AI that gives computers the ability to learn without being explicitly programmed.” In supervised learning, ML models (whether neural networks or statistical algorithms) learn patterns from data that people have given meaningful labels and then apply those patterns to new inputs, making them more adaptive than rigid, rule-based AI systems.

Many ML models depend on two essential, human-driven steps: feature extraction and data labeling.

Feature extraction simplifies complex raw data into meaningful variables a model can use. As the University of California, Davis’s Digital Agriculture Laboratory explains, “Feature engineering (sometimes called feature extraction) is the technique of creating new (more meaningful) features from the original features.” For example, an email might be reduced to the number of links or keyword frequencies, and an image to edge density or color histograms. This step removes noise, boosts efficiency, and improves the model’s ability to learn.
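
The email example above can be sketched in a few lines. This is a hypothetical illustration, assuming a hand-picked keyword list (`KEYWORDS`) and a simple URL pattern; both stand in for the choices a person would make during feature extraction.

```python
import re
from collections import Counter

# Hypothetical keywords chosen by a person for illustration.
KEYWORDS = ("free", "winner", "urgent")
URL_PATTERN = re.compile(r"https?://\S+")


def extract_features(email_text: str) -> dict[str, float]:
    """Reduce raw email text to a small set of numeric features a model can use."""
    features = {"num_links": float(len(URL_PATTERN.findall(email_text)))}
    # Remove URLs before counting words so link fragments don't count as words.
    text_without_urls = URL_PATTERN.sub(" ", email_text).lower()
    words = re.findall(r"[a-z']+", text_without_urls)
    counts = Counter(words)
    total = max(len(words), 1)  # avoid division by zero for empty emails
    # Keyword frequency = occurrences of the keyword / total words.
    for keyword in KEYWORDS:
        features[f"freq_{keyword}"] = counts[keyword] / total
    return features


email = "URGENT: you are a winner! Claim your free prize at http://example.com/prize now, free!"
print(extract_features(email))
```

A sentence of free text becomes four numbers, which is the kind of compact, structured input traditional ML models are built to learn from.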

Data labeling provides the ground truth needed for supervised learning. As the University of Arizona defines it: “Data labeling refers to the process of manually annotating or tagging data to provide context and meaning.” Labeled datasets, such as emails tagged “spam” or images tagged “cat,” train models to link features to the correct outcome. Quality labeling is critical for accuracy and fairness.
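
Once data are labeled, a supervised model learns the link between features and labels. As a deliberately simple stand-in for the models real products use, the sketch below trains a nearest-centroid classifier: it averages the feature vectors for each label, then assigns a new example to the label whose average is closest. The two features (link count and frequency of the word "free") and the tiny training set are made up for illustration.

```python
import math


def train_centroids(examples: list[tuple[list[float], str]]) -> dict[str, list[float]]:
    """Learn one centroid (mean feature vector) per label from labeled examples."""
    sums: dict[str, list[float]] = {}
    counts: dict[str, int] = {}
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def predict(centroids: dict[str, list[float]], features: list[float]) -> str:
    """Assign the label whose centroid is nearest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))


# Hypothetical labeled training data: [number of links, frequency of "free"] -> label.
training = [
    ([3.0, 0.20], "spam"),
    ([4.0, 0.15], "spam"),
    ([0.0, 0.00], "not spam"),
    ([1.0, 0.01], "not spam"),
]
centroids = train_centroids(training)
print(predict(centroids, [5.0, 0.10]))  # spam
print(predict(centroids, [0.0, 0.02]))  # not spam
```

Here the link count dominates the distance because the two features are on very different scales; real systems usually normalize features first, one more human decision that shapes what the model learns.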

Human expertise shapes what data the system sees and how it interprets the data, making ML powerful, but not autonomously insightful.

For lab managers, ML tools strike a balance between performance and resource demand. When comparing machine learning and deep learning in the lab, ML solutions usually require less data and computing power, making them more practical for labs with structured datasets and limited infrastructure. However, their effectiveness still depends on thoughtful feature selection and high-quality labeled data. When evaluating ML-driven software—such as inventory predictors or quality control assistants—look for systems trained on data similar to your own lab’s workflows and consider whether the software allows for customization or retraining as your needs evolve.

Lab use cases for ML

- PeakBot: An open-source, ML-based chromatographic peak picking program that debuted in 2022. According to the Bioinformatics paper about it, PeakBot achieves results comparable to existing peak detection solutions like XCMS but can be trained on a lab’s own reference data to improve accuracy for its specific methods.

- Inventory tracking: Some lab inventory management software now offers forecasting powered by machine learning, giving researchers advance notice of when supplies may run out and recommending reorder times based on supplier lead times.

- Experiment design help: Other ML-driven programs assist with experiment design by recommending parameters for different types of tests.
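
Vendors generally don’t publish how their inventory forecasting works, so the following is only a hypothetical sketch of the idea: fit a least-squares trend line to recent stock counts, estimate when the line reaches zero, and subtract the supplier lead time to get the latest reorder day. The function names and the usage history are invented; production tools likely use richer models that account for seasonality or project-driven usage.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def reorder_day(days: list[float], stock: list[float], lead_time_days: float) -> tuple[float, float]:
    """Return (predicted stock-out day, latest day to reorder) from a linear usage trend."""
    slope, intercept = fit_line(days, stock)
    if slope >= 0:
        raise ValueError("stock is not decreasing; no stock-out predicted")
    stockout = -intercept / slope  # day on which the fitted line crosses zero
    return stockout, stockout - lead_time_days


# Hypothetical history: boxes of pipette tips counted every 7 days.
days = [0, 7, 14, 21]
stock = [100, 86, 72, 58]
print(reorder_day(days, stock, lead_time_days=10))  # (50.0, 40.0)
```

The model “learns” only a usage rate from past data, but that is enough to turn a stock count into advance notice tied to a supplier’s lead time.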

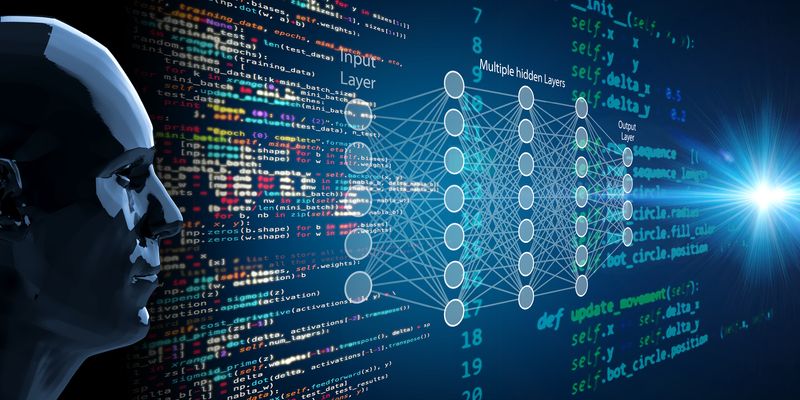

Defining deep learning

Deep learning is a subset of ML that relies on layered neural networks to identify patterns in data. These layers—each made up of interconnected “neurons” that loosely mimic the human brain—allow some DL models to learn increasingly abstract features from raw inputs, such as images or text.

This architecture is what sets DL apart from traditional ML approaches, which require humans to manually define which features the model should focus on. Because DL models can automatically extract features from raw data, they are especially well-suited to tasks involving unstructured or highly complex data.
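
The layered structure can be illustrated with a small feed-forward network. In this hypothetical sketch, each layer computes weighted sums of its inputs, hidden layers pass those sums through a nonlinearity (ReLU), and the output of one layer becomes the input to the next. The weights are fixed, made-up numbers; in a real DL model they would be learned from data during training, which is how the network arrives at its own features instead of relying on hand-designed ones. Training is omitted to keep the example short.

```python
Vector = list[float]
Matrix = list[list[float]]


def relu(v: Vector) -> Vector:
    """Rectified linear unit: keep positive values, zero out negatives."""
    return [max(0.0, x) for x in v]


def dense(inputs: Vector, weights: Matrix, biases: Vector) -> Vector:
    """One fully connected layer: each output neuron is a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]


def forward(inputs: Vector, layers: list[tuple[Matrix, Vector]]) -> Vector:
    """Pass raw inputs through each layer in turn; hidden layers use ReLU."""
    activation = inputs
    for i, (weights, biases) in enumerate(layers):
        activation = dense(activation, weights, biases)
        if i < len(layers) - 1:  # no nonlinearity on the final output layer
            activation = relu(activation)
    return activation


# Hypothetical network: 4 raw inputs -> 3 hidden neurons -> 2 hidden neurons -> 1 output.
layers = [
    ([[0.5, -0.2, 0.1, 0.0], [0.3, 0.8, -0.5, 0.2], [-0.6, 0.1, 0.4, 0.9]], [0.0, 0.1, -0.1]),
    ([[1.0, -1.0, 0.5], [0.2, 0.4, 0.6]], [0.0, 0.0]),
    ([[0.7, -0.3]], [0.05]),
]
raw_pixels = [0.9, 0.1, 0.4, 0.7]
print(forward(raw_pixels, layers))
```

Each hidden layer re-describes the previous layer’s output, so deeper layers can represent combinations of simpler patterns; no step here asks a person to define features.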

For lab managers, this means DL tools can offer more powerful and flexible solutions than traditional ML systems, but they come with tradeoffs. DL typically requires significantly more training data and computational power, often leveraging GPUs or other specialized hardware. In exchange, for tasks involving images, text, or other complex data, DL can deliver capabilities at a scale and accuracy that traditional ML often cannot match.
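
One way to see why DL needs more computing power is to count learnable parameters, each of which must be adjusted during training. A fully connected layer with n_in inputs and n_out outputs has n_in × n_out weights plus n_out biases. The layer sizes below are illustrative assumptions: a small network for 28 × 28-pixel images with 10 output classes, compared with a logistic-regression-style model on 20 hand-extracted features.

```python
def dense_param_count(layer_sizes: list[int]) -> int:
    """Weights plus biases for a stack of fully connected layers."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


small_ml_model = dense_param_count([20, 1])  # 20 hand-crafted features -> 1 output
small_dl_model = dense_param_count([784, 512, 512, 10])  # raw 28x28 pixels -> 2 hidden layers -> 10 classes
print(small_ml_model)  # 21
print(small_dl_model)  # 669706
```

Even this modest network has roughly 30,000 times as many parameters as the feature-based model, and modern DL models such as large language models are far larger still.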

Lab use cases for DL

- Large language models: Now the flagship example of DL, large-language-model-based applications like ChatGPT, Google Gemini, and Claude from Anthropic are trained on broad, general-purpose datasets, so people from nearly any industry can use them. Labs use them for a variety of tasks, including summarizing meetings and writing code.

- Organoid analysis: In the last few years, DL has been applied to organoid image analysis, for example to detect, segment, and track organoids in microscopy images, enabling fast, accurate automated analyses.

- Protein folding: AlphaFold and the open-source model Boltz use DL to predict protein structures and biomolecular interactions, enabling faster innovation in early-stage drug discovery.

Table: Comparing AI, machine learning, and deep learning in the lab

| | AI | ML | DL |
|---|---|---|---|
| Input | Rules or data | Labeled data | Raw data (images, text, etc.) |
| Learning method | Pre-programmed or reactive | Learns patterns via training on extracted features | Learns patterns and features via layered neural networks |
| Human involvement | High (rules must be defined) | Medium (features must be extracted manually) | Low (extracts features autonomously) |
| Complexity | Ranges from simple rules to complex learning systems | More adaptable than rule-based AI | Most adaptable; loosely inspired by the human brain |
| Example | IF/THEN logic in equipment scheduling | Email spam filters trained on labeled sets of emails | ChatGPT, AlphaFold, image classifiers |

Buzzwords like "AI-powered" get thrown around often, but knowing what's under the hood—rule-based logic, traditional ML, or deep learning—can help you assess a tool’s true value.