- Systematic Review

- Open access

- Published:

BMC Musculoskeletal Disorders volume 26, Article number: 682 (2025)

Abstract

Background

Convolutional neural networks (CNNs), a class of artificial intelligence (AI) models, have emerged as a highly promising tool for diagnosis from medical images. AI-assisted diagnosis holds particular potential for orthopedic and emergency department physicians, improving diagnostic efficiency and the overall patient experience. This systematic review and meta-analysis aims to assess the application of AI to the diagnosis of facial fractures and to evaluate its diagnostic performance.

Methods

This study adhered to the guidelines of the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) and PRISMA-Diagnostic Test Accuracy (PRISMA-DTA). A comprehensive literature search was conducted in the PubMed, Cochrane Library, and Web of Science databases to identify original articles published up to December 2024. The risk of bias and applicability of the included studies were assessed using the QUADAS-2 tool. The results were analyzed using a Summary Receiver Operating Characteristic (SROC) curve.

Results

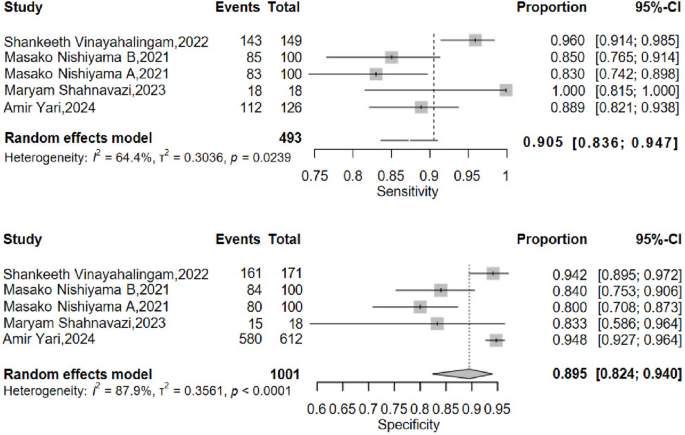

A total of 16 studies were included in the analysis, with contingency tables extracted from 11 of them. The pooled sensitivity was 0.889 (95% CI: 0.844–0.922), and the pooled specificity was 0.888 (95% CI: 0.834–0.926). The area under the Summary Receiver Operating Characteristic (SROC) curve was 0.911. In the subgroup analysis of nasal and mandibular fractures, the pooled sensitivity for nasal fractures was 0.851 (95% CI: 0.806–0.887), and the pooled specificity was 0.883 (95% CI: 0.862–0.902). For mandibular fractures, the pooled sensitivity was 0.905 (95% CI: 0.836–0.947), and the pooled specificity was 0.895 (95% CI: 0.824–0.940).

Conclusions

AI can be developed as an auxiliary tool to assist clinicians in diagnosing facial fractures. The results demonstrate high overall sensitivity and specificity, along with a robust performance reflected by the high area under the SROC curve.

Clinical trial number

This study was prospectively registered on PROSPERO (ID: CRD42024618650; created 10 Dec 2024): https://www.crd.york.ac.uk/PROSPERO/view/CRD42024618650.

Background

When examining the role of artificial intelligence (AI) in the imaging diagnosis of facial fractures, it is essential to first recognize their clinical significance. Facial fractures not only alter patients’ appearances but can also impair critical functions such as mastication, vision, and hearing [1,2,3]. Consequently, timely and accurate diagnosis is vital for effective treatment planning.

Maxillofacial fractures represent a prevalent form of trauma with diverse etiologies spanning multiple facets of daily life [4]. Research indicates that the primary causes include motor vehicle accidents, falls (including from heights), interpersonal violence, sports injuries, occupational mishaps, iatrogenic injuries, pathological fractures, and other miscellaneous factors [5,6,7,8]. Across all age groups, motor vehicle accidents predominate, while falls are notably frequent among the elderly and children [9,10,11].

Advancements in medical imaging, particularly computed tomography (CT) and magnetic resonance imaging (MRI), have enabled clinicians to obtain high-resolution images of facial bones, enhancing diagnostic precision. However, the intricate anatomy of the face poses challenges, as even seasoned radiologists may misinterpret findings or overlook subtle fractures [12].

In recent years, AI has made remarkable strides in medical imaging, particularly in fracture detection. AI-assisted diagnostic systems use deep learning algorithms to analyze imaging data autonomously, improving both diagnostic efficiency and accuracy [13]. Studies suggest that AI's performance in fracture detection rivals that of clinical practitioners, positioning it as a potential adjunct in future clinical workflows [14]. The promise of AI in facial fracture diagnosis is particularly compelling. By training on extensive datasets of facial fracture images, AI systems can learn characteristic fracture patterns and support clinicians in decision-making. Furthermore, techniques such as image enhancement and segmentation improve the visualization of fracture sites, facilitating the detection of subtle fracture lines and bone fragments [12, 15].

In clinical imaging diagnostics, traditional approaches rely predominantly on the expertise of specialists, who draw on their experience and knowledge to examine X-rays, CT, and other imaging modalities and evaluate fractures. However, prolonged image analysis can lead to physician fatigue, which may cause subtle fractures to be missed [6]. Moreover, diagnostic speed depends on image complexity, and in complex fractures, diagnostic discrepancies among doctors are not rare [12, 14]. Artificial intelligence, by contrast, offers distinct advantages: using advanced deep-learning algorithms, it can rapidly process large amounts of imaging data. This is particularly valuable in trauma emergencies, where AI-assisted predictions can support clinical decision-making within a critical time window.

Although artificial intelligence excels at image analysis, it lacks the ability to assess a patient's overall clinical situation comprehensively. Integrating the two has therefore become an inevitable trend. In practice, AI uses its computing power for initial screening and provides accurate references for doctors, who then refine the diagnosis based on the AI-generated results, drawing on their clinical expertise and a comprehensive understanding of patient-related data.

Despite its potential, the integration of AI into fracture detection faces several hurdles. Training robust AI models requires vast quantities of high-quality annotated data, yet the collection and annotation of medical imaging datasets are often time-intensive and costly [6, 16]. Additionally, the interpretability of AI models and their safety in clinical settings remain areas necessitating further investigation and validation. These challenges underscore the research imperative for AI-assisted facial fracture diagnosis: to enhance diagnostic accuracy and efficiency. Future efforts must prioritize the development of more effective and precise AI algorithms while ensuring their safety and reliability in clinical practice, thereby addressing current limitations and advancing their utility in medical imaging [17].

This review seeks to provide a comprehensive analysis of the effectiveness and precision of existing AI systems in this domain. By doing so, it aims to offer valuable insights for radiologists, emergency physicians, orthopedic specialists, and future investigators. Through this rigorous analysis, we intend to establish a scientific foundation for the clinical deployment of AI in detecting head and facial fractures and to delineate directions for subsequent research.

Methods

Study design and reporting guidelines

This systematic review and meta-analysis adhered to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines and the PRISMA-Diagnostic Test Accuracy (PRISMA-DTA) extension. All phases of the review process were conducted independently and in duplicate by two reviewers (Jiangyi Ju and Zhen Qu), each with one year of experience in applying artificial intelligence (AI) to medical imaging diagnosis. These phases were title and abstract screening, full-text evaluation, data extraction, assessment of adherence to reporting guidelines, risk of bias analysis, and evaluation of applicability. Discrepancies between reviewers were resolved through discussion with a third independent reviewer (LiHua Peng), who has two years of experience in AI applications in medical imaging diagnosis and who facilitated a thorough evaluation of bias and applicability to ensure a balanced and robust synthesis of the study findings. Of the 126 records remaining after duplicate removal, Jiangyi Ju and Zhen Qu each selected 16 articles. One article included by Jiangyi Ju was not included by Zhen Qu, and one article included by Zhen Qu was not included by Jiangyi Ju. Inter-rater reliability for study eligibility was evaluated using Cohen's kappa coefficient. For the screening of included studies, kappa was 0.93, indicating very high agreement. Finally, under the coordination of LiHua Peng, 16 articles were identified for analysis.

This study systematically reviews and meta-analyzes the literature on AI applications in facial fracture detection, covering studies published up to December 1, 2024. The primary objective is to assess the current capabilities of AI technologies in identifying head and facial fractures. To do so, it evaluates key performance metrics in detail, including accuracy, sensitivity, specificity, and the area under the curve (AUC). Additionally, the study appraises the risk of bias reported in the literature and evaluates the practical applicability of AI technologies.
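The reported kappa can be reproduced with a short calculation. The sketch below assumes a 2×2 screening table reconstructed from the description above. In that table, 15 of the 126 deduplicated records were included by both reviewers, 1 was included only by Jiangyi Ju, 1 was included only by Zhen Qu, and 109 were excluded by both. The reconstruction and the helper name `cohens_kappa` are illustrative assumptions, not the reviewers' actual worksheet.

```python
def cohens_kappa(both_yes: int, a_only: int, b_only: int, both_no: int) -> float:
    """Cohen's kappa for two raters making a binary include/exclude decision.

    both_yes: both raters included; a_only: only rater A included;
    b_only: only rater B included; both_no: both raters excluded.
    """
    n = both_yes + a_only + b_only + both_no
    # Observed agreement: share of records on which the raters agree.
    p_observed = (both_yes + both_no) / n
    # Marginal inclusion rate of each rater.
    a_yes = (both_yes + a_only) / n
    b_yes = (both_yes + b_only) / n
    # Agreement expected by chance, given those marginal rates.
    p_expected = a_yes * b_yes + (1 - a_yes) * (1 - b_yes)
    return (p_observed - p_expected) / (1 - p_expected)


# Assumed table reconstructed from the text: 15 agreed inclusions,
# 1 + 1 disagreements, 109 agreed exclusions (126 records in total).
kappa = cohens_kappa(both_yes=15, a_only=1, b_only=1, both_no=109)
print(round(kappa, 2))  # 0.93
```

Under this assumed table, observed agreement is 124/126 and chance agreement is about 0.778. That gives a kappa of about 0.928, which rounds to the reported 0.93.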

Search strategy

A comprehensive literature search was performed across the PubMed, Cochrane Library, and Web of Science databases to identify relevant studies published up to December 1, 2024, with no lower limit on publication date. The search strategy combined keywords including "facial fractures," "midface fractures," "nasal fractures," "orbital fractures," "frontal bone fractures," "mandibular fractures," "jaw fractures," "deep learning," "convolutional network," and "artificial intelligence." No restrictions were applied to the target population, study setting, or control group (detailed in Table 1).
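The exact database-specific search strings are given in Table 1. The sketch below is an illustration only of one common way to combine such keywords. Synonyms within a concept are joined with OR, and concepts are joined with AND. The two-block grouping and the helper names `or_block` and `build_query` are assumptions, not the query actually run, and database field tags are omitted.

```python
# Hypothetical grouping: the listed keywords split into two concept blocks.
FRACTURE_TERMS = [
    "facial fractures", "midface fractures", "nasal fractures",
    "orbital fractures", "frontal bone fractures", "mandibular fractures",
    "jaw fractures",
]
AI_TERMS = ["deep learning", "convolutional network", "artificial intelligence"]


def or_block(terms: list[str]) -> str:
    """Quote each phrase, join the phrases with OR, and wrap them in parentheses."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


def build_query(*blocks: list[str]) -> str:
    """Join concept blocks with AND, so a record must match every concept."""
    return " AND ".join(or_block(b) for b in blocks)


query = build_query(FRACTURE_TERMS, AI_TERMS)
print(query)
```

With this design, adding a synonym widens recall within its concept, while adding a new concept block narrows the result set.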

Eligibility criteria

Studies were included if they met the following criteria:

- (1) they classified, detected, or segmented craniofacial fractures, mainly involving the mandible, nasal bone, and midfacial bones;
- (2) the sample size was greater than 100;
- (3) they used any study design, such as prospective, cross-sectional, retrospective, ambispective, or randomized controlled trial (RCT);
- (4) they applied artificial intelligence or its subsets (deep neural networks or machine learning) as diagnostic tools;
- (5) they were original articles written in English;
- (6) they were published on or before December 1, 2024;
- (7) their results reported accuracy, sensitivity, precision, F1 score, AUC, or an equivalent metric, with complete, usable, and valid data.

Exclusion criteria were defined as follows: (1) studies with an unreasonable experimental design; (2) conference abstracts, letters to the editor, review articles, and studies focused solely on segmentation tasks or radiomics analysis; and (3) studies lacking extractable quantitative data.
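The sketch below is a minimal illustration of how the mechanically checkable criteria above could be encoded for a screening spreadsheet. The record fields (`pub_type`, `reported_metrics`, and so on) and the function name are hypothetical. Criteria that require judgment, such as fracture site relevance or an unreasonable experimental design, are deliberately left to the reviewers.

```python
from dataclasses import dataclass, field
from datetime import date

# Metrics named in inclusion criterion (7), as lowercase labels.
ACCEPTED_METRICS = {"accuracy", "sensitivity", "precision", "f1", "auc"}
# Publication types ruled out by exclusion criterion (2).
EXCLUDED_TYPES = {"conference abstract", "letter", "review"}
SEARCH_CUTOFF = date(2024, 12, 1)  # criterion (6)


@dataclass
class Record:
    """Hypothetical screening record; the field names are illustrative only."""
    pub_type: str
    language: str
    published: date
    sample_size: int
    uses_ai: bool
    reported_metrics: set[str] = field(default_factory=set)
    segmentation_or_radiomics_only: bool = False


def passes_objective_criteria(r: Record) -> bool:
    """Check only the mechanically verifiable criteria. Fracture site,
    study design quality, and data completeness still need reviewer judgment."""
    return (
        r.pub_type not in EXCLUDED_TYPES
        and r.language == "English"
        and r.published <= SEARCH_CUTOFF
        and r.sample_size > 100
        and r.uses_ai
        and not r.segmentation_or_radiomics_only
        and bool(r.reported_metrics & ACCEPTED_METRICS)
    )


example = Record(
    pub_type="original article",
    language="English",
    published=date(2023, 5, 1),
    sample_size=250,
    uses_ai=True,
    reported_metrics={"sensitivity", "auc"},
)
print(passes_objective_criteria(example))  # True
```

A record that fails this check can be excluded without discussion. A record that passes still goes to full-text review.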

Duplicate records were removed using EndNote 21 (Clarivate Analytics).

Study selection and data extraction

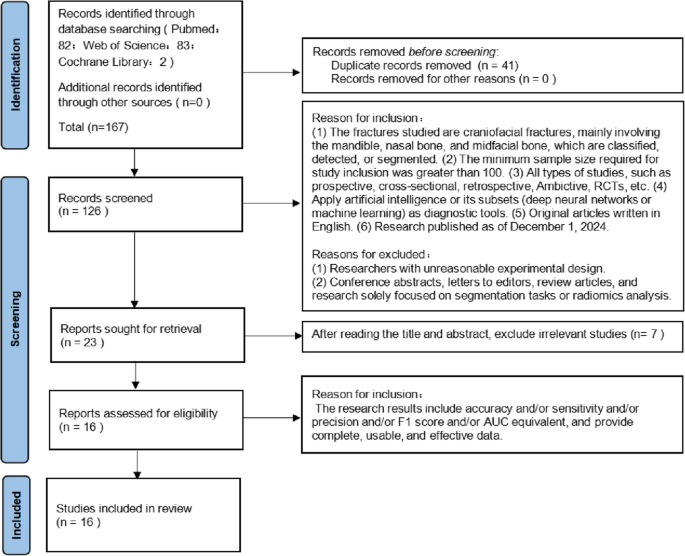

The literature search yielded 158 records. After the inclusion and exclusion criteria were applied, 16 studies were deemed eligible for inclusion. The study selection process is illustrated in a PRISMA flowchart (Fig. 1). Characteristics of the included studies are summarized in Table 2, and detailed numerical data are presented in Table 3. The included studies were published between 2021 and 2024 and varied in sample size, with test datasets ranging from 36 to 1,250 images. Data extraction was performed in duplicate by the two primary reviewers and captured key study features, including publication year, sample size, and performance metrics.

Data extraction and analysis

Data collection

Research characteristics and model performance metrics were extracted manually from the included studies without the use of automated tools. All data were systematically collected using standardized data extraction tables to ensure consistency and reproducibility across the review process.

Extracted features and performance indicators

This study primarily focuses on evaluating the performance of deep learning (DL) models in the detection and analysis of facial fractures. The following features and performance indicators were extracted from each study (detailed in Tables 2 and 3):

- Author(s) and publication year: to identify and contextualize the research;
- Architecture model: the specific DL framework employed (e.g., convolutional neural networks);
- Imaging modality: the type of imaging used (e.g., CT, MRI);
- Target condition: the specific craniofacial fracture type(s) investigated (e.g., mandibular, nasal, midface, frontal bone);
- Comparison group: the reference or control group, if applicable;
- Reference standard: the gold standard for fracture diagnosis (e.g., expert radiologist interpretation);
- Data source: the origin of the dataset (e.g., public database, clinical records);
- Performance metrics: diagnostic sensitivity (recall), specificity, accuracy, precision, and additional indicators as reported.

Definitions of performance metrics

Performance metrics were defined as follows:

-

Sensitivity: The proportion of true positives (TP) relative to the sum of true positives and false negatives (FN), calculated as TP/(TP + FN);

-

Specificity: The proportion of true negatives (TN) relative to the sum of true negatives and false positives (FP), calculated as TN/(TN + FP);

-

Precision: The proportion of true positives relative to the sum of true positives and false positives, calculated as TP/(TP + FP);

-

Accuracy: The proportion of correctly classified cases (TP + TN) relative to the total number of cases, calculated as (TP + TN)/(TP + TN + FP + FN).
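The definitions above translate directly into code. The sketch below computes them from a 2×2 contingency table. It also adds the F1 score (the harmonic mean of precision and sensitivity), which the eligibility criteria mention but which is not defined above. The counts in the example table are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Confusion:
    """2x2 contingency table for a binary fracture / no-fracture decision."""
    tp: int
    fp: int
    fn: int
    tn: int

    def sensitivity(self) -> float:  # also called recall
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        return self.tn / (self.tn + self.fp)

    def precision(self) -> float:
        return self.tp / (self.tp + self.fp)

    def accuracy(self) -> float:
        return (self.tp + self.tn) / (self.tp + self.tn + self.fp + self.fn)


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (sensitivity)."""
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts, for illustration only.
table = Confusion(tp=80, fp=10, fn=20, tn=90)
print(table.sensitivity(), table.specificity(), table.accuracy())  # 0.8 0.9 0.85

# Precision and recall reported by Vinayahalingam et al. [29].
print(round(f1_score(0.935, 0.960), 3))  # 0.947
```

The second call shows that the precision (0.935) and recall (0.960) reported by Vinayahalingam et al. give an F1 score of about 0.947, matching their reported value.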

Analysis considerations

To ensure a robust synthesis of model performance, three rules were applied:

- (1) In studies comparing multiple models on the same test dataset, only the best-performing model was included in the analysis, based on the primary metric (e.g., accuracy or AUC).
- (2) For studies evaluating fractures in several anatomical regions within a single article, data from all regions were classified and analyzed comprehensively.
- (3) When the same model was validated on different datasets, results from each dataset were included to assess consistency and generalizability.
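The three selection rules above amount to grouping results by study, anatomical region, and test dataset, then keeping the top-scoring model in each group. The sketch below illustrates this under stated assumptions. The rows, study labels, and scores are hypothetical, and `score` stands for whichever primary metric a study reports.

```python
# Hypothetical extracted rows: (study, region, test_set, model, primary_metric).
rows = [
    ("study_A", "mandible", "internal", "YOLOv4", 0.91),
    ("study_A", "mandible", "internal", "Faster R-CNN", 0.94),
    ("study_A", "mandible", "external", "Faster R-CNN", 0.88),
    ("study_A", "nasal", "internal", "Faster R-CNN", 0.80),
    ("study_B", "nasal", "internal", "ResNet-34", 0.90),
]


def best_per_test_set(rows):
    """Keep the top-scoring model for each (study, region, test set) key.

    Regions and test sets stay separate keys, so one study can contribute
    several rows; on a tie, the first row seen is kept.
    """
    best = {}
    for study, region, test_set, model, score in rows:
        key = (study, region, test_set)
        if key not in best or score > best[key][1]:
            best[key] = (model, score)
    return best


selected = best_per_test_set(rows)
for key, (model, score) in sorted(selected.items()):
    print(*key, model, score)
```

Here, the YOLOv4 row is dropped because Faster R-CNN scored higher on the same internal mandible test set. Both mandible test sets and the nasal region are retained as separate entries.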

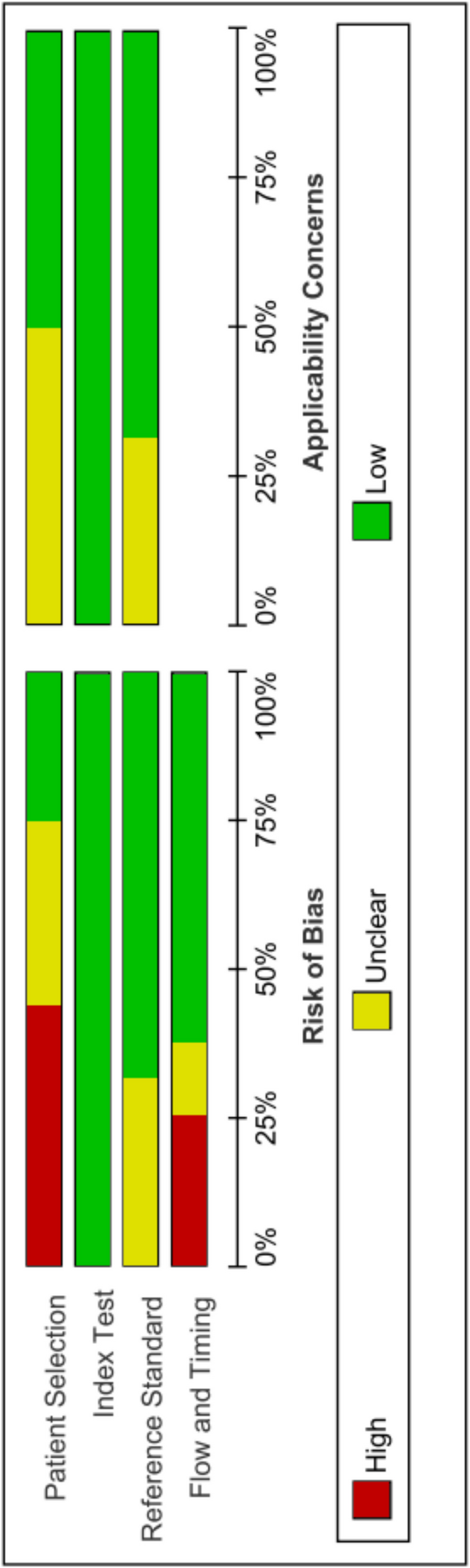

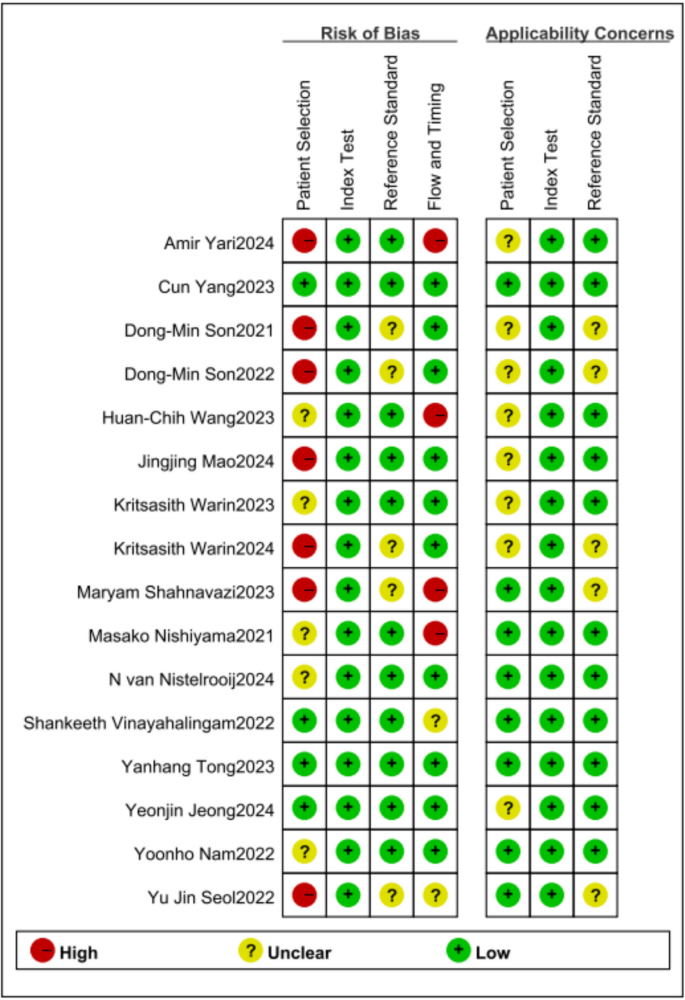

Risk of bias and applicability assessment

The Quality Assessment of Diagnostic Accuracy Studies-2 (QUADAS-2) tool was employed to evaluate the risk of bias and applicability of the included studies. This tool comprises four key domains: patient selection, index test, reference standard, and flow and timing [18, 19].

Two reviewers independently assessed each study for risk of bias and concerns regarding applicability across these domains. Each domain was rated as "low," "high," or "unclear" for risk of bias and for concerns regarding applicability. Disagreements were resolved by adjudication with a third reviewer. Quality assessment was performed using Review Manager (version 5.4.1).
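A risk-of-bias summary reports, for each QUADAS-2 domain, how many studies were judged low, high, or unclear. The sketch below shows that tally under stated assumptions: the study labels and judgements are hypothetical and are not this review's data. In QUADAS-2, applicability concerns are judged only for the first three domains, so this sketch tallies risk of bias only.

```python
from collections import Counter

DOMAINS = ("patient selection", "index test", "reference standard", "flow and timing")

# Hypothetical risk-of-bias judgements, in DOMAINS order; not the review's data.
ratings = {
    "study_A": ("low", "low", "unclear", "low"),
    "study_B": ("high", "low", "low", "low"),
    "study_C": ("low", "unclear", "low", "low"),
}


def tally_by_domain(ratings):
    """Count low / high / unclear judgements for each QUADAS-2 domain."""
    counts = {d: Counter() for d in DOMAINS}
    for judgements in ratings.values():
        for domain, level in zip(DOMAINS, judgements):
            counts[domain][level] += 1
    return counts


for domain, c in tally_by_domain(ratings).items():
    n = sum(c.values())
    shares = ", ".join(f"{lvl} {c[lvl] / n:.0%}" for lvl in ("low", "high", "unclear"))
    print(f"{domain}: {shares}")
```

The printed percentages correspond to the per-domain proportions that a risk-of-bias graph displays as stacked bars.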

Brief summary of the included studies

Amir Yari et al. developed a single-step CNN model (YOLOv5) to detect mandibular fractures on panoramic radiographs [20]. The model performed only the fracture detection step, and mandible segmentation was done manually. A single-center database was used for training, validation, and testing, and a subgroup analysis compared detection performance at different locations of the mandible. The trained model demonstrated satisfactory accuracy in detecting most types of mandibular fractures, with a sensitivity of 0.889 and a specificity of 0.948.

Cun Yang et al. developed a deep learning model for detecting nasal bone fractures on CT images [21]. The algorithm is based on the Feature Pyramid Network (FPN) and was trained and validated using a single-center database. Its diagnostic performance was compared with that of 10 radiologists and 10 physicians, and with that of 10 emergency department physicians and 10 internal medicine physicians. The readers' performance with and without AI assistance was also compared, with a washout period of at least 3 months. The AI model achieved a sensitivity of 0.8478 and a specificity of 0.8667 in distinguishing normal from fractured nasal bones. AI-assisted reading significantly improved the ability of radiologists and physicians to detect nasal bone fractures.

Dong-Min Son et al. [22] developed an algorithm based on YOLOv4, a one-stage object detector, to detect mandibular fractures on panoramic radiographs, and trained it on a single-center database. They applied SLAT and MLAT (two types of luminance adaptation transform) to preprocess the mandibular region of the panoramic radiographs and improve image quality. Six-class and two-class SLAT and MLAT modules were trained under the same conditions. The six classes correspond to the anatomical location of the mandibular fracture, whereas the two classes correspond to the form of the fracture. The two-class modules achieved an accuracy of 0.985, a sensitivity of 0.691, and an F1 score of 0.821. The six-class modules achieved an accuracy of 0.975, a sensitivity of 0.794, and an F1 score of 0.875.

Dong-Min Son et al. also combined LAT-YOLOv4 (LAT: SLAT and MLAT) with U-Net for image segmentation [23]. This combination was compared with U-Net, Mask R-CNN, YOLOv4, and LAT-YOLOv4 alone. U-Net combined with the LAT-YOLOv4 modules achieved the best detection performance, with an accuracy of 0.955, a sensitivity of 0.866, and an F1 score of 0.908.

Huan-Chih Wang et al. developed a deep learning system to automatically detect both cranial and facial bone fractures in CT images [24]. The system integrated the YOLOv4 model for one-stage fracture detection with an improved ResUNet (ResUNet++) model for cranial and facial bone segmentation. In the facial region, it achieved a sensitivity of 0.8077, a precision of 0.8750, and an F1 score of 0.8400. A subgroup analysis across cranial and facial bone regions showed relatively good performance in all regions except the nasal bone, for which the sample size was insufficient.

Jingjing Mao et al. applied CNNs to detect and classify mandibular fractures on MSCT [25]. Fractures were detected using five models: YOLOv3, YOLOv4, Faster R-CNN, CenterNet, and YOLOv5-TRS. Of these, YOLOv5-TRS obtained the best mean accuracy (96.68%). However, the article reports no metrics other than accuracy, because its main purpose was to select the optimal CNN model from those listed.

Kritsasith Warin et al. developed midfacial fracture detection models based on the two-stage detector Faster R-CNN and the one-stage detector RetinaNet [26], with the purpose of detecting mandibular fractures on CT images. Faster R-CNN achieved considerably better performance, with a sensitivity of 0.85, a precision of 0.72, an F1 score of 0.78, and an AUC of 0.80.

Kritsasith Warin et al. also evaluated CNN models for detecting and classifying maxillofacial fractures on CT maxillofacial bone window images [27]. Multiclass image classification models were built with DenseNet-169 and ResNet-152, and multiclass object detection models were built with Faster R-CNN and YOLOv5. Faster R-CNN achieved the better detection performance. For the frontal, midfacial, and mandibular areas respectively, its precision was 0.77, 0.70, and 0.86, and its recall was 0.92, 0.81, and 0.81. Its F1 score was 0.84, 0.75, and 0.83, and its AP was 0.82, 0.72, and 0.79.

Maryam Shahnavazi et al. developed a two-stage deep learning framework to detect mandibular fractures on panoramic radiographs [14], using images gathered from four sources. The mandible was first segmented with a U-Net model, and a Faster region-based convolutional neural network (Faster R-CNN) was then applied to detect fractures. The model achieved a sensitivity of 100% and a specificity of 83.33%. The study also measured human-level diagnostic performance: accuracy was 87.22 ± 8.91, sensitivity was 82.22 ± 16.39, and specificity was 92.22 ± 6.33. On average, the model outperformed human diagnosis in accuracy and sensitivity.

Masako Nishiyama et al. built their DL systems on Ubuntu OS v. 16.04.2 using a graphics processing unit with 11 GB of memory [28]. The CNN used was AlexNet, provided in the DIGITS library v. 5.0. The study evaluated deep learning models for diagnosing mandibular condyle fractures on panoramic radiographs, using datasets from two sources, and compared their internal and external validity. The best-performing model, AB, achieved an accuracy of 0.815, a sensitivity of 0.830, and a specificity of 0.800 on test dataset A. On test dataset B, it achieved an accuracy of 0.845, a sensitivity of 0.850, and a specificity of 0.840.

N. van Nistelrooij et al. developed an innovative three-stage AI method (JawFracNet) for automated detection of mandibular fractures in cone-beam computed tomography scans [6]. The three stages predict the mandible segmentation, the fracture segmentation, and the fracture classification, respectively. JawFracNet achieved a high precision of 0.978 and a sensitivity of 0.956. When compared with three clinicians, the algorithm outperformed two of them.

Shankeeth Vinayahalingam et al. applied an AI algorithm (Faster R-CNN with a Swin-Transformer) to detect mandibular fractures on panoramic radiographs [29]. Faster R-CNN contains a region proposal module that identifies the regions the classifier should consider, and here the Swin-Transformer served as the backbone. The model achieved a precision of 0.935, a recall of 0.960, and an F1 score of 0.947, with an AUC of 0.977 and an AP of 0.963.

Yanhang Tong et al. applied an AI algorithm (ResNet-34) to detect zygomatic fractures on CT images [30]. The study describes the characteristics of the included patients in detail, and its images were sourced from a single-center database. The sensitivity and specificity of the fracture detection model were both 100%. Its results did not differ significantly from the study's reference standard (manual diagnosis).

Yeonjin Jeong et al. applied an AI algorithm (YOLOX) to detect nasal bone fractures on CT images taken at a single center [12]. The sensitivity of the trained algorithm was 100% and the specificity was 77%.

Yoonho Nam et al. developed a DL model to classify nasal radiographs [31]. The images were sourced from two independent centers and divided into an internal test set and an external test set. EfficientNet-B7 served as the backbone of the DL model, with its parameters initialized from an ImageNet-pretrained model. Gradient-weighted Class Activation Mapping was applied at the last convolutional layer of the CNN for each view. On the internal test set, the DL model achieved an AUC of 0.931, a sensitivity of 0.822, a specificity of 0.896, and an accuracy of 0.859. On the external test set, it achieved an AUC of 0.857, a sensitivity of 0.831, a specificity of 0.837, and an accuracy of 0.833. The study also compared the model's AUC with that of two radiologists. The model outperformed one of them (P = 0.021) and did not differ significantly from the other (P = 0.142).

Yu Jin Seol et al. developed 3D deep learning models (3D-ResNet34 and 3D-ResNet50) for the automatic diagnosis of nasal fractures [32]. On a facial 3D CT image voxel dataset, 3D-ResNet50 achieved an AUC of 0.945, a sensitivity of 0.875, a specificity of 0.878, and an accuracy of 0.876, outperforming 3D-ResNet34.

Brief summary of characteristics of imaging tools

For patients suspected of facial fractures, emergency doctors and trauma specialists often expect to receive a definitive diagnosis. Among the facial bones, including the frontal bone, midface bone, nasal bone, and mandible, different imaging diagnostic methods are adopted for fractures in different parts [33, 34]. Conventional radiography has historically played an important role in the diagnosis of maxillofacial fractures. However, its utility is limited when it comes to visualizing overlapping bones, soft-tissue swelling, and the dislocation of facial fractures.

Of the included studies, 8 applied CT, 1 applied CBCT, 1 applied X-ray, and 6 applied panoramic radiography.

-

X-ray: X-ray radiography is currently a common auxiliary imaging examination in clinical practice. Given its low radiation exposure, cost-effectiveness, and accessibility, the plain nasal bone X-ray is still used as the initial diagnostic tool for screening simple nasal bone fractures [35].

-

CT (Computed Tomography): CT is a medical imaging technology that scans the human body layer by layer using precise X-ray beams and high-sensitivity detectors [36]. Computer processing of the scanned data generates high-resolution images of the body's internal structures in the cross-sectional, coronal, or sagittal plane. CT images represent the degree of X-ray absorption by different organs and tissues as grayscale levels. They have high density resolution and clearly display both soft tissue and skeletal structures.

-

CBCT (Cone Beam Computed Tomography): CBCT is a three-dimensional imaging technique that acquires data through cone-beam X-ray scanning and generates three-dimensional images through computer reconstruction. Unlike conventional spiral CT, CBCT acquires two-dimensional projection data from which three-dimensional images can be reconstructed directly, without stacking multiple two-dimensional slices. Moreover, CBCT imaging involves only a minimal increase in radiation dose relative to combined modern digital panoramic and cephalometric imaging [37]. The advantages of CBCT lie in its high isotropic spatial resolution and efficient X-ray utilization. A single 360-degree rotation acquires all the raw data needed for reconstruction. CBCT has also been used to assess 3D craniofacial anatomy in health and disease and to evaluate treatment outcomes [38]. Although CBCT has lower density resolution than CT, it offers higher spatial resolution and a wide dynamic range.

-

Panoramic radiography: Panoramic radiography is a special X-ray imaging technique that captures a wide two-dimensional view in a single exposure. It is commonly used in dentistry and some other medical fields, such as orthopedics, to provide a comprehensive view of an area [39]. In facial fracture diagnosis, panoramic radiography is used mainly for mandibular fractures [40]. Consequently, panoramic radiography is usually limited to isolated lesions, whereas computed tomography is the preferred tool for all other facial trauma events [41, 42].
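The CT item above notes that CT represents X-ray absorption as grayscale levels, and several included studies worked on bone-window CT images. Absorption is stored in Hounsfield units (HU), where air is about -1000 HU and water is 0 HU. A display window maps a chosen HU range onto the visible grayscale. The sketch below is a minimal, assumed implementation of linear windowing. The bone-window settings (level 500 HU, width 2000 HU) are an illustrative choice, and actual settings vary by institution and study.

```python
def apply_window(hu_values, level, width):
    """Map Hounsfield units to 8-bit grayscale (0-255) with a linear window.

    Values below level - width/2 become black (0), values above
    level + width/2 become white (255), and values in between scale linearly.
    """
    low = level - width / 2
    high = level + width / 2
    out = []
    for hu in hu_values:
        clipped = min(max(hu, low), high)  # saturate outside the window
        out.append(round((clipped - low) / (high - low) * 255))
    return out


# Assumed bone-window settings for illustration: level 500 HU, width 2000 HU.
print(apply_window([-1000, 0, 500, 1500], level=500, width=2000))  # [0, 64, 128, 255]
```

A narrower window spends the 256 gray levels on a smaller HU range, which increases contrast within that range. This is why bone and soft-tissue windows of the same scan look so different.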

Brief summary of characteristics of artificial intelligence algorithm

The following is a review of the AI algorithms included in the research.

-

YOLO: YOLO (You Only Look Once) is a real-time object detection algorithm. Unlike traditional object detection algorithms, YOLO uses a single pass of a convolutional neural network (CNN) to predict the bounding boxes and categories of all objects in an image. YOLO is fast and accurate. These qualities are crucial for clinicians, especially emergency department doctors, who need to identify and treat patients with fractures quickly [43]. The original YOLO architecture is simple, consisting of convolutional and pooling layers followed by two fully connected layers. It has since been extended into multiple versions [44,45,46,47]. The main advantages of the YOLO algorithm include: 1. high-accuracy target detection, 2. efficient resource use and short detection time, and 3. relatively easy model acquisition and deployment. The main drawbacks include: 1. limited ability to detect small or overlapping objects, and 2. the need for large training datasets [48]. In real clinical settings, a simple YOLO model may not meet detection requirements. Two approaches have proved effective [22, 23, 25]. The first adds network branches to the YOLO model. The second preprocesses the mandibular region of the image with SLAT and MLAT (two luminance adaptation transforms) to obtain better image quality.

-

U-Net: U-Net is an image segmentation model characterized by: 1. its ability to segment complex facial bones effectively, 2. its powerful feature extraction capability, and 3. its skip connections, which link feature maps in the encoder with the corresponding feature maps in the decoder and improve segmentation accuracy and the preservation of detail [49]. Compared with traditional convolutional neural networks, U-Net better handles edge information, preserves detailed features, and produces more accurate segmentation results [50]. The main drawbacks include: 1. high computational cost: although U-Net has a simple structure, it requires a large amount of computation, especially for large images, which slows training and inference; and 2. U-Net is mainly suited to small sample sizes, and overfitting remains a risk during training. Dong-Min Son et al. applied U-Net to pre-segment images before YOLO detection, thereby improving the model's detection capability [23].

-

ResNet: A major feature of ResNet (Residual Network) is its introduction of "skip connections," which allow convolutional neural networks to be trained more efficiently and significantly improve network performance. Previously, it was widely believed that the deeper the network, the higher the accuracy. However, once deeper networks were able to converge, a degradation problem was exposed: as network depth increases, accuracy saturates and then degrades rapidly [51]. Deeper networks can also suffer from vanishing or exploding gradients. The skip connections introduced by ResNet effectively mitigate these problems, and this design has profoundly influenced subsequent deep learning architectures.

-

ResUNet++: The backbone of the ResUNet++ architecture is ResUNet, an encoder-decoder network based on U-Net. Compared with U-Net, ResUNet++ adds residual blocks, squeeze-and-excitation blocks, Atrous Spatial Pyramid Pooling (ASPP), and attention blocks to further improve performance [52]. The main advantages of the ResUNet++ model include: 1. it has achieved better segmentation results than U-Net on multiple publicly available datasets [53]; and 2. it can be trained on smaller datasets and still achieve satisfactory segmentation performance. The main drawbacks include: 1. the added modules increase its parameter count, so it may not maintain high computational efficiency; and 2. it also risks overfitting when trained on small datasets.


FPN: Feature pyramids are used in recognition systems to detect objects at different scales, but computing them is slow and memory intensive. The Feature Pyramid Network (FPN) was therefore developed as a top-down architecture with lateral connections that builds high-level semantic feature maps at all scales [54]. The main advantages of FPN are: (1) it can effectively detect targets of different sizes; (2) it achieves fast detection while maintaining high accuracy; and (3) it can be combined with different backbone networks. Its main drawbacks are: (1) although FPN builds feature pyramids within a deep convolutional network, it remains computationally and memory intensive; (2) the added model complexity increases the demand for computing resources; and (3) its ability to detect very small objects is limited.
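The top-down pathway with lateral connections can be sketched on toy 1-D maps. In this hypothetical simplification, each lateral 1×1 convolution is reduced to a single scalar weight per level. Each pyramid level is the lateral projection of the backbone map plus the upsampled coarser pyramid level.

```python
# Toy 1-D feature pyramid network (FPN) top-down pathway (hypothetical example).
# Backbone maps are ordered fine -> coarse, each half the length of the previous.


def upsample2(values):
    """Nearest-neighbour upsampling by a factor of 2."""
    return [v for v in values for _ in range(2)]


def fpn_top_down(backbone, lateral_weights):
    """Build pyramid levels P_k = lateral(C_k) + upsample(P_{k+1}).

    lateral_weights stands in for the learned 1x1 lateral convolutions
    (one scalar per level here; real FPNs use multi-channel convolutions
    and a 3x3 convolution after each merge).
    """
    pyramid = [None] * len(backbone)
    pyramid[-1] = [lateral_weights[-1] * v for v in backbone[-1]]  # coarsest level
    for k in range(len(backbone) - 2, -1, -1):                   # move to finer levels
        lateral = [lateral_weights[k] * v for v in backbone[k]]
        top_down = upsample2(pyramid[k + 1])
        pyramid[k] = [a + b for a, b in zip(lateral, top_down)]  # element-wise merge
    return pyramid


c3, c4, c5 = [1, 2, 3, 4], [10, 20], [100]  # fine -> coarse backbone outputs
print(fpn_top_down([c3, c4, c5], [1, 1, 1]))
```

Every output level now carries the coarse, semantically strong signal from the top of the backbone, added to the finer spatial detail of its own level.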


Faster R-CNN: Faster R-CNN introduces a Region Proposal Network (RPN) that generates high-quality candidate regions for the subsequent object detection stage. Its main advantages are: (1) high object detection accuracy and speed; (2) wide practical use, with well-verified performance; and (3) the RPN replaces earlier proposal methods such as Selective Search and allows end-to-end training, significantly reducing detection time [56]. Its main drawbacks are: (1) it requires a large volume of training data; and (2) the model is relatively complex and may not be user-friendly for clinicians in practical applications [55].
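The RPN scores a fixed set of reference boxes ("anchors") at every feature-map position. The sketch below follows the default settings described by Ren et al.:

- three scales with box areas of 128², 256², and 512² pixels;
- aspect ratios of 1:2, 1:1, and 2:1, giving nine anchors per position;
- anchors labeled positive above an IoU of 0.7 and negative below 0.3.

The image coordinates and the ground-truth box are hypothetical. The additional rule that marks the highest-IoU anchor for each ground-truth box as positive is omitted.

```python
from itertools import product


def make_anchors(cx, cy, scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0)):
    """Anchors (x1, y1, x2, y2) centred at (cx, cy); ratio = height / width, area = scale**2."""
    anchors = []
    for scale, ratio in product(scales, ratios):
        w = scale / ratio ** 0.5
        h = scale * ratio ** 0.5
        anchors.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return anchors


def box_area(box):
    return (box[2] - box[0]) * (box[3] - box[1])


def iou(a, b):
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    return inter / (box_area(a) + box_area(b) - inter)


def label_anchor(anchor, gt_boxes, pos_thr=0.7, neg_thr=0.3):
    """1 = object, 0 = background, -1 = ignored when training the RPN."""
    best = max(iou(anchor, gt) for gt in gt_boxes)
    if best > pos_thr:
        return 1
    if best < neg_thr:
        return 0
    return -1


anchors = make_anchors(300, 300)
fracture_box = (172, 172, 428, 428)  # hypothetical 256 x 256 ground-truth box
print([label_anchor(a, [fracture_box]) for a in anchors])
```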


AlexNet: AlexNet is a deep convolutional neural network (CNN) proposed by Alex Krizhevsky et al. in 2012. Compared with earlier CNN models, AlexNet is deeper and wider, consisting of five convolutional layers and three fully connected layers, which enables it to learn more complex feature representations. By current standards, however, AlexNet is not particularly efficient. In addition, the Local Response Normalization (LRN) it used to improve generalization was later found to be unnecessary.
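For reference, the cross-channel LRN used in AlexNet divides each activation by a term that grows with the squared activations of n neighbouring channels at the same position. The minimal sketch below uses the hyperparameters reported by Krizhevsky et al. (k = 2, n = 5, α = 10⁻⁴, β = 0.75). Some framework implementations divide α by n, so their outputs differ slightly from this one.

```python
def local_response_norm(activations, k=2.0, n=5, alpha=1e-4, beta=0.75):
    """AlexNet-style cross-channel LRN at a single spatial position.

    activations[i] is the activation of channel i at that position; each value is
    divided by (k + alpha * sum of squares over n neighbouring channels) ** beta.
    """
    num_channels = len(activations)
    normalized = []
    for i, a in enumerate(activations):
        lo = max(0, i - n // 2)
        hi = min(num_channels - 1, i + n // 2)
        sq_sum = sum(activations[j] ** 2 for j in range(lo, hi + 1))
        normalized.append(a / (k + alpha * sq_sum) ** beta)
    return normalized


print(local_response_norm([1.0, 2.0, 3.0, 4.0, 5.0]))
```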


JawFracNet: JawFracNet is a three-stage neural network designed by N. van Nistelrooij et al. to process three-dimensional CBCT images. It achieved good performance in detecting mandibular fractures [6].


EfficientNet-B7: EfficientNet is an advanced convolutional neural network (CNN) architecture family. It uses a compound scaling method in which a single compound coefficient uniformly scales network depth, width, and input resolution. This scaling substantially improves both accuracy and efficiency. EfficientNet-B7, the largest model in the original family, achieved a then state-of-the-art 84.3% top-1 accuracy on ImageNet [57].
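Compound scaling sets depth d = α^φ, width w = β^φ, and resolution r = γ^φ, subject to α·β²·γ² ≈ 2. Each unit increase in the compound coefficient φ therefore roughly doubles FLOPs. The sketch below uses the constants Tan and Le reported for the EfficientNet-B0 baseline (α = 1.2, β = 1.1, γ = 1.15, base resolution 224). It is illustrative only: the published B1 to B7 settings appear to use tabulated, rounded coefficients rather than this formula exactly.

```python
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15  # depth, width, resolution constants (B0 grid search)
BASE_RESOLUTION = 224                # EfficientNet-B0 input size


def compound_scale(phi):
    """Return (depth multiplier, width multiplier, input resolution) for coefficient phi."""
    depth = ALPHA ** phi
    width = BETA ** phi
    resolution = round(BASE_RESOLUTION * GAMMA ** phi)
    return depth, width, resolution


def flops_multiplier(phi):
    """FLOPs grow roughly with depth * width**2 * resolution**2."""
    return (ALPHA * BETA ** 2 * GAMMA ** 2) ** phi


for phi in range(4):
    d, w, r = compound_scale(phi)
    print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, resolution {r}, "
          f"FLOPs x{flops_multiplier(phi):.2f}")
```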


3D-ResNet: ResNet is a deep learning model that uses skip connections so that each block only needs to learn a residual relative to its input. 3D ResNet extends the classic ResNet architecture to three-dimensional convolutions so that it can process volumetric data [58].
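Extending ResNet to three dimensions replaces each k×k kernel with a k×k×k kernel, which raises the cost of every layer. The arithmetic sketch below uses hypothetical layer sizes. It first compares the weights in a 2-D 3×3 convolution and a 3-D 3×3×3 convolution, each with 64 input and 64 output channels. It then computes the output shape of a strided 3-D convolution on a CT-like volume, using the standard formula ⌊(n + 2p − k)/s⌋ + 1.

```python
import math


def conv_params(kernel, c_in, c_out, bias=True):
    """Learnable parameters of a convolution whose kernel shape is given as a tuple."""
    return math.prod(kernel) * c_in * c_out + (c_out if bias else 0)


def conv_out_size(n, k, stride=1, padding=0):
    """Output length along one axis: floor((n + 2 * padding - k) / stride) + 1."""
    return (n + 2 * padding - k) // stride + 1


params_2d = conv_params((3, 3), 64, 64)     # one 2-D 3x3 layer
params_3d = conv_params((3, 3, 3), 64, 64)  # one 3-D 3x3x3 layer
print(params_2d, params_3d)

volume = (128, 128, 64)  # hypothetical (H, W, D) input volume
out_shape = tuple(conv_out_size(n, 3, stride=2, padding=1) for n in volume)
print(out_shape)
```

The threefold increase in weights per layer is one reason 3-D models such as 3D ResNet need more memory and training data than their 2-D counterparts.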

Statistical analysis and subgroup analysis

This systematic review and meta-analysis evaluated the performance of artificial intelligence (AI) in detecting facial fractures using contingency tables of true positives (TP), false negatives (FN), true negatives (TN), and false positives (FP), constructed for each study with available data. Of the 16 included studies, contingency tables could be extracted for 11. Masako Nishiyama et al. [28] and Yoonho Nam et al. [31] each validated the same AI model on two distinct databases. Each of these validations was entered as a separate data set, giving a total of 13 contingency tables.
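Each contingency table yields a per-study sensitivity, TP/(TP + FN), and specificity, TN/(TN + FP). The minimal Python sketch below uses hypothetical counts, not data from the included studies. It also shows why a test set containing only fracture images gives no specificity: TN + FP is zero.

```python
from typing import NamedTuple, Optional


class Table2x2(NamedTuple):
    """Per-study counts; a positive means the model flags a fracture."""
    tp: int
    fn: int
    tn: int
    fp: int


def sensitivity(t: Table2x2) -> float:
    return t.tp / (t.tp + t.fn)


def specificity(t: Table2x2) -> Optional[float]:
    negatives = t.tn + t.fp
    # Undefined when the test set contains no non-fracture images.
    return t.tn / negatives if negatives else None


mixed = Table2x2(tp=90, fn=10, tn=85, fp=15)       # hypothetical counts
fracture_only = Table2x2(tp=40, fn=5, tn=0, fp=0)  # no negative images
for name, t in (("mixed", mixed), ("fracture_only", fracture_only)):
    print(name, round(sensitivity(t), 3), specificity(t))
```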

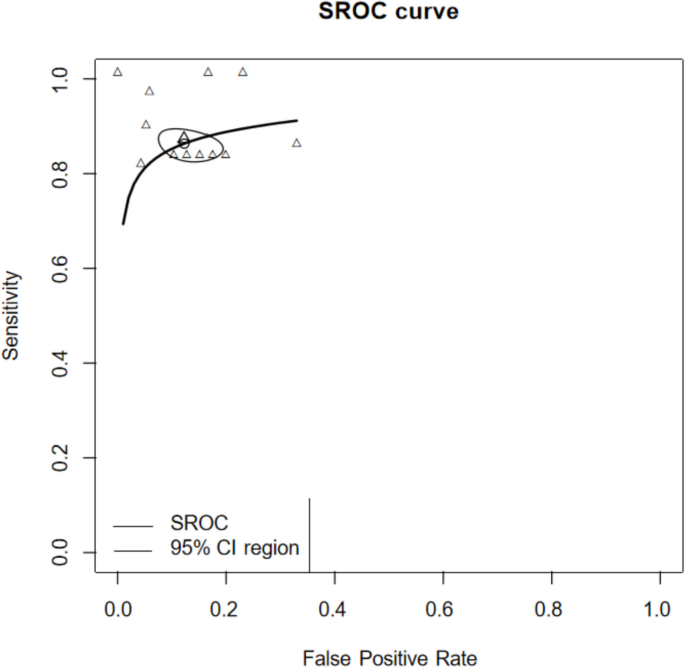

Diagnostic performance assessment

The diagnostic performance of AI models in detecting facial fractures was evaluated using the Summary Receiver Operating Characteristic (SROC) curve. This curve combines the sensitivity and specificity values of the included studies while accounting for the different diagnostic thresholds used in each [59, 60]. The area under the curve (AUC) of the fitted SROC curve was calculated as an overall measure of diagnostic accuracy; AUC is a widely accepted metric for evaluating AI classification models. A Receiver Operating Characteristic (ROC) curve plots the true positive rate (sensitivity) against the false positive rate (1 − specificity), and higher AUC values indicate better discrimination between fractured and non-fractured images [61, 62]. AUC values are interpreted as follows: excellent (0.9–1.0), good (0.8–0.9), fair (0.7–0.8), and poor (< 0.7).
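This section does not state which model was used to fit the SROC curve; bivariate or hierarchical models are common choices. As a simpler, swapped-in illustration, the sketch below fits the classic Moses–Littenberg regression, D = a + b·S, where D = logit(TPR) − logit(FPR) and S = logit(TPR) + logit(FPR). It converts the fit into an SROC curve, integrates the AUC numerically, and applies the interpretation bands given above. The three contingency tables are hypothetical.

```python
import math


def logit(p):
    return math.log(p / (1 - p))


def expit(x):
    return 1 / (1 + math.exp(-x))


def moses_littenberg(tables):
    """Fit D = a + b*S by ordinary least squares; tables are (tp, fn, tn, fp)."""
    d_vals, s_vals = [], []
    for tp, fn, tn, fp in tables:
        tpr = (tp + 0.5) / (tp + fn + 1)        # 0.5 continuity correction
        fpr = (fp + 0.5) / (fp + tn + 1)
        d_vals.append(logit(tpr) - logit(fpr))  # log diagnostic odds ratio
        s_vals.append(logit(tpr) + logit(fpr))  # proxy for the positivity threshold
    n = len(tables)
    s_mean, d_mean = sum(s_vals) / n, sum(d_vals) / n
    sxx = sum((s - s_mean) ** 2 for s in s_vals)
    sxy = sum((s - s_mean) * (d - d_mean) for s, d in zip(s_vals, d_vals))
    b = sxy / sxx
    return d_mean - b * s_mean, b


def sroc_tpr(fpr, a, b):
    """SROC curve: logit(TPR) = a / (1 - b) + (1 + b) / (1 - b) * logit(FPR)."""
    return expit(a / (1 - b) + (1 + b) / (1 - b) * logit(fpr))


def sroc_auc(a, b, steps=10_000):
    """Area under the SROC curve by the midpoint rule (avoids logit(0))."""
    return sum(sroc_tpr((i + 0.5) / steps, a, b) for i in range(steps)) / steps


def grade_auc(auc):
    if auc >= 0.9:
        return "excellent"
    if auc >= 0.8:
        return "good"
    if auc >= 0.7:
        return "fair"
    return "poor"


tables = [(90, 10, 85, 15), (80, 20, 95, 5), (95, 5, 70, 30)]  # hypothetical
a, b = moses_littenberg(tables)
auc = sroc_auc(a, b)
print(round(auc, 3), grade_auc(auc))
```

Moses–Littenberg ignores between-study variance and the correlation between sensitivity and specificity. This is why bivariate models are generally preferred for formal pooled estimates.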

Of the 16 included studies, contingency tables comprising true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN) were extracted for 11 studies. Forest plots and SROC curves were generated based on these data to summarize diagnostic performance. However, five studies [6, 22, 23, 25, 27] did not include true negative samples in their test datasets, as these consisted solely of fracture images. Consequently, contingency tables could not be constructed for these studies, precluding their inclusion in the SROC analysis.

Results

Study characteristics are summarized in Table 2, numerical performance metrics in Table 3, and the extracted contingency data (TP, TN, FP, FN) in Table 4.

During study screening, the only discrepancy between the two researchers was that Jiangyi Ju favored including Jingjing Mao et al. [25], whereas Zhen Qu favored including Daiki Morita et al. [63]. The kappa coefficient was 0.93, indicating almost perfect agreement. The methodological quality of the included studies was assessed using the QUADAS-2 tool, as shown in Figs. 2 and 3. Nine of the 16 included studies showed a high risk of bias and/or significant concerns regarding applicability (Fig. 3). Publication bias was assessed as shown in Fig. 4. The test yielded p = 0.32 (> 0.05), suggesting no significant evidence of publication bias. All sensitivity and specificity analyses were performed in R (version 4.2.3), and publication bias was assessed in Stata (version 18.0).
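Cohen's kappa corrects raw agreement for the agreement expected by chance: κ = (p_o − p_e)/(1 − p_e). The sketch below computes it for hypothetical include/exclude decisions from two reviewers, not the review's actual screening data. It then maps the result onto the widely used Landis and Koch benchmarks, under which values above 0.80 are labeled almost perfect.

```python
def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters' categorical decisions on the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty rating lists of equal length")
    n = len(rater_a)
    categories = set(rater_a) | set(rater_b)
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    p_expected = sum((rater_a.count(c) / n) * (rater_b.count(c) / n) for c in categories)
    return (p_observed - p_expected) / (1 - p_expected)


def landis_koch(kappa):
    """Verbal label from the Landis and Koch (1977) benchmarks."""
    if kappa < 0:
        return "poor"
    for upper, label in ((0.20, "slight"), (0.40, "fair"), (0.60, "moderate"),
                         (0.80, "substantial")):
        if kappa <= upper:
            return label
    return "almost perfect"


# Hypothetical full-text decisions (1 = include, 0 = exclude) for 20 records.
reviewer_1 = [1] * 10 + [0] * 10
reviewer_2 = [1] * 9 + [0, 1] + [0] * 9
kappa = cohens_kappa(reviewer_1, reviewer_2)
print(round(kappa, 2), landis_koch(kappa))
```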

Sensitivity and specificity analyses were conducted to evaluate the diagnostic performance of deep learning (DL) models in detecting facial fractures (Fig. 5). The pooled sensitivity was 0.889 (95% CI: 0.844–0.922), and the pooled specificity was 0.888 (95% CI: 0.834–0.926). The Summary Receiver Operating Characteristic (SROC) curve for DL-based diagnosis of facial fractures is presented in Fig. 6, with an area under the curve (AUC) of 0.911, indicating excellent overall diagnostic accuracy.

Subgroup analyses of sensitivity and specificity were performed for mandibular and nasal bone fractures, with results depicted in Figs. 7 and 8. For nasal fractures, the pooled sensitivity was 0.851 (95% CI: 0.806–0.887), and the pooled specificity was 0.883 (95% CI: 0.862–0.902). For mandibular fractures, the pooled sensitivity was 0.905 (95% CI: 0.836–0.947), and the pooled specificity was 0.895 (95% CI: 0.824–0.940).

Across the 11 pooled studies, sensitivity showed no obvious heterogeneity, whereas specificity showed marked statistical heterogeneity. To account for this, a random-effects model was used together with subgroup stratification. In the subgroup analysis, heterogeneity was acceptable for nasal fracture detection but notably higher for mandibular fracture detection.
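To make the random-effects idea concrete, the sketch below pools logit-transformed sensitivities with the DerSimonian–Laird estimator. It reports the between-study variance τ² and the I² heterogeneity statistic. This is a simplified, univariate stand-in. The section does not say which model was fitted, and bivariate models, which pool sensitivity and specificity jointly, are usually preferred for diagnostic accuracy. The study counts are hypothetical, and the estimator needs at least two studies.

```python
import math


def logit_with_variance(events, total):
    """Logit of a proportion and its approximate variance (0.5 continuity correction)."""
    e = events + 0.5
    non_e = total - events + 0.5
    return math.log(e / non_e), 1 / e + 1 / non_e


def dersimonian_laird(effects, variances):
    """Random-effects pooled estimate, its standard error, tau^2 and I^2."""
    w = [1 / v for v in variances]
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum(w)
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))  # Cochran's Q
    df = len(effects) - 1
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c)
    w_re = [1 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    se = math.sqrt(1 / sum(w_re))
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0
    return pooled, se, tau2, i2


def expit(x):
    return 1 / (1 + math.exp(-x))


studies = [(90, 100), (60, 100), (98, 100)]  # hypothetical (true positives, fractures)
effects, variances = zip(*(logit_with_variance(tp, n) for tp, n in studies))
pooled, se, tau2, i2 = dersimonian_laird(effects, variances)
low, high = expit(pooled - 1.96 * se), expit(pooled + 1.96 * se)
print(f"pooled sensitivity {expit(pooled):.3f} (95% CI {low:.3f}-{high:.3f}), I2 = {i2:.0%}")
```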

Discussion

Overview of findings

This systematic review and meta-analysis aimed to assess the performance of artificial intelligence (AI) algorithms, particularly convolutional neural networks (CNNs), in detecting facial fractures on imaging. The results demonstrate that AI has high diagnostic accuracy, with a pooled sensitivity of 0.889 (95% CI: 0.844–0.922), a pooled specificity of 0.888 (95% CI: 0.834–0.926), and an area under the Summary Receiver Operating Characteristic (SROC) curve (AUC) of 0.911. These findings suggest that AI, leveraging its image recognition capabilities, can effectively distinguish fracture from non-fracture images after training on large datasets [13]. Compared with human readers, AI can offer advantages in detecting subtle fracture lines and expediting diagnosis, which particularly benefits junior clinicians and emergency department physicians. In the subgroup analysis, mandibular fracture detection performed better than nasal fracture detection, with a pooled sensitivity of 0.905 (95% CI: 0.836–0.947) and a pooled specificity of 0.895 (95% CI: 0.824–0.940). Yanhang Tong et al. [30] reported the best results, using an AI model (ResNet-34) to detect zygomatic fractures on CT images with a sensitivity and specificity of 100%.

Clinical implications and challenges

AI algorithms hold significant promise for fracture detection and prognostic planning. However, their clinical application faces several challenges. First, despite achieving or surpassing clinician-level performance, AI is prone to false positives and negatives, necessitating secondary review by clinicians. This reliance risks over-dependence, potentially undermining independent clinical judgment. Second, AI models often perform better on internal test sets than external ones, raising concerns about generalizability [64]. In this review, 11 of 16 studies relied solely on single-center databases, achieving robust internal performance but lacking external validation. Third, training AI requires extensive imaging datasets, yet single-center studies struggle to provide sufficient facial fracture images. Fourth, reference standards in most studies depend on expert consensus, where multiple clinicians annotate images, followed by expert review. While reasonable, this approach does not ensure absolute accuracy. Future studies could enhance reference standards by incorporating postoperative or clinically confirmed diagnoses, or, for X-ray-based studies, integrating CT findings [65].
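The need for secondary review can be quantified with Bayes' theorem. Assume, hypothetically, that the pooled sensitivity (0.889) and specificity (0.888) held in a given clinical setting. The positive and negative predictive values would then depend strongly on how common fractures are among the images reviewed. The prevalence values below are illustrative assumptions, not data from the review.

```python
def predictive_values(sens, spec, prevalence):
    """Positive and negative predictive values from sensitivity, specificity and prevalence."""
    tp = sens * prevalence
    fp = (1 - spec) * (1 - prevalence)
    fn = (1 - sens) * prevalence
    tn = spec * (1 - prevalence)
    return tp / (tp + fp), tn / (tn + fn)


for prevalence in (0.05, 0.10, 0.30):  # hypothetical fracture prevalence
    ppv, npv = predictive_values(0.889, 0.888, prevalence)
    print(f"prevalence {prevalence:.0%}: PPV {ppv:.2f}, NPV {npv:.2f}")
```

At an assumed 10% prevalence, roughly half of the images flagged as fractured would be false positives, while negative calls would remain highly reliable.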

Additional studies and methodological considerations

During the literature search, we also identified a study by Daiki Morita et al. [63] that applied the Single Shot MultiBox Detector (SSD) and YOLOv8 to detect midfacial fractures on CT images. For SSD and YOLOv8, respectively, the reported precision was 0.872 and 0.871, recall 0.823 and 0.775, F1 score 0.846 and 0.820, and average precision 0.899 and 0.769. However, the study's test dataset (883 slices from 20 CT scans) included an excessive number of slices per patient without specifying their distribution across imaging planes (e.g., axial, coronal, sagittal). This approach risks bias, because CT-based fracture detection should ideally limit the number of slices to approximately 10 per patient after preprocessing (i.e., a single-digit number per plane) [66]. Similarly, Xuebing Wang et al. [67] used ResNet to detect mandibular fractures on CT images and analyzed performance by anatomical region. Because its overall data were incomplete and its risk of bias was high, this study was also excluded from our analysis.
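The reported F1 scores can be checked against precision and recall, because F1 is their harmonic mean, 2PR/(P + R). This short check uses only the figures quoted above.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


reported = {"SSD": (0.872, 0.823, 0.846), "YOLOv8": (0.871, 0.775, 0.820)}
for model, (precision, recall, f1_reported) in reported.items():
    print(model, round(f1_score(precision, recall), 3), "reported:", f1_reported)
```

Both recomputed values agree with the reported F1 scores to within the rounding of the published precision and recall.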

In orthopedics, artificial intelligence has emerged as a promising tool for detecting bone fractures across diverse anatomical sites. CNN models designed to assist in diagnosing femur and tibia fractures have demonstrated strong diagnostic performance, using large datasets of radiographic and CT images to accurately classify fracture patterns [68,69,70]. Yet diagnostic challenges persist for small and anatomically complex fractures, such as nasal bone injuries and midfacial microfractures, where suboptimal image quality and the intricate structure of these regions create unique obstacles. Long bone fractures are often imaged in relatively straightforward anatomical contexts. By contrast, craniofacial fractures lie in compact spaces and are frequently obscured by overlapping bony structures and soft tissue artifacts, which limits the effectiveness of even sophisticated deep learning frameworks. We believe that increasing dataset size alongside stringent quality assurance could address these limitations. Future studies should focus on systematically curating annotated imaging datasets that prioritize underrepresented fracture subtypes in complex anatomical areas. Standardized protocols for image acquisition and labeling, coupled with techniques that enhance spatial resolution and minimize imaging noise, may improve model generalization for these challenging cases. By tackling these data-related bottlenecks, AI-driven diagnostic systems could achieve more uniform performance across all fracture presentations, ultimately supporting more reliable clinical decision-making.

Artificial intelligence has been used extensively across medical disciplines, notably in neuro-oncology for detecting brain tumors on MRI and CT [71, 72]. CNN-based frameworks can analyze the intricate anatomy of the brain and identify neoplastic lesions by discerning subtle tissue contrasts and structural abnormalities. As in the diagnosis of facial fractures, these models are typically evaluated using sensitivity, specificity, and accuracy, and high-quality datasets are crucial for training them.

Regarding AI-assisted imaging diagnosis of facial fractures, Mahmood Dashti et al. [73] previously reviewed research on the diagnosis of mandibular fractures on panoramic radiographs. Their review included five studies, all of which applied convolutional neural network (CNN) algorithms to panoramic radiographs. It reported high sensitivity (0.971) and specificity (0.813) for fracture detection. However, the effectiveness of these algorithms was constrained by the small size and limited scope of the available datasets, and most of the included studies had a high risk of bias. By contrast, our study analyzed fractures across multiple facial regions, a research direction that has seldom been explored.

Limitations

This study has several limitations. First, we included only English-language studies from PubMed, the Cochrane Library, and Web of Science, and may therefore have missed relevant research from other sources or in other languages. Although we repeatedly refined and expanded our search strategy, applying it across different databases may still have missed some studies. Second, contingency tables could be extracted from only 11 of the 16 studies, which limited the scope of our quantitative synthesis. Third, many included studies had methodological flaws: 9 of 16 were classified as having a high risk of bias and/or applicability concerns on the QUADAS-2 tool. Such biases may inflate reported algorithm performance and reduce the reliability of our meta-analytic conclusions. Fourth, none of the studies evaluated its algorithm in a clinical setting, where factors such as low-quality images or the presence of casts could affect diagnosis. Finally, most of the included studies were retrospective. We hope that future research in this field will adopt more rigorous study designs.

Panoramic radiographs are widely used in clinical practice to screen for lesions and conditions of the maxillofacial region and to view the entire jaw [28]. Because of their particular method of image acquisition, anatomical representation, and radiographic artifacts, this form of facial skeletal imaging is prone to reporting errors [74]. Some regions are difficult to interpret because of the intricate relationships between anatomical structures and the layers within panoramic images [28]. Panoramic radiography is therefore usually used for preliminary screening of disease or of some acute trauma. This is also an important reason for applying AI-assisted diagnosis in this field: AI can identify image features that are difficult to detect with the naked eye and thereby support more accurate diagnoses. Six of the 16 included studies used panoramic radiographs, and the inherent limitations of this modality may have introduced bias into our analysis.

We attribute the heterogeneity in our results mainly to the following factors: (1) differences in reporting quality across studies; (2) demographic differences in age, sex, and ethnic background among study populations; (3) the use of different imaging modalities; and (4) differences in CNN architectures between the AI systems, which limit direct comparison. Fig. 3 shows that the studies of mandibular fracture detection in particular had a high risk of bias and applicability concerns. These issues are likely linked to the inherent technical difficulty of mandibular imaging and to insufficient methodological rigor in those studies, which underscores the need for standardized protocols and clearer reporting in future research. The conclusions of this study are therefore preliminary and should be interpreted with caution. More high-quality research on AI-assisted facial fracture detection is needed.

Future directions

AI and medical imaging diagnosis are closely intertwined. Medical imaging is an important auxiliary examination in clinical practice and must meet high standards of both accuracy and speed. AI has substantially improved the efficiency, accuracy, and accessibility of imaging diagnosis, and the demands of medical imaging in turn drive continued innovation in AI. Although AI is not yet widely used in routine clinical imaging diagnosis, its prospects are promising.

AI can serve as a valuable adjunct for clinicians in diagnosing facial fractures, but it should not disrupt established clinical workflows. Effective implementation requires clinicians to understand AI’s principles and performance, maintaining independent judgment over AI-generated results. Future research should prioritize multi-center datasets, dividing them into training, validation, internal testing, and external testing subsets to enhance algorithm applicability [65]. Additionally, standardized CT slice selection protocols and more robust reference standards could mitigate bias and improve diagnostic reliability. High-quality studies are needed to further validate AI’s role in facial fracture detection.
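One way to implement such a split is to hold out whole centers for external testing and to split the remaining data at the patient level. This ensures that no patient's images appear in more than one subset. The sketch below makes the following assumptions:

- each record carries hypothetical `patient_id` and `center` fields;
- patient IDs are unique;
- `split_by_center` is an illustrative helper, not a published tool;
- the proportions and seed are arbitrary.

```python
import random
from collections import defaultdict


def split_by_center(records, external_centers, val_frac=0.15, test_frac=0.15, seed=0):
    """Patient-level train/validation/internal-test split plus a held-out external test set."""
    splits = {"train": [], "val": [], "internal_test": [], "external_test": []}
    by_patient = defaultdict(list)
    for rec in records:
        if rec["center"] in external_centers:
            splits["external_test"].append(rec)  # never seen during development
        else:
            by_patient[rec["patient_id"]].append(rec)
    patients = sorted(by_patient)
    random.Random(seed).shuffle(patients)         # reproducible shuffle
    n_val = round(len(patients) * val_frac)
    n_test = round(len(patients) * test_frac)
    for i, pid in enumerate(patients):
        if i < n_val:
            subset = "val"
        elif i < n_val + n_test:
            subset = "internal_test"
        else:
            subset = "train"
        splits[subset].extend(by_patient[pid])    # all images of a patient stay together
    return splits


records = [{"patient_id": f"A{p}", "center": "A", "image": k} for p in range(20) for k in range(2)]
records += [{"patient_id": f"B{p}", "center": "B", "image": 0} for p in range(5)]
splits = split_by_center(records, external_centers={"B"})
print({name: len(items) for name, items in splits.items()})
```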

Conclusions

The results of this meta-analysis indicate that artificial intelligence achieves good diagnostic performance in detecting facial fractures and may become a useful diagnostic tool.

Data availability

The data used to support the findings of this study are available in the text and can be procured from the corresponding author upon request.

Abbreviations

- CNNs: Convolutional neural networks
- AI: Artificial intelligence
- PRISMA: Preferred Reporting Items for Systematic Reviews and Meta-Analyses
- PRISMA-DTA: PRISMA-Diagnostic Test Accuracy
- ROC: Receiver operating characteristic
- SROC: Summary Receiver Operating Characteristic
- CT: Computed tomography
- MRI: Magnetic resonance imaging
- AUC: Area under the curve
- DL: Deep learning
- TP: True positives
- FN: False negatives
- TN: True negatives
- FP: False positives
- QUADAS-2: Quality Assessment of Diagnostic Accuracy Studies-2
- CBCT: Cone Beam Computed Tomography
- NR: Not reported

References

Bonitz L, Wruck V, Peretti E, Abel D, Hassfeld S, Bicsák Á. Long-term evaluation of treatment protocols for isolated midfacial fractures in a German nation-wide craniomaxillofacial trauma center 2007–2017. Sci Rep. 2021;11(1): 18291. https://doi.org/10.1038/s41598-021-97858-4.

Chen Y, Weber A, Chen C. Evidence-based medicine for Midface/Orbit/Upper facial fracture repair. Facial Plast Surg. 2023;39(3):253–65. https://doi.org/10.1055/s-0043-1764290.

Datta N, Tatum SA. Reducing risks for midface and mandible fracture repair. Facial Plast Surg Clin North Am. 2023;31(2):307–14. https://doi.org/10.1016/j.fsc.2023.01.014.

Chasmar LR. Fractures of the facial skeleton: a review. Can Med Assoc J. 1969;101(13):38–42. PMID: 5364636; PMCID: PMC1946467.

Afrooz PN, Bykowski MR, James IB, Daniali LN, Clavijo-Alvarez JA. The epidemiology of mandibular fractures in the United States, Part 1: a review of 13,142 cases from the US National Trauma Data Bank. J Oral Maxillofac Surg. 2015;73(12):2361–6. https://doi.org/10.1016/j.joms.2015.04.032.

van Nistelrooij N, Schitter S, van Lierop P, Ghoul KE, König D, Hanisch M, Tel A, Xi T, Thiem DGE, Smeets R, Dubois L, Flügge T, van Ginneken B, Bergé S, Vinayahalingam S. Detecting Mandible Fractures in CBCT Scans Using a 3-Stage Neural Network. J Dent Res. 2024 Jun 24:220345241256618. https://doi.org/10.1177/00220345241256618. Epub ahead of print. PMID: 38910411.

Down KE, Boot DA, Gorman DF. Maxillofacial and associated injuries in severely traumatized patients: implications of a regional survey. Int J Oral Maxillofac Surg. 1995;24(6):409–12. https://doi.org/10.1016/s0901-5027(05)80469-2.

Crockett DM, Mungo RP, Thompson RE. Maxillofacial trauma. Pediatr Clin North Am. 1989;36(6):1471–94. https://doi.org/10.1016/s0031-3955(16)36801-8.

Kaban LB, Mulliken JB, Murray JE. Facial fractures in children: an analysis of 122 fractures in 109 patients. Plast Reconstr Surg. 1977;59(1):15–20. https://doi.org/10.1097/00006534-197701000-00002. PMID: 831236.

Zhou HH, Lv K, Yang RT, Li Z, Li ZB. Risk factor analysis and idiographic features of mandibular coronoid fractures: a retrospective case-control study. Sci Rep. 2017;7(1): 2208. https://doi.org/10.1038/s41598-017-02335-6.

Wong FK, Adams S, Coates TJ, Hudson DA. Pediatric facial fractures. J Craniofac Surg. 2016;27(1):128–30. https://doi.org/10.1097/SCS.0000000000002185.

Jeong Y, Jeong C, Sung KY, Moon G, Lim J. Development of AI-Based Diagnostic Algorithm for Nasal Bone Fracture Using Deep Learning. J Craniofac Surg. 2024 Jan-Feb 01;35(1):29–32. https://doi.org/10.1097/SCS.0000000000009856. Epub 2023 Nov 13. PMID: 38294297.

Hong N, Whittier DE, Glüer CC, Leslie WD. The potential role for artificial intelligence in fracture risk prediction. Lancet Diabetes Endocrinol. 2024;12(8):596–600. https://doi.org/10.1016/S2213-8587(24)00153-0.

Shahnavazi M, Mohamadrahimi H. The application of artificial neural networks in the detection of mandibular fractures using panoramic radiography. Dent Res J (Isfahan). 2023. https://doi.org/10.4103/1735-3327.369629.

Zhang Z, Sejdić E. Radiological images and machine learning: trends, perspectives, and prospects. Comput Biol Med. 2019;108:354–70. https://doi.org/10.1016/j.compbiomed.2019.02.017.

Thrall JH, Li X, Li Q, Cruz C, Do S, Dreyer K, Brink J. Artificial intelligence and machine learning in radiology: opportunities, challenges, pitfalls, and criteria for success. J Am Coll Radiol. 2018;15(3 Pt B):504–8. https://doi.org/10.1016/j.jacr.2017.12.026.

Guermazi A, Tannoury C, Kompel AJ, Murakami AM, Ducarouge A, Gillibert A, Li X, Tournier A, Lahoud Y, Jarraya M, Lacave E, Rahimi H, Pourchot A, Parisien RL, Merritt AC, Comeau D, Regnard NE, Hayashi D. Improving radiographic fracture recognition performance and efficiency using artificial intelligence. Radiology. 2022;302(3):627–36. https://doi.org/10.1148/radiol.210937.

Whiting PF, Rutjes AW, Westwood ME, Mallett S, Deeks JJ, Reitsma JB, Leeflang MM, Sterne JA, Bossuyt PM. QUADAS-2: a revised tool for the quality assessment of diagnostic accuracy studies. Ann Intern Med. 2011;155(8):529–36. https://doi.org/10.7326/0003-4819-155-8-201110180-00009.

Huang QX, Huang XW. QUADAS-2 tool for quality assessment in diagnostic meta-analysis. Ann Palliat Med. 2022;11(5):1844–5. https://doi.org/10.21037/apm-22-204. Epub 2022 Apr 7. PMID: 35400153.

Yari A, Fasih P, Hosseini Hooshiar M, Goodarzi A, Fattahi SF. Detection and classification of mandibular fractures in panoramic radiography using artificial intelligence. Dentomaxillofac Radiol. 2024;53(6):363–71. https://doi.org/10.1093/dmfr/twae018. PMID: 38652576; PMCID: PMC11358630.

Yang C, Yang L, Gao GD, Zong HQ, Gao D. Assessment of artificial intelligence-aided reading in the detection of nasal bone fractures. Technol Health Care. 2023;31(3):1017–25. https://doi.org/10.3233/THC-220501.

Son DM, Yoon YA, Kwon HJ, An CH, Lee SH. Automatic detection of mandibular fractures in panoramic radiographs using deep learning. Diagnostics. 2021;11(6): 933. https://doi.org/10.3390/diagnostics11060933.

Son DM, Yoon YA, Kwon HJ, Lee SH. Combined deep learning techniques for mandibular fracture diagnosis assistance. Life. 2022;12(11): 1711. https://doi.org/10.3390/life12111711.

Wang HC, Wang SC, Yan JL, Ko LW. Artificial intelligence model trained with sparse data to detect facial and cranial bone fractures from head CT. J Digit Imaging. 2023;36(4):1408–18. https://doi.org/10.1007/s10278-023-00829-6.

Mao J, Du Y, Xue J, Hu J, Mai Q, Zhou T, Zhou Z. Automated detection and classification of mandibular fractures on multislice spiral computed tomography using modified convolutional neural networks. Oral Surg Oral Med Oral Pathol Oral Radiol. 2024;138(6):803–12. https://doi.org/10.1016/j.oooo.2024.07.010.

Warin K, Vicharueang S, Jantana P, Limprasert W, Thanathornwong B, Suebnukarn S. Deep learning for midfacial fracture detection in CT images. Stud Health Technol Inform. 2024;310:1497–8. https://doi.org/10.3233/SHTI231262. PMID: 38269714.

Warin K, Limprasert W, Suebnukarn S, Paipongna T, Jantana P, Vicharueang S. Maxillofacial fracture detection and classification in computed tomography images using convolutional neural network-based models. Sci Rep. 2023;13(1): 3434. https://doi.org/10.1038/s41598-023-30640-w.

Nishiyama M, Ishibashi K, Ariji Y, Fukuda M, Nishiyama W, Umemura M, Katsumata A, Fujita H, Ariji E. Performance of deep learning models constructed using panoramic radiographs from two hospitals to diagnose fractures of the mandibular condyle. Dentomaxillofac Radiol. 2021;50(7): 20200611. https://doi.org/10.1259/dmfr.20200611.

Vinayahalingam S, van Nistelrooij N, van Ginneken B, Bressem K, Tröltzsch D, Heiland M, Flügge T, Gaudin R. Detection of mandibular fractures on panoramic radiographs using deep learning. Sci Rep. 2022;12(1):19596. https://doi.org/10.1038/s41598-022-23445-w.

Tong Y, Jie B, Wang X, Xu Z, Ding P, He Y. Is convolutional neural network accurate for automatic detection of zygomatic fractures on computed tomography? J Oral Maxillofac Surg. 2023;81(8):1011–20. https://doi.org/10.1016/j.joms.2023.04.013.

Nam Y, Choi Y, Kang J, Seo M, Heo SJ, Lee MK. Diagnosis of nasal bone fractures on plain radiographs via convolutional neural networks. Sci Rep. 2022;12(1): 21510. https://doi.org/10.1038/s41598-022-26161-7.

Seol YJ, Kim YJ, Kim YS, Cheon YW, Kim KG. A study on 3D deep learning-based automatic diagnosis of nasal fractures. Sensors. 2022;22(2): 506. https://doi.org/10.3390/s22020506.

Chukwulebe S, Hogrefe C. The diagnosis and management of facial bone fractures. Emerg Med Clin North Am. 2019;37(1):137–51. https://doi.org/10.1016/j.emc.2018.09.012.

Omami G, Branstetter BF 4th. Imaging of maxillofacial injuries. Dent Clin North Am. 2024;68(2):393–407. https://doi.org/10.1016/j.cden.2023.10.003.

Marston AP, O'Brien EK, Hamilton GS 3rd. Nasal injuries in sports. Clin Sports Med. 2017;36(2):337–53. https://doi.org/10.1016/j.csm.2016.11.004. PMID: 28314421.

Fukuda T, Yonenaga T, Miyasaka T, Kimura T, Jinzaki M, Ojiri H. CT in osteoarthritis: its clinical role and recent advances. Skeletal Radiol. 2023;52(11):2199–210. https://doi.org/10.1007/s00256-022-04217-z.

Machado GL. CBCT imaging - a boon to orthodontics. Saudi Dent J. 2015;27(1):12–21. https://doi.org/10.1016/j.sdentj.2014.08.004.

Kapila SD, Nervina JM. CBCT in orthodontics: assessment of treatment outcomes and indications for its use. Dentomaxillofac Radiol. 2015;44(1): 20140282. https://doi.org/10.1259/dmfr.20140282.

Noikura T, Shinoda K, Ando S. Image visibility of maxillo-facial fractures in conventional and panoramic radiography. Radiation dose of the combined and individual procedures. Dentomaxillofac Radiol. 1978;7(1):35–42. https://doi.org/10.1259/dmfr.1978.0005. PMID: 291553.

Reddy LV, Bhattacharjee R, Misch E, Sokoya M, Ducic Y. Dental injuries and management. Facial Plast Surg. 2019;35:607–13. https://doi.org/10.1055/s-0039-1700877.

Nardi C, Vignoli C, Pietragalla M, Tonelli P, Calistri L, Franchi L, Preda L, Colagrande S. Imaging of mandibular fractures: a pictorial review. Insights Imaging. 2020;11(1):30. https://doi.org/10.1186/s13244-020-0837-0. PMID: 32076873; PMCID: PMC7031477.

Haq I, Mazhar T, Asif RN, Ghadi YY, Ullah N, Khan MA, Al-Rasheed A. YOLO and residual network for colorectal cancer cell detection and counting. Heliyon. 2024;10(2): e24403. https://doi.org/10.1016/j.heliyon.2024.e24403. PMID: 38304780; PMCID: PMC10831604.

Aly GH, Marey M, El-Sayed SA, Tolba MF. YOLO based breast masses detection and classification in full-field digital mammograms. Comput Methods Programs Biomed. 2021;200: 105823. https://doi.org/10.1016/j.cmpb.2020.105823.

Yuan M, Zhang C, Wang Z, Liu H, Pan G, Tang H. Trainable spiking-YOLO for low-latency and high-performance object detection. Neural Netw. 2024;172: 106092. https://doi.org/10.1016/j.neunet.2023.106092.

Yao Q, Zheng X, Zhou G, Zhang J. SGR-YOLO: a method for detecting seed germination rate in wild rice. Front Plant Sci. 2024;14:1305081. https://doi.org/10.3389/fpls.2023.1305081. PMID: 38322421; PMCID: PMC10844399.

Zhou J, Ning J, Xiang Z, Yin P. Icdw-yolo: an efficient timber construction crack detection algorithm. Sensors. 2024;24(13): 4333. https://doi.org/10.3390/s24134333.

Xie H, Yuan B, Hu C, Gao Y, Wang F, Wang Y, Wang C, Chu P. SMLS-YOLO: an extremely lightweight pathological myopia instance segmentation method. Front Neurosci. 2024;18:1471089. https://doi.org/10.3389/fnins.2024.1471089. PMID: 39385849; PMCID: PMC11461473.

Pham TD, Holmes SB, Coulthard P. A review on artificial intelligence for the diagnosis of fractures in facial trauma imaging. Front Artif Intell. 2024;6:1278529. https://doi.org/10.3389/frai.2023.1278529. PMID: 38249794; PMCID: PMC10797131.

Yousef R, Khan S, Gupta G, Siddiqui T, Albahlal BM, Alajlan SA, Haq MA. U-net-based models towards optimal MR brain image segmentation. Diagnostics. 2023;13(9): 1624. https://doi.org/10.3390/diagnostics13091624.

Azad R, Aghdam EK, Rauland A, Jia Y, Avval AH, Bozorgpour A, Karimijafarbigloo S, Cohen JP, Adeli E, Merhof D. Medical image segmentation review: the success of U-Net. IEEE Trans Pattern Anal Mach Intell. 2024;46(12):10076–95. https://doi.org/10.1109/TPAMI.2024.3435571. Epub 2024 Nov 6. PMID: 39167505.

Jha D, Smedsrud PH, Johansen D, de Lange T, Johansen HD, Halvorsen P, Riegler MA. A comprehensive study on colorectal polyp segmentation with ResUNet++, conditional random field and test-time augmentation. IEEE J Biomed Health Inform. 2021;25(6):2029–40. https://doi.org/10.1109/JBHI.2021.3049304.

He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Las Vegas, NV, USA; 2016. p. 770–8. https://doi.org/10.1109/CVPR.2016.90.

Jha D, et al. ResUNet++: an advanced architecture for medical image segmentation. In: 2019 IEEE International Symposium on Multimedia (ISM). San Diego, CA, USA; 2019. p. 225–2255. https://doi.org/10.1109/ISM46123.2019.00049.

Lin TY, Dollár P, Girshick R, He K, Hariharan B, Belongie S. Feature pyramid networks for object detection. In: 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Honolulu, HI, USA; 2017. p. 936–44. https://doi.org/10.1109/CVPR.2017.106.

Al-Obeidat F, Hafez W, Gador M, Ahmed N, Abdeljawad MM, Yadav A, Rashed A. Diagnostic performance of AI-based models versus physicians among patients with hepatocellular carcinoma: a systematic review and meta-analysis. Front Artif Intell. 2024;7:1398205. https://doi.org/10.3389/frai.2024.1398205. PMID: 39224209; PMCID: PMC11368160.

Ren S, He K, Girshick R, Sun J. Faster R-CNN: towards real-time object detection with region proposal networks. IEEE Trans Pattern Anal Mach Intell. 2017;39(6):1137–49. https://doi.org/10.1109/TPAMI.2016.2577031; Girshick R. Fast R-CNN. In: 2015 IEEE International Conference on Computer Vision (ICCV). Santiago, Chile; 2015. p. 1440–8. https://doi.org/10.1109/ICCV.2015.169.

Tan M, Le Q. EfficientNet: rethinking model scaling for convolutional neural networks. In: Proceedings of the 36th International Conference on Machine Learning. PMLR; 2019. p. 6105–14.

Ioannidou A, Chatzilari E, Nikolopoulos S, Kompatsiaris I. 3D ResNets for 3D object classification. In: Kompatsiaris I, Huet B, Mezaris V, Gurrin C, Cheng WH, Vrochidis S, editors. MultiMedia Modeling. MMM 2019. Lecture Notes in Computer Science, vol 11295. Cham: Springer; 2019. https://doi.org/10.1007/978-3-030-05710-7_41.

Rosman AS, Korsten MA. Application of summary receiver operating characteristics (sROC) analysis to diagnostic clinical testing. Adv Med Sci. 2007;52:76–82. PMID: 18217394.

Veroniki AA, Tsokani S, Agarwal R, Pagkalidou E, Rücker G, Mavridis D, Takwoingi Y. Diagnostic test accuracy network meta-analysis methods: a scoping review and empirical assessment. J Clin Epidemiol. 2022;146:86–96. https://doi.org/10.1016/j.jclinepi.2022.02.001.

Dimou N, Bagos P. Meta-analysis methods of diagnostic test accuracy studies. Methods Mol Biol. 2022;2345:173–85. https://doi.org/10.1007/978-1-0716-1566-9_11. PMID: 34550591.

Nyaga VN, Arbyn M. Comparison and validation of metadta for meta-analysis of diagnostic test accuracy studies. Res Synth Methods. 2023;14(3):544–62. https://doi.org/10.1002/jrsm.1634.

Morita D, Kawarazaki A, Soufi M, Otake Y, Sato Y, Numajiri T. Automatic detection of midfacial fractures in facial bone CT images using deep learning-based object detection models. J Stomatol Oral Maxillofac Surg. 2024;125(5S2): 101914. https://doi.org/10.1016/j.jormas.2024.101914.

Reitsma JB, Glas AS, Rutjes AW, Scholten RJ, Bossuyt PM, Zwinderman AH. Bivariate analysis of sensitivity and specificity produces informative summary measures in diagnostic reviews. J Clin Epidemiol. 2005;58(10):982–90. https://doi.org/10.1016/j.jclinepi.2005.02.022.

Kuo RYL, Harrison C, Curran TA, Jones B, Freethy A, Cussons D, Stewart M, Collins GS, Furniss D. Artificial intelligence in fracture detection: a systematic review and meta-analysis. Radiology. 2022;304(1):50–62. https://doi.org/10.1148/radiol.211785.

Wang J, Tan D, Liu J, Wu J, Huang F, Xiong H, Luo T, Chen S, Li Y. Merging multiphase CTA images and training them simultaneously with a deep learning algorithm could improve the efficacy of AI models for lateral circulation assessment in ischemic stroke. Diagnostics. 2022;12(7): 1562. https://doi.org/10.3390/diagnostics12071562.

Wang X, Xu Z, Tong Y, Xia L, Jie B, Ding P, Bai H, Zhang Y, He Y. Detection and classification of mandibular fracture on CT scan using deep convolutional neural network. Clin Oral Investig. 2022;26(6):4593–601. https://doi.org/10.1007/s00784-022-04427-8.

Liu P, Lu L, Chen Y, Huo T, Xue M, Wang H, Fang Y, Xie Y, Xie M, Ye Z. Artificial intelligence to detect the femoral intertrochanteric fracture: the arrival of the intelligent-medicine era. Front Bioeng Biotechnol. 2022;10:927926. https://doi.org/10.3389/fbioe.2022.927926. PMID: 36147533; PMCID: PMC9486191.

Kim T, Moon NH, Goh TS, Jung ID. Detection of incomplete atypical femoral fracture on anteroposterior radiographs via explainable artificial intelligence. Sci Rep. 2023;13(1):10415. https://doi.org/10.1038/s41598-023-37560-9. PMID: 37369833; PMCID: PMC1030009235218428.

Cai D, Zhou Y, He W, Yuan J, Liu C, Li R, Wang Y, Xia J. Automatic segmentation of knee CT images of tibial plateau fractures based on three-dimensional U-Net: assisting junior physicians with Schatzker classification. Eur J Radiol. 2024;178: 111605. https://doi.org/10.1016/j.ejrad.2024.111605.

Luo X, Yang Y, Yin S, Li H, Shao Y, Zheng D, Li X, Li J, Fan W, Li J, Ban X, Lian S, Zhang Y, Yang Q, Zhang W, Zhang C, Ma L, Luo Y, Zhou F, Wang S, Lin C, Li J, Luo M, He J, Xu G, Gao Y, Shen D, Sun Y, Mou Y, Zhang R, Xie C. Automated segmentation of brain metastases with deep learning: a multi-center, randomized crossover, multi-reader evaluation study. Neuro Oncol. 2024;26(11):2140–51. https://doi.org/10.1093/neuonc/noae113.

Bairagi VK, Gumaste PP, Rajput SH, Chethan KS. Automatic brain tumor detection using CNN transfer learning approach. Med Biol Eng Comput. 2023;61(7):1821–36. https://doi.org/10.1007/s11517-023-02820-3. Epub 2023 Mar 23. PMID: 36949356.

Dashti M, Ghaedsharaf S, Ghasemi S, Zare N, Constantin EF, Fahimipour A, Tajbakhsh N, Ghadimi N. Evaluation of deep learning and convolutional neural network algorithms for mandibular fracture detection using radiographic images: a systematic review and meta-analysis. Imaging Sci Dent. 2024;54(3):232–9. https://doi.org/10.5624/isd.20240038. Epub 2024 Aug 12. PMID: 39371302; PMCID: PMC11450407.

Sklavos A, Beteramia D, Delpachitra SN, Kumar R. The panoramic dental radiograph for emergency physicians. Emerg Med J. 2019;36(9):565–71. https://doi.org/10.1136/emermed-2018-208332. Epub 2019 Jul 26. PMID: 31350283.

Acknowledgements

Not applicable.

Declaration of originality

This manuscript is original, has not been previously published and has not been submitted for publication elsewhere while under consideration. Each named author has substantially contributed to conducting the underlying research and drafting this manuscript.

Funding

The authors state that this work has not received any funding.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Ju, J., Qu, Z., Qing, H. et al. Evaluation of Artificial Intelligence-based diagnosis for facial fractures, advantages compared with conventional imaging diagnosis: a systematic review and meta-analysis. BMC Musculoskelet Disord 26, 682 (2025). https://doi.org/10.1186/s12891-025-08842-2


DOI: https://doi.org/10.1186/s12891-025-08842-2