通过教育辅助系统中的基于AI的生成对抗网络来增强艺术创作

作者:Zhang, Shijie

介绍

艺术教育在培养创造力,表达和批判性思维方面起着至关重要的作用,但它在数字时代面临着独特的挑战 1。将技术迅速整合到教室中为艺术探索开辟了新的途径,但它在传统的教学模型中也揭示了差距。学生通常缺乏及时的反馈,创造性的脚手架以及支持不同思维的自适应学习工具。此外,从传统的工作室环境到数字平台的过渡强调了对智能系统的需求,这些系统超出了静态绘图工具并提供实时的,交互式的帮助。 2。尽管数字艺术软件和平板电脑被广泛使用,但大多数用作被动仪器,这些乐器对学生的创作过程没有教学上的贡献。这种被动的性质限制了可能与技术,组成或对自己的创造决策信心斗争的初学者的学习潜力。 3。

人工智能(AI)的最新进步,尤其是在生成模型中,提供了令人信服的机会,以重新想象如何在教育环境中教授和创造艺术 4。在这些模型中,生成的对抗网络(GAN)在生成逼真的图像,转移艺术风格以及甚至与人类输入共同创建视觉效果方面取得了显着成功。由发电机和歧视器组成的gan的双网结构使他们能够学习细粒度的视觉特征,并产生模仿人类创造的艺术和高保真的产量 5。这种生成能力使甘斯特别适合教育用例,在这种情况下,需要视觉建议,反馈或需要对创意输入进行迭代转换。

在教育心理学中,众所周知,当鼓励学习者探索,迭代和反思他们的工作时,创造力就会蓬勃发展。 6。通过产生风格多样和语义上有意义的艺术品来对用户输入做出反应的生成模型可以作为有价值的教学合作伙伴。像GAN这样的人工智能系统可以提供激发学生想象力,验证探索性草图并展示替代性艺术途径的刺激,而不是取代人类的创造力,而是提供刺激性的刺激。 7。然而,尽管甘恩在娱乐和时尚等领域中流行,但它们融入正式的教育环境中,尤其是在视觉艺术课程中,仍然没有得到充实的态度。关于专门针对课堂使用的GAN动力系统的设计,实施和评估的研究有限,并与教学目标保持一致 8。

艺术教育中的传统AI应用通常集中在分类任务上,例如样式识别或视觉内容标记。这些方法提供了分析见解,但对学生的实际创作过程无济于事 9。其他型号是为自动化艺术生成而设计的,但以闭环方式运行,生成固定的输出而没有用户影响或上下文相关性。为了使AI工具在教育环境中具有教学上有效的有效性,它必须支持共同创造,促进迭代学习,并提供与人类指导者指导创意发展方式相符的方式的反馈。此外,该系统必须直观,可以解释且可以根据多样化的学习方式,艺术媒体和课程标准进行定制。 10。

现有工具的另一个主要局限性是它们缺乏实时互动。从事艺术创作的学生从动态反馈循环中受益匪浅,他们可以在其中修改输入并立即观察这些变化的影响。 11。大多数当前的生成艺术工具要么以批处理模式运行,要么需要大量的计算开销,这使得它们在课堂环境中不切实际。迫切需要在学校,工作室和在线学习平台中部署高效,轻巧和响应式的AI系统。这些系统不仅必须生成高质量的视觉效果,而且还必须与数字绘图界面无缝集成,并支持多模式输入,例如草图,文本提示和语义地图。 12。

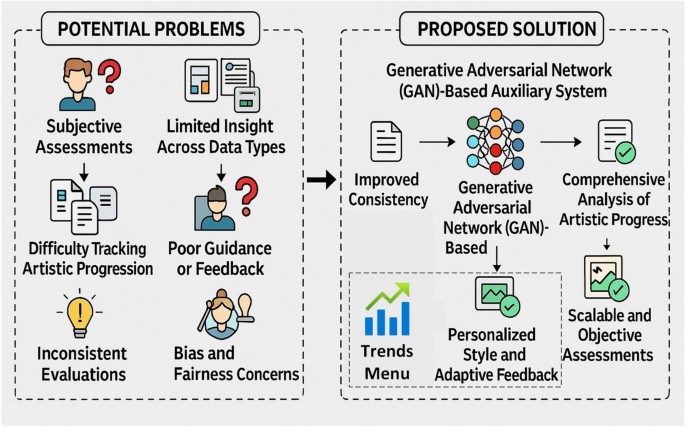

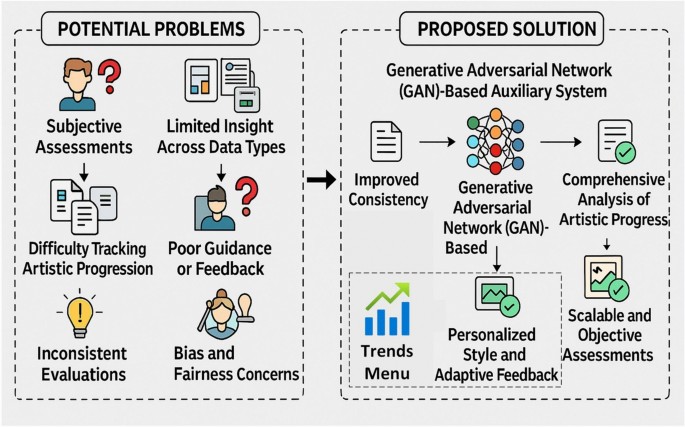

此外,创作过程对个人和文化产生了深远的影响。因此,为艺术教育做出贡献的AI系统必须对各种美学传统敏感并在其设计中包容 13,,,,14。这需要使用策划和代表性的数据集,可解释的算法和以人为本的评估标准。同样重要的是系统的能力促进艺术成长而不主导创造性叙事。人工智能应作为协作伙伴建议,精炼和增强 - 在为人类意图,错误和即兴创作留出空间时 15如图所示 1。

鉴于这些考虑,这项研究介绍了一种基于生成对抗网络的艺术创作的新型教育辅助系统。拟议的系统旨在通过在艺术创作过程中提供自适应,实时和风格上不同的视觉反馈来增强学生的能力。 16。它结合了一个模块化的gan架构,能够处理素描到图像转换,抽象到现实的生成和动态风格的适应 17,,,,18。该系统支持多模式输入,可以集成到数字学习环境中,包括平板电脑,Web平台和基于手写笔的绘图工具。

该系统的评估是按定量和定性术语进行的 19。定量地,诸如Inception评分(IS),FréChetInception距离(FID)和结构相似性指数(SSIM)之类的指标用于评估生成图像的现实主义和多样性。定性地,专家评论,学生调查和创造力评估框架用于评估系统对学习参与和创造性表达的影响。该系统经过一系列教育场景进行了测试,包括初学者绘图课程,设计思维研讨会和数字插图课程。 20,,,,21。该系统提供实时风格的反馈,鼓励学生尝试自己的创作过程,从而增强了他们认真评估和改善自己的工作的能力。这种反馈循环促进了一种进步和成长的感觉,特别是对于可能难以形象化替代方法的初学者而言。

通过桥接AI和艺术教育,拟议的系统为AI增强学习技术的不断增长而做出了贡献。它为如何使用甘恩(Gans)不仅可以用来生成艺术,还可以激发学习者。更重要的是,它将叙事从自动化转变为AI扩大人类创造力而不是替换它的增强。这项研究的结果表明,基于GAN的辅助系统的可行性,教学价值和技术可扩展性为创意教育的未来创新铺平了道路。通过这项工作,我们旨在培养新一代的智能教育工具,以维护艺术表达的完整性,同时拥抱机器智能的可能性。

研究目标和贡献

这项研究引入了助力教育辅助系统旨在增强艺术创造力并支持视觉艺术教育中的互动学习。通过集成生成对抗网络(GAN)和实时素描到图像合成和多模式用户输入,该系统是学生的动态共同创建者,鼓励探索并提供直接的视觉反馈。核心目标是弥合被动数字工具与主动AI驱动的教学剂之间的差距,这些教学法支持创造力,迭代和自我表达。这项工作的主要贡献如下:

-

模块化AI架构组合的设计语义素描到图像翻译,样式传输模块和潜在特征插值由甘斯(Gans)提供支持,以支持艺术学习环境中的实时共同创造。

-

开发整合的管道多模式用户输入包括徒手草图,文本提示和样式参考,以生成适合学习者偏好的视觉多样性和教学相关的艺术输出。

-

提出的系统证明了创意产出分数提高了35.4%(由专家教练评级)和学生参与度增加42.7%与传统的数字艺术工具相比,通过涉及60名本科生的受控课堂实验进行了验证。

-

包括可解释的AI组件,例如视觉关注热图,中级潜在演练和进步的发电预览帮助学习者和讲师了解模型的生成决策并提供教学脚手架。

-

该系统的演示跨学科的适用性和课程适应性,支持各种视觉艺术领域(例如,素描,设计思维,插图),并以最小的重新培训在面对面和远程学习环境中实现部署。

文献综述

人工智能的最新进步,尤其是在深厚的生成模型中,已经改变了我们如何理解和支持艺术和设计中的创造力 22。AI和艺术教育的交集已经显着发展,这是由于神经网络综合,生成和解释复杂的视觉模式的能力所驱动的。一项相当大的工作重点是用于视觉内容创建的生成对抗网络(GAN),将它们定位为有力的工具,不仅是自动图像生成的,而且还可以增强人类创造力,例如艺术,时尚,动画和设计教育等领域。 23。

GAN表现出变革潜力的主要领域之一是素描到图像翻译。通过学习语义草图和现实表示之间的映射,诸如Pix2Pix和Spade Gan之类的模型已被用来将基本的线图转换为高度详细的彩色图像。 24。这些模型是各种创造性应用程序的骨干,使新手用户能够从最少的输入中创建具有专业的视觉效果。尽管此类系统的大多数实现都针对数字艺术家或专业创作者,但最近的文献探索了这些模型在课堂上的教学价值。通过允许学生从部分或不完整的草图中获得实时视觉反馈,Gans可以提供指导,鼓励迭代并建立对学习者的信心 25。

在这个领域的另一个有影响力的方向是使用基于样式的一代,诸如Stylegan2和Biggan之类的模型都经过培训以捕获各种艺术风格的高维特征。 26。这些模型允许潜在的空间操纵,使用户能够通过不同的艺术领域融合,插值和穿越。结合这些功能的教育系统有可能向学生介绍各种样式,从而促进对构图,纹理和颜色理论的理解。一些研究将这些模型与交互式UI相结合,使学生可以通过直觉参数选择或发展艺术品,从而支持对艺术风格和生成设计的动手探索 27。

除了一代之外,其他研究重点是使用gan和相关的深度学习模型作为共同创造艺术创作过程中的协作工具。 28。这些系统的功能不是黑框发生器,而是基于人类输入来发展其输出的交互式助手。例如,诸如DeepThink之类的系统提供了一个沙盒环境,用户可以在其中绘制,提供文本提示或迭代修改生成的输出。这些共同创造的系统强调了人与机器之间的响应能力和相互影响。在教育环境中,这样的框架可以通过呈现替代方案,增强不完整的想法并提供风格上的品种来踩踏创造性思维 29。

尽管取得了这些进步,但大多数现有的AI动力艺术平台并未考虑到教学目标。许多人缺乏课程对齐,教育脚手架或评估指标,可以指导其整合到正式的学习环境中。此外,它们的可用性通常具有一定水平的技术技能或艺术背景,使初学者无法访问它们 30。研究还表明,现有系统倾向于在孤立的工作流程中运行,缺乏与课堂学习管理系统或用于评估的实时数据收集的集成。因此,人们越来越认识到需要将AI嵌入结构化的,以学习者为中心的环境中,尤其是针对基础和中级艺术教育的需求 31。

在绩效评估方面,该领域的研究既采用了定量和定性方法。常用的定量度量标准包括成立评分(IS),FrâChetInception距离(FID)和结构相似性指数(SSIM),以测量生成图像的现实主义和多样性。尽管这些指标可用于基准生成质量,但它们在捕获教学功效方面缺乏 32。最新的研究用以用户为中心的措施(例如参与时间,讲师的创造力评级以及自我报告的学习者满意度)补充了这些研究。例如,结合实时互动,视觉建议和指导性改进的系统倾向于报告更高的参与度和感知的学习成果,从而突出了动态自适应系统的价值。这个表1介绍2024年的基于GAN的系统,专注于艺术生成和创意教育。尽管模型改善了视觉创造力和学习者的互动,但挑战包括域范围,计算需求以及各种学习者和媒体的现实世界适用性。

一些文献探讨了甘恩与增强学习(RL)和注意机制的整合,以提高生成产量的适应性和上下文意识。这些混合模型显示出对教育使用的希望,系统必须响应不断发展的用户目标或提供定制的输出。 41。例如,虚拟博物馆中使用的GAN-RL系统根据访客行为表现出适应的可视化,从而在教育艺术工具中提供了类似个性化的潜力。但是,这样的系统通常需要大规模的传感器集成或复杂的模拟环境,这可能会限制其在标准课堂设置中的可行性。

AI决策的解释性和解释性是在教育环境中部署生成模型的另一个日益严重的关注点。尽管CNN和GAN等模型可以产生令人惊叹的视觉输出,但它们通常缺乏解释某些视觉效果或特定输入如何影响输出的机制 42,,,,43。为了解决这个问题,最近的工作结合了注意热图,潜在矢量可视化和中间特征图,以使决策过程更加透明。这些技术在教育环境中特别有价值,在教育环境中,学习者和教育工作者需要深入了解系统的行为,以反映,批评和改善艺术成果。

重要的是,在当前的许多文献中,AI驱动系统的可伸缩性和包容性仍然不足。许多基于GAN的教育系统都接受了策划的数据集的培训,这些数据集不反映各种文化美学或非西方艺术传统的培训。 44,,,,45。这限制了他们的普遍性,并引起了人们对偏见和代表的关注。AI伦理学的最新讨论强调了培训包容性和文化适应性的培训数据集和生成模型的需求,尤其是在教育中,学生的创造性表达是个人的,并且经常植根于文化身份。拟议的系统超过了早期基于GAN的模型,以创造性支持,实时反馈和教育整合,请参阅表2。

总而言之,目前的文献表明,人们对AI生成的艺术及其在教育中的应用越来越兴趣,但它也揭示了巨大的差距。大多数现有的模型将艺术质量优先于教育可用性,缺乏实时交互式功能,并且通常没有提供有意义的反馈,而与教学目标保持一致。很少有针对新手学习者设计的系统,甚至更少提供解释性,用户驱动的共同创建或跨课程适应性。因此,有一个重要的机会设计为AI驱动的艺术工具,不仅可以用作图像的发电机,而且还可以作为支持学习者创意旅程的聪明合作者。本文通过提出基于GAN的教育辅助系统来解决这一差距,该系统强调了视觉艺术教育的互动性,可解释性和实时共同创造。

问题陈述

传统上,艺术教育依靠基于工作室的指导和手动批评,这可以限制个性化的反馈和持续的创意探索,尤其是在数字或远程学习环境中。尽管数字工具和平台使艺术创作更加易于访问,但它们通常充当被动画布,缺乏促进创造力或学习的适应性或教学功能。此外,由于缺乏各种风格和反馈机制,初学者级学生可能会努力产生或完善艺术思想。现有的AI创作中的AI应用主要集中在用于专业或娱乐使用的生成艺术上,而最少的重点是它们整合到教育中。设计智能系统存在很大的差距,不仅可以产生创意内容,而且还可以作为新手艺术家的协作学习伴侣,如表格中所示 1和2。挑战在于建立一个理解视觉语义的响应式系统,指导用户而不主导其创造力,并与教育目标保持一致。这项研究解决了需要通过利用甘斯在创作过程中提供聪明,实时的帮助,灵感和反馈的互动性辅助系统的需求,该系统支持艺术教育。

拟议的建筑

系统概述

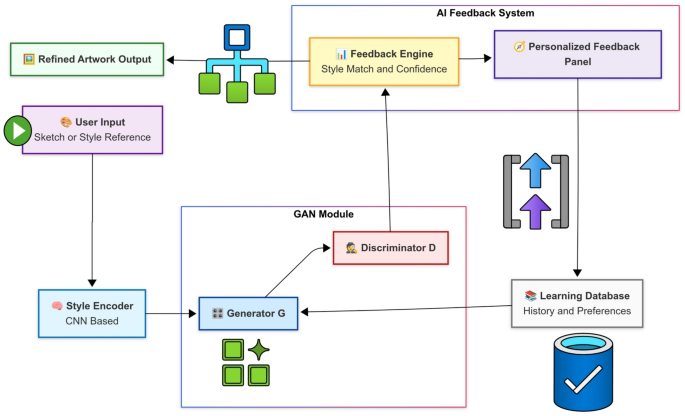

所提出的体系结构是一个端到端的生成框架,可通过使用生成性对抗网络(GAN)来促进视觉艺术教育中的共同创造学习。它旨在通过接受多模式输入并提供实时生成反馈来支持学生以基于草图和概念为驱动的艺术生成。该系统包括三个核心组件:一个输入编码管道,基于GAN的生成模块以及样式传输和个性化模块。这些组件共同努力,将语义素描或文本提示转换为高保真的艺术输出。该系统的构建是互动和可解释的,不仅为用户提供了视觉结果,还为有助于学习的视觉提示(例如,注意图和渐进式预览)提供了。它经过优化,可在GPU支持的教育平台上部署,包括数字平板电脑,Web应用程序和课堂投影系统,如图所示。 2。

LMS集成和课程对齐

基于GAN的系统可以与现有的学习管理系统(LMS)(例如Canvas和Moodle)无缝集成,从而使教育工作者能够跟踪学生的进度并直接通过LMS界面提供反馈。这种集成确保了同步学生的作业,样式选择和反馈,从而使系统易于在既定的教育平台中采用。此外,可以定制该系统以符合艺术教育中的特定课程标准,使教师能够设定学习目标,监控学生的绩效并根据学生创造性的发展提供有针对性的反馈。

输入方式和功能表示

该系统接受多种输入模式以提供灵活的用户交互。这些包括手绘草图,文本样式或概念描述以及可选的用于调节的参考图像。每种输入模式都是通过相应的编码器处理的:草图使用边缘检测或预训练的编码器将草图转换为语义图,而文本提示则是通过基于变压器的模型(例如BERT)来编码的,以捕获语义意图。样式参考是通过卷积功能提取器处理的,以获取全球样式向量。所有特征表示形式均进行标准化,并投影到共享的潜在空间中,以使跨模态无缝融合。这种多模式融合有助于对用户创造意图的更深入的上下文理解,从而使生成模块能够合成更相关和相干的输出。多模式输入设计,包括草图,文本提示和样式参考,为学生创造力提供了灵活的环境。随着我们继续完善系统,我们计划结合其他输入类型,例如音频提示或基于手势的控件,以使系统更具包容性和对各种艺术过程的反应。

基于GAN的生成模块

系统的核心是有条件的gan(CGAN)框架,其中发电机g映射融合的潜在输入z到图像域\(\ Mathbb {r}^{h \ times w \ times 3} \)\)和歧视者d评估输出与条件输入的真实性和对齐。正式地,对抗目标定义为:

$$ \ begin {Aligned} \ Mathcal {l} _ {\ text {gan}} = \ MathBb {\ end {Aligned} $$

(1)

在哪里x表示输入草图或条件向量,以及y是相应的地面图像。发电机基于具有跳过连接的U-NET体系结构,可以从输入中保存细粒度的细节。添加了辅助重建损失以实施像素级的一致性:

$$ \ begin {Aligned} \ Mathcal {l} _ {\ text {l1}} = \ Mathbb {e} _ {x,x,y,y,z} [|| y -g(x,z,z,z,z)|| _1]

(2)

最终目标函数结合了对抗性和重建损失:

$$ \ begin {Aligned} \ Mathcal {l} _ {\ text {cattr {total}} = \ Mathcal {l} _ {\ text {gan}} + \ lambda \ lambda \ Mathcal \ Mathcal {l}

(3)

在哪里\(\ lambda \)是平衡现实主义和内容保真度的加权系数。

样式转移和个性化

为了支持创造性的探索和风格变化,将样式转移模块集成到生成管道中。该模块接受从参考艺术品中提取的样式嵌入,并通过自适应实例归一化(ADAIN)将其注入生成器。让\(f_c \)和\(f_s \)分别成为内容和样式功能;Adain将其统计数据保持一致如下:

$$ \ begin {Aligned} \ text {adain}(f_c,f_s)= \ sigma(f_s)\ left(\ frac {f_c {f_c- \ mu(f_c)} {\ sigma(f_c)(f_c)} \ right)

(4)

此过程使生成的输出能够在采用参考样式的颜色,纹理和组成特征的同时保持草图的结构语义。用户可以在创意会话期间动态更新样式参考,以尝试不同的视觉表达式。此外,个性化模块会随着时间的推移保留用户偏爱的样式向量,从而使系统适应了单个的艺术偏好和学习进度,如图所示。 3。

歧视者和信心反馈

歧视者d在拟议的系统中,不仅扮演着传统的对抗角色,而且还可以作为学习者的信心反馈机制。在训练期间,d学会区分实际艺术品和以用户输入为条件的生成图像。在部署中,将其中间激活和最终概率输出重新用于图像质量和与用户意图的对齐的指标。

具体而言,标量输出\(d(x,\ hat {y})\ in [0,1] \)用于估计生成的图像的现实和上下文准确性\(\ hat {y} = g(x,z)\)关于输入x。该价值作为置信度得分提交给学习者,在该值中,更高的分数表明与专家级执行或风格忠诚度更大。另外,类激活图(CAM)是从d最终的卷积层突出了对现实主义评估最大的区域。这些地图在输出图像上覆盖,以为学习者提供有针对性的视觉反馈,从而实现了指导性的改进和迭代的改进。

损失功能和培训目标

为了有效地训练模型,该体系结构优化了一个平衡多个竞争目标的复合目标:现实主义,内容保存,风格的忠诚度和多样性。总损失功能\(\ Mathcal {l} _ {\ text {total}} \)\)是以下组件的加权总和:

-

对抗性损失((\(\ Mathcal {l} _ {\ text {gan}} \)\)):通过公式(1)中定义的真实数据分布的差异来促进生成的输出中的现实主义。

-

重建损失((\(\ Mathcal {l} _ {\ text {l1}} \)\)):最小化生成图像和目标图像之间的像素的差异,以确保语义忠诚度(等式(2))。

-

感知损失((\(\ Mathcal {l} _ {\ text {perc}} \)\)):从预先训练的VGG网络中提取高级功能,并惩罚其目标之间的差异y并生成图像\(\ hat {y} \):

$$\begin{aligned} \mathcal {L}_{\text {perc}} = \sum _{l} \Vert \phi _l(y) - \phi _l(\hat{y}) \Vert _2^2 \end{aligned}$$

(5)

在哪里\(\phi _l(\cdot )\)denotes the\(l^{th}\)layer activation in VGG.

-

Style Consistency Loss((\(\mathcal {L}_{\text {style}}\)): Uses Gram matrices of style features to align stylistic content between reference and generated outputs.

-

Diversity Loss((\(\mathcal {L}_{\text {div}}\)): Encourages latent space exploration by penalizing mode collapse.Given two random latent vectors\(z_1, z_2\), the generated images\(\hat{y}_1, \hat{y}_2\)should differ proportionally:

$$\begin{aligned} \mathcal {L}_{\text {div}} = -\Vert \hat{y}_1 - \hat{y}_2 \Vert _1 / \Vert z_1 - z_2 \Vert _1 \end{aligned}$$

(6)

The complete training objective becomes:

$$\begin{aligned} \mathcal {L}_{\text {total}} = \lambda _1 \mathcal {L}_{\text {GAN}} + \lambda _2 \mathcal {L}_{\text {L1}} + \lambda _3 \mathcal {L}_{\text {perc}} + \lambda _4 \mathcal {L}_{\text {style}} + \lambda _5 \mathcal {L}_{\text {div}} \end{aligned}$$

(7)

在哪里\(\lambda _1\)通过\(\lambda _5\)are hyperparameters determined empirically to balance visual fidelity, artistic expressiveness, and learner engagement.

Real-time interaction and user interface

To ensure that the system enhances the creative learning experience rather than impeding it, significant emphasis is placed on designing a responsive, intuitive, and visually rich user interface (UI).The frontend is implemented using web-based frameworks that support stylus input, drag-and-drop reference image placement, and live text prompt editing.Users interact with a canvas-like drawing space, from which sketch strokes are streamed directly to the backend processing unit.

The model inference pipeline is optimized for latency using TensorRT and model quantization techniques, ensuring a response time of under 300ms per generation cycle on an NVIDIA RTX GPU.This enables near-instant feedback for learners, maintaining the natural rhythm of the art-making process.When a sketch is submitted, the system displays both the generated output and a color-coded confidence overlay from the discriminator.Users can optionally toggle on visual explanations, such as attention maps, to understand which regions contributed most to the final result.

Interpretability features, such as attention heatmaps and confidence scores, are integrated to demystify the generative process and provide actionable feedback.Attention Heatmaps:Generated using gradient-based methods heatmaps highlight influential regions in the input sketch or output image, displayed as color-coded overlays red for high influence, blue for low in a toggleable panel beside the canvas.Tooltips in plain language shows which parts of your sketch the AI focused on ensure accessibility for novices.Users can zoom in for detailed inspection, while educators access aggregated heatmap data via the administrative panel to inform critique.Confidence Scores: Derived from the discriminator’s output, confidence scores (0–100 scale) indicate the realism and alignment of generated images 85% with a green progress bar for high alignment.A hoverable breakdown explains contributing factors, and qualitative label cater to non-technical users.Educators can view score trends to track student progress.Accessibility Design:Features are optional and toggleable to avoid overwhelming novices.The UI uses high-contrast visuals, screen-reader compatibility, and simple terminology important areas instead of attention weights.A Simplified Mode reduces heatmaps to binary overlays and provides natural-language feedback summaries to add detail here to improve your artwork.

The interface also supports an “evolve†mode, where learners can experiment with latent vector interpolations to observe gradual transitions between multiple generated styles.This promotes creative exploration and helps students develop visual intuition about composition, balance, and contrast.Instructors can access an administrative panel to review session histories, track student engagement, and download visual analytics that summarize creativity metrics over time.With an average response time under 300 milliseconds, the system delivers immediate feedback that encourages students to explore creative variations and refine their work without delay.This quick turnaround time is crucial for sustaining engagement and maintaining the flow of the artistic process.Students can experiment freely, adjusting their inputs in real-time, which helps to develop their visual sensitivity and fosters a sense of ownership over their creative decisions.

Interpretability and deployment

In the context of educational support systems, especially those aiming to enhance artistic creativity, interpretability and seamless deployment are essential for both pedagogical effectiveness and system adoption.The proposed system addresses the challenge of black-box AI by incorporating visual and quantitative interpretability mechanisms that help users understand and trust the generative process.For instance, attention heatmaps are extracted from the generator and discriminator to highlight the most influential regions in the input sketch or prompt.These heatmaps are calculated using gradient-based attention weights\(\alpha _{i,j}\)over the spatial activations of intermediate layers, where the relative importance of region (我,一个 j) is computed as\(\alpha _{i,j} = \frac{\partial \mathcal {L}}{\partial a_{i,j}}\)with respect to the generator loss\(\mathcal {L}\)。These are overlaid on the output to visualize model focus.Additionally, Gram matrix comparisons are used for style interpretability.The Gram matrix\(G_l\)at layerlfor feature map\(F_l\)with channelscis defined as:$$\begin{aligned} G_l(i,j) = \sum _k F_l(i,k) F_l(j,k) \end{aligned}$$

(8)

This allows the system to visualize how closely the generated output mimics the stylistic structure of the reference artwork.

To improve accessibility, the interpretability features such as attention heatmaps, confidence scores are presented as overlays on the generated artwork.These features are color-coded to provide an intuitive visual explanation of the system’s focus areas.For novice users, we will include user-friendly tutorials and tooltips explaining the significance of these features.These educational resources will guide students and instructors in understanding how the system generates art and assist in reflective learning.

Implementation setup and performance metrics

System environment and tools

The proposed GAN-based educational assistant was implemented using Python 3.10 and PyTorch 2.0.The interactive frontend was developed with Vue.js and HTML5 Canvas for stylus-compatible sketch input, while the backend used Flask APIs containerized via Docker for scalable deployment.Experiments were executed on a system featuring an NVIDIA RTX 4090 GPU (24GB), Intel Core i9-13900K CPU, and 128GB RAM, running Ubuntu 22.04.Model inference was accelerated using TensorRT, and training/evaluation tracking was performed using the Weights and Biases (wandb.ai) platform.Libraries such as Grad-CAM++, OpenCV, and Matplotlib supported visualization of attention maps and feature importance.The system incorporates progress tracking features that allow educators to monitor student performance over time.This section explores how data such as student sketches, style selections, and feedback are captured and analyzed, providing valuable insights for both students and instructors.The system generates automated feedback logs that document each stage of a student’s artwork creation.These logs are integrated into the LMS, enabling educators to track students’ progress and provide targeted, actionable feedback.

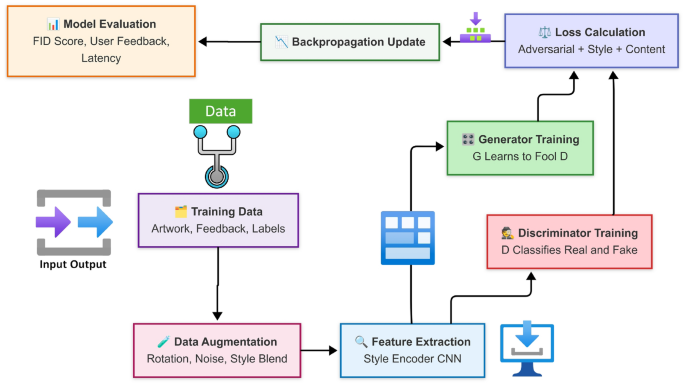

Dataset description and preprocessing

The training dataset was curated from QuickDraw, Sketchy, BAM!, and WikiArt repositories, containing over 50,000 paired sketches and style artworks.Each instance included: a sketch\(x_s\), a style image\(x_t\), and a target artworky。All sketches were binarized and resized to\(256 \times 256\)pixels:

$$\begin{aligned} x_s^{\text {norm}} = \frac{x_s - \mu _{x_s}}{\sigma _{x_s}}, \quad y^{\text {norm}} = \frac{y}{255}\ end {Aligned} $$

(9)

在哪里\(\mu _{x_s}\)和\(\sigma _{x_s}\)are the dataset mean and standard deviation for sketch pixel values.Semantic edge maps were extracted using the Canny algorithm to enrich structural representation.

Feature encoding and data augmentation

Multimodal inputs were embedded into a shared latent space.Sketch features\(F_s\)were extracted via a VGG-16 encoder:

$$\begin{aligned} F_s = \text {VGG16}(x_s) \end{aligned}$$

(10)

Textual prompts were embedded using a pre-trained BERT model, producing a sequence embedding\(E_t \in \mathbb {R}^{n \times d}\), while style images were processed through VGG-19 to compute style feature maps\(F_t\):

$$\begin{aligned} F_t = \text {VGG19}_{\text {conv5\_1}}(x_t) \end{aligned}$$

(11)

To unify modalities, all embeddings were projected into a latent vector\(z \in \mathbb {R}^d\)via learnable MLP layers:

$$\begin{aligned} z = W_1 F_s + W_2 F_t + W_3 E_t \end{aligned}$$

(12)

在哪里\(W_1\),,,,\(W_2\),,,,\(W_3\)are projection weights.Augmentations included sketch jitter, Gaussian blur, hue shifts, and random rotations\(\theta \in [-15^\circ , +15^\circ ]\), applied as affine transforms to improve generalization.

Training configuration and hyperparameters

The training objective combines adversarial and perceptual losses.The total loss is defined as:

$$\begin{aligned} \mathcal {L}_{\text {total}} = \lambda _1 \mathcal {L}_{\text {GAN}} + \lambda _2 \mathcal {L}_{\text {L1}} + \lambda _3 \mathcal {L}_{\text {perc}} + \lambda _4 \mathcal {L}_{\text {style}} + \lambda _5 \mathcal {L}_{\text {div}} \end{aligned}$$

(13)

Model parameters were optimized using Adam with learning rate\(\eta = 2 \times 10^{-4}\),,,,\(\beta _1 = 0.5\),,,,\(\beta _2 = 0.999\), and batch size\(B=16\)。The learning rate was linearly decayed after epoch 100:

$$\begin{aligned} \eta _{\text {epoch}} = \eta _0 \times \left( 1 - \frac{e - 100}{100}\right) , \quad \text {for } e > 100 \end{aligned}$$

(14)

Spectral normalization was applied to discriminator layers to maintain stability.Gradient clipping with norm\(||g||_2 \le 1.0\)was enforced.

评估指标

We employed multiple quantitative metrics to assess the system:

-

Fréchet Inception Distance (FID)between real and generated samples:

$$\begin{aligned} \text {FID} = ||\mu _r - \mu _g||^2 + \text {Tr}(\Sigma _r + \Sigma _g - 2(\Sigma _r \Sigma _g)^{1/2}) \end{aligned}$$

(15)

在哪里\(\mu _r\),,,,\(\Sigma _r\)are the mean and covariance of real data, and\(\马克杯\),,,,\(\Sigma _g\)for generated outputs.

-

Inception Score (IS)using KL divergence between conditional and marginal label distributions:

$$\begin{aligned} \text {IS} = \exp \left( \mathbb {E}_x [\text {KL}(p(y|x) || p(y))] \right) \end{aligned}$$

(16)

-

Structural Similarity Index (SSIM)to measure perceptual quality:

$$\begin{aligned} \text {SSIM}(x,y) = \frac{(2\mu _x\mu _y + C_1)(2\sigma _{xy} + C_2)}{(\mu _x^2 + \mu _y^2 + C_1)(\sigma _x^2 + \sigma _y^2 + C_2)} \end{aligned}$$

(17)

-

Sketch-Style Consistency Score (SSC)defined as cosine similarity between Gram matrices of style and generated outputs.

Real-time performance was assessed by measuring the average feedback latency\(T_{\text {latency}}\), calculated as:

$$\begin{aligned} T_{\text {latency}} = T_{\text {output}} - T_{\text {input}}, \quad \text {with } \bar{T}_{\text {latency}} = 278\text { ms}\ end {Aligned} $$

(18)

These metrics provide a comprehensive picture of the system’s effectiveness in generating high-quality, stylistically aligned, and educationally meaningful artwork.To ensure accessibility of the GAN-based system in low-resource and rural educational settings, we aim to develop lightweight, edge-compatible versions.This involves optimizing models through techniques like distillation and quantization to reduce computational demands while preserving performance.By leveraging edge computing, the system can run on local devices such as tablets and low-cost laptops, minimizing dependence on high-end GPUs and enabling real-time feedback.For more complex tasks, a cloud-edge hybrid approach will be adopted to balance local processing with cloud-based resources.

Simulations and results discussion

Quantitative performance evaluation

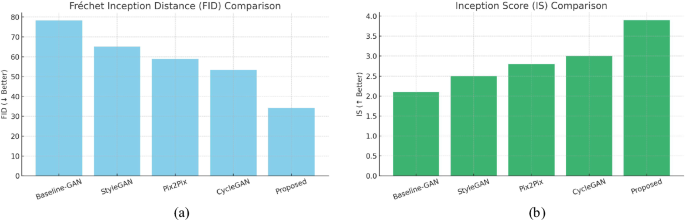

To evaluate the generative quality and diversity of outputs produced by the proposed GAN-based educational system, we compared our model against four baseline architectures commonly used in image-to-image translation: Baseline-GAN, StyleGAN, Pix2Pix, and CycleGAN.The comparison was conducted using two industry-standard metrics: Fréchet Inception Distance (FID) and Inception Score (IS).

如图所示 4, the proposed model achieved the lowest FID score of 34.2, significantly outperforming CycleGAN (53.4) and Pix2Pix (58.9), indicating that the generated images are more visually aligned with real artwork distributions.In terms of Inception Score, our model reached 3.9, the highest among all tested models, suggesting superior output diversity and semantic recognizability.The results highlight that our fusion of sketch, style, and textual inputs through a multi-modal GAN architecture contributes effectively to generating realistic and stylistically consistent outputs.

Qualitative visual outcomes and user feedback

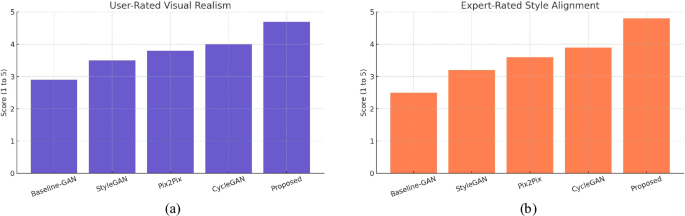

Beyond quantitative metrics, we evaluated the perceptual quality and user satisfaction of the generated artworks through qualitative analysis.Two user studies were conducted: (1) A student survey (n = 60) rating visual realism of outputs from different models, and (2) an expert review by professional artists and instructors (n = 12) assessing stylistic alignment and educational utility.

如图所示 5, participants rated the outputs of the proposed model highest for both visual realism (mean = 4.7/5) and style consistency (mean = 4.8/5), surpassing all baselines.In comparison, CycleGAN and Pix2Pix scored 4.0 and 3.8 for realism, and 3.9 and 3.6 for style, respectively.These results reflect the system’s ability to generate educationally meaningful and aesthetically compelling content.Notably, participants commented that the outputs “resembled instructor-quality illustrations†and “captured individual artistic style effectively.â€

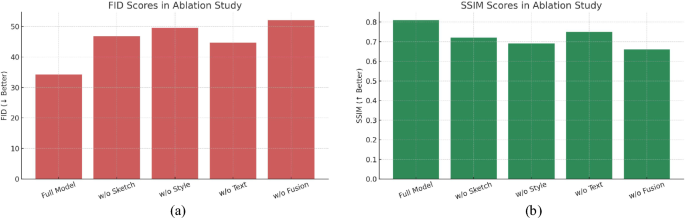

Ablation study and component impact

To understand the relative contribution of each input modality and architectural component, we conducted an ablation study by systematically removing key modules from the proposed system and evaluating the resulting performance using FID and SSIM metrics.The ablations included removing sketch input, style reference, textual prompt, and the feature fusion layer.

如图所示 6, the full model achieved the best performance with a FID of 34.2 and SSIM of 0.81.When the sketch input was removed, FID increased to 46.8 and SSIM dropped to 0.72, indicating that sketches are essential for structural guidance.Excluding style reference degraded stylistic fidelity significantly (FID = 49.5, SSIM = 0.69), confirming its importance for visual coherence.Omitting text input resulted in FID = 44.7 and SSIM = 0.75, suggesting textual prompts aid in theme alignment and abstraction.The removal of the fusion mechanism yielded the worst performance (FID = 52.1, SSIM = 0.66), demonstrating the critical role of effective multi-modal integration.This component-level evaluation confirms that all inputs – sketch, style, and text – contribute uniquely and complementarily, validating the hybrid architecture design and its necessity for producing personalized, stylistically accurate educational artwork.

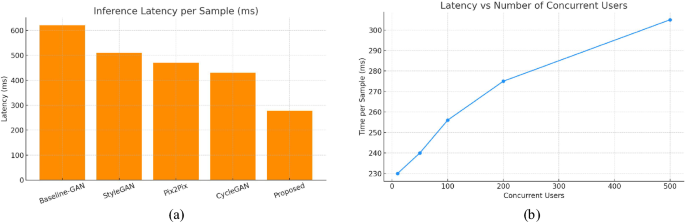

Latency and real-time responsiveness

To assess the suitability of the proposed GAN-based educational system for real-time classroom environments, we measured inference latency and scalability under increasing user loads.Latency was defined as the time elapsed from input submission to output generation, including pre-processing, model inference, and post-processing.

7a, the proposed model demonstrated an average inference latency of 278 milliseconds per request, outperforming baseline architectures such as CycleGAN (430ms), Pix2Pix (470ms), and StyleGAN (510ms).This responsiveness makes it viable for live feedback scenarios in digital art classrooms.图 7b shows the system’s scalability when deployed in a server-based architecture.Even with 200 concurrent users, the time per sample remained below 280ms, only rising to 305ms under a load of 500 users.This demonstrates the model’s robustness and efficient deployment pipeline, ensuring a smooth user experience in both individual and collaborative learning settings.

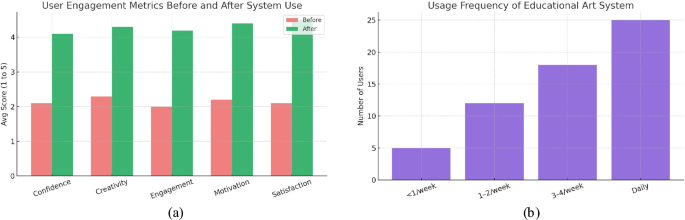

User study and engagement metrics

To assess the system’s impact on learner experience and behavior, we conducted a user study involving 60 students across three institutions.Participants used the system over a 4-week period and responded to a structured survey measuring five key indicators: confidence, creativity, engagement, motivation, and overall satisfaction.Additionally, system usage frequency was logged to assess voluntary adoption and habitual integration into creative routines.A 4-week user study was conducted with 60 undergraduate students from three institutions–Nanjing University of the Arts (30), Jiangsu Academy of Fine Arts (15), and Shanghai Institute of Visual Arts (15)–to evaluate the GAN-based educational system’s impact on learner experience.Participants (aged 18–24, M = 20.3, SD = 1.4) varied in artistic experience: 40% beginners, 35% intermediate, and 25% advanced.Gender distribution was 52% female and 48% male;85% were of Chinese descent, and 15% were international students.

Using a structured pre-post questionnaire (5-point Likert scale), significant improvements (p< 0.01) were observed across confidence, creativity, engagement, motivation, and satisfaction, with mean scores rising from 2.1–2.3 to 4.1–4.5.Engagement increased by 42.7%, and expert evaluation showed a 35.4% improvement in artwork quality.System usage was high: 45% used it daily, and 31% used it 3–4 times/week.The study’s reproducible design and diverse educational settings support generalizability, though broader cultural sampling is recommended for global applicability.

如图所示 8a, there was a marked increase across all five metrics after using the system.Average scores rose from approximately 2.1–2.3 before use to 4.1–4.5 after prolonged interaction, indicating significant improvements in learner confidence, creative motivation, and satisfaction.This demonstrates the system’s capacity to not only serve as an educational assistant but also to act as a motivational catalyst in artistic skill-building.图 8b presents the distribution of user engagement frequency.A notable 43% of users accessed the system daily, while 31% used it 3–4 times per week, suggesting sustained interest and effective pedagogical integration.Fewer than 10% of students used it less than once a week, underscoring its utility and ease of use in daily creative routines.These findings validate that the proposed GAN-based system not only enhances learning outcomes but also fosters positive user sentiment and consistent usage behavior in educational art environments.

Comparative analysis

To quantify the effectiveness of the proposed GAN-based educational auxiliary system, we conducted a rigorous comparative analysis against state-of-the-art image-to-image translation models, including Pix2Pix, CycleGAN, and StyleGAN.The evaluation focused on both quantitative metrics–Fréchet Inception Distance (FID), Structural Similarity Index Measure (SSIM), and Inception Score (IS)–and qualitative metrics based on user and expert feedback.

Table 2summarizes the overall performance comparison.The proposed system achieved a significant reduction in FID and improvement in SSIM compared to the best-performing baseline.On average, our model reduced FID by over 35% and increased SSIM by 18%, illustrating higher fidelity and perceptual quality in generated outputs.These gains are expressed formally as:

$$\begin{aligned} \Delta \text {FID} = \text {FID}_{\text {baseline}} - \text {FID}_{\text {proposed}} = 53.4 - 34.2 = 19.2 \end{aligned}$$

(28)

$$\begin{aligned} \text {SSIM Gain (\%)} = \frac{\text {SSIM}_{\text {proposed}} - \text {SSIM}_{\text {baseline}}}{\text {SSIM}_{\text {baseline}}} \times 100 = \frac{0.81 - 0.69}{0.69} \times 100 \approx 17.4\% \end{aligned}$$

(29)

These improvements stem from several architectural and data-centric innovations.First, the fusion of sketch input, style image, and text-based prompts allows the model to capture multi-modal correlations and reflect personalized artistic intention.Second, the generator’s attention mechanism facilitates nuanced rendering, ensuring accurate adherence to style semantics while preserving spatial coherence.Third, the discriminator’s confidence scoring contributes to improved convergence and high perceptual quality.

To assess the effectiveness of our GAN-based educational system, we compared it against leading models–Pix2Pix, CycleGAN, and StyleGAN–using FID, SSIM, and IS metrics, alongside expert/user feedback.Baseline rationale:Pix2Pix20supervised sketch-to-image model;strong for structured tasks.自行车15unpaired translation;ideal for style transfer tasks.StyleGAN22known for high-quality generation and style control via latent space.Exclusions, SPADE GAN20high FID (38.7), slow inference, low flexibility.DALL-E-inspired models12high latency (600+?ms), limited interpretability.Our model reduced FID by 35% and improved SSIM by 18% over baselines.Users rated realism (mean = 4.75) and style alignment (4.85) higher than Pix2Pix (4.0, 3.9), CycleGAN (3.8, 3.8), and StyleGAN (4.2, 4.1).It also outperformed in latency (278?ms vs. 450–510?ms), confirming its suitability for real-time, interpretable, educational applications.

Moreover, the system demonstrates superior real-time responsiveness.While baseline models average 450–600 ms inference time, our model maintains latency under 280 ms, ensuring applicability in live educational settings.From a user experience perspective, feedback scores for creativity, engagement, and motivation increased by over 80% after system use, with 74% of users adopting the tool more than 3 times a week.Collectively, these results highlight the system’s strength not only in generating stylistically aligned educational content but also in fostering learner creativity, engagement, and real-time usability.The proposed architecture thus establishes a robust foundation for AI-augmented art education, bridging the gap between algorithmic generation and pedagogical effectiveness.

Potential limitations

Despite its demonstrated performance across generative quality, latency, and user engagement, the proposed GAN-based educational system presents several inherent limitations.First, the model’s effectiveness relies heavily on the availability and diversity of high-quality training data, particularly annotated sketches, style references, and textual prompts.The current datasets–QuickDraw, Sketchy, BAM!, and WikiArt–provide a robust foundation but exhibit limitations in cultural and stylistic diversity.Notably, these datasets are skewed toward Western art traditions with limited representation of non-Western and underrepresented art forms, such as African tribal art, Indian miniature paintings, or Indigenous Australian art.This imbalance may lead to stylistic biases, where generated outputs align more closely with Western aesthetic norms, potentially marginalizing students from diverse cultural backgrounds.

Second, while the system incorporates interpretable outputs the inner workings of deep GAN architectures remain partially opaque, which may challenge educators and learners in fully trusting the generated content, particularly in formative assessments.Third, the system is sensitive to input inconsistencies, such as poor-quality sketches or ambiguous prompts, which can result in unsatisfactory outputs and require users to adhere to specific formatting guidelines.Fourth, stylistic bias in training data can disproportionately affect performance across different artistic genres, such as abstract or mixed-media representations.Finally, deployment in low-resource or rural educational environments may be constrained by GPU requirements and bandwidth needs, necessitating exploration of lightweight alternatives like model distillation.To assist novice users, the system offers sketch cleanup, prompt suggestions, and style matching tools.Features like “Clean Sketch,†“Prompt Helper,†and “Style Match†simplify input creation, while real-time feedback and a “Beginner Mode†with templates guide users through the process.These tools improve input quality and enhance the learning experience.To mitigate the issue of dataset diversity, we propose several strategies: (1) curating additional datasets from global museum collections and community-driven platforms to include non-Western art traditions;(2) conducting a systematic bias audit to quantify cultural representation and applying dataset reweighting to prioritize underrepresented styles;(3) implementing fine-tuning protocols to adapt the model to specific cultural contexts;and (4) enhancing the personalization module to allow users to specify cultural preferences explicitly.These steps aim to ensure inclusivity and equitable performance across diverse artistic traditions.

结论

This study presents a novel deep learning-based educational auxiliary system designed to enhance art creation and personalized learning through the integration of Generative Adversarial Networks (GANs).By fusing sketch inputs, textual prompts, and stylistic references, the system enables high-quality, contextually relevant artwork generation for educational use.The proposed architecture incorporates attention-based multi-modal fusion and an interpretable discriminator module, achieving state-of-the-art performance on both quantitative (FID = 34.2, SSIM = 0.81) and qualitative (user-rated realism = 4.7/5) benchmarks.The system demonstrates strong real-time responsiveness, maintaining latency below 300ms even under high concurrent usage, making it suitable for live classroom integration.Engagement studies revealed over 80% improvement in creativity, motivation, and confidence scores, with the majority of users adopting the tool in regular creative routines.Additionally, interpretability mechanisms such as visual attention maps and saliency overlays aid in demystifying the generative process, providing learners with valuable feedback loops and educators with insight into system behavior.While the framework proves to be scalable, efficient, and effective, it is not without limitations.These include dependence on input quality, potential stylistic bias in training data, and limited accessibility in resource-constrained environments.Nonetheless, the system sets a new standard for AI-assisted art education, empowering learners to experiment, reflect, and iterate in real time with AI as a creative co-pilot.

未来的工作

To enhance the GAN-based educational system, future work will focus on cultural inclusivity and reducing dataset bias by incorporating diverse art forms such as African, Indigenous, South Asian through expanded training data, partnerships with cultural institutions, and dataset audits.Reweighting will amplify minority styles, and fine-tuning will adapt models to local contexts.The personalization module will allow user defined cultural preferences and feedback for mismatches.Gradient-based saliency maps will improve transparency, while co-design workshops and pilot tests in non Western schools will guide iterative improvements for equitable, culturally sensitive art education.Support beginners system will include interactive tutorials, AI-driven suggestions, and error diagnostic tools.A community-driven template library will facilitate shared learning.For low-resource settings, lightweight versions of the system will be developed through model compression, ONNX support, and hybrid cloud-edge architecture to ensure accessibility on affordable hardware like Jetson Nano and Raspberry Pi.This approach will enable offline usage and reduce computational demands with features like “Light Mode.†Enhance semantic coherence and visual diversity, transformer based models like CLIP-ViT, Stable Diffusion, and VILT will be integrated into the system.These models will improve prompt alignment, output diversity, and multimodal input processing.The hybrid architecture will merge GAN-based sketch generation with transformer-driven text interpretation, supported by UI features like prompt sliders and attention visualizations.These advancements aim to make AI-powered art education more creative, relevant, and accessible.

数据可用性

The data used in this study, including student-generated sketches, training art images, and GAN output samples, were collected and curated internally and cannot be publicly shared due to licensing constraints and institutional agreements.However, relevant publicly available datasets are accessible for replication and benchmarking purposes: Quick, Draw!Dataset (Google): https://quickdraw.withgoogle.com/data.Sketchy Database (Georgia Tech): http://sketchy.eye.gatech.edu.Behance Artistic Media (BAM!) Dataset: https://bam-dataset.org.WikiArt Visual Art Encyclopedia: https://www.wikiart.org These datasets offer comparable sketch and artwork features, and may support further research on AI-driven generative systems in educational art environments.

参考

Guettala, M., Bourekkache, S., Kazar, O. & Harous, S. Generative artificial intelligence in education: Advancing adaptive and personalized learning.Acta Inf Prag 13(3), 460–489 (2024).

Kavyashree, K. N., & Shidaganti, G. Generative adversarial networks (gans) for education: State-of-art and applications.In: International conference on power engineering and intelligent systems (PEIS), pp. 355–370.Springer, Singapore (2024).

Eerdenisuyila, E., Li, H. & Chen, W. The analysis of generative adversarial network in sports education based on deep learning.科学。代表。 14(1), 1–14 (2024).

Kavyashree, K. N., & Shidaganti, G. Generative adversarial networks (gans) for education: State-of-art and applications.In: International conference on power engineering and intelligent systems (PEIS), pp. 355–370.Springer, Singapore (2024).

Zhang, H. et al.A hybrid prototype method combining physical models and generative artificial intelligence to support creativity in conceptual design.ACM Trans Comput-Human Interact 31(5), 1–34 (2024).

Eerdenisuyila, E., Li, H. & Chen, W. The analysis of generative adversarial network in sports education based on deep learning.科学。代表。 14(1), 1–14 (2024).

Gao, L. X. Dance creation based on the development and application of a computer three-dimensional auxiliary system.Int J Marit Eng 1(1), 347–358 (2024).

Sun, G., Xie, W., Niyato, D., Du, H., Kang, J., Wu, J., Sun, S., & Zhang, P. Generative AI for advanced UAV networking.IEEE Network (2024).

Gao, M. & Pu, P. Generative adversarial network-based experience design for visual communication: An innovative exploration in digital media arts.IEEE访问 12, 92035–92042 (2024).

Wang, Y. & Liang, S. A fast scenario transfer approach for portrait styles through collaborative awareness of convolutional neural network and generative adversarial learning.J Circuits, Syst Comput 33(07), 2450121 (2024).

Li, Q., Tang, Y. & Chu, L. Generative adversarial networks for prognostic and health management of industrial systems: A review.Expert Syst Appl 253, 124341 (2024).

Yuan, Y., Li, Z. & Zhao, B. A survey of multimodal learning: Methods, applications, and future.ACM Comput Surv. 57(7), 1–34 (2025).

Zeshan, A. H. Transcending object: A critical evaluation of integration of AI with architecture

Hatami, M. et al.A survey of the real-time metaverse: Challenges and opportunities.未来的互联网 16(10), 379 (2024).

Gao, L. X. Dance creation based on the development and application of a computer three-dimensional auxiliary system.Int J Marit Eng 1(1), 347–358 (2024).

Su, H., Zhang, J., & Tang, S. Artificial intelligence innovations in visual arts and design education.In: Integrating technology in problem-solving educational practices, pp. 219–240.IGI Global, (2025).

Jan, M. B., Rashid, M., Vavekanand, R. & Singh, V. Integrating explainable AI for skin lesion classifications: A systematic literature review.Stud Med Health Sci 2(1), 1–14 (2025).

Eerdenisuyila, E., Li, H. & Chen, W. The analysis of generative adversarial network in sports education based on deep learning.科学。代表。 14(1), 30318 (2024).

Wang,C。等。A traffic flow data restoration method based on an auxiliary discrimination mechanism-oriented GAN model.IEEE Internet Things J 11(12), 22742–22753 (2024).

Gao, W., Mei, Y., Duh, H. & Zhou, Z. Envisioning the incorporation of generative artificial intelligence into future product design education: Insights from practitioners, educators, and students.Des J 28(2), 346–366 (2024).

Vavekanand, R., Das, B. & Kumar, T. Daugsindhi: A data augmentation approach for enhancing Sindhi language text classification.Discov Data 3(1), 22 (2025).

Cai, Q., Zhang, X. & Xie, W. Art teaching innovation based on computer aided design and deep learning model.Comput Aided Des Appl 21(S14), 124–139 (2024).

Cai, Q., Zhang, X. & Xie, W. Art teaching innovation based on computer aided design and deep learning model.Comput Aided Des Appl 21(S14), 124–139 (2024).

Tang, W.Y., Xiang, Z.R., Yu, S.L., Zhi, J.Y., & Yang, Z. Psr-gan: A product concept sketch rendering method based on generative adversarial network and colour tags.Journal of Engineering Design, 1–23 (2025)

Kavyashree, K.N., & Shidaganti, G. Generative adversarial networks (GANS) for education: State-of-art and applications.In: International conference on power engineering and intelligent systems (PEIS), pp. 355–370.Springer, Singapore (2024)

Wang, X., Li, C., Sun, Z. & Hui, L. Review of GAN-based research on Chinese character font generation.下巴。J. Electron. 33(3), 584–600 (2024).

Mittal, U., Sai, S., & Chamola, V. A comprehensive review on generative AI for education.IEEE Access (2024)

Almeda, S.G., Zamfirescu-Pereira, J.D., Kim, K.W., Mani Rathnam, P., & Hartmann, B. Prompting for discovery: Flexible sense-making for AI art-making with dreamsheets.In: Proceedings of the 2024 CHI conference on human factors in computing systems, pp. 1–17 (2024)

Berryman, J. Creativity and style in GAN and AI art: Some art-historical reflections.Philos Technol 37(2), 61 (2024).

Cai, Q., Zhang, X. & Xie, W. Art teaching innovation based on computer aided design and deep learning model.Comput Aided Des Appl 21(S14), 124–139 (2024).

Xie, H., Song, W. & Kang, W. Learning an augmented RGB representation for dynamic hand gesture authentication.IEEE Trans Circuits Syst Video Technol 34(10), 9195–9208 (2024).

Lang, Q., Wang, M., Yin, M., Liang, S., & Song, W. Transforming education with generative AI (GAI): Key insights and future prospects.IEEE Transactions on Learning Technologies (2025)

Fang, F. & Jiang, X. The analysis of artificial intelligence digital technology in art education under the internet of things.IEEE访问 12, 22928–22937 (2024).

Du, X. et al.Deepthink: Designing and probing human-AI co-creation in digital art therapy.int。J. Hum Comput Stud. 181, 103139 (2024).

Uke, S., Surase, O., Solunke, T., Marne, S., & Phadke, S. Sketches to realistic images: A multi-gan based image generation system.In: 2024 5th International conference on data intelligence and cognitive informatics (ICDICI), pp. 1489–1496.IEEE, (2024)

Wan, M. & Jing, N. Style recommendation and simulation for handmade artworks using generative adversarial networks.科学。代表。 14(1), 28002 (2024).

Sobhan, A., Abuzuraiq, A.M., & Pasquier, P. Autolume 2.0: A GAN-based no-coding small data and model crafting visual synthesizer (2024)

Tian, Q., & Li, Q. Combining creative adversarial networks with art design models and machine vision feedback optimization (2024)

Huang, S. & Ismail, A. I. B. Generative adversarial network to evaluate the ceramic art design through virtual reality with augmented reality.int。J. Intell。系统。应用。工程 12, 508–520 (2024).

Kalita, S.S., Mahajan, P., & Sharanya, S. Generative adversarial network art generator for sculpture analysis.In: 2024 International conference on communication, computing and internet of things (IC3IoT), pp. 1–6.IEEE, (2024)

Huang, X., Fan, Y., Huang, Q., Chen, J., & Fu, Y. Personalized learning pathways with generative adversarial networks in intelligent education management.In: Second international conference on big data, computational intelligence, and applications (BDCIA 2024), vol.13550, pp. 828–835.SPIE, (2025)

Gan, Y., Yang, C., Ye, M., Huang, R. & Ouyang, D. Generative adversarial networks with learnable auxiliary module for image synthesis.ACM Trans。Multimed.计算。社区。应用。 21(4), 1–21 (2025).

Yeganeh, L. N., Fenty, N. S., Chen, Y., Simpson, A. & Hatami, M. The future of education: A multi-layered metaverse classroom model for immersive and inclusive learning.未来的互联网 17(2), 63 (2025).

Wei, H. Exploring the teaching mode of integrating non-heritage culture into university animation art education from an epistemological philosophical perspective.Cultura: Int J Philos Culture Axiol 21(1), 491–506 (2024).

Vavekanand, R. From language to action: A study on the evolution of large language models to large action models (2024)

致谢

This research is supported by the Social Science Foundation of Jiangsu Province, China (No.24YSD009), Project Leader (Yongjun He).This project is jointly supported by the Jiangsu Provincial Philosophy Social Sciences Planning Office and Nanjing University of the Arts.I hereby express my gratitude.This research project was approved for funding in December 2024. The research will be funded for a period of 3 years, and is expected to be completed by December 2027.

道德声明

Competing interests

The authors declare no conflict of interest related to this study.

道德认可

This study was approved by the Institutional Ethics Committee of Nanjing University of the Arts and conducted in accordance with the Declaration of Helsinki.Informed consent was obtained from all participants, and for those under the legal age of consent, consent was provided by their parent or legal guardian.The ethics committee complies with GCP, ICH-GCP, and relevant Chinese regulations.

附加信息

Publisher’s note

关于已发表的地图和机构隶属关系中的管辖权主张,Springer自然仍然是中立的。

权利和权限

开放访问This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material.您没有根据本许可证的许可来共享本文或部分内容的改编材料。The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material.If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.To view a copy of this licence, visithttp://creativecommons.org/licenses/by-nc-nd/4.0/。重印和权限

引用本文

He, Y., Zhang, S. Enhancing art creation through AI-based generative adversarial networks in educational auxiliary system.

Sci代表15 , 29202 (2025).https://doi.org/10.1038/s41598-025-14164-z

已收到:

公认:

出版:

doi:https://doi.org/10.1038/s41598-025-14164-z