AI-assisted multi-modal information for the screening of depression: a systematic review and meta-analysis

Author: Jiang, Jiehui

Abstract

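Depression is a prevalent and costly mental disorder across all age groups. Artificial intelligence (AI)-assisted physiological and behavioral information, such as electroencephalography (EEG), eye movement, video or audio monitoring, and gait analysis, offers promising tools for depression screening. We systematically reviewed the classification performance of these AI-assisted measures in depression screening. A comprehensive literature search was conducted in Google Scholar, Web of Science, and IEEE Xplore for publications up to June 7, 2025. Reported AUC values were calculated from the results of all eligible studies. The pooled AUC of AI-assisted multi-modal methods was 0.95 (95% CI: 0.92–0.96), outperforming uni-modal methods (pooled AUC: 0.84–0.92). Subgroup analysis showed that deep learning models achieved higher performance, with an AUC of 0.95 (95% CI: 0.93–0.97). These findings highlight the potential of AI-based multi-modal information in depression screening and underscore the need to establish standardized databases and improve study design.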


Introduction

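Depression is the leading mental health disorder worldwide and a major contributor to the global burden of disability1. Its underlying pathogenesis remains unclear, and reliable screening in clinical practice is challenging because current assessments rely mainly on patient history and self-report2. This makes large-scale community screening difficult and increases the risk of missed diagnosis. Effective and accurate diagnostic methods for depression are therefore essential for early intervention. Recently, with advances in medical technology, cost-effective, non-invasive, and easily collected techniques such as EEG, eye movement, video or audio monitoring, and gait analysis have been increasingly applied to depression screening3–6. These methods provide objective indicators derived from physiological and behavioral information.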

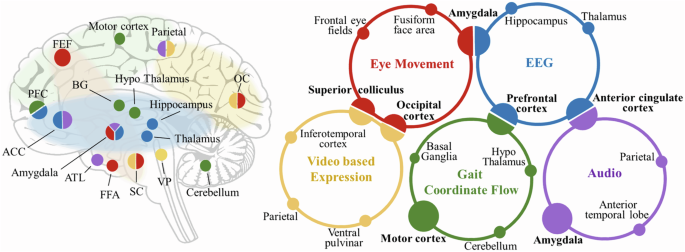

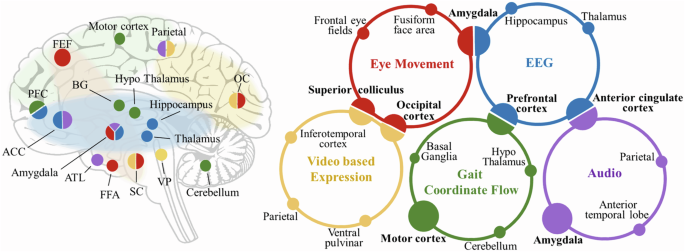

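Depression is a clinically and etiologically heterogeneous disorder7, and this heterogeneity is reflected in the variability of objectively measured biomarkers detected through physiological and behavioral assessments. Using EEG during face recognition or attention tasks, researchers have detected abnormal brain activation in the emotional networks of patients with depression, including the dorsolateral prefrontal cortex (DLPFC), anterior cingulate cortex (ACC), and orbitofrontal cortex8–11. In addition, eye-tracking studies have shown that patients with depression tend to attend more to negative stimuli while neglecting positive ones when viewing emotional images12–14, which may be accompanied by changes in the frontal eye field (FEF), parietal cortex, and occipital cortex. Patients with depression also exhibit a slower speech rate and more negative facial expressions15. Furthermore, there is a bidirectional interaction between the sensorimotor system and both emotion-related brain networks and higher cognitive functions16,17, and depression is a severely debilitating disorder that can lead to gait abnormalities18. The brain regions associated with the different types of physiological and behavioral information are summarized in Fig. 1. However, owing to the multifaceted nature of depressive symptoms, uni-modal physiological or behavioral information may not capture enough information for effective screening19.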

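Current studies suggest that multi-modal information can lead to more personalized and accurate screening20. However, exploring the correlation and complementarity among different types of information remains a pressing challenge. With the development of artificial intelligence (AI), researchers are seeking more automated, objective, efficient, and real-time methods for depression screening. AI techniques, particularly deep learning (DL), are expected to improve the accuracy of multi-modal feature integration, thereby enhancing classification performance and reducing screening errors. Although numerous studies have employed various AI algorithms20–22, a comprehensive meta-analysis of depression screening using multiple modalities in digital healthcare is still lacking.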

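This study systematically examines whether integrating AI-assisted (i.e., using AI algorithms, including machine learning and deep learning, for feature extraction and classification) multi-modal physiological and behavioral information can improve the classification performance of depression screening. Multi-modal AI-assisted screening methods have already achieved major breakthroughs in other medical fields23,24, suggesting their potential for mental health applications. Previous meta-analyses have explored digital signals from wearable devices (e.g., physical activity and location data)21 and social media platforms (including tweets and communication logs)25,26 as potential indicators of depression. In contrast, our meta-analysis focuses on objectively measured physiological and behavioral information commonly used in fusion frameworks, such as EEG, eye movement, facial expression, speech, and gait. Through a systematic meta-analysis of modeling results derived from published data, we aim to highlight the unique value of multi-modal integration and to outline future directions for more effective and objective depression screening.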

Results

Study selection and characteristics of eligible studies

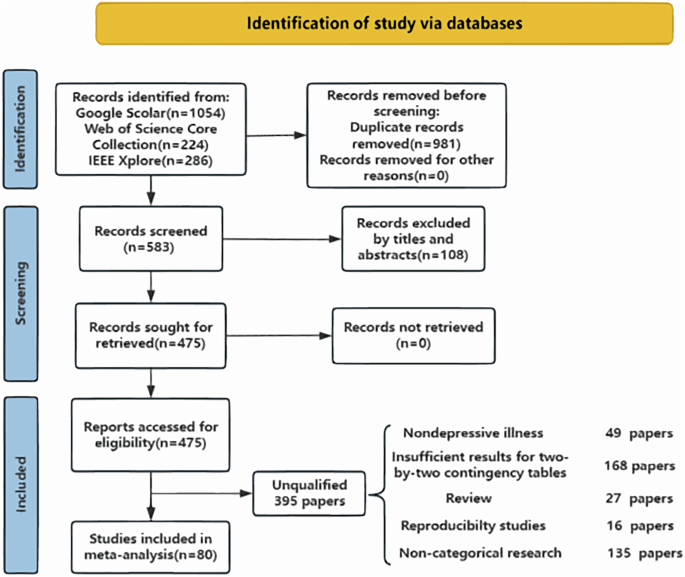

A total of 1564 records were identified in the initial search, of which 981 were duplicates. After title and abstract screening, 108 studies were excluded as clearly unrelated to the research topic, leaving 475 articles for full-text eligibility assessment. Of these, 397 were excluded after full-text review, resulting in 80 studies included in the qualitative synthesis (33 EEG studies, 6 eye movement studies, 6 video studies, 16 audio studies, 6 gait studies, and 13 multi-modal studies). These 80 studies27–106 provided sufficient data to meet the inclusion criteria for the meta-analysis (Fig. 2). Detailed characteristics of the included studies are shown in Table 1 and Supplementary Tables 1–6. All studies used retrospective data.

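Of the 80 studies, 71 (89%) explicitly reported that the ground truth for their classification labels came from diagnostic criteria or rating scales (e.g., DSM-IV and DSM-5, PHQ-8 and PHQ-9). The remaining nine studies did not specify any diagnostic or screening criteria for depression. In addition, the data in 38 of the 80 studies (47.5%) came from public datasets, whereas the remaining studies collected proprietary datasets (42/80, 52.5%). The most common ground-truth assessments were the Diagnostic and Statistical Manual of Mental Disorders, Fourth/Fifth Edition (25/80, 31.3%) and the nine-/eight-item Patient Health Questionnaire (26/80, 32.5%). By modality, the reported proportions were EEG (24/38, 63.1%), video interviews (8/16, 50.0%), audio (23/30, 76.7%), eye movement (7/7, 100.0%), and gait (66.7%). Together, these studies employed 19 algorithms, the most frequently used being support vector machine (SVM) (36/80, 45.0%), k-nearest neighbors (KNN) (15/80, 18.8%), and convolutional neural network (CNN) (17/80, 21.3%).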

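Among the multi-modal depression screening studies, 13 studies conducted 15 investigations covering different modality fusion methods and four outcomes. Four investigations used EEG and audio information (4/15, 26.7%), one used EEG and eye movement (1/15, 6.7%), and the remaining ten used audio, video, and text information (10/15, 66.7%).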

Pooled performance of AI algorithms in classifying depression versus normal controls (NC)

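Table 2 summarizes the overall performance estimates of AI-assisted depression screening for each modality. Forest plots are provided in Supplementary Figs. 1–12, and SROC curves in Supplementary Figs. 13–18.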

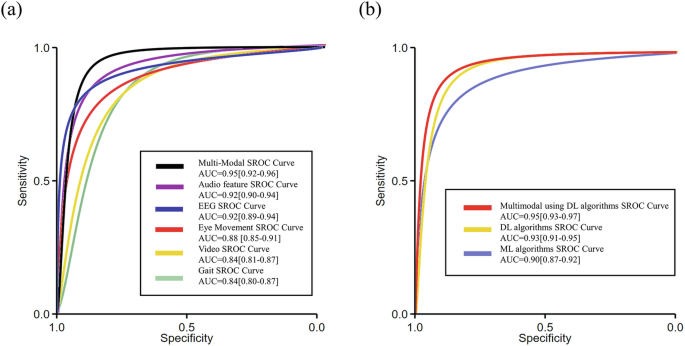

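The 33 studies involving EEG-based depression screening provided sufficient data to construct 88 contingency tables and determine classification performance metrics. The pooled estimates for this group were a sensitivity (SE) of 0.85 (95% CI: 0.84–0.87), a specificity (SP) of 0.86 (95% CI: 0.84–0.88), and an AUC of 0.92 (95% CI: 0.89–0.94) (Fig. 3). When only the best-performing contingency table from each study was selected, the pooled SE was 0.86 (95% CI: 0.83–0.89), the pooled SP was 0.89 (95% CI: 0.85–0.92), and the AUC was 0.94 (95% CI: 0.92–0.96).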

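For the six studies on eye movement-based depression screening, the pooled estimates were an SE of 0.77 (95% CI: 0.72–0.81), an SP of 0.86 (95% CI: 0.83–0.89), and an AUC of 0.88 (95% CI: 0.85–0.91) (Fig. 3). When only the best-performing contingency table from each study was selected, the pooled SE was 0.81 (95% CI: 0.74–…), the pooled SP was 0.85 (95% CI: 0.77–0.90), and the AUC was 0.90 (95% CI: 0.87–0.92).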

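Six studies on video-based depression screening provided 13 contingency tables. The pooled estimates for this group were an SE of 0.83 (95% CI: 0.78–0.87), an SP of 0.75 (95% CI: 0.70–0.80), and an AUC of 0.86 (95% CI: 0.82–0.88) (Fig. 3). When only the best-performing contingency table from each study was selected, the pooled SE was 0.82 (95% CI: 0.72–0.89), the pooled SP was 0.78 (95% CI: 0.66–0.87), and the AUC was 0.87 (95% CI: 0.84–0.90).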

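Sixteen studies on audio-based depression screening provided 28 contingency tables. The pooled estimates for this group were an SE of 0.86 (95% CI: 0.81–0.89), an SP of 0.86 (95% CI: 0.81–0.90), and an AUC of 0.92 (95% CI: 0.90–0.94) (Fig. 3). When only the best-performing contingency table from each study was selected, the pooled SE was 0.86 (95% CI: 0.80–0.91), the pooled SP was 0.90 (95% CI: 0.86–0.93), and the AUC was 0.95 (95% CI: 0.92–0.96).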

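A total of 25 contingency tables were obtained from six studies on gait-based depression screening. The pooled estimates for this group were an SE of 0.79 (95% CI: 0.73–0.84), an SP of 0.77 (95% CI: 0.73–0.81), and an AUC of 0.84 (95% CI: 0.81–0.87) (Fig. 3). When only the best-performing contingency table from each study was selected, the pooled SE was 0.85 (95% CI: 0.75–0.91), the pooled SP was 0.83 (95% CI: 0.78–…), and the AUC was 0.90 (95% CI: 0.87–0.92).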

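For all multi-modal depression screening studies, the 39 contingency tables from 13 studies yielded favorable results. The pooled estimates for this group were an SE of 0.88 (95% CI: 0.85–0.91), an SP of 0.90 (95% CI: 0.88–0.91), and an AUC of 0.95 (95% CI: 0.92–0.96) (Fig. 3). When only the best-performing contingency table from each study was selected, the pooled SE was 0.89 (95% CI: 0.84–0.93), the pooled SP was 0.91 (95% CI: 0.88–0.94), and the AUC was 0.96 (95% CI: 0.94–0.97).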

Heterogeneity analysis

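To explore heterogeneity between studies, we further analyzed the studies of each modality according to the features or signals fed into the classifier (Table 3).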

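Low heterogeneity was observed in depression classification in eye movement studies, with an I² of 33.15% (95% CI: 0.00–66.40) for SE and 47.67% (95% CI: 22.76–72.57) for SP. For EEG, low-to-moderate heterogeneity was observed when distinguishing depression from NC, with an I² of 32.64% (95% CI: 14.76–50.51) for SE and 56.14% (95% CI: 45.67–66.61) for SP. Low-to-moderate heterogeneity was also observed in depression classification in multi-modal studies, with an I² of 66.67% (95% CI: 55.53–77.82) for SE and 50.18% (95% CI: 31.74–68.61) for SP. However, moderate-to-high heterogeneity was observed in video-, audio-, and gait-based studies. In contrast, across the 19 subgroup analyses of all six modalities, the 38 I² values for SE and SP generally indicated low-to-moderate heterogeneity; specifically, 37 of them were below 75%.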
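The I² values above are derived from Cochran's Q statistic. As a hedged sketch, assuming per-study estimates on a common scale (for example, logit sensitivities) with known within-study variances, I² = max(0, (Q − df)/Q) × 100% with df = k − 1 for k studies. The inputs below are hypothetical, and the sketch does not reproduce the confidence intervals for I² reported by the analysis software.

```python
def cochran_q(estimates, variances):
    """Cochran's Q: inverse-variance weighted squared deviations from the fixed-effect mean."""
    weights = [1.0 / v for v in variances]
    mean = sum(w * y for w, y in zip(weights, estimates)) / sum(weights)
    return sum(w * (y - mean) ** 2 for w, y in zip(weights, estimates))


def i_squared(q, k):
    """Higgins' I^2 in percent for k studies: max(0, (Q - df) / Q) * 100 with df = k - 1."""
    if q <= 0:
        return 0.0
    return max(0.0, (q - (k - 1)) / q) * 100.0


# Hypothetical logit-sensitivity estimates and within-study variances for three studies.
logit_se = [1.2, 1.9, 0.6]
variances = [0.05, 0.08, 0.06]
q = cochran_q(logit_se, variances)
print(round(i_squared(q, len(logit_se)), 1))
```

Because I² is floored at zero, any Q smaller than its degrees of freedom is reported as 0%, which is why a lower CI bound of 0.00 can appear.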

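The results revealed statistically significant differences among covariates. Publication bias for groups and subgroups, assessed by visual inspection of funnel plots, is shown in Supplementary Figs. 19–24.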

Quality assessment

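The quality of the included studies was assessed using QUADAS-2. Detailed results are shown in Supplementary Fig. 25. Almost half of the included studies (N = 38) showed a high or unclear risk of bias in patient selection, because the information provided was insufficient to verify whether eligible patients had been enrolled as an appropriate consecutive sample.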

Discussion

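In recent years, automated depression screening methods have become increasingly diverse, especially when combined with multi-modal physiological and behavioral information. However, most existing studies focus on uni-modal approaches. For example, the classification accuracy of depression screening using wearable devices and AI ranged from 70% to 89%21, which is similar to the performance of the uni-modal methods in our meta-analysis. Data formats for depression screening also differ substantially across modalities, and AI techniques still face challenges in integrating multi-modal inputs effectively. Given these challenges, we aimed to explore whether AI-assisted multi-modal information could further improve the classification performance of depression screening. We ensured the integrity of the study by strictly adhering to systematic review guidelines. Although our results show that uni-modal analyses using speech or EEG alone achieve satisfactory diagnostic performance, integrating multiple modalities yielded higher classification performance. Notably, AI-assisted multi-modal methods achieved an AUC of up to 0.96 in distinguishing patients with and without depression (Table 2).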

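Owing to decades of rapid development, EEG and audio signals have become key tools for the screening and treatment of mental disorders107. The availability of relatively sufficient data now allows meta-analyses to validate some EEG findings. Several EEG studies have identified a link between hemispheric asymmetry in frontal cortical regions and depressive symptoms108. Current studies comparing left and right frontal electrodes consistently show that, in eyes-open/eyes-closed tasks29,41,56, EEG recorded from right frontal electrodes contains more depression-related information than EEG from left frontal electrodes. This suggests that patients with depression may exhibit abnormally increased right frontal activity at rest, providing important insights for future research on this region109. For audio-based depression screening, there are two main approaches: extracting acoustic features for traditional machine learning, or applying end-to-end deep learning to raw time-domain audio signals or spectrograms. Our subgroup meta-analysis showed that machine learning with prosodic features achieved classification performance similar to that of deep learning methods (AUC = 0.92 and 0.94, respectively), with 17% higher specificity but 8% lower sensitivity (Fig. 3). However, lower sensitivity increases the risk of missing patients with depression. Therefore, as for all modalities, striking an optimal balance between sensitivity and specificity is essential for developing effective depression screening tools. Despite the promise of EEG and audio signals, other single modalities did not perform as well, which motivates the exploration of multi-modal approaches.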
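Hemispheric asymmetry of the kind discussed above is often summarized as frontal alpha asymmetry, ln(right alpha power) − ln(left alpha power), for a homologous electrode pair such as F4/F3. The NumPy sketch below assumes an 8–13 Hz alpha band and a Hann-windowed periodogram, which is simpler than the Welch estimator many EEG studies use; the electrode pair, sampling rate, and signals are hypothetical and not taken from the included studies.

```python
import numpy as np


def band_power(signal, fs, low, high):
    """Mean Hann-windowed periodogram power of `signal` between `low` and `high` Hz."""
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()  # remove the DC offset
    spectrum = np.abs(np.fft.rfft(x * np.hanning(len(x)))) ** 2
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    in_band = (freqs >= low) & (freqs <= high)
    return spectrum[in_band].mean()


def frontal_alpha_asymmetry(left, right, fs, band=(8.0, 13.0)):
    """ln(right alpha power) - ln(left alpha power) for a homologous pair such as F4/F3."""
    return np.log(band_power(right, fs, *band)) - np.log(band_power(left, fs, *band))


# Hypothetical 2 s recordings at 256 Hz: a 10 Hz rhythm that is weaker on the right.
fs = 256
t = np.arange(2 * fs) / fs
f3 = np.sin(2 * np.pi * 10 * t)
f4 = 0.5 * np.sin(2 * np.pi * 10 * t)
faa = frontal_alpha_asymmetry(f3, f4, fs)
# Halving the amplitude quarters the power, so faa equals ln(0.25), about -1.386.
```

A negative value means less alpha power over the right electrode. Under the common interpretation that alpha power varies inversely with cortical activity, this corresponds to relatively greater right frontal activity, the pattern described in the paragraph above.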

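Among screening methods using uni-modal information, algorithms based on feature extraction outperformed other algorithms in the subgroup analysis (Table 3). This difference may be attributable to rapid advances in feature extraction, especially for EEG107 and speech data108. In this study, we compiled statistics on the frequency and selection rate of the specific features used for each modality in the literature (Table 1). This analysis identifies frequently used features, such as power spectral density and Lempel–Ziv complexity from EEG data and fixation duration from eye movement data, and allows their importance in uni-modal and multi-modal screening to be assessed. It thus provides reference data for optimizing feature selection strategies and improving the accuracy of depression screening in future studies.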
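Lempel–Ziv complexity, one of the EEG features named above, is usually computed by binarizing the signal and counting the distinct phrases produced by the Lempel–Ziv (1976) parsing. The sketch below follows the Kaspar–Schuster counting procedure; binarizing around the median and normalizing by n / log2(n) are common choices that we assume here, not settings reported by the included studies.

```python
import math
import statistics


def binarize_by_median(signal):
    """Encode each sample as '1' if it lies above the signal median, else '0'."""
    m = statistics.median(signal)
    return "".join("1" if x > m else "0" for x in signal)


def lempel_ziv_complexity(s):
    """Number of distinct phrases in the Lempel-Ziv (1976) parsing of string s."""
    if not s:
        raise ValueError("sequence must be non-empty")
    n = len(s)
    complexity, prefix_len, sub_len, max_sub_len, pointer = 1, 1, 1, 1, 0
    while prefix_len + sub_len <= n:
        if s[pointer + sub_len - 1] == s[prefix_len + sub_len - 1]:
            sub_len += 1  # the current phrase can still be copied from earlier text
        else:
            max_sub_len = max(sub_len, max_sub_len)
            pointer += 1
            if pointer == prefix_len:  # no earlier start reproduces it: a new phrase ends
                complexity += 1
                prefix_len += max_sub_len
                pointer, sub_len, max_sub_len = 0, 1, 1
            else:
                sub_len = 1
    if sub_len != 1:  # the sequence ended in the middle of a phrase
        complexity += 1
    return complexity


def normalized_lzc(s):
    """Complexity scaled by n / log2(n), the asymptotic value for a random binary sequence."""
    n = len(s)
    return lempel_ziv_complexity(s) * math.log2(n) / n


# Classic example: 1|0|01|1110|1100|0010 has six phrases.
print(lempel_ziv_complexity("1001111011000010"))  # 6
```

In an EEG pipeline, `binarize_by_median` would be applied per channel and epoch before `normalized_lzc`, so that epochs of different lengths remain comparable.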

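Current studies use two main multi-modal fusion approaches. The first combines EEG and audio and distinguishes depression from NC with a pooled SE of 0.89 (95% CI: 0.83–0.92), a pooled SP of 0.88 (95% CI: 0.85–0.91), and an AUC of 0.93 (95% CI: 0.91–0.95). The second integrates video, audio, and text and distinguishes depression from NC with a pooled SE of 0.88 (95% CI: 0.84–0.92), a pooled SP of 0.91 (95% CI: 0.88–0.93), and an AUC of 0.95 (95% CI: 0.93–0.97) (Table 3). These observations underscore that AI-based multi-modal fusion is a promising tool for depression screening. The improvement may be attributable to several factors. First, AI-assisted multi-modal physiological and behavioral information can integrate diverse data formats effectively, reducing the noise and variability inherent in uni-modal data. Second, the standardized and stable screening process of AI can minimize subjective errors. Third, although patients may try to conceal their condition during clinical assessment, they cannot easily hide physiological signals (e.g., EEG) or behavioral responses (e.g., micro-expressions).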

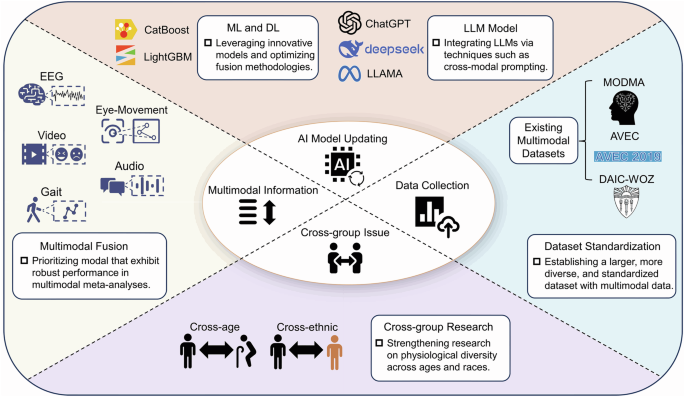

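Despite this progress, current studies still need several technical improvements. Many studies using machine learning (ML) methods continue to rely on classic models such as SVM and KNN, applied mainly to features concatenated from different modalities. Exploring more advanced models may be beneficial. For example, extreme gradient boosting (XGBoost) and its derivatives (e.g., LightGBM, CatBoost) often outperform traditional classifiers across a range of tasks and also provide feature importance scores or decision-tree visualizations, enhancing interpretability in multi-modal data analysis110–112. This transparency matters because understanding the contribution of each modality can lead to better model interpretation and refinement. Multiple kernel learning allows multiple kernels113, each tailored to a specific modality, to be integrated to better synthesize heterogeneous data114. This approach can capture the distinctive characteristics of each modality more effectively, thereby improving overall model performance.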
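Multiple kernel learning combines one kernel per modality, typically as a weighted sum K = Σ_m β_m K_m with β_m ≥ 0. The sketch below is a simplified illustration under stated assumptions: the weights are fixed by hand rather than learned (full multiple kernel learning would optimize β jointly with the classifier), the combined kernel feeds a simple kernel ridge classifier instead of an SVM, and the feature sizes, kernel widths, weights, and data are all hypothetical.

```python
import numpy as np


def rbf_kernel(X, Y, gamma):
    """Gaussian (RBF) kernel matrix exp(-gamma * ||x - y||^2) between rows of X and Y."""
    sq_dist = (
        np.sum(X ** 2, axis=1)[:, None]
        + np.sum(Y ** 2, axis=1)[None, :]
        - 2.0 * X @ Y.T
    )
    return np.exp(-gamma * np.maximum(sq_dist, 0.0))  # clip tiny negative round-off


def combined_kernel(blocks_x, blocks_y, gammas, weights):
    """Convex combination of one RBF kernel per modality (weights fixed here, not learned)."""
    weights = np.asarray(weights, dtype=float)
    if np.any(weights < 0) or not np.isclose(weights.sum(), 1.0):
        raise ValueError("kernel weights must be non-negative and sum to 1")
    K = np.zeros((blocks_x[0].shape[0], blocks_y[0].shape[0]))
    for X, Y, gamma, w in zip(blocks_x, blocks_y, gammas, weights):
        K += w * rbf_kernel(X, Y, gamma)
    return K


def kernel_ridge_predict(K_train, y_train, K_test, lam=1e-2):
    """Kernel ridge classifier for labels in {-1, +1}; returns predicted labels."""
    alpha = np.linalg.solve(K_train + lam * np.eye(K_train.shape[0]), y_train)
    return np.sign(K_test @ alpha)


# Hypothetical toy data: 6 subjects, 4 EEG features and 3 audio features each.
rng = np.random.default_rng(0)
eeg = rng.normal(size=(6, 4))
audio = rng.normal(size=(6, 3))
y = np.array([1, 1, 1, -1, -1, -1], dtype=float)

K = combined_kernel([eeg, audio], [eeg, audio], gammas=[0.5, 0.2], weights=[0.6, 0.4])
predictions = kernel_ridge_predict(K, y, K)
```

Because each modality keeps its own kernel width, EEG and audio features are never forced onto a common scale, which is the main advantage over plain feature concatenation.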

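In addition, DL-based methods improved overall screening accuracy by 3% compared with traditional ML methods (Table 2). Despite this improvement, current studies still face several challenges. For example, because data formats differ substantially across input modalities, 89% of the multi-modal DL investigations (N = 9) adopted a late-fusion strategy, which combines the outputs of modality-specific models at the decision level. Although late fusion requires less data and makes it easier to integrate multiple sensing strategies and algorithms, it may not fully exploit the complementary strengths of different modalities115. Moreover, this approach is more prone to overfitting, especially with small datasets116. To address these limitations, we recommend exploring more sophisticated fusion and alignment techniques in multi-modal DL screening models117.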
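The contrast between feature-level (early) and decision-level (late) fusion can be sketched as follows. This assumes that each modality-specific model already outputs a probability of depression per subject; the probabilities, equal weights, and 0.5 threshold are hypothetical, and weighted soft voting is only one of several possible late-fusion rules.

```python
import numpy as np


def early_fusion(feature_blocks):
    """Feature-level fusion: concatenate each subject's per-modality feature vectors."""
    return np.concatenate(feature_blocks, axis=1)


def late_fusion(prob_blocks, weights=None, threshold=0.5):
    """Decision-level fusion: weighted average of per-modality P(depression), then threshold."""
    probs = np.column_stack(prob_blocks)  # shape (n_subjects, n_modalities)
    if weights is None:
        weights = np.full(probs.shape[1], 1.0 / probs.shape[1])
    fused = probs @ np.asarray(weights, dtype=float)
    return fused, (fused >= threshold).astype(int)


# Hypothetical outputs of separately trained EEG and audio models for three subjects.
eeg_prob = np.array([0.9, 0.2, 0.6])
audio_prob = np.array([0.7, 0.4, 0.3])
fused, labels = late_fusion([eeg_prob, audio_prob])
# fused is approximately [0.8, 0.3, 0.45]; labels are [1, 0, 0]

# Early fusion instead builds one (3, 7) matrix for a single downstream classifier.
eeg_feats = np.zeros((3, 4))
audio_feats = np.ones((3, 3))
joint = early_fusion([eeg_feats, audio_feats])
```

Late fusion only sees each model's final score, so it cannot learn interactions between, for example, a particular EEG pattern and a particular vocal cue. This is the limitation the paragraph above attributes to it.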

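Several areas need attention in future work on multi-modal AI depression screening. Integrating advanced DL techniques is crucial, for example cross-modal attention mechanisms that allow a model to focus dynamically on the most relevant features of each type of information117 (Fig. 4). In addition, techniques such as cross-task and cross-dataset transfer learning can help improve generalization, ensuring robustness and stability in real-world applications118. Integrating multi-modal frameworks with large language models (LLMs) may also help leverage rich contextual semantics, leading to more personalized depression screening. Developing more interpretability tools is likewise essential for understanding and improving AI systems. For example, methods such as SHAP (SHapley Additive exPlanations) values can help clinicians grasp the reasoning behind ML model decisions, increasing trust in screening results119.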
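Cross-modal attention is typically built from scaled dot-product attention in which the queries come from one modality and the keys and values from another. The NumPy sketch below is a single attention head with random projection matrices standing in for learned weights; the modality names, sequence lengths, and dimensions are hypothetical, and a real model would add learned parameters, multiple heads, and residual connections.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax."""
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def cross_modal_attention(query_seq, context_seq, w_q, w_k, w_v):
    """Single-head scaled dot-product attention in which one modality attends to another.

    query_seq:   (T_q, d_q) frames of the querying modality (e.g. audio)
    context_seq: (T_c, d_c) frames of the attended modality (e.g. video)
    """
    q = query_seq @ w_q                     # (T_q, d_k)
    k = context_seq @ w_k                   # (T_c, d_k)
    v = context_seq @ w_v                   # (T_c, d_v)
    scores = q @ k.T / np.sqrt(k.shape[1])  # (T_q, T_c)
    weights = softmax(scores, axis=-1)      # each row sums to 1
    return weights @ v, weights


# Hypothetical sizes: 5 audio frames (8-dim) attending to 7 video frames (6-dim).
rng = np.random.default_rng(42)
audio = rng.normal(size=(5, 8))
video = rng.normal(size=(7, 6))
w_q = rng.normal(size=(8, 4))
w_k = rng.normal(size=(6, 4))
w_v = rng.normal(size=(6, 4))
attended, attn = cross_modal_attention(audio, video, w_q, w_k, w_v)
```

Each row of `attn` shows how strongly one audio frame draws on each video frame. Inspecting these weights is one route to the modality-level interpretability discussed above.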

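Beyond the model framework, proper standardization and preprocessing of data are also important for eliminating noise and format differences between datasets and modalities120,121. For example, in this study, all EEG studies preprocessed the data to remove artifacts such as EMG and ECG, as well as signals at frequencies outside the EEG bands, including power-line interference. Similarly, audio signals require preprocessing such as pre-emphasis, framing, and windowing because of factors such as aliasing, higher-order harmonic distortion, and high-frequency effects of the human vocal tract and recording equipment. Video-based studies also performed face detection and alignment across adjacent frames, and some extracted 68 facial landmarks and action units for further feature extraction. Finally, gait-based studies processed the streams of three-dimensional coordinates of the 25 joints tracked by Kinect devices, which involved discarding incorrectly estimated coordinates or frames with deformed skeletons and performing coordinate transformations. Most studies in this review considered these steps. However, the lack of unified preprocessing standards for some modalities (e.g., gait, eye movement) may introduce bias. Future studies should focus on developing more robust and automated preprocessing pipelines that handle diverse formats effectively.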
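The audio steps named above are commonly implemented as a first-order pre-emphasis filter y[n] = x[n] − αx[n−1], followed by splitting into overlapping frames, each multiplied by a Hamming window. The sketch below assumes α = 0.97, 25 ms frames, and a 10 ms hop, which are common speech-processing defaults rather than settings reported by the included studies; the input clip is random noise used only for illustration.

```python
import numpy as np


def preemphasis(x, alpha=0.97):
    """First-order high-pass filter y[n] = x[n] - alpha * x[n-1], with y[0] = x[0]."""
    x = np.asarray(x, dtype=float)
    return np.append(x[0], x[1:] - alpha * x[:-1])


def frame_and_window(x, fs, frame_ms=25.0, hop_ms=10.0):
    """Split x into overlapping frames and apply a Hamming window to each frame."""
    x = np.asarray(x, dtype=float)
    frame_len = int(round(fs * frame_ms / 1000.0))
    hop = int(round(fs * hop_ms / 1000.0))
    if len(x) < frame_len:  # zero-pad clips shorter than one frame
        x = np.pad(x, (0, frame_len - len(x)))
    n_frames = 1 + (len(x) - frame_len) // hop
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    return x[idx] * np.hamming(frame_len)


# Hypothetical 1 s clip sampled at 16 kHz.
fs = 16000
clip = np.random.default_rng(1).normal(size=fs)
frames = frame_and_window(preemphasis(clip), fs)
# 25 ms frames with a 10 ms hop at 16 kHz: 400-sample frames, 160-sample hop, 98 frames.
```

The windowed frames are the usual input to spectral features such as MFCCs or to spectrogram-based deep learning models.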

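Despite the promising performance of existing models, data availability remains a significant challenge. Depression data are sensitive, which makes it difficult to collect diverse datasets for estimating depression severity. Among the studies included in this meta-analysis (the datasets used in each study are listed in Supplementary Table 1), six public datasets stood out: the PRED+CT dataset (EEG data)122; the AVEC 2011–2021 datasets (e.g., DAIC-WOZ and E-DAIC, with video, audio, and text modalities, mainly in English)123–130; the MODMA dataset (EEG and audio modalities, in Chinese)131; the Black Dog dataset (video and audio modalities, in English); the CMDC dataset (video, audio, and text modalities, in Chinese)98; and the EATD-Corpus dataset (audio modality, in Chinese)106. However, each of these public datasets, as well as the private datasets used in the included studies, contains no more than 280 subjects (sample sizes range from 24 to 275), which limits the ability to identify clinical biomarkers generalizably and reliably, especially with DL methods132,133. We advocate establishing a larger, more diverse, and standardized database of patients with depression, with multi-modal data collected under consistent conditions, devices, and configurations.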

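Although data-driven depression screening using modalities such as EEG, eye movement, facial expression, speech, and gait has the potential to reduce clinical workload and enable early intervention, it also raises ethical challenges. There is a risk of misuse by third parties (e.g., employers or insurance companies), especially when data are used without patients' explicit consent or understanding. Researchers and clinicians must communicate transparently about data use, ensure robust data protection measures, and ensure that participation is voluntary and can be withdrawn at any time. Ongoing ethical oversight is essential to safeguard individual rights and promote the responsible application of these technologies134.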

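This study has several notable limitations. First, we focused on English-language articles and may therefore have missed valuable findings from non-English studies. Second, because the pooled estimates for most algorithms were based on a limited number of studies with limited sample sizes, the results may be biased. In addition, the subgroup analyses still showed some heterogeneity, and further studies should examine the classification performance of AI algorithms in more homogeneous patient groups. Moreover, few studies involved cross-ethnic or cross-age validation, making it difficult to verify whether models trained on one ethnic group or age group are equally effective for others. We also excluded studies that reported only continuous metrics such as MAE or RMSE to maintain analytical consistency, because such evaluations have not been performed for all modalities in the existing literature; future studies could explore continuous outcome measures to broaden the current insights. Some relevant studies, such as ref. 135 and AudiBERT5, reported only F1 scores rather than the counts required for the meta-analysis (TP, TN, FP, FN) and therefore could not be included, which may have introduced inclusion bias. Finally, possibly owing to the influence of public datasets, most multi-modal fusion methods in the literature combined only two modalities, which prevents further investigation of the effectiveness of each modality.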
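F1-only studies cannot be pooled because F1 = 2TP / (2TP + FP + FN) never involves TN, so it cannot be inverted into a full 2 × 2 table. The hypothetical pair of tables below, which are illustrative and not drawn from any included study, has the same F1 but different specificity.

```python
def f1_score(tp, fp, fn):
    """F1 = 2TP / (2TP + FP + FN); true negatives do not appear."""
    return 2 * tp / (2 * tp + fp + fn)


def specificity(tn, fp):
    """SP = TN / (TN + FP)."""
    return tn / (tn + fp)


# Two hypothetical confusion matrices that differ only in the number of true negatives.
table_a = {"TP": 40, "FP": 10, "FN": 10, "TN": 40}
table_b = {"TP": 40, "FP": 10, "FN": 10, "TN": 140}

for name, t in (("A", table_a), ("B", table_b)):
    print(name, round(f1_score(t["TP"], t["FP"], t["FN"]), 3),
          round(specificity(t["TN"], t["FP"]), 3))
# A 0.8 0.8
# B 0.8 0.933
```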

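This study highlights the significant potential of AI algorithms in multi-modal depression screening and outlines promising prospects and development directions for each modality. Despite challenges such as the risks of false positives and false negatives, data privacy and security concerns, and regulatory approval requirements, AI remains a valuable tool for assisting physicians in diagnosis. We further emphasize the need to improve the study design of AI-based depression screening systems and advocate the creation of adequate standardized databases to support further research.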

Methods

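The systematic review and meta-analysis were conducted in accordance with the PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) statement136 (Supplementary Table 7). The study was registered in PROSPERO (CRD420251049107).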

Search strategy and eligibility criteria

Two researchers with experience in computer science and biomedical engineering independently conducted the literature searches and recorded the results. Agreement was then reached with a third reviewer with expertise in data analysis. All searches were performed in Google Scholar, the Web of Science Core Collection, and the IEEE Xplore database for publications up to June 7, 2025. Only English-language articles were included. To investigate strategies for automated, clinically informative depression screening with single and multiple modalities, we searched using a combination of key terms from three categories: (1) depression-related disorders (“depression” OR “major depressive disorder” OR “mood disorder”), (2) screening processes (“detection” OR “diagnosis” OR “screening”), and (3) modalities (“EEG” OR “eye movement” OR “gait” OR “speech” OR “vocal” OR “audio” OR “audiovisual” OR “facial” OR “visual” OR “video” OR “multi-modal”). Search queries were constructed by combining at least one term from each category with the AND operator, while terms within each category were combined using OR. Titles and abstracts were first pre-screened to filter out irrelevant articles. Eligibility was then checked against our criteria (Fig. 2), and ineligible entries were removed after full-text review. Eligible studies that reported AI-assisted depression screening with each modality and classification outcomes such as sensitivity (SE) and specificity (SP) were then used to calculate 2 × 2 contingency tables.

Studies were included if they met the following criteria: (1) the main focus was depression (excluding studies centered on other disorders such as schizophrenia, Alzheimer’s disease, brain trauma, or cancer); (2) the study was technical research (excluding reviews and non-technical papers); (3) the study proposed and evaluated an approach for depression diagnosis that used machine learning or deep learning models to classify depression; and (4) comprehensive classification performance results were reported, specifically accuracy, sensitivity, specificity, recall, precision, or an explicit confusion matrix, enabling direct extraction or calculation of true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN).

Data analysis

Details of the papers were manually summarized in a spreadsheet for quality review, including title, modality, year of publication, data source, type of depression, numbers of patients and normal controls, feature types, algorithm model, and classification performance data.

Classification performance data for the included studies were extracted, and contingency tables were created. These data included accuracy, sensitivity, specificity, recall, precision, or an explicit confusion matrix, which enabled direct extraction or calculation of TP, TN, FP, and FN. The contingency tables were used to construct SROC curves and forest plots and to calculate pooled sensitivity and specificity. If a study provided multiple contingency tables for different AI algorithms, each table was used independently.
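When a study reports only summary metrics, TP, FN, TN, and FP have to be back-calculated. A minimal sketch, assuming the numbers of depressed and control participants in the test set are known, is shown below. The function names are hypothetical, and rounding to the nearest integer (Python's `round`, which breaks exact ties toward the even integer) is our assumption, not a documented rule of this review.

```python
def table_from_se_sp(sensitivity, specificity, n_depressed, n_controls):
    """Rebuild a 2x2 table from reported SE/SP and the size of each test-set group."""
    tp = round(sensitivity * n_depressed)
    tn = round(specificity * n_controls)
    return {"TP": tp, "FN": n_depressed - tp, "TN": tn, "FP": n_controls - tn}


def table_from_precision_recall(precision, recall, n_depressed, n_total):
    """Rebuild a 2x2 table from precision, recall (= SE), class size and total size."""
    tp = round(recall * n_depressed)
    fp = round(tp / precision - tp)  # precision = TP / (TP + FP)
    fn = n_depressed - tp
    return {"TP": tp, "FN": fn, "TN": n_total - tp - fn - fp, "FP": fp}


def se_sp(table):
    """Sensitivity and specificity recomputed from a 2x2 table, as a consistency check."""
    return (table["TP"] / (table["TP"] + table["FN"]),
            table["TN"] / (table["TN"] + table["FP"]))


# Hypothetical study: 40 depressed and 60 control participants in the test set.
t1 = table_from_se_sp(0.85, 0.90, n_depressed=40, n_controls=60)
t2 = table_from_precision_recall(0.85, 0.85, n_depressed=40, n_total=100)
# Both give TP=34, FN=6, TN=54, FP=6.
```

Recomputing SE and SP from the rebuilt table, as `se_sp` does, is a cheap way to catch inconsistent group sizes or rounding in the source paper.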

Risk of bias and applicability appraisal

The risk of bias and applicability of all selected studies were assessed using the QUADAS-2 criteria137. QUADAS-2 provides a standardized framework for assessing risk of bias and applicability in reviews that evaluate diagnostic accuracy, such as the accuracy of AI-assisted screening tests.

Data synthesis

We performed a meta-analysis of studies using contingency tables to estimate the classification performance of AI methods, including machine learning (ML) and DL algorithms. Because differences between studies were assumed, a random-effects model was used. We conducted a meta-analysis of all uni-modal and multi-modal literature data; the number of studies for every modality was greater than five, which meets the requirement for random-effects analysis138. We used the contingency tables to construct stratified summary receiver operating characteristic (SROC) curves and calculated summary sensitivity and specificity, allowing for high between-study heterogeneity. In each SROC plot, the 95% confidence region and 95% prediction region are drawn around the summary estimate of SE and SP, and the AUC is derived from the summary curve. Heterogeneity was assessed using the I² statistic. All SROC curves were also summarized in one graph to facilitate comparison. Analyses were performed in STATA statistical software (version 17.0; Midas and Metandi modules; StataCorp). Statistical significance was set at P < 0.05.
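Midas and Metandi fit a bivariate random-effects model that pools sensitivity and specificity jointly. As a simpler, hedged illustration of what a random-effects pooling does, the sketch below pools each proportion separately on the logit scale with the DerSimonian–Laird estimator of the between-study variance τ². This univariate approach is a swapped-in simplification, not the model used in this review: it ignores the correlation between sensitivity and specificity and will generally give somewhat different numbers. The contingency tables are hypothetical.

```python
import math


def logit_and_variance(events, total):
    """Logit of events/total and its approximate variance, with a 0.5 correction at 0 or total."""
    if events == 0 or events == total:
        events, total = events + 0.5, total + 1.0
    p = events / total
    return math.log(p / (1.0 - p)), 1.0 / events + 1.0 / (total - events)


def dersimonian_laird(estimates, variances):
    """Random-effects pooled estimate, its standard error, and tau^2 (DerSimonian-Laird)."""
    w = [1.0 / v for v in variances]
    fixed = sum(wi * yi for wi, yi in zip(w, estimates)) / sum(w)
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, estimates))
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - (len(estimates) - 1)) / c) if c > 0 else 0.0
    w_re = [1.0 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_re, estimates)) / sum(w_re)
    return pooled, math.sqrt(1.0 / sum(w_re)), tau2


def expit(x):
    """Inverse logit."""
    return 1.0 / (1.0 + math.exp(-x))


def pool_proportion(events, totals, z=1.96):
    """Pooled proportion with 95% CI on the probability scale, plus tau^2 on the logit scale."""
    pairs = [logit_and_variance(e, n) for e, n in zip(events, totals)]
    pooled, se, tau2 = dersimonian_laird([y for y, _ in pairs], [v for _, v in pairs])
    return expit(pooled), expit(pooled - z * se), expit(pooled + z * se), tau2


# Hypothetical contingency tables (not data from the included studies).
tables = [
    {"TP": 45, "FN": 5, "TN": 40, "FP": 10},
    {"TP": 30, "FN": 20, "TN": 55, "FP": 5},
    {"TP": 38, "FN": 12, "TN": 47, "FP": 13},
]
sens = pool_proportion([t["TP"] for t in tables], [t["TP"] + t["FN"] for t in tables])
spec = pool_proportion([t["TN"] for t in tables], [t["TN"] + t["FP"] for t in tables])
```

Working on the logit scale keeps the back-transformed confidence limits inside (0, 1). When τ² is zero the result reduces to a fixed-effect inverse-variance average.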

Data availability

The datasets used and analyzed during the current study are available from the corresponding author upon reasonable request.

References

Diseases, G. B. D. & Injuries, C. Global burden of 369 diseases and injuries in 204 countries and territories, 1990–2019: a systematic analysis for the Global Burden of Disease Study 2019. Lancet 396, 1204–1222 (2020).

Mitchell, A. J., Vaze, A. & Rao, S. Clinical diagnosis of depression in primary care: a meta-analysis. Lancet 374, 609–619 (2009).

de Aguiar Neto, F. S. & Rosa, J. L. G. Depression biomarkers using non-invasive EEG: a review. Neurosci. Biobehav. Rev. 105, 83–93 (2019).

Stolicyn, A., Steele, J. D. & Series, P. Prediction of depression symptoms in individual subjects with face and eye movement tracking. Psychol. Med. 52, 1784–1792 (2022).

Toto, E., Tlachac, M. & Rundensteiner, E. A. AudiBERT: a deep transfer learning multimodal classification framework for depression screening. In Proceedings 30th ACM International Conference on Information & Knowledge Management 4145–4154 https://doi.org/10.1145/3459637.3481895 (2021).

Francese, R. & Attanasio, P. Emotion detection for supporting depression screening. Multimed. Tools Appl. 82, 12771–12795 (2023).

Hasler, G. Pathophysiology of depression: do we have any solid evidence of interest to clinicians? World Psychiatry 9, 155 (2010).

Han, S. et al. Orbitofrontal cortex-hippocampus potentiation mediates relief for depression: a randomized double-blind trial and TMS-EEG study. Cell Rep. Med. 4, 101060 (2023).

Rajkowska, G. et al. Morphometric evidence for neuronal and glial prefrontal cell pathology in major depression. Biol. Psychiatry 45, 1085–1098 (1999).

Drevets, W. C. et al. Subgenual prefrontal cortex abnormalities in mood disorders. Nature 386, 824–827 (1997).

Mayberg, H. S. et al. Reciprocal limbic-cortical function and negative mood: converging PET findings in depression and normal sadness. Am. J. Psychiatry 156, 675–682 (1999).

Esterman, M. et al. Frontal eye field involvement in sustaining visual attention: evidence from transcranial magnetic stimulation. Neuroimage 111, 542–548 (2015).

Corbetta, M. & Shulman, G. L. Control of goal-directed and stimulus-driven attention in the brain. Nat. Rev. Neurosci. 3, 201–215 (2002).

Lazarov, A., Ben-Zion, Z., Shamai, D., Pine, D. S. & Bar-Haim, Y. Free viewing of sad and happy faces in depression: a potential target for attention bias modification. J. Affect. Disord. 238, 94–100 (2018).

Girard, J. M. et al. Nonverbal social withdrawal in depression: evidence from manual and automatic analysis. Image Vis. Comput. 32, 641–647 (2014).

Deligianni, F., Guo, Y. & Yang, G. Z. From emotions to mood disorders: a survey on gait analysis methodology. IEEE J. Biomed. Health Inf. 23, 2302–2316 (2019).

Kebets, V. et al. Somatosensory-motor dysconnectivity spans multiple transdiagnostic dimensions of psychopathology. Biol. Psychiatry 86, 779–791 (2019).

Schrijvers, D., Hulstijn, W. & Sabbe, B. G. Psychomotor symptoms in depression: a diagnostic, pathophysiological and therapeutic tool. J. Affect. Disord. 109, 1–20 (2008).

Sobin, C. & Sackeim, H. A. Psychomotor symptoms of depression. Am. J. Psychiatry 154, 4–17 (1997).

He, L. et al. Deep learning for depression recognition with audiovisual cues: a review. Inf. Fusion 80, 56–86 (2022).

Abd-Alrazaq, A. et al. Systematic review and meta-analysis of performance of wearable artificial intelligence in detecting and predicting depression. npj Digit. Med. 6, 84 (2023).

Mangalik, S. et al. Robust language-based mental health assessments in time and space through social media. npj Digit. Med. 7, 109 (2024).

Kaczmarczyk, R., Wilhelm, T. I., Martin, R. & Roos, J. Evaluating multimodal AI in medical diagnostics. npj Digit. Med. 7, 205 (2024).

Kline, A. et al. Multimodal machine learning in precision health: a scoping review. npj Digit. Med. 5, 171 (2022).

Cunningham, S., Hudson, C. C. & Harkness, K. Social media and depression symptoms: a meta-analysis. Res. Child Adolesc. Psychopathol. 49, 241–253 (2021).

Yoon, S., Kleinman, M., Mertz, J. & Brannick, M. Is social network site usage related to depression? A meta-analysis of Facebook–depression relations. J. Affect. Disord. 248, 65–72 (2019).

Yang, J. et al. Cross-subject classification of depression by using multiparadigm EEG feature fusion. Comput. Methods Prog. Biomed. 233, 107360 (2023).

Mohammadi, M. et al. Data mining EEG signals in depression for their diagnostic value. BMC Med. Inform. Decis. Mak. 15, 1–14 (2015).

Koo, P. C. et al. Combined cognitive, psychomotor and electrophysiological biomarkers in major depressive disorder. Eur. Arch. Psychiatry Clin. Neurosci. 269, 823–832 (2019).

Nassibi, A., Papavassiliou, C. & Atashzar, S. F. Depression diagnosis using machine intelligence based on spatiospectrotemporal analysis of multi-channel EEG. Med. Biol. Eng. Comput. 60, 3187–3202 (2022).

Soni, S., Seal, A., Yazidi, A. & Krejcar, O. Graphical representation learning-based approach for automatic classification of electroencephalogram signals in depression. Comput. Biol. Med. 145, 105420 (2022).

Seal, A. et al. DeprNet: a deep convolution neural network framework for detecting depression using EEG. IEEE Trans. Instrum. Meas. 70, 1–13 (2021).

Liu, B., Chang, H., Peng, K. & Wang, X. An end-to-end depression recognition method based on EEGNet. Front. Psychiatry 13, 864393 (2022).

Li, X. et al. Depression recognition using machine learning methods with different feature generation strategies. Artif. Intell. Med. 99, 101696 (2019).

Li, X., Hu, B., Sun, S. & Cai, H. EEG-based mild depressive detection using feature selection methods and classifiers. Comput. Methods Prog. Biomed. 136, 151–161 (2016).

Song, X., Yan, D., Zhao, L. & Yang, L. LSDD-EEGNet: an efficient end-to-end framework for EEG-based depression detection. Biomed. Signal Process. Control 75, 103612 (2022).

Shen, J., Zhang, X., Wang, G., Ding, Z. & Hu, B. An improved empirical mode decomposition of electroencephalogram signals for depression detection. IEEE Trans. Affect. Comput. 13, 262–271 (2019).

Soni, S., Seal, A., Mohanty, S. K. & Sakurai, K. Electroencephalography signals-based sparse networks integration using a fuzzy ensemble technique for depression detection. Biomed. Signal Process. Control 85, 104873 (2023).

Jiang, W. et al. EEG-based subject-independent depression detection using dynamic convolution and feature adaptation. In Proceedings International Conference on Swarm Intelligence 272–283 (2023).

Tasci, G. et al. Automated accurate detection of depression using twin Pascal’s triangles lattice pattern with EEG signals. Knowl. Based Syst. 260, 110190 (2023).

Sadiq, M. T., Akbari, H., Siuly, S., Yousaf, A. & Rehman, A. U. A novel computer-aided diagnosis framework for EEG-based identification of neural diseases. Comput. Biol. Med. 138, 104922 (2021).

Shi, Q. et al. Depression detection using resting state three-channel EEG signal. Preprint at arXiv https://doi.org/10.48550/arXiv.2002.09175 (2020).

Chen, T., Guo, Y., Hao, S. & Hong, R. Exploring self-attention graph pooling with EEG-based topological structure and soft label for depression detection. IEEE Trans. Affect. Comput. 13, 2106–2118 (2022).

Wang, H.-G., Meng, Q.-H., Jin, L.-C., Wang, J.-B. & Hou, H.-R. AMG: a depression detection model with autoencoder and multi-head graph convolutional network. In Proceedings 2023 42nd Chinese Control Conference (CCC) 8551–8556 (2023).

Shivcharan, M., Boby, K. & Sridevi, V. EEG based machine learning models for automated depression detection. In Proceedings 2023 IEEE International Conference on Electronics, Computing and Communication Technologies (CONECCT) 1–6 (2023).

Bai, R., Guo, Y., Tan, X., Feng, L. & Xie, H. An EEG-based depression detection method using machine learning model. Int. J. Pharma Med. Biol. Sci. 10, 17–22 (2021).

Fan, Y., Yu, R., Li, J., Zhu, J. & Li, X. EEG-based mild depression recognition using multi-kernel convolutional and spatial-temporal feature. In Proceedings 2020 IEEE International Conference on Bioinformatics and Biomedicine (BIBM) 1777–1784 (2020).

Tian, F. et al. The three-lead EEG sensor: introducing an EEG-assisted depression diagnosis system based on ant lion optimization. IEEE Trans. Biomed. Circuits Syst. 17, 1305–1318 (2023).

Zhu, G. et al. Detecting depression using single-channel EEG and graph methods. Mathematics 10, 4177 (2022).

Zhang, B. et al. Feature-level fusion based on spatial-temporal of pervasive EEG for depression recognition. Comput. Methods Prog. Biomed. 226, 107113 (2022).

Li, D., Tang, J., Deng, Y. & Yang, L. Classification of resting state EEG data in patients with depression. In Proceedings 2020 IEEE International Conference on E-health Networking, Application & Services (HEALTHCOM) 1–2 (2020).

Mahato, S., Goyal, N., Ram, D. & Paul, S. Detection of depression and scaling of severity using six channel EEG data. J. Med. Syst. 44, 1–12 (2020).

Lin, H. et al. MDD-TSVM: a novel semisupervised-based method for major depressive disorder detection using electroencephalogram signals. Comput. Biol. Med. 140, 105039 (2022).

Rafiei, A., Zahedifar, R., Sitaula, C. & Marzbanrad, F. Automated detection of major depressive disorder with EEG signals: a time series classification using deep learning. IEEE Access 10, 73804–73817 (2022).

Duan, L. et al. Machine learning approaches for MDD detection and emotion decoding using EEG signals. Front. Hum. Neurosci. 14, 284 (2020).

Acharya, U. R. et al. Automated EEG-based screening of depression using deep convolutional neural network. Comput. Methods Prog. Biomed. 161, 103–113 (2018).

Cohn, J. F. et al. Detecting depression from facial actions and vocal prosody. In Proceedings 2009 3rd International Conference on Affective Computing and Intelligent Interaction and Workshops 1–7 (2009).

Yang, M. et al. Undisturbed mental state assessment in the 5G era: a case study of depression detection based on facial expressions. IEEE Wirel. Commun. 28, 46–53 (2021).

Shangguan, Z. et al. Dual-stream multiple instance learning for depression detection with facial expression videos. IEEE Trans. Neural Syst. Rehabil. Eng. 31, 554–563 (2022).

Wang, Q., Yang, H. & Yu, Y. Facial expression video analysis for depression detection in Chinese patients. J. Vis. Commun. Image Represent. 57, 228–233 (2018).

Hu, B., Tao, Y. & Yang, M. Detecting depression based on facial cues elicited by emotional stimuli in video. Comput. Biol. Med. 165, 107457 (2023).

Lin, L., Chen, X., Shen, Y. & Zhang, L. Towards automatic depression detection: a BiLSTM/1D CNN-based model. Appl. Sci. 10, 8701 (2020).

Kiss, G. & Vicsi, K. Comparison of read and spontaneous speech in case of automatic detection of depression. In Proceedings 2017 8th IEEE International Conference on Cognitive Infocommunications (CogInfoCom) 000213–000218 (2017).

Mobram, S. & Vali, M. Depression detection based on linear and nonlinear speech features in I-vector/SVDA framework. Comput. Biol. Med. 149, 105926 (2022).

Vázquez-Romero, A. & Gallardo-Antolín, A. Automatic detection of depression in speech using ensemble convolutional neural networks. Entropy 22, 688 (2020).

Huang, Z., Epps, J. & Joachim, D. Speech landmark bigrams for depression detection from naturalistic smartphone speech. In Proceedings ICASSP 2019–2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 5856–5860 (2019).

Huang, Z., Epps, J., Joachim, D. & Sethu, V. Natural language processing methods for acoustic and landmark event-based features in speech-based depression detection. IEEE J. Sel. Top. Signal Process. 14, 435–448 (2019).

Huang, Z., Epps, J. & Joachim, D. Investigation of speech landmark patterns for depression detection. IEEE Trans. Affect. Comput. 13, 666–679 (2019).

Yang, W. et al. Attention guided learnable time-domain filterbanks for speech depression detection. Neural Netw. 165, 135–149 (2023).

Li, X., Cao, T., Sun, S., Hu, B. & Ratcliffe, M. Classification study on eye movement data: towards a new approach in depression detection. In Proceedings 2016 IEEE Congress on Evolutionary Computation (CEC) 1227–1232 (2016).

Shen, R., Zhan, Q., Wang, Y. & Ma, H. Depression detection by analysing eye movements on emotional images. In Proceedings ICASSP 2021–2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 7973–7977 (2021).

Pan, Z., Ma, H., Zhang, L. & Wang, Y. Depression detection based on reaction time and eye movement. In Proceedings 2019 IEEE International Conference on Image Processing (ICIP) 2184–2188 (2019).

Le, C., Ma, H. & Wang, Y. A method for extracting eye movement and response characteristics to distinguish depressed people. In Proceedings Image and Graphics: 9th International Conference, ICIG 2017, Shanghai, China, September 13–15, 2017, Revised Selected Papers, Part I 9, 489–500 (2017).

Zhang, D. et al. Effective differentiation between depressed patients and controls using discriminative eye movement features. J. Affect. Disord. 307, 237–243 (2022).

Diao, Y. et al. A combination of P300 and eye movement data improves the accuracy of auxiliary diagnoses of depression. J. Affect. Disord. 297, 386–395 (2022).

Shao, W. et al. A multi-modal gait analysis-based detection system of the risk of depression. IEEE J. Biomed. Health Inform. 26, 4859–4868 (2021).

Lu, H., Shao, W., Ngai, E., Hu, X. & Hu, B. A new skeletal representation based on gait for depression detection. In Proceedings 2020 IEEE International Conference on E-health Networking, Application & Services (HEALTHCOM) 1–6 (2020).

Wang, T. et al. A gait assessment framework for depression detection using kinect sensors. IEEE Sens. J. 21, 3260–3270 (2020).

Li, W., Wang, Q., Liu, X. & Yu, Y. Simple action for depression detection: using kinect-recorded human kinematic skeletal data. BMC Psychiatry 21, 205 (2021).

Yu, Y. et al. Depression and severity detection based on body kinematic features: using kinect recorded skeleton data of simple action. Front. Neurol. 13, 905917 (2022).

Wang, Y., Wang, J., Liu, X. & Zhu, T. Detecting depression through gait data: examining the contribution of gait features in recognizing depression. Front. Psychiatry 12, 661213 (2021).

Zhang, X. et al. Multimodal depression detection: fusion of electroencephalography and paralinguistic behaviors using a novel strategy for classifier ensemble. IEEE J. Biomed. Health Inform. 23, 2265–2275 (2019).

Chen, T., Hong, R., Guo, Y., Hao, S. & Hu, B. MS²-GNN: exploring GNN-based multimodal fusion network for depression detection.IEEE Trans。Cybern. 53, 7749–7759 (2022).

Qayyum, A., Razzak, I., Tanveer, M., Mazher, M. & Alhaqbani, B. High-density electroencephalography and speech signal based deep framework for clinical depression diagnosis.IEEE/ACM Trans.计算。生物。Bioinform. 20, 2587–2597 (2023).

PubMed一个 Google Scholar一个

Ahmed, S., Yousuf, M. A., Monowar, M. M., Hamid, M. A. & Alassafi, M. Taking all the factors we need: a multimodal depression classification with uncertainty approximation.IEEE访问 11, 99847–99861 (2023).

Zhu, J. et al.Content-based multiple evidence fusion on EEG and eye movements for mild depression recognition.计算。Methods Prog.生物。 226, 107100 (2022).

Othmani, A., Zeghina, A.-O.& Muzammel, M. A model of normality inspired deep learning framework for depression relapse prediction using audiovisual data.计算。Methods Prog.生物。 226, 107132 (2022).

Yang, L., Jiang, D. & Sahli, H. Integrating deep and shallow models for multi-modal depression analysis—hybrid architectures.IEEE Trans。影响。计算。 12, 239–253 (2018).

Yang, S., Cui, L., Wang, L., Wang, T. & You, J. Enhancing multimodal depression diagnosis through representation learning and knowledge transfer.Heliyon 10, e25959 (2024).

Muzammel, M., Salam, H. & Othmani, A. End-to-end multimodal clinical depression recognition using deep neural networks: a comparative analysis.计算。Methods Prog.生物。 211, 106433 (2021).

Joshi, J. et al.Multimodal assistive technologies for depression diagnosis and monitoring.J. Multimodal Use.接口 7, 217–228 (2013).

Chen, J. et al.IIFDD: Intra and inter-modal fusion for depression detection with multi-modal information from Internet of Medical Things.inf。Fusion 102, 102017 (2024).

Chen, X. et al.MGSN: Depression EEG lightweight detection based on multiscale DGCN and SNN for multichannel topology.生物。信号过程。控制 92, 106051 (2024).

Ying, M. et al.EDT: An EEG-based attention model for feature learning and depression recognition.生物。信号过程。控制 93, 106182 (2024).

Sun, C., Jiang, M., Gao, L., Xin, Y. & Dong, Y. A novel study for depression detecting using audio signals based on graph neural network.生物。信号过程。控制 88, 105675 (2024).

Shao, X., Ying, M., Zhu, J., Li, X. & Hu, B. Achieving EEG-based depression recognition using Decentralized-Centralized structure.生物。信号过程。控制 95, 106402 (2024).

Xu, X., Wang, Y., Wei, X., Wang, F. & Zhang, X. Attention-based acoustic feature fusion network for depression detection.神经计算 601, 128209 (2024).

Zou, B. et al.Semi-structural interview-based Chinese multimodal depression corpus towards automatic preliminary screening of depressive disorders.IEEE Trans。影响。计算。 14, 2823–2838 (2022).

Chen, D. et al.Comparative efficacy of multimodal AI methods in screening for major depressive disorder: machine learning model development predictive pilot study.JMIR Form.res。 9, e56057 (2025).

Zhou, L., Hu, B. & Guan, Z.-H. MDRA: a multimodal depression risk assessment model using audio and text. IEEE Signal Process. Lett. 32, 2045–2049 (2025).

Xie, W. et al. Interpreting depression from question-wise long-term video recording of SDS evaluation. IEEE J. Biomed. Health Inform. 26, 865–875 (2021).

Al Hanai, T., Ghassemi, M. M. & Glass, J. R. Detecting depression with audio/text sequence modeling of interviews. In Proceedings Interspeech 1716–1720 (ISCA, 2018).

Toto, E., Tlachac, M., Stevens, F. L. & Rundensteiner, E. A. Audio-based depression screening using sliding window sub-clip pooling. In Proceedings 2020 19th IEEE International Conference on Machine Learning and Applications (ICMLA) 791–796 (2020).

Sardari, S., Nakisa, B., Rastgoo, M. N. & Eklund, P. Audio based depression detection using convolutional autoencoder. Expert Syst. Appl. 189, 116076 (2022).

Ding, H. et al. IntervoxNet: a novel dual-modal audio-text fusion network for automatic and efficient depression detection from interviews. Front. Phys. 12, 1430035 (2024).

Shen, Y., Yang, H. & Lin, L. Automatic depression detection: an emotional audio-textual corpus and a GRU/BiLSTM-based model. In Proceedings ICASSP 2022–2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 6247–6251 (2022).

Siuly, S. et al. Electroencephalogram (EEG) and its background. In EEG Signal Analysis and Classification: Techniques and Applications 3–21 (Springer International Publishing, 2016).

Fox, N. A. If it’s not left, it’s right: electroencephalograph asymmetry and the development of emotion. Am. Psychol. 46, 863 (1991).

Thibodeau, R., Jorgensen, R. S. & Kim, S. Depression, anxiety, and resting frontal EEG asymmetry: a meta-analytic review. J. Abnorm. Psychol. 115, 715 (2006).

Chen, T. & Guestrin, C. XGBoost: a scalable tree boosting system. In Proceedings 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining 785–794 (ACM, 2016).

Ke, G. et al. LightGBM: a highly efficient gradient boosting decision tree. Adv. Neural Inf. Process. Syst. 30 (2017).

Prokhorenkova, L., Gusev, G., Vorobev, A., Dorogush, A. V. & Gulin, A. CatBoost: unbiased boosting with categorical features. Adv. Neural Inf. Process. Syst. 31 (2018).

Gönen, M. & Alpaydın, E. Multiple kernel learning algorithms. J. Mach. Learn. Res. 12, 2211–2268 (2011).

Bucak, S. S., Jin, R. & Jain, A. K. Multiple kernel learning for visual object recognition: a review. IEEE Trans. Pattern Anal. Mach. Intell. 36, 1354–1369 (2013).

Lahat, D., Adali, T. & Jutten, C. Multimodal data fusion: an overview of methods, challenges, and prospects. Proc. IEEE 103, 1449–1477 (2015).

Litjens, G. et al. A survey on deep learning in medical image analysis. Med. Image Anal. 42, 60–88 (2017).

Vaswani, A. et al. Attention is all you need. Adv. Neural Inf. Process. Syst. 30 (2017).

Lin, C.-C., Lin, K., Wang, L., Liu, Z. & Li, L. Cross-modal representation learning for zero-shot action recognition. In Proceedings IEEE/CVF Conference on Computer Vision and Pattern Recognition 19978–19988 (IEEE, 2022).

Lundberg, S. M. & Lee, S.-I. A unified approach to interpreting model predictions. Adv. Neural Inf. Process. Syst. 30 (2017).

Fu, T.-c. A review on time series data mining. Eng. Appl. Artif. Intell. 24, 164–181 (2011).

Cerutti, S. In the spotlight: biomedical signal processing. IEEE Rev. Biomed. Eng. 1, 8–11 (2008).

Cavanagh, J. F., Napolitano, A., Wu, C. & Mueen, A. The patient repository for EEG data + computational tools (PRED+CT). Front. Neuroinform. 11, 67 (2017).

Schuller, B. et al. AVEC 2011–the first international audio/visual emotion challenge. In Proceedings Affective Computing and Intelligent Interaction: Fourth International Conference, ACII 2011, Memphis, TN, USA, October 9–12, 2011, Part II 415–424 (2011).

Schuller, B., Valstar, M., Eyben, F., Cowie, R. & Pantic, M. AVEC 2012: the continuous audio/visual emotion challenge. In Proceedings 14th ACM International Conference on Multimodal Interaction 449–456 (ACM, 2012).

Valstar, M. et al. AVEC 2013: the continuous audio/visual emotion and depression recognition challenge. In Proceedings 3rd ACM International Workshop on Audio/Visual Emotion Challenge 3–10 (ACM, 2013).

Valstar, M. et al. AVEC 2014: the 4th International audio/visual emotion challenge and workshop. In Proceedings 4th International Workshop on Audio/Visual Emotion Challenge 3–10 (ACM, 2014).

Valstar, M. et al. AVEC 2016: depression, mood, and emotion recognition workshop and challenge. In Proceedings 6th International Workshop on Audio/Visual Emotion Challenge 3–10 (ACM, 2016).

Ringeval, F. et al. AVEC 2017: real-life depression, and affect recognition workshop and challenge. In Proceedings 7th Annual Workshop on Audio/Visual Emotion Challenge 3–9 (ACM, 2017).

Ringeval, F. et al. AVEC 2018 workshop and challenge: Bipolar disorder and cross-cultural affect recognition. In Proceedings 2018 on Audio/Visual Emotion Challenge and Workshop 3–13 (ACM, 2018).

Ringeval, F. et al. AVEC 2019 workshop and challenge: state-of-mind, detecting depression with AI, and cross-cultural affect recognition. In Proceedings 9th International on Audio/visual Emotion Challenge and Workshop 3–12 (ACM, 2019).

Cai, H. et al. A multi-modal open dataset for mental-disorder analysis. Sci. Data 9, 178 (2022).

Stahlschmidt, S. R., Ulfenborg, B. & Synnergren, J. Multimodal deep learning for biomedical data fusion: a review. Brief. Bioinform. 23, bbab569 (2022).

Baltrušaitis, T., Ahuja, C. & Morency, L.-P. Multimodal machine learning: a survey and taxonomy. IEEE Trans. Pattern Anal. Mach. Intell. 41, 423–443 (2018).

Custers, B. Click here to consent forever: expiry dates for informed consent. Big Data Soc. 3, 2053951715624935 (2016).

Flores, R., Tlachac, M., Toto, E. & Rundensteiner, E. Audiface: multimodal deep learning for depression screening. In Proceedings Machine Learning for Healthcare Conference 609–630 (2022).

Page, M. J. et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ 372, n71 (2021).

Whiting, P. F. et al. QUADAS-2: a revised tool for the quality assessment of diagnostic accuracy studies. Ann. Intern. Med. 155, 529–536 (2011).

Jackson, D. & Turner, R. Power analysis for random-effects meta-analysis. Res. Synth. Methods 8, 290–302 (2017).

Acknowledgements

This article was funded by Science and Technology Innovation 2030—Major Projects (2022ZD0211600), National Natural Science Foundation of China (No. 62376150, 62206165, 82020108013), and Shanghai Industrial Collaborative Innovation Project (No. XTCX-KJ-2023-37).

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access: This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

Cite this article

Wang, L., Wang, C., Li, C. et al. AI-assisted multi-modal information for the screening of depression: a systematic review and meta-analysis. npj Digit. Med. 8, 523 (2025). https://doi.org/10.1038/s41746-025-01933-3

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41746-025-01933-3