- Research

- Open access

BMC Medical Education volume 25, Article number: 1187 (2025)

Abstract

Artificial Intelligence (AI) is reshaping both healthcare delivery and the structure of medical education. This narrative review synthesizes insights from 14 studies exploring how AI is being integrated into undergraduate, postgraduate, and continuing medical education programs. The evidence highlights a wide range of applications, including diagnostic assistance, curriculum redesign, enhanced assessment methods, and streamlined administrative tasks. Nevertheless, several challenges persist—such as ethical dilemmas, the lack of validated curricula, limited empirical research, and infrastructural constraints—that hinder broader implementation. The protocol was registered with PROSPERO (ID: 1109025), and the review followed PRISMA 2020 guidelines. The findings emphasize the need for well-structured AI curricula, targeted faculty development, interdisciplinary collaboration, and ethically sound practices. To promote sustainable and equitable adoption, the review advocates for a phased, learner-centered approach tailored to the evolving demands of medical education.

Introduction

Artificial Intelligence (AI) is rapidly revolutionizing both healthcare and medical education through its broad range of capabilities [1]. Within medical training, AI is improving knowledge acquisition, clinical reasoning, diagnostic precision, and administrative workflows. Techniques like machine learning, natural language processing (NLP), neural networks, and large language models (LLMs) are being utilized to create virtual patient simulations, automate assessments, provide personalized learning feedback, and predict learner outcomes [2]. These innovations are transforming the way medical students, residents, and clinicians engage with information while developing their clinical skills.

The healthcare sector has progressively embraced AI for numerous clinical applications, from imaging analysis to predictive analytics in patient care. This momentum is now extending to academic settings as well [3]. With tools such as ChatGPT and decision-support systems increasingly integrated into clinical practice, the demand for AI literacy among future healthcare professionals is growing more urgent. Globally, medical schools and training programs are starting to design curricula that not only teach technical competencies but also address ethical, regulatory, and humanistic considerations surrounding AI in medicine [4].

Despite rising interest and expanding research, AI education within medical training remains inconsistent and unevenly implemented. Many institutions still lack comprehensive curricula, validated teaching methods, or meaningful interdisciplinary collaboration [5]. There is also notable variability in how programs define AI literacy, evaluate educational outcomes, and prepare faculty to deliver this content. These disparities raise important questions: What are the most effective approaches for teaching AI in medicine? How can AI training be made accessible and equitable? And how do we ensure ethical awareness keeps pace with technological advancement [6]?

To address these challenges and fully harness AI’s potential, educational strategies must be grounded in structured frameworks. This review draws on Kern’s Six-Step Approach to Curriculum Development, which comprises problem identification, targeted needs assessment, goals and objectives, educational strategies, implementation, and evaluation and feedback. This model offers a clear, systematic pathway for integrating AI thoughtfully into medical education [7].

This narrative review aims to tackle these issues by examining the existing literature on AI in medical education. It seeks to chart the landscape of AI applications, evaluate educational models, gather perspectives from learners and faculty, identify practical barriers to implementation, and propose strategies for future integration.

Method

This review adopted a hybrid approach, combining elements of narrative and systematic reviews. A structured literature search was conducted across PubMed, Scopus, Web of Science, and Google Scholar, covering publications from January 2000 through March 2024 and following PRISMA 2020 guidelines to ensure methodological transparency. The search strategy employed various keyword combinations, including “artificial intelligence,” “machine learning,” “AI,” “medical education,” “curriculum,” “teaching,” and “assessment.” Although risk of bias tools were applied and study selection was systematically documented, the findings were synthesized narratively because of the heterogeneity in study designs, outcome measures, and AI applications. This approach allowed for both rigorous appraisal and flexible thematic integration of diverse evidence.

Protocol registration

This review protocol was registered with the International Prospective Register of Systematic Reviews (PROSPERO) under registration ID 1109025. The review was conducted following the PRISMA 2020 guidelines. A completed PRISMA 2020 checklist is available in Supplementary File 1 to ensure transparency and adherence to systematic review standards.

Eligibility criteria

This review included studies that explored the integration of artificial intelligence (AI) into medical education at the undergraduate, postgraduate, or continuing professional development levels. Eligible studies comprised empirical research using quantitative, qualitative, or mixed-method designs, as well as educational interventions, cross-sectional surveys, narrative reviews, and systematic reviews. Studies were required to describe AI applications within educational contexts, including curriculum development, assessment methods, stakeholder perceptions, ethical considerations, or barriers to implementation.

Articles were excluded if they were not published in English, lacked full-text access, focused exclusively on clinical AI applications without a direct link to an educational setting, or were limited to abstracts without supporting data.

Search strategy and selection process

A comprehensive literature search was conducted across four electronic databases (PubMed, Scopus, Web of Science, and Google Scholar), covering studies published between January 2000 and March 2024. Both Medical Subject Headings (MeSH) and free-text keywords were used in various combinations, including “artificial intelligence,” “AI,” “machine learning,” “medical education,” “curriculum,” “teaching,” “training,” and “assessment.” Boolean operators (AND, OR) were applied to structure the queries, as in the example used in PubMed: (“artificial intelligence” OR “machine learning” OR “AI”) AND (“medical education” OR “curriculum” OR “teaching” OR “training” OR “assessment”). The search was limited to English-language publications with full-text availability. Grey literature and reference lists of eligible studies were also screened to identify additional relevant articles. The final database search was completed on March 28, 2024. Duplicate records were first removed using EndNote reference management software. A secondary de-duplication step was performed manually in Rayyan to ensure accuracy. Together, these steps eliminated all redundant entries before screening began.
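The Boolean structure of the PubMed example can be sketched in a few lines of Python. This is a minimal illustration rather than the tooling used for the review: the helper names `or_group` and `build_query` and the two term lists are assumptions introduced here, and the lists contain only the keywords quoted in the text.

```python
def or_group(terms):
    """Quote each term, join the terms with OR, and wrap the group in parentheses."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_query(*groups):
    """Combine several OR-groups with AND, mirroring the PubMed example."""
    return " AND ".join(or_group(group) for group in groups)


# Keywords copied from the PubMed example in the text (hypothetical variable names).
AI_TERMS = ["artificial intelligence", "machine learning", "AI"]
EDUCATION_TERMS = ["medical education", "curriculum", "teaching", "training", "assessment"]

query = build_query(AI_TERMS, EDUCATION_TERMS)
print(query)
```

Keeping each concept in its own list makes it easy to see that synonyms are joined with OR within a concept, while the two concepts are joined with AND.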

Data collection process

Screening and data extraction were conducted independently and in duplicate by two trained reviewers. In the first stage, titles and abstracts of all non-duplicate records were independently screened using predefined inclusion and exclusion criteria. Any disagreements were resolved through discussion and consensus. In the second stage, full texts of all potentially eligible studies were retrieved and reviewed independently by both reviewers to determine final inclusion. Data extraction was conducted using a standardized and pilot-tested extraction form developed for this review. The form captured key study characteristics: author(s) and publication year; country or region; study type and methodology; level of education (undergraduate, postgraduate, or continuing medical education); AI application area; intervention or framework (if applicable); reported outcomes and implementation barriers; and ethical or regulatory considerations. Each reviewer independently extracted the data, and discrepancies were resolved through discussion to ensure accuracy and consistency. No automation tools were used in the screening or data extraction process, and no contact was made with study authors for additional information.
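The extraction form described above can be pictured as a simple record type. The sketch below is illustrative only: the class name `ExtractionRecord`, its field names, and the helper `find_discrepancies` are hypothetical, and the review states that no automation tools were used, so this shows the shape of the data and of the duplicate-extraction comparison, not the reviewers' actual workflow. The sample values are placeholders, not data from any included study.

```python
from dataclasses import dataclass, field, fields
from typing import List, Optional


@dataclass
class ExtractionRecord:
    """One completed extraction form (field names are illustrative)."""
    authors: str
    publication_year: int
    country_or_region: str
    study_type: str
    education_level: str  # undergraduate, postgraduate, or continuing medical education
    ai_application_area: str
    intervention_or_framework: Optional[str] = None  # recorded only if applicable
    outcomes: List[str] = field(default_factory=list)
    barriers: List[str] = field(default_factory=list)
    ethical_considerations: List[str] = field(default_factory=list)


def find_discrepancies(a: ExtractionRecord, b: ExtractionRecord) -> List[str]:
    """Return the names of the fields on which two reviewers' records differ."""
    return [f.name for f in fields(a) if getattr(a, f.name) != getattr(b, f.name)]


# Placeholder values only; they do not describe a real study.
reviewer_1 = ExtractionRecord("Placeholder Author", 2024, "Placeholder Country",
                              "cross-sectional survey", "undergraduate", "assessment")
reviewer_2 = ExtractionRecord("Placeholder Author", 2024, "Placeholder Country",
                              "narrative review", "undergraduate", "assessment")

print(find_discrepancies(reviewer_1, reviewer_2))
```

Any field name returned by the comparison marks an item the two reviewers would need to resolve through discussion.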

Outcome and other variables

The primary outcomes of interest were the applications of artificial intelligence (AI) in medical education and their reported impact on teaching, learning, assessment, curriculum design, and educational outcomes. Data were extracted across all relevant outcome domains reported in each study, regardless of measurement type or time point. The synthesis included both qualitative and quantitative results, where available.

In addition to outcome data, the following variables were extracted for each study: author(s), publication year, country or region, study type (e.g., review, cross-sectional survey, educational intervention), education level (undergraduate, postgraduate, or continuing professional development), AI application area, implementation frameworks or models used, barriers to implementation, and ethical or regulatory considerations. Where data were unclear or incomplete, assumptions were minimized, and information was interpreted conservatively based on the context provided within the articles.

Risk of bias assessment

Given the diversity of study designs included in this narrative review, risk of bias was assessed using tools specific to each methodology. For cross-sectional studies, the AXIS tool was employed to evaluate study design quality, including sampling strategies, measurement reliability, and risk of non-response bias. For qualitative studies, the CASP (Critical Appraisal Skills Programme) Qualitative Checklist was used to assess credibility, relevance, and methodological rigor.

Narrative and scoping reviews were evaluated using the SANRA (Scale for the Assessment of Narrative Review Articles), which focuses on the review’s rationale, comprehensiveness of the literature search, and critical synthesis. For quasi-experimental educational interventions, the Joanna Briggs Institute (JBI) Critical Appraisal Checklist for Quasi-Experimental Studies was applied, assessing comparability, intervention clarity, and outcome validity.

No randomized controlled trials (RCTs) were included in the review; hence, tools such as ROB 2.0 were not applicable. Each study was independently assessed by two volunteer reviewers. Discrepancies were resolved through discussion and mutual agreement to ensure consistency and minimize subjective bias.
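The design-specific tool selection described in this section amounts to a lookup from study design to appraisal instrument. The following sketch encodes only the pairings stated above; the dictionary name, the design labels used as keys, and the function `select_tool` are assumptions made for illustration.

```python
# Pairings as stated in this section; the keys are illustrative design labels.
APPRAISAL_TOOL_BY_DESIGN = {
    "cross-sectional": "AXIS",
    "qualitative": "CASP Qualitative Checklist",
    "narrative review": "SANRA",
    "scoping review": "SANRA",
    "quasi-experimental": "JBI Critical Appraisal Checklist for Quasi-Experimental Studies",
}


def select_tool(design: str) -> str:
    """Return the appraisal tool for a study design, or raise if none is defined."""
    key = design.strip().lower()
    if key not in APPRAISAL_TOOL_BY_DESIGN:
        raise ValueError(f"No appraisal tool defined for design: {design!r}")
    return APPRAISAL_TOOL_BY_DESIGN[key]


print(select_tool("Cross-sectional"))
```

Raising an error for unmapped designs, rather than returning a default, reflects the point that a tool such as ROB 2.0 is simply not applicable when no randomized controlled trials are included.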

Effect size

This review adopted a narrative synthesis approach due to the heterogeneity in study designs, educational contexts, outcome measures, and reporting formats. As such, no formal effect measures (e.g., odds ratios, risk ratios, or mean differences) were applied across the included studies. Where studies reported summary statistics or descriptive outcomes, these were presented narratively to highlight trends and patterns rather than to quantify effect size or directionality.

Given the methodological heterogeneity of the included studies—ranging from narrative reviews and qualitative case studies to cross-sectional surveys and quasi-experimental educational interventions—a narrative synthesis was employed. Studies were grouped into five thematic domains: (1) applications of AI in medical education, (2) curricular innovations and interventions, (3) stakeholder perceptions, (4) ethical and regulatory issues, and (5) barriers and facilitators to implementation. No quantitative data transformation or imputation was required, and no meta-analysis was conducted due to the lack of consistent effect measures and outcome reporting across studies. Results were tabulated and presented descriptively in Table 1. No formal statistical tests for heterogeneity, subgroup analysis, or sensitivity analysis were conducted. Instead, patterns and differences across themes were summarized narratively to synthesize the breadth and scope of AI integration in medical education.

Data extraction followed a structured framework, with findings organized thematically into five main categories: AI applications in education, curricular innovations, stakeholder perspectives, ethical and regulatory issues, and barriers and facilitators to implementation.

Results

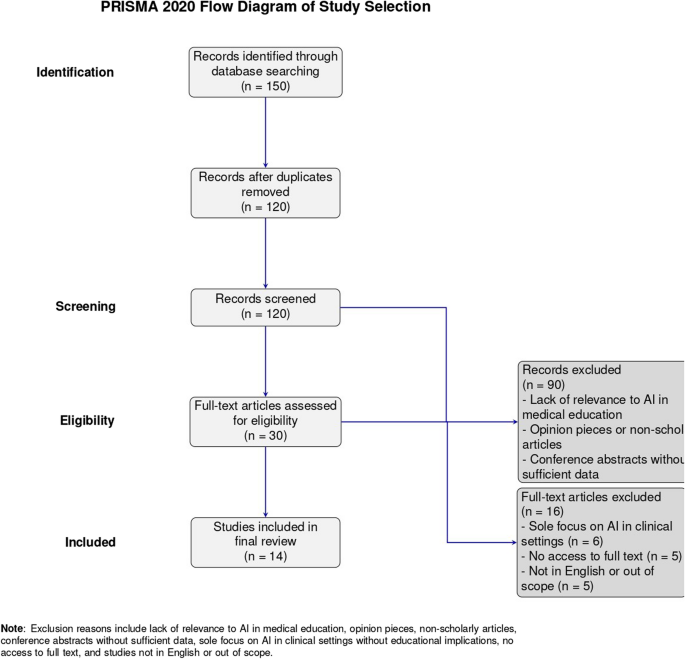

The initial search yielded a total of 150 studies across the four databases (PubMed, Scopus, Web of Science, and Google Scholar). After removing duplicates, 120 unique records were screened based on titles and abstracts. Of these, 30 full-text articles were assessed for eligibility. Following full-text review, 14 studies were included in the final synthesis. The remaining studies were excluded for reasons including lack of educational context, absence of AI-related content, or unavailability of full text. The study selection process is summarized in the PRISMA flow diagram (Fig. 1).
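The number of records leaving the process at each stage follows directly from the counts reported above. The short calculation below is a sketch under one assumption not stated in the text: that each stage draws only on the records remaining from the previous stage, with none added in between. The variable names are illustrative.

```python
# Counts reported in the text.
identified = 150       # records from the initial search
after_dedup = 120      # unique records screened by title and abstract
full_text = 30         # full-text articles assessed for eligibility
included = 14          # studies in the final synthesis

# Derived counts (assumes no records were added between stages).
duplicates_removed = identified - after_dedup
excluded_at_screening = after_dedup - full_text
excluded_at_full_text = full_text - included

print(duplicates_removed, excluded_at_screening, excluded_at_full_text)  # 30 90 16
```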

Study characteristics

The narrative review includes 14 studies covering diverse geographic locations including the United States, India, Australia, Germany, Saudi Arabia, and multi-national collaborations. The selected studies employed a mix of methodologies: scoping reviews, narrative reviews, cross-sectional surveys, educational interventions, integrative reviews, and qualitative case studies. The target population across studies ranged from undergraduate medical students and postgraduate trainees to faculty members and in-service professionals.

Artificial Intelligence (AI) was applied across various educational contexts, including admissions, diagnostics, teaching and assessment, clinical decision-making, and curriculum development. Several studies focused on stakeholder perceptions, ethical implications, and the need for standardized curricular frameworks. Notably, interventions such as the Four-Week Modular AI Elective [13] and the Four-Dimensional AI Literacy Framework [12] were evaluated for their impact on learner outcomes.

Table 1 provides a comprehensive summary of each study, outlining country/region, study type, education level targeted, AI application domain, frameworks or interventions used, major outcomes, barriers to implementation, and ethical concerns addressed.

Risk of bias assessment

A comprehensive risk of bias assessment was conducted using appropriate tools tailored to each study design. For systematic and scoping reviews (e.g., Gordon et al. [2], Khalifa & Albadawy [8], Crotty et al. [11]), the AMSTAR 2 tool was applied, revealing a moderate risk of bias, primarily due to the lack of formal appraisal of included studies and incomplete reporting on funding sources. Observational studies such as that by Parsaiyan & Mansouri [9] were assessed using the Newcastle-Ottawa Scale (NOS) and showed a low risk of bias, with clear selection methods and outcome assessment. For cross-sectional survey designs (e.g., Narayanan et al. [10], Ma et al. [12], Wood et al. [14], Salih [20]), the AXIS tool was used. These showed low to moderate risk depending on sampling clarity, non-response bias, and data reporting. Qualitative and mixed-methods studies such as those by Krive et al. [13] and Weidener & Fischer [15] were appraised using a combination of the CASP checklist and NOS, showing overall low to moderate risk, particularly for their methodological rigor and triangulation. One study [19], which employed a quasi-experimental design, was evaluated using ROBINS-I and was found to have a moderate risk of bias, primarily due to concerns about confounding and deviations from intended interventions. Lastly, narrative reviews like Mondal & Mondal [17] were categorized as high risk due to their lack of systematic methodology and critical appraisal (Table 2).

Characteristics of included studies

A total of 14 studies were included in this systematic review, published between 2019 and 2024. These comprised a range of study designs: 5 systematic or scoping reviews, 4 cross-sectional survey studies, 2 mixed-methods or qualitative studies, 1 quasi-experimental study, 1 narrative review, and 1 conceptual framework development paper. The majority of the studies were conducted in high-income countries, particularly the United States, United Kingdom, and Canada, while others included contributions from Asia and Europe, highlighting a growing global interest in the integration of artificial intelligence (AI) in medical education.
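As a quick consistency check, the design counts listed above can be summed to confirm that they account for all 14 included studies. The dictionary name and keys are illustrative labels for the categories named in the text.

```python
# Study-design counts as reported in the paragraph above.
design_counts = {
    "systematic or scoping review": 5,
    "cross-sectional survey": 4,
    "mixed-methods or qualitative": 2,
    "quasi-experimental": 1,
    "narrative review": 1,
    "conceptual framework development": 1,
}

total = sum(design_counts.values())
print(total)  # 14
```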

The key themes addressed across these studies included: the use of AI for enhancing clinical reasoning and decision-making skills, curriculum integration of AI tools, attitudes and readiness of faculty and students, AI-based educational interventions and simulations, and ethical and regulatory considerations in AI-driven learning. Sample sizes in survey-based studies ranged from fewer than 100 to over 1,000 participants, representing diverse medical student populations and teaching faculty.

All included studies explored the potential of AI to transform undergraduate and postgraduate medical education through improved personalization, automation of feedback, and development of clinical competencies. However, variability in methodology, focus, and outcome reporting was observed, reinforcing the importance of structured synthesis and cautious interpretation.

A. Applications of AI in medical education

AI serves multiple educational functions. Gordon et al. identified its use in admissions, diagnostics, assessments, clinical simulations, and predictive analytics [2]. Khalifa and Albadawy reported improvements in diagnostic imaging accuracy and workflow efficiency [8]. Narrative reviews by Parsaiyan and Mansouri [9] and Narayanan et al. [10] highlighted AI’s impact on virtual simulations, personalized learning, and competency-based education.

B. Curricular innovations and interventions

Several studies introduced innovative curricular designs. Crotty et al. advocated for a modular curriculum incorporating machine learning, ethics, and governance [11], while Ma et al. proposed a Four-Dimensional Framework to cultivate AI literacy [12]. Krive et al. [13] reported significant learning gains through a four-week elective, emphasizing the value of early, practical exposure.

Studies evaluating AI-focused educational interventions primarily reported improvements in knowledge acquisition, diagnostic reasoning, and ethical awareness. For instance, Krive et al. [13] documented substantial gains in students’ ability to apply AI in clinical settings, with average quiz and assignment scores of 97% and 89%, respectively. Ma et al. highlighted enhanced conceptual understanding through their framework, though outcomes were primarily self-reported [12]. However, few studies included objective or longitudinal assessments of educational impact. None evaluated whether improvements were sustained over time or translated into clinical behavior or patient care. This reveals a critical gap and underscores the need for robust, multi-phase evaluation of AI education interventions.

C. Stakeholder perceptions

Both students and faculty showed interest and concern about AI integration. Wood et al. [14] and Weidener and Fischer [15] noted a scarcity of formal training opportunities, despite growing awareness of AI’s importance. Ethical dilemmas, fears of job displacement, and insufficient preparation emerged as key concerns.

D. Ethical and regulatory challenges

Critical ethical issues were raised by Mennella et al. [16] and Mondal and Mondal [17], focusing on data privacy, transparency, and patient autonomy. Multiple studies called for international regulatory standards and the embedding of AI ethics within core curricula.

While several reviewed studies acknowledged the importance of ethical training in AI, the discussion of ethics often remained surface-level. A more critical lens reveals deeper tensions that must be addressed in AI-integrated medical education. One such tension lies between technological innovation and equity: AI tools, if not designed and deployed with care, risk widening disparities by favoring data-rich, high-resource settings while neglecting underrepresented populations. Moreover, AI’s potential to entrench existing biases, whether through skewed training datasets or uncritical deployment of algorithms, poses a threat to fair and inclusive healthcare delivery.

Another pressing concern is algorithmic opacity. As future physicians are expected to work alongside AI systems in high-stakes clinical decisions, the inability to fully understand or challenge these systems’ inner workings raises accountability dilemmas and undermines trust. Educational interventions must therefore go beyond theoretical awareness and cultivate critical engagement with the socio-technical dimensions of AI, emphasizing ethical reasoning, bias recognition, and equity-oriented decision-making.

E. Barriers to implementation

Implementation hurdles included limited empirical evidence [18], infrastructural constraints [19], context-specific applicability challenges [20], and an over-reliance on conceptual frameworks [10]. The lack of unified teaching models and outcome-based assessments remains a significant obstacle.

These findings informed the creation of a conceptual framework for integrating artificial intelligence into medical education, depicted in Fig. 2. A cross-theme synthesis revealed that while AI integration strategies were broadly similar across countries, their implementation success varied significantly by geographic and economic context. High-income countries (e.g., USA, Australia, Germany) demonstrated more comprehensive curricular pilots, infrastructure support, and faculty readiness, whereas studies from LMICs (e.g., India, Saudi Arabia) emphasized conceptual interest but lacked institutional capacity and access to AI technologies. Contextual barriers such as resource limitations, cultural sensitivity, and institutional inertia appeared more pronounced in LMIC settings, influencing the feasibility and depth of AI adoption in medical education.

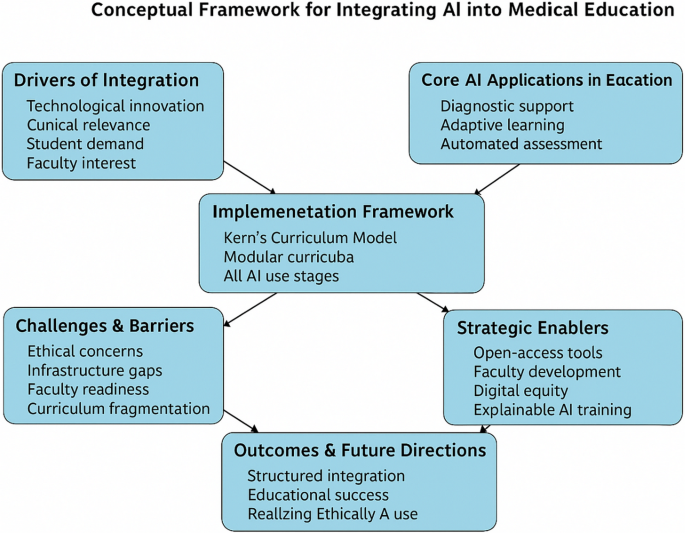

Based on the five synthesized themes, we developed a Comprehensive Framework for the Strategic Integration of AI in Medical Education (Fig. 2). This model incorporates components such as foundational AI literacy, ethical preparedness, faculty development, curriculum redesign, and contextual adaptability. It builds on and extends existing models such as the FACETS framework, the Technology Acceptance Model (TAM), and the Diffusion of Innovation theory. Unlike FACETS, which primarily categorizes existing studies, our framework is action-oriented and aligned with Kern’s curriculum development process, making it suitable for practical implementation. Compared to TAM and Diffusion of Innovation, which focus on user behavior and adoption dynamics, our model integrates educational design elements with implementation feasibility across diverse economic and institutional settings.

Table 3 shows a comparative synthesis of included studies evaluating AI integration in medical and health professions education using Kern’s six-step curriculum development framework. The analysis reveals that most studies effectively identify the need for AI literacy (Step 1) and conduct some form of needs assessment (Step 2), often through surveys, literature reviews, or scoping exercises. However, only a subset of studies explicitly define measurable educational goals and objectives (Step 3), and even fewer describe detailed instructional strategies (Step 4) or implement their proposed curricula (Step 5). Evaluation and feedback mechanisms (Step 6) were rarely reported, and when included, they were typically limited to short-term student feedback or pre-post knowledge assessments. Longitudinal evaluations and outcome-based assessments remain largely absent. The findings underscore a critical implementation gap and emphasize the need for structured, theory-informed, and empirically evaluated AI education models tailored to medical and allied health curricula.

This conceptual model is informed by thematic synthesis and integrates principles from existing frameworks (FACETS, TAM, Diffusion of Innovation) while aligning with Kern’s six-step approach for curriculum design.

Discussion

This review highlights the extensive use of AI in medical education, spanning from diagnostic support to adaptive learning platforms. However, most initiatives remain at pilot or conceptual stages, with limited outcome data available to guide best practices. Many AI tools employed in training still lack thorough validation and have yet to be integrated into standard curricula. The comparative synthesis further highlighted a geopolitical divide in AI education readiness. Institutions in high-income countries reported greater access to digital infrastructure, interdisciplinary collaboration, and implementation support, enabling more seamless AI integration. In contrast, institutions in low- and middle-income countries (LMICs) faced barriers such as limited AI literacy among faculty, budget constraints, and lack of localized AI tools. These disparities emphasize the need for adaptable AI education strategies, such as open-access resources and context-sensitive faculty development, to avoid exacerbating global educational inequities. To enhance the operational utility of our review, we mapped selected studies to Kern’s Six-Step Curriculum Development Model (Appendix A). This mapping highlights that while most studies addressed early stages such as problem identification and instructional design, very few incorporated structured implementation or outcome evaluation. The limited attention to Steps 5 and 6 underscores a critical gap in curriculum sustainability and impact assessment.

Curriculum development remains a significant hurdle. Although models such as FACETS [29], the four-dimensional AI literacy framework [12], and modular designs [13] have been proposed, few have undergone real-world testing or long-term evaluation. A gap persists between theoretical enthusiasm and practical application, often compounded by institutional resistance, funding shortages, and a scarcity of faculty skilled in AI. Applying Kern’s model could help bridge this divide by focusing on learner needs, guiding rollout, and systematically assessing educational outcomes [30].

Ethical concerns add another layer of complexity. As AI increasingly informs clinical decision-making, students require more than technical skills—they must also critically evaluate algorithmic outputs. The review emphasizes growing demands for education on bias reduction, algorithmic transparency, and data governance, yet these topics are seldom embedded within core curricula, often remaining fragmented or optional. Tools like explainable AI (XAI) [31] and frameworks such as TRIPOD-AI [32] offer ways to connect technical details with ethical understanding.

Engaging key stakeholders is crucial for successful implementation. Students demonstrate keen interest in AI training, especially in clinical reasoning and diagnostics. Faculty support exists but is frequently tempered by limited expertise and confidence. Interdisciplinary collaboration among educators, clinicians, data scientists, and ethicists is vital for creating effective curricula. Initiatives like AI hackathons, cross-disciplinary electives, and co-teaching programs provide practical avenues to foster this cooperation [33].

Infrastructure and equity concerns must also be addressed. Many AI tools demand significant technological resources, which may be beyond reach for institutions in low-resource settings. Without coordinated planning and international sharing of resources, AI education risks deepening existing disparities. Prioritizing open-access tools and scalable, resource-light strategies will be essential [34].

Another critical barrier to effective AI integration is the shortage of faculty with adequate expertise in AI principles and applications. To address this, structured faculty development models should be prioritized. These could include micro-credentialing programs offering modular, competency-based certification in AI literacy, as well as collaborative bootcamps co-developed with computer science departments to provide interdisciplinary, hands-on training. Investing in such faculty development strategies is essential to ensure sustainable and contextually relevant delivery of AI curricula.

A major strength of this systematic review is its comprehensive and methodologically rigorous approach. We adhered to the PRISMA 2020 guidelines and employed a transparent protocol that included searches across multiple databases (PubMed, Scopus, Web of Science, and Google Scholar), supplemented by manual screening of references and grey literature. We applied a structured thematic synthesis and used validated tools (ROBINS-I, NOS, CASP, AMSTAR 2, AXIS) to assess the risk of bias across diverse study designs, ensuring methodological integrity.

However, several limitations should be noted. First, the heterogeneity in study designs, outcomes, and reporting limited the feasibility of conducting a meta-analysis. Second, the included studies were predominantly from high-income countries, restricting the generalizability of findings to low- and middle-income countries (LMICs), where educational infrastructure, curricular demands, and access to AI tools may vary significantly. Third, many studies lacked rigorous evaluation frameworks and relied heavily on subjective, short-term outcomes such as self-reported knowledge or attitudes, which are prone to recall and social desirability bias. Notably, few studies assessed the long-term impact of AI interventions on learner performance, clinical competence, or patient outcomes. These gaps underscore the urgent need for more longitudinal, outcome-based research to evaluate the sustained effectiveness and real-world applicability of AI integration in medical education.

Conclusion

AI is reshaping medical education by enhancing teaching methods, increasing assessment accuracy, and enabling personalized learning experiences. This review underscores the importance of structured, ethically grounded, and evidence-based AI training programs. A phased integration strategy—starting with foundational literacy during preclinical education and advancing toward more complex applications in clinical training—offers a practical and sustainable pathway. As AI technologies evolve, educational systems must adapt accordingly, equipping future clinicians not only to use these tools proficiently but also to lead thoughtfully and ethically in AI-driven healthcare.

Data availability

All data analyzed during this study are included in the published article and its supplementary information files. The full list of included studies and extracted data is available from the corresponding author upon reasonable request.

Abbreviations

- AI: Artificial Intelligence

- AXIS: Appraisal tool for Cross-Sectional Studies

- CASP: Critical Appraisal Skills Programme

- JBI: Joanna Briggs Institute

- NOS: Newcastle-Ottawa Scale

- PRISMA: Preferred Reporting Items for Systematic Reviews and Meta-Analyses

- ROBINS-I: Risk Of Bias In Non-randomized Studies - of Interventions

- SANRA: Scale for the Assessment of Narrative Review Articles

References

Sriram A, Ramachandran K, Krishnamoorthy S. Artificial intelligence in medical education: transforming learning and practice. Cureus. 2025. https://doi.org/10.7759/cureus.80852.

Gordon M, Daniel M, Ajiboye A, Uraiby H, Xu NY, Bartlett R, et al. A scoping review of artificial intelligence in medical education: BEME guide 84. Med Teach. 2024;46(4):446–70.

Bajwa J, Munir U, Nori A, Williams B. Artificial intelligence in healthcare: transforming the practice of medicine. Future Healthc J. 2021;8(2):e188–94.

Maaß L, Grab-Kroll C, Koerner J, Öchsner W, Schön M, Messerer D, et al. Artificial intelligence and ChatGPT in medical education: a cross-sectional questionnaire on students’ competence. J CME. 2025;14(1):2437293.

Rincón EHH, Jimenez D, Aguilar LAC, Flórez JMP, Tapia ÁER, Peñuela CLJ. Mapping the use of artificial intelligence in medical education: a scoping review. BMC Med Educ. 2025;25(1):526.

Mir MM, Mir GM, Raina NT, Mir SM, Mir SM, Miskeen E, et al. Application of artificial intelligence in medical education: current scenario and future perspectives. J Adv Med Educ Professionalism. 2023;11(3):133.

Younas A, Subramanian KP, Al-Haziazi M, Hussainy SS, Al Kindi ANS. A review on implementation of artificial intelligence in education. Int J Res Innov Soc Sci. 2023;7(8):1092–100.

Khalifa M, Albadawy M. AI in diagnostic imaging: revolutionising accuracy and efficiency. Comput Methods Programs Biomed Update. 2024. https://doi.org/10.1016/j.cmpbup.2024.100146.

Parsaiyan SF, Mansouri S. Digital storytelling (DST) in nurturing EFL teachers’ professional competencies: a qualitative exploration. Technol Assist Lang Educ. 2024;2(4):141–67.

Narayanan S, Ramakrishnan R, Durairaj E, Das A. Artificial intelligence revolutionizing the field of medical education. Cureus. 2023. https://doi.org/10.7759/cureus.49604.

Crotty E, Singh A, Neligan N, Chamunyonga C, Edwards C. Artificial intelligence in medical imaging education: recommendations for undergraduate curriculum development. Radiography. 2024;30:67–73.

Ma Y, Song Y, Balch JA, Ren Y, Vellanki D, Hu Z, et al. Promoting AI competencies for medical students: a scoping review on frameworks, programs, and tools. arXiv preprint arXiv:2407.18939. 2024.

Krive J, Isola M, Chang L, Patel T, Anderson M, Sreedhar R. Grounded in reality: artificial intelligence in medical education. JAMIA Open. 2023;6(2):ooad037.

Wood EA, Ange BL, Miller DD. Are we ready to integrate artificial intelligence literacy into medical school curriculum: students and faculty survey. J Med Educ Curric Dev. 2021;8:23821205211024078.

Weidener L, Fischer M. Artificial intelligence in medicine: cross-sectional study among medical students on application, education, and ethical aspects. JMIR Med Educ. 2024;10:e51247.

Mennella C, Maniscalco U, De Pietro G, Esposito M. Ethical and regulatory challenges of AI technologies in healthcare: a narrative review. Heliyon. 2024;10(4):e26297.

Mondal HMS. Ethical and social issues related to AI in healthcare. Methods in Microbiology. 2024;55:247–81.

Chan KS, Zary N. Applications and challenges of implementing artificial intelligence in medical education: integrative review. JMIR Med Educ. 2019;5(1):e13930.

Sharma VSU, Pareek V, Sharma L, Kumar S. Artificial intelligence (AI) integration in medical education: A pan-India cross-sectional observation of acceptance and understanding among students. Scripta Medica. 2023;54(4):343–52.

Salih SM. Perceptions of faculty and students about use of artificial intelligence in medical education: a qualitative study. Cureus. 2024;16(4):e57605.

Mousavi Baigi SF, Sarbaz M, Ghaddaripouri K, Ghaddaripouri M, Mousavi AS, Kimiafar K. Attitudes, knowledge, and skills towards artificial intelligence among healthcare students: A systematic review. Health Sci Rep. 2023;6(3):e1138. https://doi.org/10.1002/hsr2.1138. PMID: 36923372; PMCID: PMC10009305.

Zheng L, Xiao Y. Refining AI perspectives: assessing the impact of ai curricular on medical students' attitudes towards artificial intelligence. BMC Med Educ. 2025;25(1):1115. https://doi.org/10.1186/s12909-025-07669-8. PMID: 40713579; PMCID: PMC12291331.

Rincón EHH, Jimenez D, Aguilar LAC, Flórez JMP, Tapia ÁER, Peñuela CLJ. Mapping the use of artificial intelligence in medical education: a scoping review. BMC Med Educ. 2025;25(1):526. https://doi.org/10.1186/s12909-025-07089-8. PMID: 40221725; PMCID: PMC11993958.

Grunhut J, Wyatt AT, Marques O. Educating Future Physicians in Artificial Intelligence (AI): An Integrative Review and Proposed Changes. J Med Educ Curric Dev. 2021;8:23821205211036836. https://doi.org/10.1177/23821205211036836. PMID: 34778562; PMCID: PMC8580487.

Naseer MA, Saeed S, Afzal A, Ali S, Malik MG. Navigating the integration of artificial intelligence in the medical education curriculum: a mixed-methods study exploring the perspectives of medical students and faculty in Pakistan. BMC Med Educ. 2025;25(1):273.

Weidmann AE. Artificial intelligence in academic writing and clinical pharmacy education: consequences and opportunities. Int J Clin Pharm. 2024;46(3):751–4.

Tolentino R, Baradaran A, Gore G, Pluye P, Abbasgholizadeh-Rahimi S. Curriculum Frameworks and Educational Programs in AI for Medical Students, Residents, and Practicing Physicians: Scoping Review. JMIR Med Educ. 2024;10:e54793. https://doi.org/10.2196/54793. PMID: 39023999; PMCID: PMC11294785.

Blanco MA, Nelson SW, Ramesh S, Callahan CE, Josephs KA, Jacque B, Baecher-Lind LE. Integrating artificial intelligence into medical education: a roadmap informed by a survey of faculty and students. Med Educ Online. 2025;30(1):2531177. https://doi.org/10.1080/10872981.2025.2531177. Epub 14 Jul 2025. PMID: 40660466; PMCID: PMC12265092.

A. S. Artificial intelligence literacy: a proposed faceted taxonomy. Digital Library Perspectives. 2024;40(4):681–99.

Robertson AC, Fowler LC, Niconchuk J, Kreger M, Rickerson E, Sadovnikoff N, et al. Application of kern’s 6-Step approach in the development of a novel anesthesiology curriculum for perioperative code status and goals of care discussions. J Educ Perioper Med. 2019;21(1):E634.

Gunning DAD. DARPA’s explainable artificial intelligence (XAI) program. AI Magazine. 2019;40(2):44–58.

Collins GS, Dhiman P, Andaur Navarro CL, Ma J, Hooft L, Reitsma JB, et al. Protocol for development of a reporting guideline (TRIPOD-AI) and risk of bias tool (PROBAST-AI) for diagnostic and prognostic prediction model studies based on artificial intelligence. BMJ Open. 2021;11(7):e048008.

Hogg HDJ, Al-Zubaidy M, Talks J, Denniston AK, Kelly CJ, Malawana J, et al. Stakeholder perspectives of clinical artificial intelligence implementation: systematic review of qualitative evidence. J Med Internet Res. 2023;25:e39742.

Shabbir A, Rizvi S, Alam MM, Su’ud MM. Beyond boundaries: navigating the positive potential of ChatGPT, empowering education in underdeveloped corners of the world. Heliyon. 2024;10(16):e35845.

Acknowledgements

The author thanks the volunteer reviewers for assisting with study screening and data extraction, and acknowledges the use of OpenAI’s ChatGPT for language refinement and grammar improvement in non-analytical sections of the manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The author declares no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Ahsan, Z. Integrating artificial intelligence into medical education: a narrative systematic review of current applications, challenges, and future directions. BMC Med Educ 25, 1187 (2025). https://doi.org/10.1186/s12909-025-07744-0
