Machine learning for painting conservation: a state-of-the-art review

作者:Van der Snickt, Geert

Imaging techniques such as high-resolution digital photography, (3D) digital microscopy, Ultra-Violet Induced Visible Fluorescence Photography (UIVFP), Infrared Photography (IRP), Infrared Reflectography (IRR), Infrared Thermography (IRT), and X-ray Radiography (XRR), along with analytical techniques like hyperspectral imaging, Raman Spectroscopy (RS), Macro X-ray Fluorescence (MA-XRF), and Reflectance Imaging Spectroscopy (RIS), have become standard in the investigation, conservation, and restoration of paintings4. Several of these techniques operate beyond the visible light spectrum, such as X-ray radiation or infrared light, and allow for the non-invasive detection of hidden features such as underdrawings and pentimenti. These multi-modal datasets – particularly when combined – provide valuable insights into a painting’s construction, materials, and condition, as well as evidence of past interventions. This information supports conservation and restoration decisions, assists in the recording of object condition, informs scholarly research, and offers new ways to visualise and engage with paintings. However, the diversity of materials and the complex stratigraphy of paintings make interpretation difficult12. Furthermore, these techniques produce increasingly large and complex datasets, often in the form of vast multi-dimensional datacubes, making fully manual analysis no longer feasible. In recent years, researchers have begun exploring computational approaches, including ML, to analyse these datasets.

Underdrawings and pentimenti in a painting are traditionally studied by means of broadband IRR. In order to improve the selective visualisation of these features, Karella, Blažek, and Striová processed RIS data collected in the visible spectra-near infrared (VIS-NIR) range, using a supervised modern deep learning approach, more specifically a CNN (see Table 1)12. A CNN is a special kind of FNN (i.e., the output value of a unit of some layer becomes an input value of each of the units of the subsequent layer) that significantly reduces the number of parameters in a deep neural network with many units without losing too much in the quality of the model10. CNNs have found applications in image and text processing, where they beat many previously established benchmarks. RIS is a quick, non-invasive, in situ technique adaptable to various modalities and bandwidths. Karella et al. used it to scan mock-ups and two historical paintings in the VIS-NIR range (380–2500 nm), in a pixel-by-pixel manner. The result was a multispectral set of 32 reflectance images, each corresponding to a specific wavelength (16 in VIS and 16 in NIR). However, the resulting images display pixels with contributions of multiple layers, among which is the hidden underdrawing. Separating the layers and displaying the underlying layer without features coming from the surface VIS layer is a difficult task. The transition from VIS to NIR for painting materials is hard to describe, since no precise relation exists to characterise it. The function that can be used to describe the transition is non-linear. Since the characteristics of the materials used in a painting are highly reliant on a variety of elements, such as the pigments’ grain size, each painting has a unique estimated function. ML algorithms can be utilised to estimate the non-linear relation representing this transition and unmix both signals. The specific aim of the CNN in this study was to suppress VIS information found in NIR. Modern data (i.e., data collected from physical replicas or samples created or gathered for the task at hand), in this case mock-ups were used as a basis to create virtual phantoms, which is artificial data (i.e., artificially generated data that mimics the characteristics of real-world data but is created through algorithms, simulations, or models) that can vary in size, material count, or underdrawing ratio. Both the mock-ups and virtual phantoms were labelled to be used as input data. To show the network’s applicability in real-world scenarios, it was further applied to historical data (i.e., data gathered from historical objects) collected from paintings. The results suggest that the model performs well in handling colour transitions and mitigating the effects of high-brightness points from the VIS layer. However, some imperfections persist, such as visible brushstrokes from the VIS layer that remain in the enhanced reflectogram. Despite these remaining flaws, the networks effectively reduced noise and enhanced the visibility of details in the paintings. Additionally, the background has been altered to a firmer one, further improving the overall quality of the reflectogram. In the future, focussing on enhancing underdrawings that are executed with materials that contrast less intensely with paint, such as iron gall ink, metal point stylus or sketching material pigmented with earth pigments, vermilion or lake pigments, could lead to new insights into underdrawing practices. To further improve underdrawing detection, more sophisticated training data—both artificial and modern—that accounts for the multi-layered paint stratigraphy in historical paintings should be employed. This will deliver more accurate results when the model is applied to historical data. Further, the benefits of more advanced neural network models, such as a Residual Network (ResNet) to prevent overfitting a model to a certain task, or transformer-based models, can be explored. A ResNet is a deep neural network architecture designed to address the challenges of training very deep networks, particularly the problem of vanishing gradients13. It introduces residual learning, where shortcut (skip) connections bypass one or more layers, allowing the network to learn residual mappings instead of directly fitting the desired transformation. This improves gradient flow, making it easier to train deep models effectively. The core component of ResNet is the residual block, which helps preserve important features and prevents information degradation. This architecture has been widely used in image classification, object detection, and other deep learning applications, enabling models with hundreds or even thousands of layers to be trained successfully.

XRR imaging is another much-used technique to obtain scientific images, but in contrast with IR methods, it is performed in transmission mode14. In XRR, the material’s physical thickness, its density, as well as the atomic number of its constituting elements, affect the attenuation of X-rays. These parameters directly define the brightness of the pixels in the resulting grey value image. Also, here, the fact that radiographies are two-dimensional representations of three-dimensional objects that consist of multiple layers (support, ground layers, painting layers, varnish, etc.) involves interpretation issues. In addition, the radiograph will potentially show any element in the front, rear, or even inside the painting that has, e.g., a reinforcing function. Specifically for paintings, typical issues are wooden cradles or stretcher bars, metal fixings, as well as features from the support itself (such as the wood grain, or even the topographic imprint of the canvas weave on a radio opaque paint layer). To eliminate the visible cradling on X-ray images of paintings on wooden panels, Platypus, a software based on image processing algorithms, has been created. Further confusion can arise when the support is painted on both sides (e.g., the wings of an altarpiece), if the artist recycled the painting and covered it with another composition, or if the design was modified throughout the creative process15,16. Although several methods have been explored to separate layers in XRR images, so far, the resulting images have never been entirely selective17,18. Furthermore, the tested ML methods were developed to separate radiographies recorded on paintings with artworks on both sides. RGB images of both sides were required as input, implying that ML was not applicable to paintings with concealed designs.

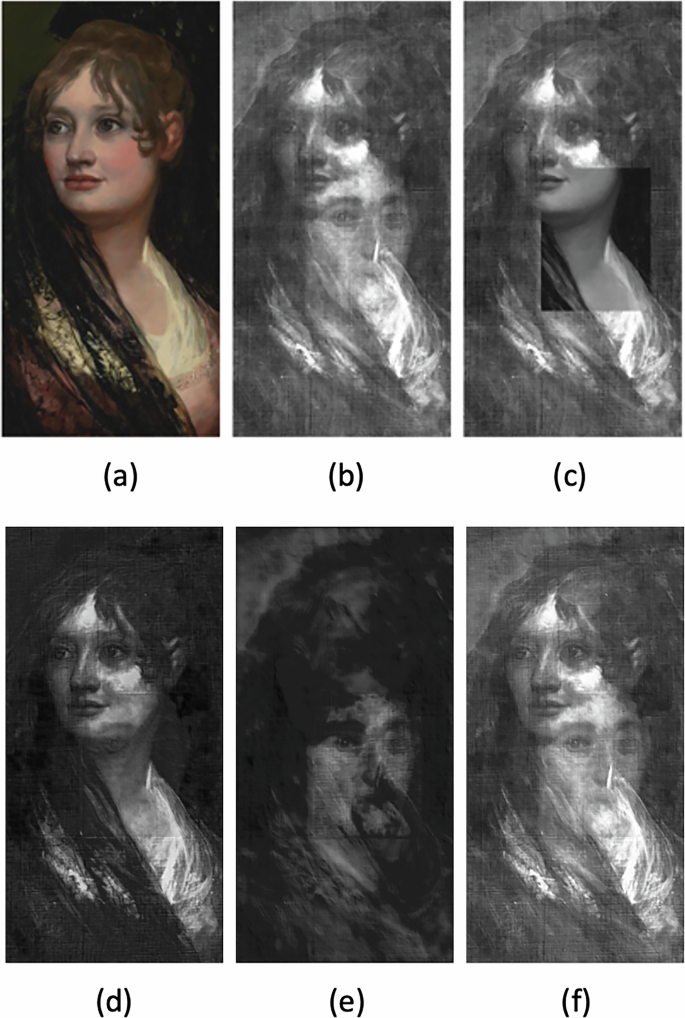

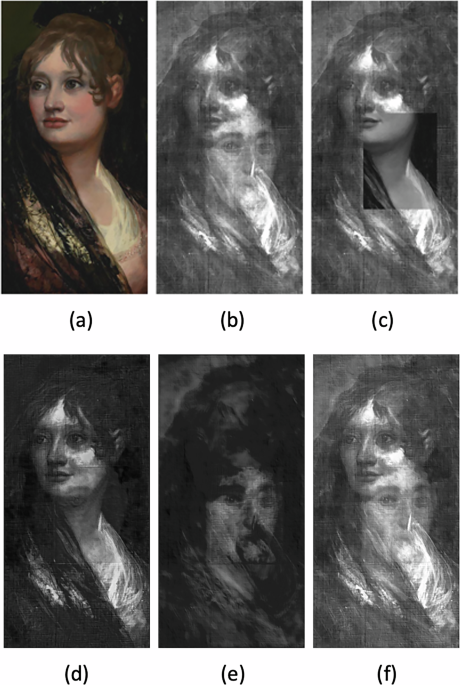

In order to separate mixed X-rays, Pu et al. modelled a separation network consisting of a classic neural network (a FNN) and a modern deep learning method (a CNN) (see Table 1)16. The separation network proposed here is the first that works for both double-sided paintings as well as for paintings with concealed designs. The self-supervised network was trained on an unlabelled dataset consisting of an artificially mixed XRR (two separate sections of a single painting were overlaid on each other) and an RGB image of the two sections visible at the surface. The network was further tested on historical data gathered from a double-sided painting, of which the mixed XRR and an RGB image of only one side of the painting were used as input. Finally, the network was also tested on historical data collected from a painting with a concealed design, of which the mixed XRR and an RGB image of the surface were used as input (Fig. 1). There are still some issues with the image separation, such as blurring in certain areas. However, these final images exhibit more of the character expected of X-ray images, making them likely to feel more familiar and appealing to conservators. Since this method takes images as input, it can also potentially be applied to other types of imagery holding mixed compositions in the future, such as infrared reflectograms, MA-XRF element distribution maps, or outputs from RIS. However, it is important to consider that the resulting images from IRR, RIS, and XRF might be more difficult to separate compared to XRR images due to the complex reflectance response of mixtures and layers of materials.

a RGB image of the surface painting. b Mixed X-ray image. c Manually modified image of the surface painting. d The reconstructed X-ray image of the surface painting. e The reconstructed X-ray image of the concealed design. f The artificially mixed X-ray from the separated results. Pu et al.16. Licensed under CC BY 4.0 (http://creativecommons.org/licenses/by/4.0/).

Pigment analysis

To safely preserve paintings, it is necessary to have a full understanding of the materials employed by the artist and materials used in later interventions, as well as their chemical structure and condition of preservation19. Knowing the pigments used by an artist can be essential in the art historical appraisal of the painting or can be an important parameter in the decision-making process of a conservator when developing a strategy for treatment or preventive conservation. In particular, pigment identification can allow distinguishing overpaint, whereas it impacts the choice of retouching materials, preventing metamerism and assimilating ageing with the original paint. In the context of collection management, risk pigments can be identified, allowing for optimising storage conditions with regard to humidity, temperature, and light exposure while implementing close monitoring and early warning. Many analytical and chemical imaging techniques have been developed for this purpose. Spectroscopic techniques such as MA-XRF, Raman spectroscopy, and RIS provide a wealth of information, such as chemical data related to the pigments used in a painting. Large datasets are then typically analysed using chemometric techniques. Chemometric techniques are used to analyse complex spectroscopic data by applying multivariate statistical methods to extract meaningful information, reduce dimensionality, and build predictive models. These techniques, such as Principal Component Analysis (PCA), help in tasks like quantifying chemical concentrations, identifying components in mixtures, and classifying samples based on spectral features. Nonetheless, techniques such as PCA are based on a linear mixing assumption, which does not accurately reflect the way pigments combine in painted artworks20. Artists traditionally created paints by finely grinding and mixing pigments, leading to what are known as intimate mixtures, where pigment particles are in close physical contact within the paint layer. The resulting reflectance spectrum of such a mixture cannot simply be described as the sum of the individual pigment spectra. Instead, it exhibits a non-linear relationship influenced by the concentration of pigment particles and their absorption and scattering properties, both of which vary with wavelength. Consequently, while such linear models can serve as a useful initial step for identifying spectral endmembers, manual interpretation is still necessary to determine the rest of the endmembers present. To address the non-linearity caused by intimate pigment mixtures in spectral data, a number of researchers have investigated the application of the Kubelka–Munk (KM) model. KM is a mathematical model that provides a framework to calculate the reflectance of opaque materials, such as pigments, based on its absorption (K) and scattering (S) coefficients. Alternatively, rather than relying solely on a physics-based approach, ML methods may offer a solution, as they are well-suited to capturing complex, non-linear relationships within data.

Currently, the primary goals of applying ML to the analysis of spectral and chemical data in cultural heritage are pigment identification and pigment unmixing. Pigment identification is a matter of classification that establishes whether a pigment is present or absent based on the given spectrum. In their study, Jones et al. employed a supervised modern deep learning approach, namely a CNN (see Table 1)21. For the input data, they created an extensive labelled dataset of artificial XRF spectra employing Sherman’s function. The function can be used for generating XRF spectra from a sample of known composition. It describes how the fluorescence intensity emitted by elements in a sample correlates with their concentration. The relationship is governed by physical coefficients, known as fundamental parameters, which vary for each element and its characteristic X-ray line. To generate artificial spectra with the Fundamental Parameter (FP) method, the concentrations of all elements in the sample must be known, along with the relevant fundamental parameter values for each element. After testing the model for the first time with both artificial and historical data, transfer learning (i.e., applying a pre-trained model for a different task) was performed by finetuning the model (i.e., a pre-trained model is further trained on a new dataset). This significantly improved the performance of the model on historical data.

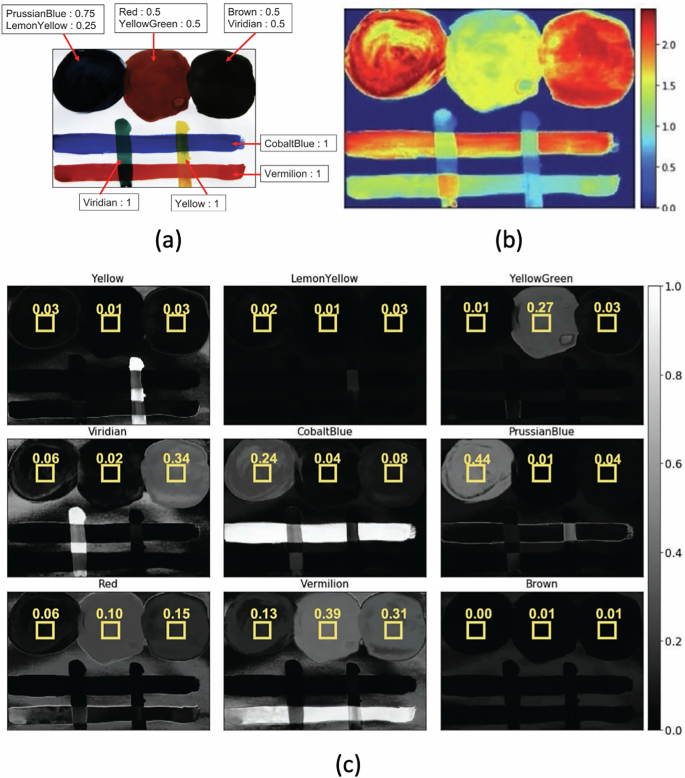

Pigment unmixing is the quantitative decomposition of a given spectrum into its endmembers (EM) or base constituents22. The EMs represent the pure materials utilised to create the mixtures, while showing the contribution of each EM to each pixel of the image. The process of unmixing is then split into two tasks: identifying the EMs and estimating the contribution of each EM. For pigment unmixing, multiple models have been tested (see Table 1). Shitomi et al. modelled an unsupervised classic neural network, namely a physics-based autoencoder, for pigment unmixing and estimated the thickness of pigments in layered surface objects (see Table 1)23. An autoencoder is a feedforward neural network with an encoder-decoder architecture10. It is trained to reconstruct its input. The output of the model should be as similar to the input as possible. An important detail here is that an autoencoder’s network looks like an hourglass with a bottleneck layer in the middle that contains the embedding of the D-dimensional input vector; the embedding layer usually has much fewer units than D. The goal of the decoder is to reconstruct the input feature vector from this embedding. The autoencoder was trained and tested by Shitomi et al. with unlabelled data from mock-ups obtained within the VIS range and artificial spectral data based on KM (Fig. 2). Choosing a physics-based approach to generate data has its limitations because the accuracy of the estimates by the algorithm then depends on the physical information provided by the spectra. However, the researchers demonstrated the superiority of their method by comparing the model to a supervised learning approach using data generated with KM.

a RGB image of the mock-up. b Estimated pigment layer thickness. c Estimated mixing ratios. Adapted with permission from: Shitomi, R. et al.23. © Optical Society of America.

A review of the input data employed in studies on pigment analysis reveals that most researchers rely on artificial data to supplement historical sources. However, several studies have used historical data solely for model evaluation or testing rather than for training. This approach appears less advantageous, as artificial and modern datasets are often less complex than those derived from historical paintings. Inevitably, such data lacks certain characteristics found in spectral data collected from historical works. Firstly, the effects of weathering, ageing, and fading significantly influence the reflectance properties of pigments22. While ageing can be modelled to some extent, numerous variables are involved. The change in a pigment’s reflectance depends on its composition, exposure to light, and environmental conditions—factors which are often unknown. Secondly, the material composition of the painting as a whole—particularly the variety and mixtures of pigments—must be considered21,22,24,25. Elements such as the substrate (e.g., canvas, wood), the preparation layer, the sequence of paint applications (e.g., highly absorbing pigments may block radiation from underlying layers), the binder, the thickness and depth of pigment layers (e.g., thin applications like glazing), pigments containing trace chemical components, pigments with similar elemental characteristics, rare pigments, mixing ratios, and surface coatings like varnish all add to the complexity of correctly identifying and unmixing pigments. Finally, certain variables affecting the identification and separation of pigments are difficult to label explicitly24. Unsupervised learning methods present a promising approach, as they are capable of extracting latent features from spectral data without the need for labelled inputs.

Alongside the study of pigments, examining the materials used as binders and varnishes is essential for a fuller understanding of a painting’s composition and condition. These organic substances not only reflect artistic practice but also play a role in how a work ages and responds to its environment. Although researchers in other disciplines have already used ML to interpret complex data from techniques such as Gas Chromatography-Mass Spectrometry (GC-MS) and Fourier Transform Infrared Spectroscopy (FTIR), these approaches have yet to be applied to paintings in this context. As more relevant examples from historical artworks become available, such tools could offer valuable support in the analysis of these often-overlooked components, building on the progress already made in pigment analysis.

Damage detection

Polychrome surfaces in paintings are susceptible to physical degradation as a result of numerous internal and external factors1. Problems with the painted layers of an artwork can be attributed to the materials employed by an artist, as well as to combining incompatible materials and treating the support. In addition, the conservation history of a painting often affects its condition and stability substantially. In order to design an appropriate preservation strategy, it is necessary to document the various types of damage a painting may have. This is typically done by visual inspection in combination with assessing scientific imagery recorded with various techniques such as VIS photography, UIVFP, IRP, IRR, IRT, and XRR. However, a sound and comprehensive interpretation of these images requires the trained eye of one or ideally multiple experienced conservators. In addition, the volume of data that needs to be examined can make this a challenging job in the context of a conservation treatment that is typically time sensitive, while annotating data to document all damages can be a time-consuming task. Considering that human examination is time-consuming and requires experienced staff, the process of damage identification, classification, and documentation may benefit from the use of swift and automatic interpretation techniques such as ML.

One of the most frequent deteriorations seen in paintings is cracking, also known as craquelure26,27. In general, pressures that build up within or on paint layers due to a variety of reasons typically result in the material breaking, leading to cracks. ‘Age cracks’ are usually caused by the substrate expanding or contracting, with the ensuing cracks running through the entire paint layer stack of a painting. These changes in the substrate are often due to fluctuations in temperature and relative humidity. The entire paint buildup might also be subjected to mechanical cracks1,28. This type of cracking may be generated by transport vibrations, framing stress or other types of physical impact. Premature cracks affect only one layer of paint and are less defined than age cracks26,27. They typically indicate poor technical execution during the painting process, such as applying a fast-drying layer on top of a slow-drying layer or applying a paint layer on top of a paint that is not dry enough. Finally, varnish cracks appear exclusively in the varnish layer when oxidation causes it to become brittle. Cracks can be visually classified as either dark cracks on a bright background or bright cracks on a dark background. Additionally, they differ in width from fine hair-like lines to wide, open cracks exposing layers below, and sometimes extending into areas of missing paint. They create a pattern that might be entirely random or in the shape of trees, webs, rectangles, circles, or unidirectional.

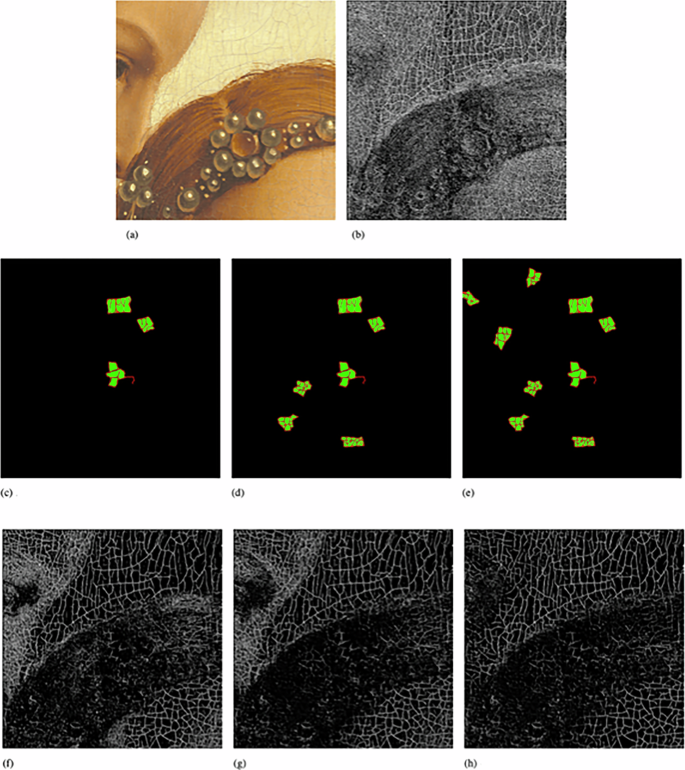

The most recent developments in crack detection were made by Nadisic et al., who proposed DAL4ART, a supervised active learning framework utilising a modern deep learning algorithm, more specifically a CNN (see Table 1)29. The historical data used as an input dataset consisted of labelled multi-modal images acquired with VIS, IRP, IRR, and XRR during the restoration campaign of the Ghent Altarpiece. After training and testing the model to detect cracks, fast re-learning (i.e., retraining a model from scratch after adding new data to the existing training data), continuous learning (i.e., a model that can learn continuously over time from new data, without forgetting previous knowledge), and transfer learning methods were employed to integrate new information without overfitting the model to the assignment at hand (Fig. 3). For future research investigating the use of state-of-the-art U-Net models could deliver interesting results in crack detection. U-Net is a type of CNN architecture which is structured as a U-shaped network consisting of a contracting path (encoder) to capture context and a symmetric expanding path (decoder) for precise localisation30. The architecture enables efficient training with a small number of annotated data, such as images, and achieves high segmentation accuracy by employing skip connections that directly transfer feature maps from the encoder to the corresponding decoder layers.

a Original patch (visual macrophotography). b Crack map produced by a pre-trained CNN. c Manual annotations for the 1st iteration of active learning. d Manual annotations for the 2nd iteration of active learning. e Manual annotations for the 3rd iteration of active learning. f Crack map produced after the 1st iteration of active learning. g Crack map produced after the 2nd iteration of active learning. h Crack map produced after the 3rd iteration of the active learning network. Nadisic et al.29. Licensed under CC BY-NC-ND 4.0 (https://creativecommons.org/licenses/by-nc-nd/4.0/).

Another common form of damage is paint loss. The regions with paint loss, also known as lacunae, may result from a wide range of issues, including physical impacts such as abrasions, tears, etc., or from the combination of other forms of damages. To detect paint losses, Meeus et al. suggested a modern deep learning method that is semi-supervised and does not rely on a large number of labelled data (see Table 1)31. As input data, the same historical dataset consisting of scientific imagery taken of the Ghent Altarpiece as Nadisic et al. used were chosen. The proposed method utilises a translation invariant U-Net (TI-U-Net). After training and testing the model, transfer learning was employed to integrate new data from unseen images, with little additional retraining.

Contrary to other studies, Chirosca and Angheluta decided not to solely focus on one type of damage, but to examine works of art that showed various kinds of physical deteriorations (cracks, paint loss and blisters) in the painted layer with a modern deep learning approach (see Table 1)1. They employed a VGG-16 to obtain a damage map that was labelled with the types of damage they focused on. VGG-16 is a CNN architecture designed for large-scale image recognition32. It features 16 weight layers, consisting of 13 convolutional layers and 3 fully connected layers. The network is characterised by its use of small 3 × 3 convolutional filters throughout, which helps in capturing complex patterns while maintaining computational efficiency. VGG-16 was designed to demonstrate that increasing depth with small convolutional filters can improve network performance. Despite its effectiveness, it has a high computational cost due to its 138 million parameters, making it resource-intensive compared to more modern architectures. A historical dataset composed of high-resolution images taken during the documentation of an icon was utilised as labelled input data. Further improvement could be made by training a model for more types of damage, e.g., abrasion, flaking, water damage, etc.

IRT is an imaging method which can be used to detect damage in a painting. ML has been used to facilitate extrapolating damages from thermographs. Liu et al. specifically focused on enhancing Principal Component Thermography (PCT) to detect damages (see Table 1)33. PCT is a widely utilised technique in thermography where PCA is employed for improving damage detection and studying damage distributions. Liu et al. utilised a GAN algorithm and a modern deep learning algorithm to enhance PCT’s performance. The first one is a Spectral Normalised GAN (SNGAN). A GAN is a type of neural network consisting of two components: a generator that creates fake data, and a discriminator that distinguishes between real and fake data. The generator and discriminator are trained together in a competitive setting, where the generator aims to produce data that the discriminator cannot distinguish from real data. A SNGAN adds spectral normalisation to the discriminator to stabilise training, improving the quality of generated samples. The second approach that was tested is a CAE. A CAE is a type of neural network architecture designed for unsupervised learning tasks such as feature extraction and data compression. It consists of an encoder that compresses input data into a lower-dimensional latent representation using convolutional layers, and a decoder that reconstructs the input data from this compressed representation. The model is trained to minimise the difference between the original input and the reconstructed output, enabling it to learn efficient representations of the data. Liu et al. aimed to evaluate the relative impacts of the ML methods on PCT outcomes. The input dataset was comprised of historical data, namely images of a panel painting and artificial data in the form of those same images with artificial zones simulating damages at varying depths added onto them. The results show that both SNGAN and CAE outperform regular PCT, but CAE delivers the best results overall. In future studies, it may be interesting to investigate how deep learning techniques might be combined with other feature extraction techniques than PCT.

For damage detection, almost exclusively, historical data is used. However, it can be observed that some researchers have employed datasets with minimal variation in terms of style, period and artist. The use of different materials and painting techniques will result in different damage patterns. This creates an opportunity to experiment with the input data and introduce more variation by incorporating artificial data (like artificial damages), more complex mock-ups, and additional historical data to refine and improve the models. Most methods to date have relied on supervised learning, using manually labelled data according to the type(s) of damage present. However, labelling data can be highly time-consuming, making it worthwhile to explore semi-supervised and unsupervised learning methods further.

Virtual restoration

As mentioned before, the appearance of a painted artwork’s surface inevitably changes over time due to ageing, which affects its visual qualities. Cracks, paint loss, and other forms of deterioration may develop; varnish can yellow, and the colours of pigments may fade. Traditional art restoration methods—such as careful cleaning, retouching, and structural repair—are typically undertaken by skilled conservators34. Although these techniques can be effective, they often fall short of fully recapturing the artwork’s original authenticity. Yellowed varnish can be removed, paint losses and some other damages could be restored, but filling in all the craquelure and recovering the original colour of the pigments is not feasible. Consequently, striking a balance between preserving the artist’s intent and addressing damage remains a continual challenge. In this context, the integration of ML into art restoration has emerged as a promising development. Virtual restoration, driven by ML, is the most feasible means of recovering a painting’s original appearance35. It can also serve as a valuable simulation tool to support decision-making during the physical restoration process.

Virtual crack restoration offers a non-invasive method to enhance the visual appearance of paintings, particularly by digitally reducing the impact of surface cracks that are typically left untouched in physical conservation. These cracks, while essential to preserve for authenticity, can hinder the viewer’s appreciation of the artwork. To address this, Sizyakin et al. propose a supervised GAN method in which cracks are first detected using a crack detection algorithm, and then digitally inpainted using an Adaptive Adversarial Neural Network (aGAN) (see Table 1)35. The aGAN architecture consists of an autoencoder-based generator and a CNN-based discriminator, trained in an adversarial setup where each network improves through competition with the other. This design leads to sharper and more realistic inpainted images compared to traditional autoencoders that rely solely on pixel-based loss functions. The model is trained and tested on a historical dataset consisting of high-resolution images of two paintings from the Ghent Altarpiece—Annunciation Virgin Mary and Singing Angels—provided in three imaging modalities: VIS, IRP, IRR, and XRR. This multi-modal input improves the accuracy of virtual crack detection by providing rich, complementary visual information to guide the restoration process. While the current study focuses on these two works, the approach shows broader potential for digitally restoring historical paintings affected by craquelure or paint loss.

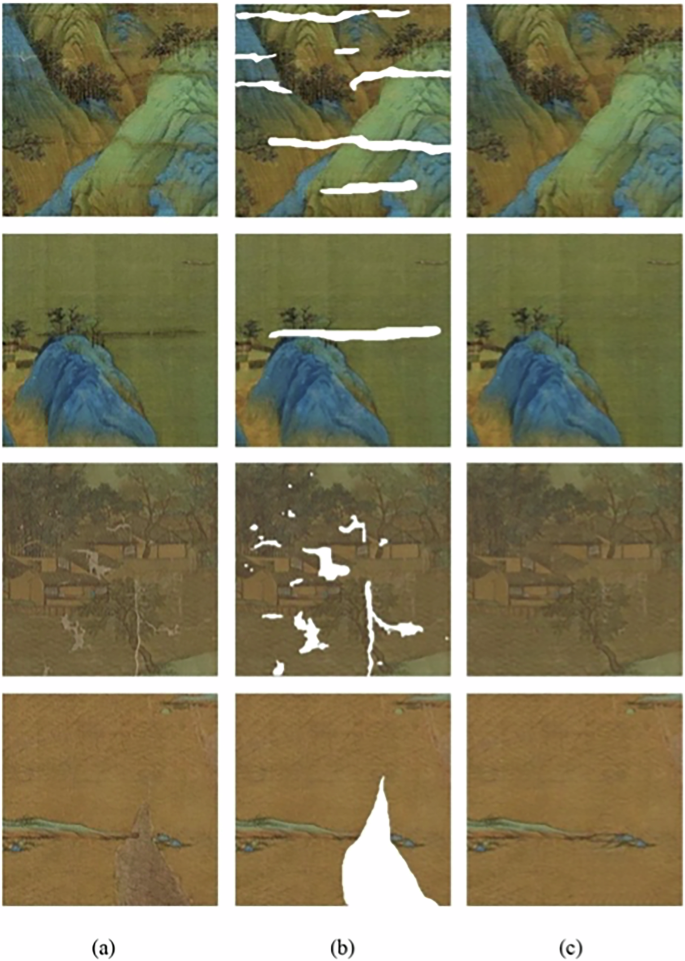

While many deep learning image restoration models perform well on natural images, they often struggle with paintings—leading to texture loss and overly smooth results. Enhancing the extraction of semantic features and improving feature transfer is essential for virtually restoring paintings while preserving their original texture and intricate details. Considering these issues, Sun et al. proposed a supervised GAN model to virtually restore paintings that leverages dual encoders and contextual information (see Table 1)36. The GAN-based model integrates a generator with two encoding branches: a regular encoding branch and a gated encoding branch. This aids in preserving the texture and detail of the painting. A discriminator then assesses the quality of the restored image. The model was trained and tested on two datasets: a dataset containing four famous Chinese paintings and a publicly available mural dataset. An artificial training dataset was created by simulating damaged artworks using pixel multiplication between original paintings and mask images, allowing for a range of breakage levels. The model was also validated on a historical dataset that consisted of damaged ancient paintings and murals, demonstrating its practical potential in digital heritage restoration (Fig. 4). Despite its success, the model still faces challenges in restoring mural details accurately, particularly in real-world applications.

a Real damaged paintings; b Paintings with manually labelled damaged areas; c Virtual restoration results. Sun et al.36. Licensed under CC BY 4.0 (http://creativecommons.org/licenses/by/4.0/).

Artwork conservation often involves the irreversible process of varnish removal to restore a painting’s original appearance. However, physical cleaning can be risky and time-consuming, prompting the need for virtual cleaning, a technique that simulates the cleaning process without physically altering the artwork. This assists conservators in making informed decisions about varnish removal. Previous methods of virtual cleaning have had limitations, including reliance on large training datasets, challenges in identifying specific colours, and restricted applicability to different types of paintings. To address these issues, Amiri and Messinger propose an unsupervised modern deep learning approach, more specifically a DGN, to virtually clean artworks in the spectral reflectance domain (see Table 1)37. A DGN is a type of deep learning architecture that learns to model complex data distributions and generate or reconstruct high-quality data from incomplete, noisy, or degraded inputs38. It typically consists of a generator that maps low-quality inputs to desired outputs, using multiple layers to automatically learn relevant features from the data. DGNs are often trained in an unsupervised manner, making them effective for tasks like image restoration, enhancement, and generation, where the goal is to predict or generate realistic data without the need for labelled examples. The DGN method proposed by Amiri and Messinger requires a small section of the painting to be physically cleaned. By estimating the relationship between the cleaned and uncleaned parts, the DGN can estimate the unvarnished appearance of the entire painting. This technique was tested using both artificial data (Macbeth ColourChecker) and historical data collected in the form of hyperspectral imagery of Georges Seurat’s Haymakers at Montfermeil. The DGN method improves the accuracy of virtual cleaning, which also allows for pigment mapping and identification before any physical cleaning occurs. However, the method’s reliance on a small pre-cleaned area poses a limitation, as it may not always be feasible to obtain a representative cleaned section. Despite this, the method still produces satisfactory results even with less representative pre-cleaned areas.

When restoring an artwork, damages such as paint loss are retouched; however, retouching large areas where the pigments have faded is generally not accepted. By virtually recolouring an artwork, the original colours and hues of pigments can be brought back. Unfortunately, the availability of quality datasets for training deep learning models is often limited due to various challenges. To address this, Bombini et al. proposed a modern deep learning methodology that utilises a supervised ViT to virtually recolour a painting (see Table 1)39. A ViT is a deep learning architecture that treats an image as a sequence of fixed-size, non-overlapping patches40. These patches are linearly embedded into vectors and fed into a standard transformer model. The transformer uses self-attention to capture relationships between patches, enabling the model to learn global dependencies and make predictions for tasks like image classification. This approach departs from traditional CNNs by relying on transformers instead of convolutions to process spatial information. The input to the ViT employed by Bombini et al. is an artificial MA-XRF dataset, generated using the ganX algorithm, and reduced using deep variational embedding to decrease the disk size of the input dataset and to extract relevant spectral features independent of the measuring apparatus or the measurement conditions41. The embedded MA-XRF maps are then processed by a ViT trained to map the XRF data to RGB images and thus virtually recolour an artwork.

For the virtual restoration of paintings, both artificial and historical data are employed in equal measure. However, artificial data is more commonly used to train the model, while historical data is typically used to test it. In an ideal scenario, historical data would also be used for training, as artificial data tends to be less complex. Furthermore, future research should focus on expanding the training dataset to encompass a wider range of paintings from various styles, periods, and artists36. This would ensure greater diversity in potential damage types, varnish types, and pigment compositions, all of which degrade in distinct ways. Regarding varnish removal, future studies should address the challenges linked to the requirement for a cleaned region to train the model37. As for the type of algorithms, it is evident that generative models are favoured for virtual restoration. These models excel at filling in damaged or missing sections of artwork, which is particularly important when dealing with incomplete or deteriorated pieces. Since these models can often be trained in an unsupervised manner, without the need for labelled data, such as fully restored examples, they are particularly valuable in virtual restoration, where such data may be limited or unavailable. An important future direction lies in advancing virtual restoration from mere visual enhancement towards applications such as the simulation of conservation treatments.

Damage prediction

Panel paintings are artworks with distinctive hygroscopic and heterogeneous properties42. The hygroscopic nature is primarily caused by the presence of a wooden support (i.e., the panel), which is very prone to water, particularly when changes in temperature (T) and relative humidity (RH) are at play. The heterogeneous nature is caused by the presence of layers of various materials, such as the wooden support, the ground (usually a mixture of animal glue and chalk), oil-based paint layers, and resin varnishes. Damage in the pictorial layer can occur due to a mismatch between the wooden panel’s moisture response and that of a brittle ground layer. Variations in the (micro)climate, for instance, when travelling from one exhibition to another, can result in a negative impact on the mechanical integrity and qualities of paintings. Therefore, a solid understanding of the mechanical behaviour of panel paintings under microclimatic fluctuations is essential for their conservation. Countless research endeavours have relied on monitoring, experimental, and numerical projects, collecting a vast amount of data to develop strategies for minimising damage. Yet, because the researchers must adhere to the timelines of microclimatic variations and fluctuations as well as the characteristic response time of multi-material objects during diffusive phenomena, such projects are often laborious. As a solution to current time-consuming research projects, the generalisation abilities of ML, along with established failure criteria from the fields of mechanical engineering and fracture mechanics, can be combined to obtain reliable findings and guidance on how to move forward with conservation practices. It could speed up the decision-making process regarding preventive conservation of paintings and collections and add value to the process.

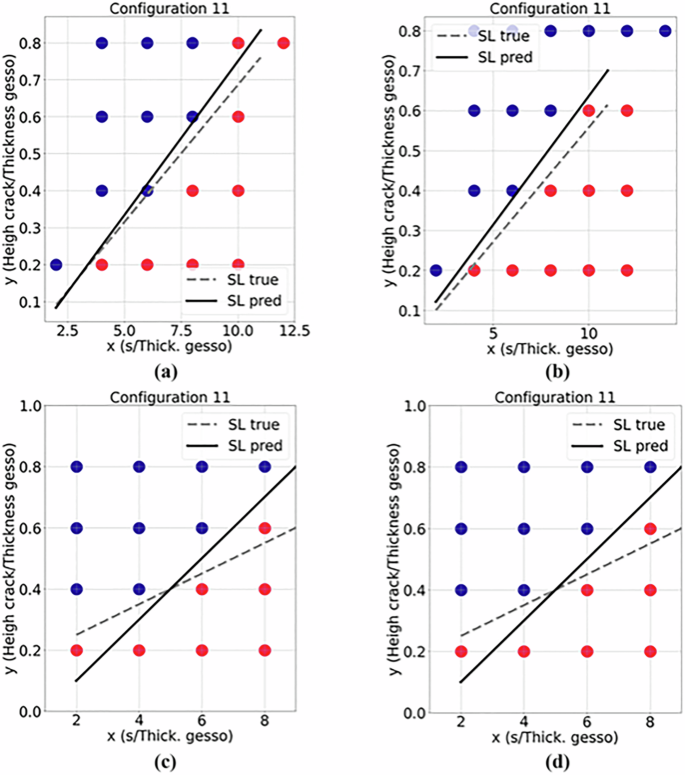

Califano et al. proposed a traditional ML methodology for predicting climate-induced cracking in wooden panel paintings by combining parametric Finite Element Modelling (FEM) with supervised ML techniques (see Table 1)42. A simplified three-dimensional FEM model was developed to simulate a panel painting incorporating a penny-shaped crack within the gesso layer. Across 27 configurations, the researchers varied key parameters such as gesso thickness, wood species (lime, spruce, poplar), and the ratio between the thicknesses of wood and gesso layers. The simulations applied the Strain Energy Density (SED) criterion to assess whether each configuration could be considered structurally sound (safe) or at risk of further crack propagation (unsafe). Two supervised ML algorithms were employed for this purpose: XGBoost and GPR. XGBoost is a high-performance ensemble method that constructs predictive models by sequentially adding decision trees, each one aimed at correcting the errors of its predecessors. It optimises a loss function via gradient descent and is recognised for its scalability and efficiency, particularly with lower-dimensional datasets. In contrast, GPR adopts a non-parametric Bayesian approach, modelling the relationship between inputs and outputs as a probability distribution over functions. This allows it not only to produce point estimates but also to quantify the uncertainty of its predictions through associated standard deviations. Both models were trained on artificial data consisting of 2D sub-models of FEM simulations and subsequently tested on previously unseen configurations. The findings indicate that both algorithms performed well in approximating SED values, though prediction accuracy was influenced by material characteristics; lime wood, for example, exhibited greater sensitivity to geometric and environmental variation, resulting in increased dispersion of simulation outcomes (Fig. 5).

True (grey dashed) and predicted (black continuous) separation lines for configuration 11 of the lime wood model subjected to four different cases (a–d) with varying ratios between the width of a single gesso island (s), the thickness of the gesso, and the height of the crack. The corresponding subplots (a–d), respectively, are shown. Califano et al.42. Licensed under CC BY 4.0 (http://creativecommons.org/licenses/by/4.0/).

Exploring the feasibility of applying ML techniques to a complete 3D model, rather than just 2D sub-models, could provide a valuable direction for further investigation. When the input data is based on a digital 3D model, more complex configurations that account for the layered structure of a painting, and how these layers interact with each other under varying climatic conditions, could be employed. Each material will respond uniquely to factors such as changes in humidity, potentially causing friction between different layers. Additionally, it is noteworthy that this study relies exclusively on artificial data. A 3D digital model cannot fully capture the history of a painting (e.g., climatic history or previous repairs). For instance, paintings often adapt to their specific climatic environment, even when the conditions are far from ideal. Consequently, when a painting is placed in a new climate, it may not respond well, even if the new environment is theoretically more suitable. This suggests that further possibilities lie in the use of historical data derived from previous measurements of deformations in historical objects. Although this approach would be more time-consuming, it could potentially yield more accurate results. Many factors that contribute to the degradation of a painting, such as the materials used, structural components (e.g., cradling), the surrounding climate, and the painting’s history, are difficult to model. Although supervised learning was employed in this study, using unlabelled data and unsupervised methods might provide a solution to these challenges. Additionally, comparing the output of an XGBoost model with that of a deep neural network could result in more accurate damage predictions, as deep neural networks are more adept at learning complex relationships. Finally, Califano et al. focused solely on the future progression of cracks in the gesso. However, paintings can suffer from various types of damage, and future studies could therefore consider other forms of damage. For instance, based on images of the surface of a painting, predictions of future paint losses or delamination could be made.

Discussion: cross-thematic insights

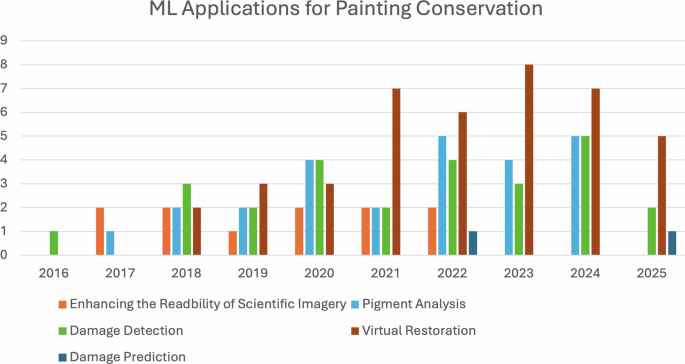

By examining the five thematic areas—enhancing the readability of scientific imagery, pigment analysis, damage detection, virtual restoration, and damage prediction—it becomes clear how data types, learning strategies, and algorithmic choices are shaped by the specific demands of each task. While these approaches vary across themes, recurring patterns can be observed in methodological design and data use. Figure 6 complements this analysis by providing a broader overview of the field, illustrating how research activity in each theme has developed over time. Importantly, it also includes studies published under more restricted access, offering a more comprehensive perspective on the evolution of machine learning in painting conservation.

The graph shows the yearly growth of machine learning applications in painting conservation, categorised by theme. Virtual restoration has seen the most significant rise since 2020, while pigment analysis and damage detection have grown steadily. Other themes like damage prediction and imagery enhancement appear more sporadically.

Across all five themes, historical data drawn from real artworks play a central role, reflecting the direct application of these methods to genuine conservation scenarios. Nevertheless, artificially generated data—such as simulated XRF spectra, synthetic damage masks, or digitally mixed X-ray images—is commonly used to augment these datasets, which may typically be limited in terms of volume and quality. This approach is particularly prevalent in pigment analysis and virtual restoration, where labelled real-world examples may be too scarce. Modern mock-ups, which replicate traditional materials and techniques in controlled settings, serve as a bridge between artificial and historical data and are frequently employed to validate models or supplement training datasets. However, as noted in pigment analysis and virtual cleaning studies, models trained predominantly on synthetic or modern data may underperform when applied to the complex, aged surfaces of historical paintings.

The choice of learning type varies according to the availability and nature of labelled data. Supervised learning is most prevalent, especially within pigment analysis and damage detection, where clearly defined input-output pairs allow for effective model training. However, the labour-intensive nature of data annotation has promoted a growing interest in semi-supervised and unsupervised methods, particularly in tasks like pigment unmixing, virtual cleaning, and damage prediction, where ground-truth labels are either unavailable or ambiguous. Self-supervised methods, though still emerging, show promise in reducing dependency on labelled data while preserving task relevance, as demonstrated in X-ray image separation and reflectogram enhancement.

Algorithm selection reflects both task-specific requirements and data structure. Modern deep learning architectures, particularly CNNs, are widely used in crack detection and infrared reflectogram enhancement—owing to their strong performance in visual pattern recognition. For spectral data, classic neural networks and traditional ML techniques (e.g., GPR, XGBoost, K-SVD) continue to be employed, offering a balance between interpretability and performance. GANs are prominent in virtual restoration work, where their capacity to generate plausible reconstructions without explicit ground truth makes them highly effective. Their capacity for unsupervised training further enhances their suitability for restoration scenarios.

A central insight emerging across the thematic discussions is that the generalisability of ML models in conservation contexts is contingent not solely upon model architecture or learning type, but critically upon the variability, complexity, and realism of the training data employed. As explored in the sections on pigment analysis and damage detection, models developed from narrowly delimited datasets—constrained, for instance, by artist, historical period, or material type—frequently exhibit limited robustness when applied to broader collections. Accordingly, future work might more productively orient itself toward the curation of training corpora that encompass a wider spectrum of materials, techniques, and degradation phenomena, thereby affording models greater flexibility in the face of real-world conservation challenges. In this context, efforts at predictive modelling of damage processes would benefit from the integration of both artificial and empirically derived data, including the incorporation of three-dimensional models that more accurately approximate the layered and often non-linear material interactions characteristic of painted surfaces.

Across all domains examined, the integration of ML into conservation research underscores both its transformative potential and its methodological and conceptual tensions. The field is marked by an emergent methodological plurality, with approaches adapted to task-specific constraints while remaining responsive to the material and ethical stakes of cultural heritage conservation. The enduring need for closer and more sustained collaborations between conservation practitioners and ML specialists remains clear. Such partnerships are indispensable not only for the articulation of research questions that are materially and contextually grounded but also for ensuring that computational innovations, often abstracted from the contingencies of practice, translate into outcomes that are meaningfully aligned with conservation priorities. Here, the question is not simply whether models work in a technical sense, but how and under what assumptions their outputs come to matter within the complex, iterative work of conserving painted heritage.