Society / StudentNation / October 6, 2025

With platforms like Boston University’s “TerrierGPT,” the pressure for students to use AI is coming from colleges themselves, even as researchers warn of long-term consequences.

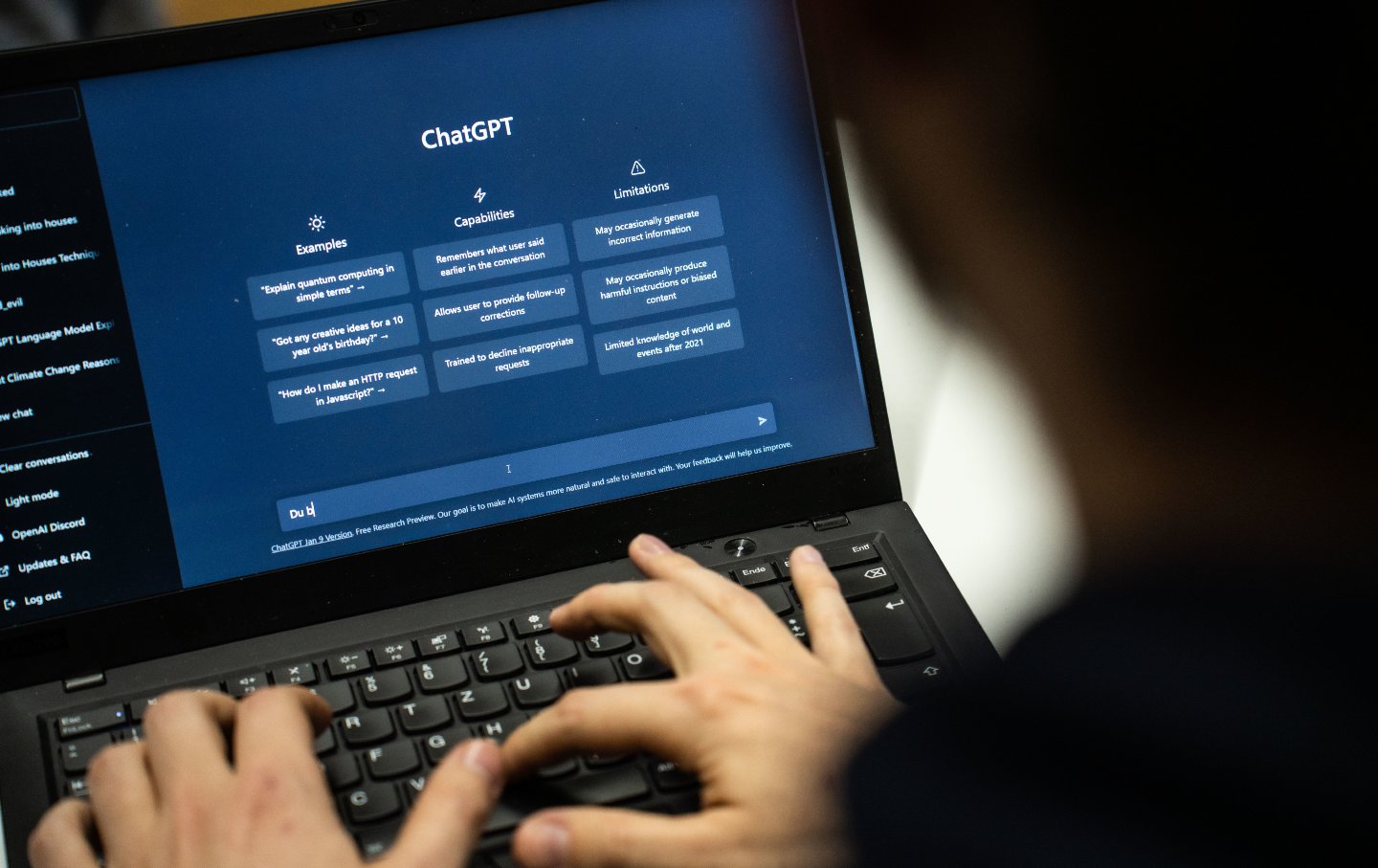

The artificial intelligence tool ChatGPT.

(Frank Rumpenhorst / Getty)

Often, when a college student receives an e-mail from their school during the summer—a message from the bookstore or a reminder about moving in—it is quickly glossed over. But when Quinn, a sophomore at Boston University, saw the letters “GPT” in their inbox in late July, they immediately took notice.

The e-mail was sent by the university’s “AI Development Accelerator” and the IT help center, and introduced TerrierGPT, a new artificial intelligence–powered chat platform that granted students access to the most widely used generative AI (GenAI) models.

Quinn, a graphic design and anthropology student in the College of Fine Arts, expressed skepticism about the school’s embrace of the new technology. On the Internet, they said, “AI is getting shoved down my throat every second.” But now, the pressure to use artificial intelligence would be coming from the university itself.

The use of generative AI has become pervasive among college students, with more than one-third of 18- to 24-year-olds in the United States already using ChatGPT. According to the e-mail sent to students, TerrierGPT is meant to “ensure all students have equitable access” as more large language models (LLMs) become pay-to-use. For some, this implies that those who don’t have access, or who willingly choose not to use AI, are at a disadvantage. “It sort of seems like peer pressure,” Quinn said. “I think there are plenty of ways for us to reform learning so people can still keep up and not use AI.”

In fall 2023, Boston University formed a task force to study the impact and usage of AI, which later recommended that the school “should not universally prohibit or restrict the use of GenAI tools.” The result is TerrierGPT. The university has not created its own model; rather, it offers a free internal gateway through which students can access preexisting artificial intelligence programs. BU is just one of several institutions that have adopted a “critical embrace” of these tools. According to a recent report from Inside Higher Ed, the demand for AI education and training has grown exponentially, as employers increasingly prefer candidates proficient in artificial intelligence. In June, Ohio State University announced that it was introducing an “AI Fluency Initiative” for undergraduate students to teach them how to use AI effectively.

“Many students have been discussing how their internships or potential future employers encourage them to become more well-versed in LLMs and how to prompt them appropriately,” said Tye Robison, a senior in the university’s data science program and a paying subscriber to ChatGPT Plus. “I think the consensus on campus is that data science majors will need to know how to use AI efficiently regardless of if they want to or not,” she said.

Amélie Tonoyan, a sophomore English and philosophy student at Boston University, said that most of her professors have avoided artificial intelligence “and emphasize that our writing must never utilize it.” When professors do allow AI in humanities classes, where writing and critical thinking are integral to the coursework, the usage guidelines are clearly outlined. One of Tonoyan’s professors, for example, asked her class to prompt ChatGPT to write an essay about Plato’s Republic and then to critique the output in their own writing. In this case, the AI model’s work was used to point out gaps in the technology and to reaffirm the importance of a human voice.

But what starts with professors encouraging students to experiment with AI for small tasks, critics argue, can evolve into a bad habit—one that will be difficult to rewire down the line. “With no shame to anybody that uses it if they feel behind in class,” said Quinn, “but I also see people use it in circumstances where they really could just make an effort to learn.” Though Boston University’s vision for TerrierGPT is that students will be able to foster their critical thinking skills, recent research links excessive use of ChatGPT to lower brain engagement and a condition called “cognitive debt,” in which a short-term deferring of mental effort leads to long-term consequences.

The preliminary findings of the study, conducted by the MIT Media Lab, stated that “repeated reliance on external systems like [large language models] replaces the effortful cognitive processes required for independent thinking.” Tonoyan believes the integration of artificial intelligence defeats the purpose of her education. “It’s going to stifle our creative impulses,” she said. “It not only broadens the scope for academic dishonesty, but it takes away the playful mystery inherent in the learning process.”

Nataliya Kosmyna, one of the scientists who contributed to the study, explained that researchers are still in the early stages of discovering the long-term impacts of AI use. “We chose to release a preprint now because of the rapid deployment and rapid impact on the society humans are living in. Education and academia have to evolve as anyone else at the time of change,” Kosmyna said. “Because LLMs are here to stay, the question is, will it change education for [the] better?”

“Our educators have successfully guided students through previous waves of technological change,” said Colin Riley, a spokesperson for Boston University. “We are confident that BU’s approach—thoughtful curriculum design and ongoing faculty assessment—will prepare students to thrive in an ever-changing workforce, whether influenced by AI or other emerging factors.”

Thomas Yousef, a senior studying data science at BU, mainly uses AI for his coding and math classes. But even within his major, there are mixed feelings about the technology. “On one side, people think it will help improve technology and have a positive impact on the world. However, others also think it can cause job loss and aid in students cheating,” he said. Robison, though she supports the creation of the platform, has similar concerns. “I think that without proper regulation, AI could cause people to become too reliant on chatbots and too trusting of their outputs,” she said. “It is extremely common that platforms like ChatGPT, Claude, etc. spit out misinformation, incorrect answers, or just bad advice.”

Margaret Wallace, an associate professor at Boston University, has seen firsthand how AI has evolved during her time in the video game industry. “In my courses, I integrate lessons, lectures, readings, and hands-on exercises focused on GenAI. We demystify what AI actually is, explore specific use cases and platforms, and consider the ethical and social implications of these innovations,” she said. Wallace allows her students to experiment with GenAI for creative and analytical projects, but emphasizes “the importance of students developing their own expertise rather than relying on AI to do the thinking for them. Ultimately, we all need to examine how GenAI will impact our work and lives, and whether we can responsibly leverage it as an assistive, co-intelligent, or even transformational tool for the betterment of humanity.”

“We recognize that artificial intelligence is a transformative and disruptive technology,” said Riley, the Boston University spokesperson. “These initiatives are designed to support and elevate human creativity, judgment, and innovation, not replace them.”

But it was human creativity that led Quinn to come to Boston University in the first place. “I’d like to say I pride myself on going to a pretty prestigious school. A lot of smart people have studied [here], and a lot of beautiful artists have come out of the university,” they said. “I’d like to say that I come from a university where all the accomplishments have come from human brains.”

Julie Huynh

Julie Huynh is a student and writer at Boston College.