Oregon State University chip breakthrough cuts AI energy use

Author: Chris McGinness

AI is exploding, and so is its energy use. OSU engineers have designed a new chip to curb some of that power demand.

CORVALLIS, Ore. - The future of artificial intelligence is bright, but it is also energy-intensive. With AI's rapid advancement, electricity consumption by data centers is expected to more than double over the next five years, according to the International Energy Agency (IEA). A significant driver of that increase? The growing demand for AI computing power.

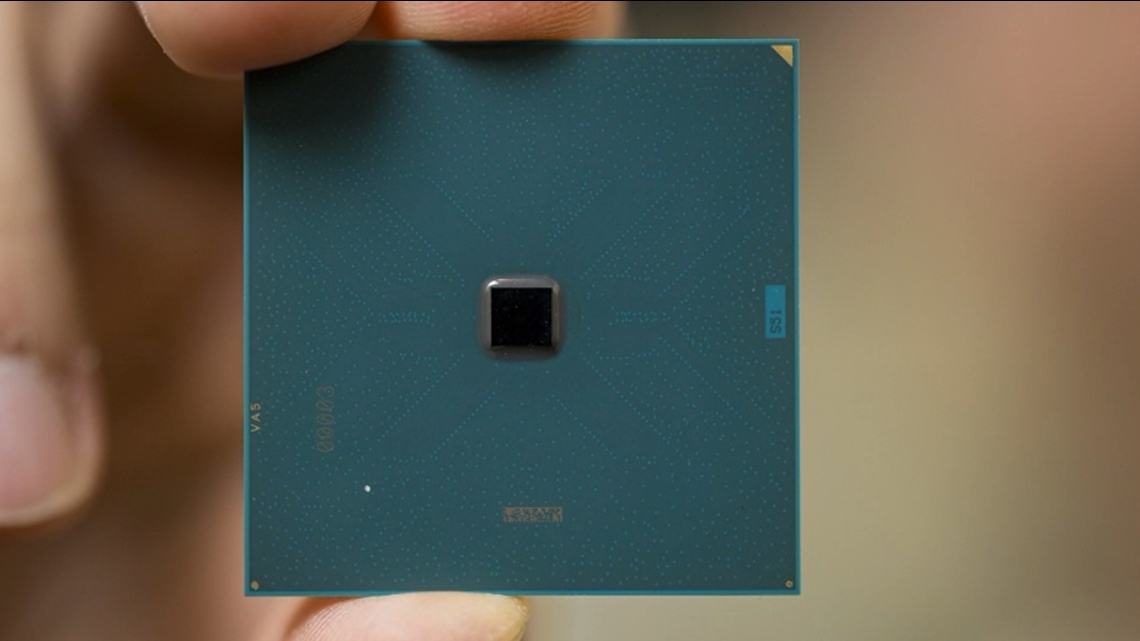

But researchers at Oregon State University may have a promising solution. Engineers at OSU’s College of Engineering have developed a new computer chip that roughly halves the power needed to move data between chips in data centers, one major contributor to AI’s energy use. It could mark a big step toward more sustainable AI systems.

"The amount of communication that we are talking about is of the order of hundreds of trillions of bits going around in a data center to do something," said Tejasvi Anand, associate professor of integrated electronics at OSU.

Anand and doctoral student Ramin Javadisafdar have been working to make that massive data transfer more efficient, and their new chip does just that. Conventional transceivers consume large amounts of power shuttling data between processors in data centers; the OSU design instead applies artificial intelligence principles to correct transmission errors more efficiently.

“We are implementing some AI principles in the chip itself to recognize these errors and clean them up in a much smarter and more efficient way,” Javadisafdar said.

The impact could be far-reaching. By Anand’s estimate, the power needed to support AI workloads by 2030 could reach 35 gigawatts. According to the IEA, "Data center electricity consumption is set to more than double to around 945 TWh by 2030. This is slightly more than Japan’s total electricity consumption today."
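The two figures above use different units: Anand's estimate is a power level (gigawatts), while the IEA figure is energy used over a year (terawatt-hours). As a rough back-of-the-envelope comparison, assuming purely for illustration that a 35 GW load ran continuously for a full 8,760-hour year:

$$
E = P \times t = 35\ \text{GW} \times 8{,}760\ \text{h} = 306{,}600\ \text{GWh} \approx 307\ \text{TWh per year}
$$

$$
\frac{307\ \text{TWh}}{945\ \text{TWh}} \approx 0.32
$$

That would be roughly a third of the IEA's projected total for all data centers. The two estimates come from different sources and cover different scopes (AI workloads versus all data center use), so the comparison is only indicative.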

Even a simple AI query, like asking ChatGPT about its energy usage, can consume 10 to 15 times as much electricity as a basic Google search. The new OSU chip offers a way to tackle this energy burden at the infrastructure level, especially as large language models grow more complex.

Traditionally, data is broken into small pieces, routed across chips in massive data centers, and reassembled at the destination. Each of these steps requires power. The OSU team’s breakthrough bypasses some of that process entirely.

“We are able to basically recover the data or communicate the data from point A to point B in less than half the power than what was required in some of the previous solutions that exist in the industry today,” said Anand.

While it could take a few years for the tech industry to adopt these chip designs at scale, Anand and Javadisafdar are already developing advanced versions of the chip with even greater efficiency.

At the same time, Oregon lawmakers are grappling with the power demands of the data center boom. House Bill 3564, which has passed the Oregon House and awaits a Senate vote, proposes the creation of a separate ratepayer class specifically for high-energy users like data centers. The goal is to ensure that electricity costs are distributed equitably as the state's digital infrastructure expands.

Meanwhile, OSU’s innovation offers a glimpse of how smart engineering, and a little help from AI itself, might keep the AI revolution sustainable.

Chris McGinness is a meteorologist and reporter for KGW News.