Apple’s AI-driven Stem Splitter audio separation tech has hugely improved in a year

Imagine that you have a song file—drums, guitar, bass, vocals, piano—and you want to rebalance it, bringing the voice down just a touch in the mix.

Or you want to turn a Lyle Lovett country-rock jam into a slamming club banger, and all that's standing between you and the booty-shaking masses is a clean copy of Lovett's voice without all those instruments mucking things up.

Or you recorded a once-in-a-lifetime, Stevie Nicks-meets-Ann Wilson vocal performance into your voice notes app... but your dog was baying in the background, and your guitar was out of tune. Can you extract the magic and discard the rest?

Without access to the original recording project files or master tapes, jobs like these have always been difficult. Specialized tools or software could extract specific sounds, often the vocals, sometimes through crude techniques based around high- and low-passing the audio, but the results were never quite what one might wish. With the advent of machine learning, however, computers have gotten scarily good at reaching into dense audio mixes and surgically extracting complicated parts.

Apple's May 28 update to its flagship audio program, Logic Pro, shows just how far this tech has come—and how quickly it's advancing.

Put to the test

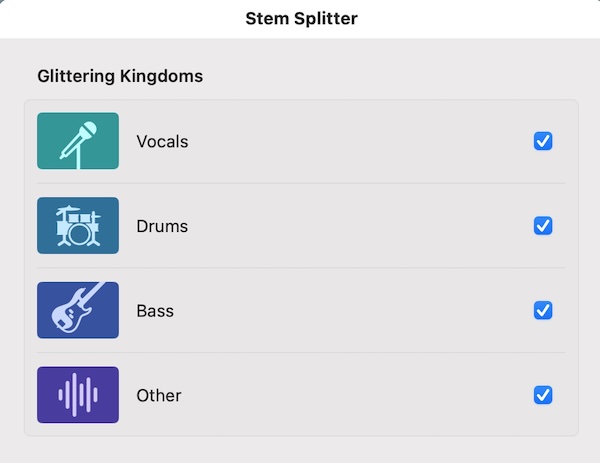

In 2024, Apple rolled out the Stem Splitter feature in Logic 11. Powered by AI tools and requiring Apple Silicon in order to work, Stem Splitter could "recover moments of inspiration from any audio file and separate nearly any mixed audio recording into four distinct parts: Drums, Bass, Vocals, and Other instruments, right on the device," Apple said. "With these tracks separated, it’s easy to apply effects, add new parts, or change the mix."

The original Stem Splitter in Logic.

And it worked, but it worked best when you kept all the stems together (that is, if you simply rebalanced the track or added effects to one of the stems). If you wanted to isolate a single stem, you had to contend with some fairly gnarly audio artifacts.