- Research

- Open access

Egyptian Journal of Radiology and Nuclear Medicine volume 56, Article number: 160 (2025)

Abstract

Introduction

Fractures pose a critical challenge in emergency settings, necessitating rapid and accurate diagnosis to prevent complications. Recent advances in artificial intelligence (AI) have opened new possibilities for fracture detection using X-ray imaging. This study aimed to evaluate the performance of SmartUrgence® by Milvue, an AI tool designed to identify fractures in emergency cases, comparing its results to computed tomography (CT) scans, the gold standard in fracture diagnosis.

Methods

Patients referred from the Orthopedic Department after clinical suspicion of fractures underwent both AI-assisted X-ray imaging and CT scans. The study compared AI-generated X-ray assessments with CT scan findings to measure the AI tool’s accuracy, sensitivity, and specificity.

Results

SmartUrgence® demonstrated strong diagnostic metrics: specificity of 95.45%, sensitivity of 91.13%, positive predictive value (PPV) of 93.39%, and negative predictive value (NPV) of 93.85%. Overall accuracy reached 93.67%, with a balanced accuracy of 93.25%. Precision (0.934) and recall (0.911) were also high, reflecting the AI’s ability to minimize false positives and negatives. However, the AI tool’s performance remained significantly different from that of CT scans (P < 0.001), particularly in detecting certain fracture types.

Conclusion

The findings suggest that AI has strong potential as an effective tool for fracture detection in emergency care, offering high sensitivity and specificity. Nonetheless, AI should complement, not replace, CT imaging. Variability in detection rates across fracture types indicates the need for further refinement.

Practical implication: Future research should focus on improving AI performance in complex cases and ensuring safe integration into clinical workflows. Enhancing collaboration between AI systems and medical professionals will be key to maximizing the benefits of AI-assisted diagnostics.

Introduction

Bone fractures are a prevalent and debilitating injury affecting people across all age groups and demographics. According to Court-Brown et al. [1], fractures account for a significant portion of musculoskeletal injuries, with the radius, ulna, phalanges, and clavicle being the bones most frequently affected. In Egypt, bone fractures pose a significant public health concern, with the leading causes including road traffic accidents, personal assaults, falls from heights, and gunshots [2].

Despite the increasing availability of advanced imaging techniques such as computed tomography (CT) and magnetic resonance imaging (MRI), conventional radiographs remain essential in the initial assessment of fracture patients. Radiographs are the primary imaging modality in traumatic emergencies due to their accessibility, speed, cost-effectiveness, and low radiation exposure, especially in resource-limited settings [3]. However, interpreting trauma radiographs is challenging and requires radiologic expertise [4].

Advances in medical technology have highlighted the potential of artificial intelligence (AI) in healthcare, particularly in detecting bone fractures through CT images [5]. AI has shown great promise in identifying fractures in emergencies, reducing human errors, and improving diagnosis accuracy [6, 7]. Despite these advantages, most AI algorithms are trained on cleaned, annotated datasets, which do not always reflect the complexities of real-life clinical practice. Nonetheless, various studies have demonstrated AI's potential in enhancing fracture diagnosis accuracy, especially for peripheral skeletal fractures, aiding radiologists and emergency physicians in making prompt and accurate diagnoses. Some AI algorithms have even received Food and Drug Administration approval and Conformité Européenne (CE) markings as class IIa medical devices [8].

The increasing use of AI tools in radiology has prompted national working groups to propose good practice guidelines [9]. Furthermore, the World Health Organization has published principles to ensure AI serves the public interest globally [10]. A recent meta-analysis comparing the diagnostic performance of AI and clinicians in fracture detection found no statistically significant differences, with pooled sensitivity and specificity for AI of 92% and 91%, respectively. However, the included studies did not assess AI's impact on radiologists' workflows [11].

AI offers a potential solution by providing an automated and standardized method for detecting fractures on X-ray images [12]. For instance, a study evaluating an AI system's efficacy in diagnosing ankle fractures involved data from 1,050 patients and achieved a sensitivity of 98.7% using three views [13]. SmartUrgence®, developed by the French AI company Milvue, has received Conformité Européenne certification as a class IIa medical device and is used in over ten European institutions, although not yet in the United Kingdom [14]. The AI model was trained on a multicentric dataset of over 600,000 chest and musculoskeletal radiographs to detect seven key pathologies, including fractures, by highlighting abnormalities on the radiograph with a bounding box and providing a binary certainty score. However, it is not certified for analyzing radiographs of the axial skeleton or abdominal radiographs.

We hypothesized that the AI algorithm can detect fractures with the same accuracy as CT imaging. Therefore, this study aimed to evaluate the accuracy of the AI tool SmartUrgence® (developed by Milvue) in detecting bone fractures in emergency cases using X-ray images. It also aimed to identify the tool's limitations and suggest areas for future research.

Methods

Research design

This is a diagnostic cross-sectional study.

Study setting

The study was conducted at the Conventional X-ray and CT units in the Radiology Department. Online remote access to SmartUrgence® (Milvue) was utilized.

Study population

The study included patients with a history of trauma referred to the X-ray unit from the orthopedic department at the emergency unit after clinical assessment for possible fractures. Inclusion and exclusion criteria were applied to select participants.

Inclusion criteria

- Adult patients of both genders, aged 19 years or older, by the World Health Organization’s (WHO) definition of adulthood.

- Patients presenting to the emergency department with clinically suspected fractures based on history and physical examination findings.

- Patients for whom radiographic imaging (CT and X-ray) was clinically indicated and performed as part of their diagnostic workup.

Exclusion criteria

After applying the inclusion criteria, patients were excluded from the study if any of the following conditions were met:

- Contraindications to undergoing CT or X-ray imaging, such as pregnancy.

- Presence of suspected axial skeletal fractures (e.g., spine or skull), which are not detectable by the current version of the SmartUrgence® AI model.

- CT images showing motion artifacts or distortion that compromised image quality.

- Unsatisfactory X-ray images (e.g., underexposed, misaligned, or incomplete views) as assessed by a radiologist or trained reviewer.

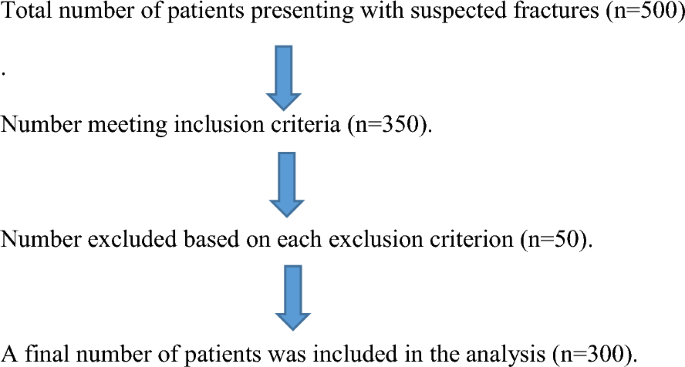

Study population selection flow

Sample unit and sampling technique

The minimum sample size was calculated using the formula by Dawson-Saunders [15], based on a hip fracture prevalence of 20.5%, an AI specificity of 96.9% for diagnosing hip fractures, and a margin of error of 5% [16], which gave 65 participants. The final sample comprised 300 participants. A non-probability convenience sampling method was used, including all patients who met the criteria and were referred to the X-ray unit.
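The source does not reproduce the sample size formula it used. As a rough illustration only, the sketch below assumes Buderer's formula for a specificity-based sample size, a common choice in diagnostic accuracy studies, with a 95% confidence level (z = 1.96). The function name and the choice of formula are assumptions, so the result need not match the figure reported above.

```python
import math


def sample_size_for_specificity(specificity: float, prevalence: float,
                                margin: float, z: float = 1.96) -> int:
    """Minimum total sample size under Buderer's formula (assumed here).

    The number of disease-negative subjects needed to estimate specificity
    within +/- margin is scaled up by the share of negatives in the sample.
    """
    negatives_needed = (z ** 2) * specificity * (1.0 - specificity) / margin ** 2
    return math.ceil(negatives_needed / (1.0 - prevalence))


if __name__ == "__main__":
    # Inputs quoted in the text: specificity 96.9%, prevalence 20.5%, margin 5%.
    print(sample_size_for_specificity(0.969, 0.205, 0.05))
```

With the quoted inputs this form gives 59, which is of the same order as, but not identical to, the 65 stated in the text; a different formula or rounding convention in the source could account for the gap.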

Technique and procedures

Baseline clinical and demographic data were collected for all participants. This included the patient's name, age, gender, and occupation, as well as a detailed clinical history relevant to the presenting complaint. The present history focused on the occurrence of trauma, specifying the mechanism of injury (e.g., fall, direct blow, motor vehicle accident), the force applied, and the anatomical site affected. Clinical symptoms such as pain, localized swelling, instability, limited range of motion, and functional disability were recorded to aid in clinical assessment and correlate with radiological findings. Additionally, past medical history of related significance, such as previous fractures, bone disorders, musculoskeletal conditions, and other comorbidities, was obtained to provide a comprehensive patient profile.

CT technique

CT was performed with a 16-slice scanner (Activion 16, Toshiba Medical Systems), scanning in the cranio-caudal direction at the fracture site with a slice thickness of 1 mm, 120 kVp, and 50–100 mAs. Images were processed on a workstation using multiplanar reconstruction (MPR).

X-ray technique

Radiographs were acquired using an Armonicus system (Villa Sistemi Medicali), model R225, with specific protocols for different body parts to ensure optimal density, contrast, and clear visualization of soft tissue margins and bony trabeculation.

Post-processing and image analysis (AI Tool)

The AI tool, SmartUrgence®, was linked to the picture archiving and communication system (PACS), making it accessible from all workstations. The AI model analyzed radiographs in DICOM format and was trained on a dataset of over 600,000 chest and musculoskeletal radiographs to detect key pathologies, including fractures, marking each finding with a bounding box and a binary certainty score. For this study, doubtful positive findings were considered negative.
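The rule that doubtful findings count as negative can be written down as a small mapping. This is a minimal sketch of that rule only; the enum and function names are hypothetical and do not reflect the vendor's software or its actual output format.

```python
from enum import Enum


class AiFinding(Enum):
    """Hypothetical labels for the three outcomes described in the text."""
    POSITIVE = "positive"
    DOUBTFUL = "doubtful"
    NEGATIVE = "negative"


def to_study_label(finding: AiFinding) -> bool:
    """Return True (fracture) only for a confident positive finding.

    Doubtful findings are treated as negative, as stated for this study.
    """
    return finding is AiFinding.POSITIVE


if __name__ == "__main__":
    for finding in AiFinding:
        label = "fracture" if to_study_label(finding) else "no fracture"
        print(f"{finding.value} -> {label}")
```

Collapsing the doubtful category this way favors specificity: a doubtful case that truly has a fracture is counted as a false negative.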

Data management and statistical analysis

Data were collected, coded, and entered into Microsoft Excel 2013 and analyzed using SPSS version 20.0. Statistical analyses included calculating predictive values (PPV, NPV), sensitivity, specificity, and total accuracy, as well as constructing Receiver Operating Characteristic (ROC) curves. Continuous data were expressed as mean ± standard deviation, and categorical data as percentages. T-tests and ANOVA were used for comparisons, with Chi-squared tests for qualitative data. The comparison in this study was conducted exclusively between the AI-generated results and those obtained from CT imaging.
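For readers unfamiliar with the Chi-squared test on qualitative data, the sketch below computes a Pearson chi-squared statistic for a 2x2 table of CT result against AI result using only the standard library. The counts are taken from the Results (124 CT-confirmed fractures with 11 false negatives; 176 CT-negative cases with 8 false positives). The source does not state which table or test options produced its reported P value, so this is an illustration under assumptions, not a reproduction of the SPSS analysis.

```python
import math


def chi_squared_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Pearson chi-squared test (no continuity correction) for [[a, b], [c, d]].

    Returns (statistic, p_value) with 1 degree of freedom.
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    # Shortcut form for a 2x2 table, equivalent to summing (O - E)^2 / E.
    statistic = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # With 1 degree of freedom the upper tail equals erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2.0))
    return statistic, p_value


if __name__ == "__main__":
    # Rows: CT fracture / CT no fracture. Columns: AI positive / AI negative.
    statistic, p_value = chi_squared_2x2(113, 11, 8, 168)
    print(f"chi2 = {statistic:.1f}, p = {p_value:.3g}")
```

A Pearson chi-squared test on such a table assesses whether the two classifications are associated; with these counts the statistic is about 226.6 and the P value is far below 0.001.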

Ethical approval

The study, conducted from July 2023 to July 2024, received ethical approval from the Suez Canal University Faculty of Medicine REC (No. 5399). Verbal informed consent was obtained, with confidentiality maintained. Participation was voluntary, with no impact on care for those who declined. The blocks used were safe and established. Data was used only for research, and participants were informed of their results.

The radiologists involved in this study brought a range of professional experience, enhancing the reliability of image interpretation. Dr. N. A. M. Abdellatif had 3 years of experience in radiology, while Dr. A. S. El-Rawy had 13 years of clinical practice. Dr. A. R. Abdellatif contributed with 14 years of experience, and Dr. M. A. Al-Shatouri had more than 22 years of expertise in the field.

Results

Demographic data

The study population was predominantly male, with 66% males and 34% females, and had a wide age distribution (Fig. 1). Participants ranged in age from 19 to 80 years, with a mean age of 39 years and a standard deviation of 14.7 years, indicating moderate variability. The reported interquartile range was 37 years. This spread of ages supports the applicability of the findings to a broad adult trauma population.

Clinical data

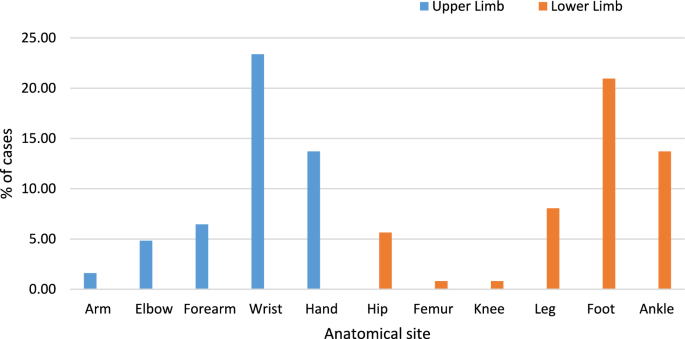

Figure 1 highlights a significant distribution pattern in the anatomical sites of fractures among trauma patients. Lower limb fractures are the most prevalent, accounting for 53.7% of cases, while upper limb fractures comprise 46.3%. Specifically, hand fractures are the most common among upper limb injuries, comprising 18% of cases. Conversely, ankle fractures dominate the lower limb injuries, representing 21.7% of all fractures, followed by foot fractures at 15.3% (Fig. 1). Arm fractures are the least frequent, accounting for only 1% of the cases.

Table 1 presents the distribution of all imaged cases, including both fractured and non-fractured instances. Lower limb cases accounted for 55.6% of the total, with the ankle being the most frequently imaged site (21.7%), followed by the foot (15.3%) and knee (8.3%). Upper limb cases represented 44.3% of the total, with the hand (18.0%) and wrist (14.3%) showing the highest frequencies. The arm (1.0%) and femur (1.3%) had the fewest cases overall. This distribution suggests that distal extremities and weight-bearing joints are more susceptible to injury and are therefore imaged more frequently (Table 1).

Figure 2 shows the distribution of confirmed fractures (124 cases) across different anatomical sites, with an equal number of fractures in the upper limb (62 cases) and lower limb (62 cases). In the upper limb, the wrist had the highest number of fractures (23.39%), followed by the hand (13.71%) and forearm (6.45%). Lower limb fractures were predominantly seen in the foot (20.97%) and ankle (13.71%), with fewer cases in the knee (0.81%) and femur (0.81%). This equal distribution suggests that both upper and lower limb injuries are common, likely due to different injury mechanisms—falls and direct impact for upper limb fractures, and weight-bearing stress and trauma for lower limb fractures (Fig. 2).

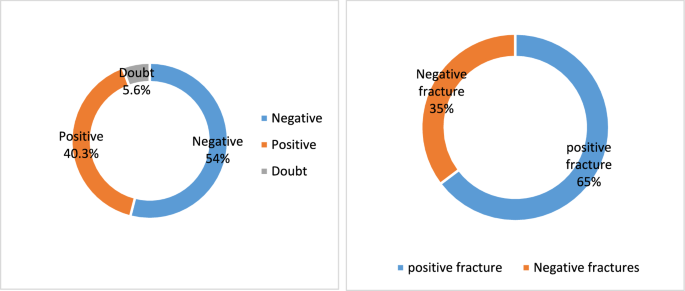

Table 2 compares the AI tool's fracture detection with the gold standard, CT. A total of 300 cases of suspected fracture were included, of which 124 (41.3%) had a fracture and 176 (58.7%) had no fracture on CT. The AI detected 121 positive fracture cases and flagged 5.7% of cases as doubtful. This uncertainty was likely due to poor image quality, subtle or complex fractures, overlapping anatomy, or limitations in the training dataset. When the AI tool flags a case as doubtful, radiologists review the X-ray image and compare the findings with CT imaging to confirm or exclude the suspected fracture (Table 2).

Table 3 illustrates the correlation between age and fracture occurrence as determined by CT scans, providing valuable insights into the age distribution of fractures. The study’s age range, from 19 to 80 years, underscores its inclusivity and the heterogeneity of the participants. The mean age of those with confirmed fractures is 39.8 years, while the median is 38 years. A mean and median in the late 30s suggest that fractures are more frequently observed in individuals at the younger end of the studied age range, possibly due to high levels of physical activity, trauma, or occupational hazards. The proximity of the mean (39.8) and median (38) suggests the age distribution is fairly symmetrical, with no significant outliers (e.g., extremely young or elderly individuals dominating the dataset) (Table 3).

The statistical analysis showcases the diagnostic efficacy of the artificial intelligence (AI) tool in detecting fractures among trauma patients, providing a thorough evaluation of the AI model's performance. Table 4 highlights key metrics, demonstrating the robustness of the AI tool. The AI tool achieved a specificity of 95.45%, with only 8 false positives, and a sensitivity of 91.13%, with 11 false negatives. The positive predictive value (PPV) and negative predictive value (NPV) were 93.39% and 93.85%, respectively (Table 4).

Additionally, Table 4 showed the overall accuracy of the AI tool was 93.67%, while the balanced accuracy, accounting for class imbalance, was 93.25%. With high precision and recall values (0.934 and 0.911, respectively), the AI model effectively minimizes false negatives and false positives, which is crucial for medical diagnostic tools. This ensures that actual fracture cases are not overlooked and unnecessary interventions or treatments for non-fracture cases are avoided. However, the analysis revealed a significant performance difference between the AI tool and CT scans, the gold standard for fracture diagnosis (P < 0.001) (Table 4).
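The reported metrics can be re-derived from the counts given in the text. The sketch below assumes the confusion matrix implied by 124 CT-confirmed fractures with 11 false negatives and 176 CT-negative cases with 8 false positives; the class and function names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    tp: int  # AI positive, CT fracture
    fp: int  # AI positive, CT no fracture
    fn: int  # AI negative, CT fracture
    tn: int  # AI negative, CT no fracture


def diagnostic_metrics(c: ConfusionCounts) -> dict[str, float]:
    """Standard diagnostic accuracy metrics as fractions between 0 and 1."""
    sensitivity = c.tp / (c.tp + c.fn)
    specificity = c.tn / (c.tn + c.fp)
    return {
        "sensitivity": sensitivity,          # also called recall
        "specificity": specificity,
        "ppv": c.tp / (c.tp + c.fp),         # also called precision
        "npv": c.tn / (c.tn + c.fn),
        "accuracy": (c.tp + c.tn) / (c.tp + c.fp + c.fn + c.tn),
        "balanced_accuracy": (sensitivity + specificity) / 2,
    }


# Counts derived from the text, not copied from Table 4 itself.
counts = ConfusionCounts(tp=124 - 11, fp=8, fn=11, tn=176 - 8)

if __name__ == "__main__":
    for name, value in diagnostic_metrics(counts).items():
        print(f"{name}: {value:.2%}")
```

These counts reproduce the quoted sensitivity, specificity, PPV, NPV, and accuracy, and they are consistent with the 121 AI-positive cases (113 + 8). The balanced accuracy computed this way is about 93.29%, marginally different from the 93.25% quoted; the source does not show how its figure was derived.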

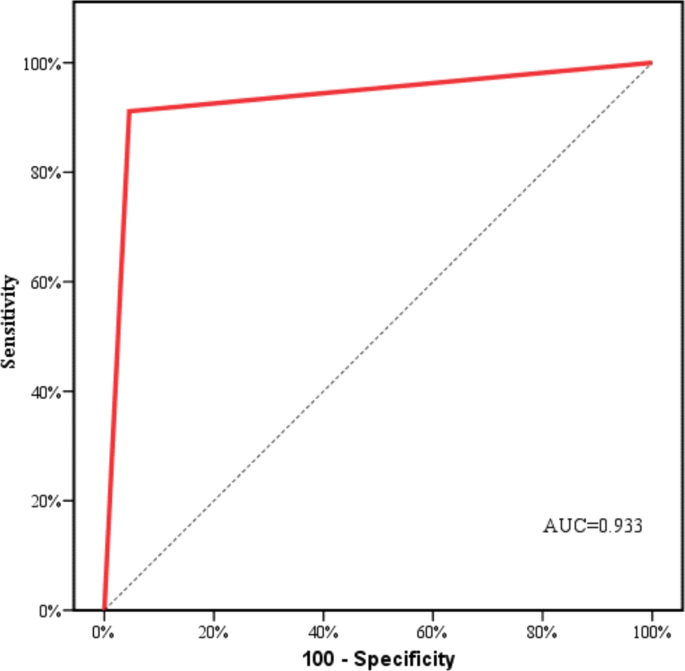

The ROC curve shown in Fig. 3 illustrates the AI's effectiveness in detecting fractures, with an impressive area under the curve (AUC) of 0.933, indicating its strong diagnostic performance. This high AUC value underscores the AI's capability to distinguish effectively between patients with fractures and those without, highlighting its reliability as a diagnostic tool. The significant AUC reflects the AI's sensitivity in identifying individuals with fractures correctly while also maintaining high specificity in accurately excluding those without fractures. This balance between sensitivity and specificity is crucial in clinical settings, ensuring precise diagnosis and minimizing false positives (Fig. 3).
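When a test yields a single binary decision, its ROC curve has one operating point, and the trapezoidal area under it equals the mean of sensitivity and specificity. The sketch below assumes the ROC curve was built this way from the binary AI output, which the source does not state explicitly, and uses counts derived from the text (113 of 124 fractures detected, 168 of 176 non-fractures correctly excluded).

```python
def single_point_auc(sensitivity: float, specificity: float) -> float:
    """Trapezoidal area under a ROC curve with one operating point."""
    # ROC vertices: (0, 0) -> (false positive rate, true positive rate) -> (1, 1)
    fpr, tpr = 1.0 - specificity, sensitivity
    points = [(0.0, 0.0), (fpr, tpr), (1.0, 1.0)]
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0
    return area


auc = single_point_auc(sensitivity=113 / 124, specificity=168 / 176)
print(f"{auc:.3f}")  # 0.933
```

Under that assumption the area matches the reported AUC of 0.933 and is numerically the same quantity as balanced accuracy.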

Table 5 and Fig. 4A and B present data on the AI tool's performance compared to CT scans in detecting fractures. The AI flagged 5.7% of all cases as uncertain, reflecting a cautious approach to diagnostic uncertainty. This cautiousness is critical for reducing the risk of false positives or incorrect diagnoses. Among these uncertain cases, 11 (65%) were subsequently confirmed by CT as free of fractures, while 6 cases (35%) were validated as fractures. These results underscore the importance of integrating human expertise and clinical judgment to verify AI-generated outputs, especially in cases of uncertainty (Table 5 and Fig. 4A and B).
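The percentages for the doubtful cases follow directly from the counts in the text. A short check, with illustrative variable names:

```python
def share(part: int, whole: int) -> float:
    """Return part / whole as a percentage."""
    return 100.0 * part / whole


total_cases = 300
doubtful_without_fracture = 11  # doubtful on AI, no fracture on CT
doubtful_with_fracture = 6      # doubtful on AI, fracture on CT
doubtful_total = doubtful_without_fracture + doubtful_with_fracture

print(f"doubtful: {doubtful_total}/{total_cases} = {share(doubtful_total, total_cases):.1f}%")
print(f"no fracture on CT: {share(doubtful_without_fracture, doubtful_total):.1f}%")
print(f"fracture on CT: {share(doubtful_with_fracture, doubtful_total):.1f}%")
```

The 17 doubtful cases are about 5.7% of the 300 studied, and the 11 and 6 cases split roughly 65% to 35%, as stated.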

Discussion

In emergency medicine, the swift and accurate detection of fractures is critical for timely treatment initiation, alleviating patient discomfort, and averting complications [17]. Physicians face challenges interpreting X-rays, especially in complex areas like the pelvis, where perspective distortions can lead to errors. Additionally, distinguishing fractures from other musculoskeletal issues poses diagnostic hurdles [18], underscoring the importance of reliable diagnostic tools.

The present study evaluated the diagnostic performance of the AI tool SmartUrgence® (by Milvue) in identifying bone fractures on X-ray images in emergency settings. It also highlights the tool’s limitations and outlines directions for future research and improvement. The AI shows promise as one such tool, enhancing fracture detection efficiency in emergency cases. Although CT remains the gold standard, the AI tool identified most fractures on radiographs. Studies highlight AI's potential to increase radiologists' efficiency and diagnostic confidence and to inform treatment decisions [19]. In this context, AI tools have gained attention for their potential to assist in fracture diagnosis and enhance radiological workflow. However, AI should augment rather than replace CT scans, as its sensitivity and specificity vary across studies and fracture types [11].

The study demonstrated that the AI tool SmartUrgence® (Milvue) achieved high diagnostic accuracy in detecting fractures, with a sensitivity of 91.13%, specificity of 95.45%, and overall accuracy of 93.67%. These results align with several prior investigations. For instance, Kuo et al. [11] reported pooled sensitivity and specificity of 92% and 91% for AI models in fracture detection, while Zhang et al. [20] found comparable figures in orthopedic settings. Similarly, Oka et al. [21] and Kraus et al. [22] reported AUC values exceeding 0.90 in diagnosing distal radius and scaphoid fractures, respectively, highlighting the consistent performance of AI across various fracture sites.

Other studies also confirm AI's potential in improving radiological efficiency. Guermazi et al. [23] demonstrated that AI significantly increased fracture detection sensitivity, particularly for non-obvious cases, and reduced interpretation time. Reichert et al. [24] found that AI-assisted systems served as effective tools for junior clinicians in emergency rooms, improving both sensitivity and confidence in diagnosis.

However, our findings differ from those of Udombuathong et al. [25], where AI showed lower accuracy (81.24%) for hip fracture detection compared to orthopedic surgeons (93.59%). Similarly, Inagaki et al. [26] observed that while AI could outperform humans in detecting sacral fractures, its performance varied by anatomical site. These discrepancies can be attributed to multiple factors, including differences in dataset composition, image quality, anatomical region studied, and whether single-view or multi-view radiographs were used. Moreover, the level of radiologist supervision, AI model architecture, and population demographics (e.g., pediatric vs. adult cases) can influence diagnostic outcomes [14, 27].

The novelty of this study is its application of SmartUrgence® in a real-world emergency setting within a high-volume Egyptian hospital using a diverse adult population. Unlike many prior studies that focused on retrospective image sets or controlled clinical data, our research evaluated AI performance under operational conditions and included the handling of “doubtful” cases, which constituted 5.7% of the sample. These were further verified through CT imaging, a step often missing in similar studies.

Another unique contribution of the present study is the comparison of AI results against gold-standard CT scans rather than radiologist interpretations alone, providing a robust benchmark for diagnostic accuracy. By using CT-confirmed findings, we minimized bias and strengthened the validity of the performance metrics.

The study also adds value by highlighting the limitations of AI tools. For example, certain fracture types, especially vertebral and skull fractures, were not accurately detected, consistent with findings by Bousson et al. [28], who observed poor AI performance in regions with overlapping anatomical structures such as ribs and spine. Moreover, the "black-box" nature of the AI model complicated interpretation, particularly for junior clinicians, as it provided only categorical outputs (positive, negative, or doubtful) without indicating fracture type or severity.

Differences between this study and others may also stem from the breadth of anatomical regions included. While some investigations (e.g., Lee et al. [29]) focused on single-region models like the femur or wrist, our analysis encompassed upper and lower limb fractures across multiple sites, allowing for a more comprehensive evaluation. Elsewhere, AI has been reported to aid in detecting nasal bone fractures with improved sensitivity and specificity compared to traditional methods [30] and to classify femur fractures using deep learning networks [31, 32].

In the case of pelvic fractures, AI demonstrates promising accuracy comparable to radiologists, supporting clinical decision-making in emergency departments [26]. However, while AI aids in fracture detection, challenges remain in validating its performance across diverse clinical scenarios and ensuring its integration into radiology workflows [27].

Overall, AI offers significant promise in fracture diagnosis, enhancing accuracy and efficiency in clinical settings. Continued research and development are essential to optimize AI's role alongside human expertise, ensuring safe and effective integration into routine medical practice.

Limitations of the study

The study was conducted in a single institution with a limited sample size, which may affect the generalizability of the findings. The lack of direct comparison with radiologist interpretations on uncertain cases also limits insight into real-world clinical utility. Notably, the AI was not trained to detect fractures of the skull and spine, limiting its use in critical trauma cases.

Conclusions

The study concludes that AI tools like SmartUrgence® demonstrate high diagnostic accuracy for fracture detection and can effectively support radiologists and complement CT imaging, especially in emergency settings. Although not as accurate as CT, the gold standard, the AI tool is valuable in high-pressure scenarios such as night shifts. The study emphasizes the need for further research to improve AI performance in complex cases, increase interpretability, and enable smooth integration into clinical workflows. Future work should evaluate AI's real-world impact on patient care and healthcare efficiency to ensure its safe and effective use in routine practice.

Data availability

No datasets were generated or analysed during the current study.

Abbreviations

- ANOVA: Analysis of variance

- AP: Anteroposterior

- AUC: Area under the curve

- AI: Artificial intelligence

- CT: Computed tomography

- CE: Conformité Européenne

- CNNs: Convolutional neural networks

- DCNN: Deep convolutional neural networks

- DICOM: Digital imaging and communications in medicine

- DHES: Distal humeral epiphyseal separation

- FRCR: Fellow of the Royal College of Radiologists

- FIF: Femoral intertrochanteric fractures

- IT: Information technology

- LHC: Lateral humeral condyle

- ML: Machine learning

- MRI: Magnetic resonance imaging

- MDCT: Multidetector computed tomography

- MPR: Multiplanar reconstruction

- MSK: Musculoskeletal

- NPV: Negative predictive value

- PACS: Picture archiving and communication system

- PPV: Positive predictive value

- REC: Research ethics committee

References

1. Court-Brown CM, Bugler KE, Clement ND, Duckworth AD, McQueen MM (2012) The epidemiology of open fractures in adults. A 15-year review. Injury 43(6):891–897. https://doi.org/10.1016/j.injury.2011.12.007

2. Halawa EF, Barakat A, Rizk HI, Moawad EM (2015) Epidemiology of non-fatal injuries among Egyptian children: a community-based cross-sectional survey. BMC Public Health 15(1):1248. https://doi.org/10.1186/s12889-015-2613-5

3. Canoni-Meynet L, Verdot P, Danner A, Calame P, Aubry S (2022) Added value of an artificial intelligence solution for fracture detection in the radiologist’s daily trauma emergencies workflow. Diagn Interv Imaging 103(12):594–600. https://doi.org/10.1016/j.diii.2022.06.004

4. Dupuis M, Delbos L, Veil R, Adamsbaum C (2022) External validation of a commercially available deep learning algorithm for fracture detection in children. Diagn Interv Imaging 103(3):151–159. https://doi.org/10.1016/j.diii.2021.10.007

5. van den Wittenboer GJ, van der Kolk BY, Nijholt IM, Langius-Wiffen E, van Dijk RA, van Hasselt BA, Podlogar M, van den Brink WA, Bouma GJ, Schep NW, Maas M (2024) Diagnostic accuracy of an artificial intelligence algorithm versus radiologists for fracture detection on cervical spine CT. Eur Radiol 34(8):5041–5048

6. Leite CdC (2019) Artificial intelligence, radiology, precision medicine, and personalized medicine. 52(VII-VIII): SciELO Brasil

7. Kutbi M (2024) Artificial intelligence-based applications for bone fracture detection using medical images: a systematic review. Diagnostics 14(17):1879

8. Muehlematter UJ, Daniore P, Vokinger KN (2021) Approval of artificial intelligence and machine learning-based medical devices in the USA and Europe (2015–20): a comparative analysis. Lancet Digit Health 3(3):e195–e203. https://doi.org/10.1016/S2589-7500(20)30292-2

9. Hussain F, Cooper A, Carson-Stevens A, Donaldson L, Hibbert P, Hughes T, Edwards A (2019) Diagnostic error in the emergency department: learning from national patient safety incident report analysis. BMC Emerg Med 19(1):77. https://doi.org/10.1186/s12873-019-0289-3

10. Cihon P, Schuett J, Baum SD (2021) Corporate governance of artificial intelligence in the public interest. Information 12(7):275

11. Kuo RY, Harrison C, Curran TA, Jones B, Freethy A, Cussons D, Stewart M, Collins GS, Furniss D (2022) Artificial intelligence in fracture detection: a systematic review and meta-analysis. Radiology 304(1):50–62. https://doi.org/10.1148/radiol.211785

12. Xie Y, Li X, Chen F, Wen R, Jing Y, Liu C, Wang J (2024) Artificial intelligence diagnostic model for multi-site fracture X-ray images of extremities based on deep convolutional neural networks. Quant Imaging Med Surg 14(2):1930–1943. https://doi.org/10.21037/qims-23-878

13. Ashkani-Esfahani S, Yazdi RM, Bhimani R, Kerkhoffs GM, Maas M, DiGiovanni CW, Lubberts B, Guss D (2022) Detection of ankle fractures using deep learning algorithms. Foot Ankle Surg 28(8):1259–1265. https://doi.org/10.1016/j.fas.2022.05.005

14. Shelmerdine SC, Martin H, Shirodkar K, Shamshuddin S, Weir-McCall JR (2022) Can artificial intelligence pass the fellowship of the Royal College of Radiologists examination? Multi-reader diagnostic accuracy study. BMJ 21:379

15. Dawson-Saunders B (2004) Basic and Clinical Biostatistics. ALANGE medical book, 42–161

16. Sato Y, Takegami Y, Asamoto T, Ono Y, Hidetoshi T, Goto R, Kitamura A, Honda S (2021) Artificial intelligence improves the accuracy of residents in the diagnosis of hip fractures: a multicenter study. BMC Musculoskelet Disord 22(1):407. https://doi.org/10.1186/s12891-021-04260-2

17. Pinto A, Berritto D, Russo A, Riccitiello F, Caruso M, Belfiore MP, Papapietro VR, Carotti M, Pinto F, Giovagnoni A, Romano L (2018) Traumatic fractures in adults: missed diagnosis on plain radiographs in the emergency department. Acta Biomed 89(1-S):111–123. https://doi.org/10.23750/abm.v89i1-S.7015

18. Moseley M, Rivera-Diaz Z, Fein DM (2022) Ankle injuries. Pediatr Rev. https://doi.org/10.1542/pir.2021-004992

19. Sharma S (2023) Artificial intelligence for fracture diagnosis in orthopedic X-rays: current developments and future potential. SICOT J 9:21. https://doi.org/10.1051/sicotj/2023018

20. Zhang X, Yang Y, Shen YW, Zhang KR, Jiang ZK, Ma LT, Ding C, Wang BY, Meng Y, Liu H (2022) Diagnostic accuracy and potential covariates of artificial intelligence for diagnosing orthopedic fractures: a systematic literature review and meta-analysis. Eur Radiol 32(10):7196–7216

21. Oka K, Shiode R, Yoshii Y, Tanaka H, Iwahashi T, Murase T (2021) Artificial intelligence to diagnosis distal radius fracture using biplane plain X-rays. J Orthop Surg Res 16(1):694. https://doi.org/10.1186/s13018-021-02845-0

22. Kraus M, Anteby R, Konen E, Eshed I, Klang E (2024) Artificial intelligence for X-ray scaphoid fracture detection: a systematic review and diagnostic test accuracy meta-analysis. Eur Radiol 34(7):4341–4351. https://doi.org/10.1007/s00330-023-10473-x

23. Guermazi A, Tannoury C, Kompel AJ, Murakami AM, Ducarouge A, Gillibert A, Li X, Tournier A, Lahoud Y, Jarraya M, Lacave E (2022) Improving radiographic fracture recognition performance and efficiency using artificial intelligence. Radiology 302(3):627–636. https://doi.org/10.1148/radiol.210937

24. Reichert G, Bellamine A, Fontaine M, Naipeanu B, Altar A, Mejean E, Javaud N, Siauve N (2021) How can a deep learning algorithm improve fracture detection on X-rays in the emergency room? J Imaging 7(7):105

25. Udombuathong P, Srisawasdi R, Kesornsukhon W, Ratanasanya S (2022) Application of artificial intelligence to assist hip fracture diagnosis using plain radiographs. J Southeast Asian Med Res 6:e0111–e0111

26. Inagaki N, Nakata N, Ichimori S, Udaka J, Mandai A, Saito M (2022) Detection of sacral fractures on radiographs using artificial intelligence. JBJS Open Access 7(3):e22

27. Langerhuizen DW, Janssen SJ, Mallee WH, Van Den Bekerom MP, Ring D, Kerkhoffs GM, Jaarsma RL, Doornberg JN (2019) What are the applications and limitations of artificial intelligence for fracture detection and classification in orthopedic trauma imaging? A systematic review. Clin Orthop Relat Res 477(11):2482–2491

28. Bousson V, Benoist N, Guetat P, Attané G, Salvat C, Perronne L (2023) Application of artificial intelligence to imaging interpretations in the musculoskeletal area: where are we? Where are we going? Joint Bone Spine 90(1):105493

29. Lee C, Jang J, Lee S, Kim YS, Jo HJ, Kim Y (2020) Classification of femur fracture in pelvic X-ray images using meta-learned deep neural network. Sci Rep 10(1):13694. https://doi.org/10.1038/s41598-020-70660-4

30. Yang C, Yang L, Gao GD, Zong HQ, Gao D (2023) Assessment of artificial intelligence-aided reading in the detection of nasal bone fractures. Technol Health Care 31(3):1017–1025. https://doi.org/10.3233/THC-220501

31. Ahmed Z, Mohamed K, Zeeshan S, Dong X (2020) Artificial intelligence with multi-functional machine learning platform development for better healthcare and precision medicine. Database (Oxford). https://doi.org/10.1093/database/baaa010

32. Rosa F, Buccicardi D, Romano A, Borda F, D’Auria MC, Gastaldo A (2023) Artificial intelligence and pelvic fracture diagnosis on X-rays: a preliminary study on performance, workflow integration, and radiologists’ feedback assessment in a spoke emergency hospital. Eur J Radiol Open 11:100504. https://doi.org/10.1016/j.ejro.2023.100504

Ethics declarations

Competing Interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Abdellatif, N., El-Rawy, A., Abdellatif, A. et al. Assessment of artificial intelligence-aided X-ray in diagnosis of bone fractures in emergency setting. Egypt J Radiol Nucl Med 56, 160 (2025). https://doi.org/10.1186/s43055-025-01580-4

DOI: https://doi.org/10.1186/s43055-025-01580-4